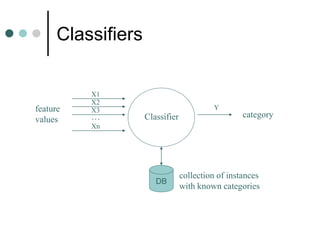

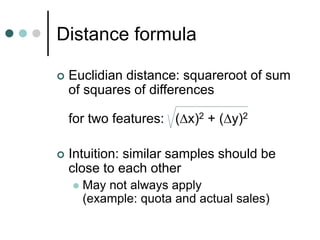

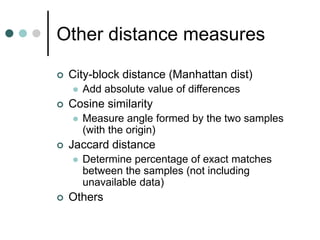

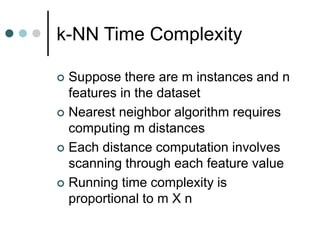

This document discusses supervised learning and the k-nearest neighbors (kNN) classification algorithm. kNN finds the k training instances nearest to a new instance and predicts the new instance's class from the classes of those neighbors, typically by majority vote. The document covers how kNN handles numeric and non-numeric data, different distance measures, data preprocessing, variations of kNN, and its time complexity of O(mn) per prediction for m training instances and n features.
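
The prediction step described above can be sketched in a few lines of Python. This is a minimal illustration, not the document's own implementation: it assumes purely numeric features, Euclidean distance, and an unweighted majority vote, and the names `euclidean` and `knn_predict` are hypothetical helpers introduced here.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length numeric feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_predict(train_X, train_y, query, k=3, distance=euclidean):
    """Predict the class of `query` by majority vote among its k nearest neighbors.

    Assumption: brute-force search. Each of the m training instances is
    compared to the query over n features, which gives the O(mn) distance
    cost per prediction (the sort adds O(m log m) on top in this sketch).
    """
    # Pair every training instance's distance to the query with its label.
    scored = [(distance(x, query), label) for x, label in zip(train_X, train_y)]
    # Sort by distance only, then keep the labels of the k closest instances.
    scored.sort(key=lambda pair: pair[0])
    nearest_labels = [label for _, label in scored[:k]]
    # Unweighted majority vote over the neighbors' classes.
    return Counter(nearest_labels).most_common(1)[0][0]
```

A call such as `knn_predict(train_X, train_y, query, k=3)` takes a list of feature tuples, a parallel list of class labels, and one query tuple. Passing a different `distance` function is one way to try other distance measures. Features on very different scales would usually need normalizing first, which this sketch leaves out.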