The document details a presentation on building a neural network library from scratch, led by Jozi Gila, a software developer. It covers neural network fundamentals, including function approximation, architecture, the role of neurons and layers, and the process of training using backpropagation and gradient descent. The presentation also provides a simple implementation in Python, emphasizing the importance of matrix operations for efficiency.
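The point about matrix operations can be illustrated with a small sketch. It assumes a sigmoid activation and NumPy; `sigmoid`, `forward_loop`, and `forward_matrix` are hypothetical names chosen for illustration and do not come from the presentation. Both functions compute the same layer output, but the second replaces the nested loops with a single matrix product.

```python
import numpy as np


def sigmoid(z):
    # Logistic activation (an assumption; the summary does not name one)
    return 1.0 / (1.0 + np.exp(-z))


def forward_loop(x, weights, bias):
    # One neuron at a time: weighted sum of the inputs, then activation
    out = np.zeros(weights.shape[1])
    for j in range(weights.shape[1]):
        total = bias[j]
        for i in range(weights.shape[0]):
            total += x[i] * weights[i, j]
        out[j] = sigmoid(total)
    return out


def forward_matrix(x, weights, bias):
    # The whole layer at once: one matrix product plus the bias vector
    return sigmoid(np.dot(x, weights) + bias)


rng = np.random.default_rng(0)
x = rng.random(4)             # 4 input features
weights = rng.random((4, 3))  # 4 inputs feeding 3 neurons
bias = rng.random(3)

# Both versions should agree up to floating-point rounding
same = np.allclose(forward_loop(x, weights, bias), forward_matrix(x, weights, bias))
print(same)
```

The loop version makes the per-neuron arithmetic explicit, while the matrix version hands the same arithmetic to NumPy's compiled routines, which is generally where the speed-up comes from.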

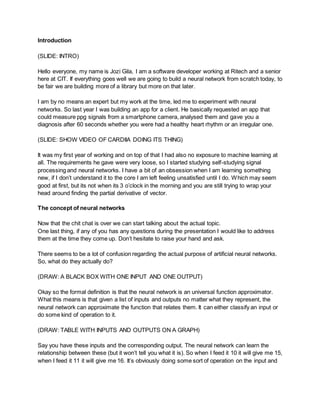

![Slide 6](https://image.slidesharecdn.com/letsbuildaneuralnetwork-180928122746/85/Lets-build-a-neural-network-6-320.jpg)

![Slide 7](https://image.slidesharecdn.com/letsbuildaneuralnetwork-180928122746/85/Lets-build-a-neural-network-7-320.jpg)

```python
import numpy as np

# SIG, dSIG, ERROR and dERROR (activation, loss, and their derivatives)
# are not shown in this excerpt and must be defined before use.


class Layer(object):
    # The class header and __init__ signature are cut off in the excerpt;
    # they are reconstructed here from the Layer(...) calls in NeuralNetwork.
    def __init__(self, inNodes, outNodes, alpha):
        self.inNodes = inNodes + 1  # one extra input for the bias
        self.outNodes = outNodes
        self.alpha = alpha  # learning rate
        # Matrix of initial weights, including the bias weights
        self.weights = np.round(np.random.rand(self.inNodes, self.outNodes) - 0.5, 2)

    def fwd(self, input):
        # Add the bias value to the input; atleast_2d lets a 1-D sample
        # be treated as a 1 x n row vector
        self.input = np.concatenate((np.atleast_2d(input), [[1]]), axis=1)
        # Sum the weighted inputs and normalize for each output
        weightedSum = np.dot(self.input, self.weights)
        self.output = SIG(weightedSum)
        return self.output

    def bck(self, dL1):
        # Derivative of L1 with respect to the sum
        dSIG_OUT = dSIG(self.output)
        dL1_SUM = np.multiply(dL1, dSIG_OUT)
        # Derivative of L1 with respect to the input (to be passed to L0)
        W_T = np.transpose(self.weights)
        dL1_L0 = np.dot(dL1_SUM, W_T)
        dL1_L0 = np.delete(dL1_L0, -1, 1)  # drop the bias column
        # Change the weights using the derivative of L1 with respect to W
        input_T = np.transpose(self.input)
        dL1_W = np.dot(input_T, dL1_SUM)
        self.weights -= self.alpha * dL1_W
        return dL1_L0


class NeuralNetwork(object):
    def __init__(self, features, hiddenNeurons, classes, hiddenLayers=1, alpha=2):
        # Create the first layer
        self.layerStack = [Layer(features, hiddenNeurons, alpha)]
        # Create the remaining hidden layers
        for x in range(hiddenLayers - 1):
            self.layerStack.append(Layer(hiddenNeurons, hiddenNeurons, alpha))
        # Create the output layer
        self.layerStack.append(Layer(hiddenNeurons, classes, alpha))
        # Print floats in fixed-point notation (affects NumPy globally)
        np.set_printoptions(suppress=True, formatter={'float_kind': '{:f}'.format})

    def eval(self, input):
        # Forward the signal through the layers
        lastInput = input
        for l in self.layerStack:
            lastInput = l.fwd(lastInput)
        return lastInput

    def train(self, input, target, iterations=10000):
        for i in range(iterations):
            for j in range(input.shape[0]):
                # For each input vector in the data, get the output
                inputVector = input[j]
                out = self.eval(inputVector)
                # Get the target value from the training set and calculate the error
                t = target[j]
                errorVector = ERROR(t, out)
                # Log the error in the output
                print(i, "\t", np.sum(errorVector))
                # Backpropagate the error through the layers, last layer first
                errorDerivative = dERROR(t, out)
                for l in reversed(self.layerStack):
                    errorDerivative = l.bck(errorDerivative)
```
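
The listing above calls `SIG`, `dSIG`, `ERROR`, and `dERROR` without showing them. Below is a minimal sketch of what they might look like, assuming a sigmoid activation and a squared-error loss; both choices are assumptions, since the excerpt does not define these functions. Because `bck` passes the layer's stored output to `dSIG`, the derivative is written here in terms of the activation's output rather than its input. The finite-difference check at the end is added only to confirm that each derivative is consistent with its function.

```python
import numpy as np


def SIG(z):
    # Assumed activation: logistic sigmoid
    return 1.0 / (1.0 + np.exp(-z))


def dSIG(out):
    # Sigmoid derivative expressed through its output: s'(z) = s(z) * (1 - s(z))
    return np.multiply(out, 1.0 - out)


def ERROR(t, out):
    # Assumed loss: element-wise squared error, halved so the derivative is out - t
    return 0.5 * np.square(out - t)


def dERROR(t, out):
    # Derivative of the assumed loss with respect to the network output
    return out - t


# Central finite differences as a sanity check on both derivatives
z = np.array([[-1.0, 0.0, 2.0]])
t = np.array([[0.0, 1.0, 1.0]])
eps = 1e-6

numeric_dSIG = (SIG(z + eps) - SIG(z - eps)) / (2 * eps)
sig_ok = np.allclose(dSIG(SIG(z)), numeric_dSIG, atol=1e-8)

out = SIG(z)
numeric_dERROR = (ERROR(t, out + eps) - ERROR(t, out - eps)) / (2 * eps)
err_ok = np.allclose(dERROR(t, out), numeric_dERROR, atol=1e-8)

print(sig_ok, err_ok)
```

With these definitions in scope, the `Layer` and `NeuralNetwork` classes have everything they reference; a different activation or loss would only need the matching derivative pair swapped in.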