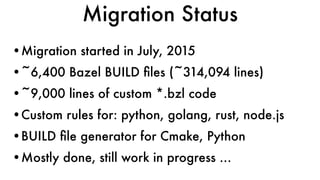

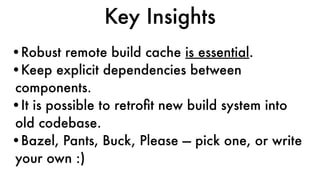

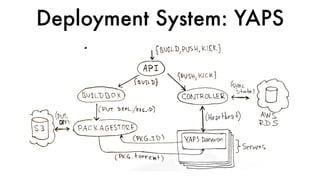

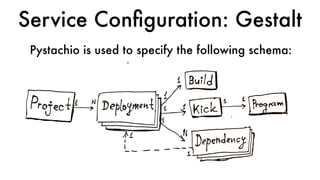

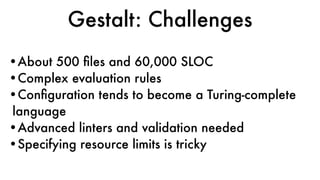

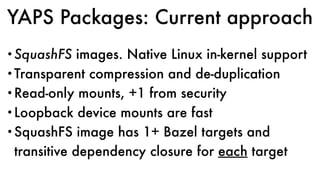

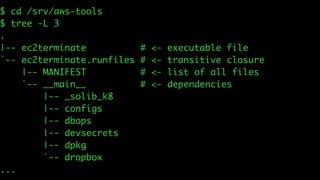

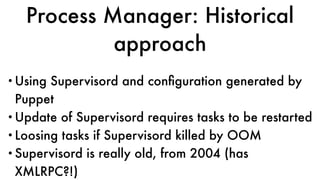

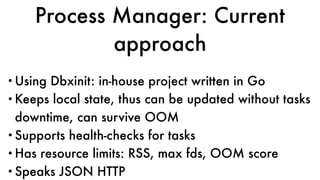

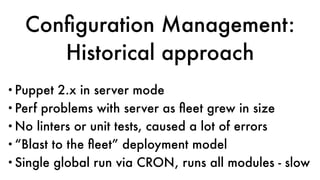

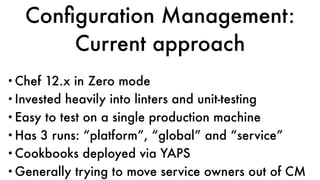

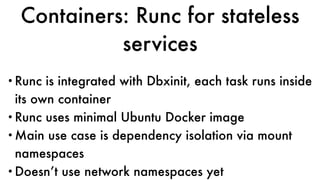

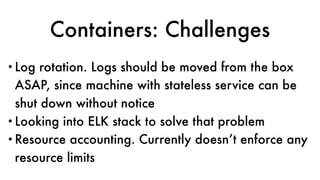

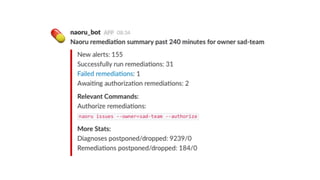

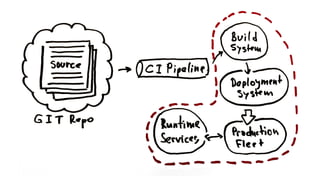

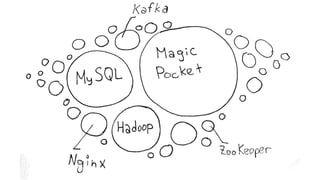

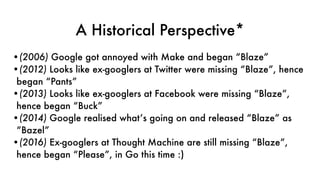

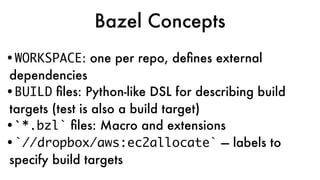

This talk describes how Dropbox builds, deploys, and runs production code, with a focus on its migration to a unified build system based on Bazel. It traces the evolution of deployment and service management at Dropbox, from older tools such as Puppet and Supervisord to the in-house dbxinit. The main lessons are the importance of robust remote build caching and explicit dependency management, along with the challenges encountered in containerization and service monitoring.

![External Dependencies (1)](https://image.slidesharecdn.com/leonidvasilyevuniteddev2017-170413140008/85/Leonid-Vasilyev-Building-deploying-and-running-production-code-at-Dropbox-17-320.jpg)

    native.new_http_archive(
        name = "six_archive",
        urls = [
            "http://pypi.python.org/.../six-1.10.0.tar.gz",
        ],
        sha256 = "…",
        strip_prefix = "six-1.10.0",
        build_file = str(Label("//third_party:six.BUILD")),
    )

![External Dependencies (2)](https://image.slidesharecdn.com/leonidvasilyevuniteddev2017-170413140008/85/Leonid-Vasilyev-Building-deploying-and-running-production-code-at-Dropbox-18-320.jpg)

    py_library(
        name = "six",
        srcs = ["six.py"],
        visibility = ["//visibility:public"],
    )

![External Dependencies (3)](https://image.slidesharecdn.com/leonidvasilyevuniteddev2017-170413140008/85/Leonid-Vasilyev-Building-deploying-and-running-production-code-at-Dropbox-19-320.jpg)

    py_library(
        name = "platform_benchmark",
        srcs = ["platform/benchmark.py"],
        deps = [
            ":client",
            ":platform",
            "@six_archive//:six",
        ],
    )
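The `sha256` and `strip_prefix` attributes on the first External Dependencies slide are what make a third-party archive an explicit, reproducible dependency. The sketch below illustrates the idea in plain Python. It is not Bazel's implementation, and the helper names (`verify_sha256`, `strip_prefix_members`, `make_demo_archive`) are hypothetical. A small in-memory tarball stands in for the real `six-1.10.0.tar.gz` so the example runs without network access.

```python
import hashlib
import io
import tarfile


def verify_sha256(data: bytes, expected_hex: str) -> None:
    """Raise ValueError unless the archive bytes match the pinned digest."""
    actual = hashlib.sha256(data).hexdigest()
    if actual != expected_hex:
        raise ValueError(
            f"checksum mismatch: expected {expected_hex}, got {actual}"
        )


def strip_prefix_members(data: bytes, prefix: str) -> dict:
    """Return {relative_path: file_bytes} with the leading 'prefix/' removed."""
    files = {}
    with tarfile.open(fileobj=io.BytesIO(data), mode="r:*") as tar:
        for member in tar.getmembers():
            if not member.isfile():
                continue
            if not member.name.startswith(prefix + "/"):
                raise ValueError(f"{member.name!r} is outside {prefix!r}")
            relative = member.name[len(prefix) + 1:]
            files[relative] = tar.extractfile(member).read()
    return files


def make_demo_archive() -> bytes:
    """Build a tiny gzip tarball laid out like six-1.10.0/six.py (demo only)."""
    payload = b"# stand-in for six.py\n"
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        info = tarfile.TarInfo(name="six-1.10.0/six.py")
        info.size = len(payload)
        tar.addfile(info, io.BytesIO(payload))
    return buf.getvalue()


if __name__ == "__main__":
    archive = make_demo_archive()
    pinned = hashlib.sha256(archive).hexdigest()  # a real pin is recorded once, by hand
    verify_sha256(archive, pinned)
    print(sorted(strip_prefix_members(archive, "six-1.10.0")))
```

After the prefix is stripped, `six.py` sits at the root of the external repository, which is why the `six.BUILD` file on the second slide can refer to it as `srcs = ["six.py"]`.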