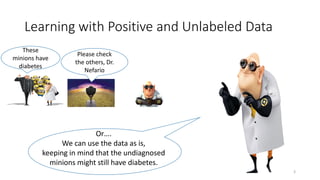

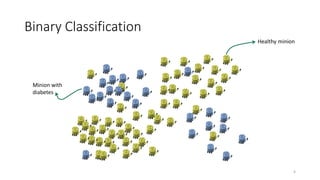

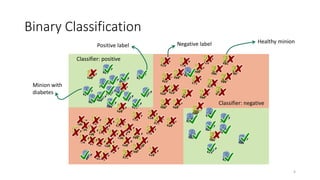

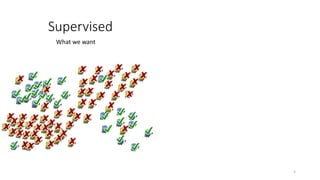

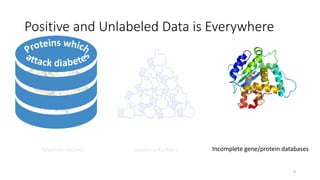

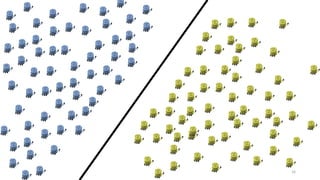

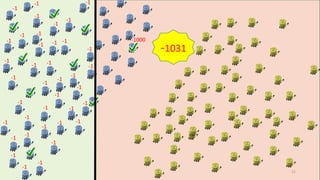

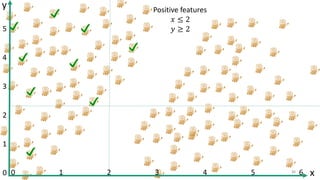

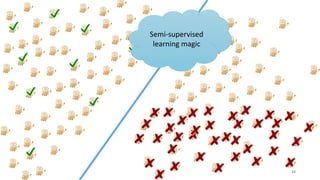

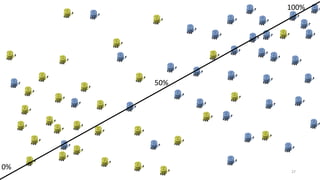

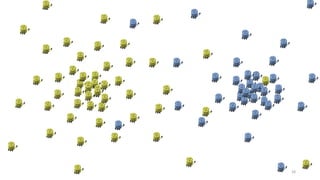

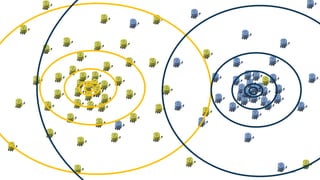

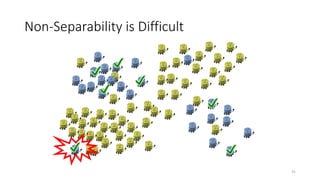

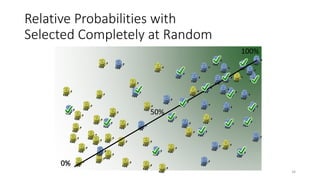

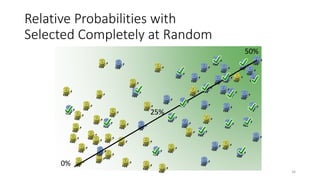

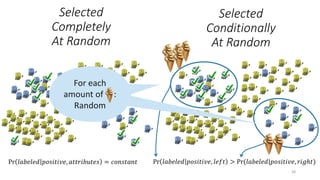

This document discusses learning from positive and unlabeled (PU) data, where only some of the positive examples carry a label and the unlabeled set contains both positives and negatives. It presents three cases of increasing difficulty: linearly separable data, where the unlabeled examples can be treated as negative; non-separable data in which the labeled examples are selected completely at random from the positives; and non-separable data in which the labeled examples are selected at random conditional on their attributes. For the linearly separable case, biased learning or two-step techniques can be used. For the completely-at-random case, the probability of being labeled is proportional to the probability of being positive, so relative probabilities must be considered. For the conditionally random case, the propensity score function must be known or estimated. In every case the goal is to make probabilistic predictions from positive and unlabeled data under the stated assumption.
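As a rough illustration of the two non-separable cases, the sketch below rescales the output of a classifier trained to separate labeled from unlabeled examples. It assumes such a classifier already exists and returns g(x) = P(labeled | x); the function names, the sample scores, and the choice to estimate the label frequency as the mean score over held-out labeled positives are illustrative assumptions, not something taken from the slides.

```python
def estimate_label_frequency(scores_on_labeled):
    """Estimate c = P(labeled | positive) as the mean of g(x) over
    held-out labeled positives (valid only under the completely-at-random
    assumption)."""
    if not scores_on_labeled:
        raise ValueError("need at least one labeled positive")
    return sum(scores_on_labeled) / len(scores_on_labeled)


def positive_probability(g_x, propensity):
    """Turn P(labeled | x) into P(positive | x) by dividing by the
    probability that a positive with these attributes gets labeled.
    The result is clipped to [0, 1] because estimates are noisy."""
    if propensity <= 0:
        raise ValueError("propensity must be positive")
    return min(1.0, max(0.0, g_x / propensity))


# Hypothetical classifier scores g(x) on held-out labeled positives.
labeled_scores = [0.30, 0.20, 0.25, 0.25]
c = estimate_label_frequency(labeled_scores)

# Completely-at-random case: one constant c for every example.
p_scar = positive_probability(0.10, c)

# Conditionally random case: the constant is replaced by a per-example
# propensity e(x), here a made-up value standing in for an estimate.
p_sar = positive_probability(0.10, 0.5)
```

The only difference between the two cases in this sketch is whether the divisor is a single constant or a function of the example's attributes, which is why the conditionally random case needs the propensity score function.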