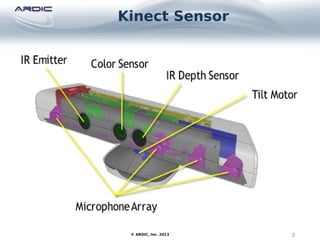

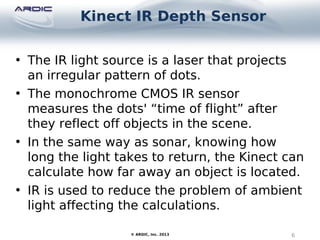

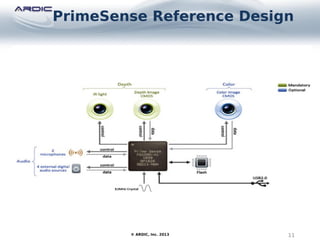

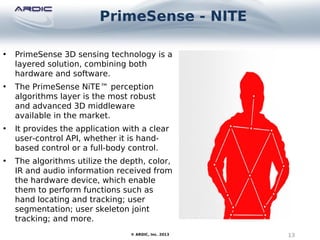

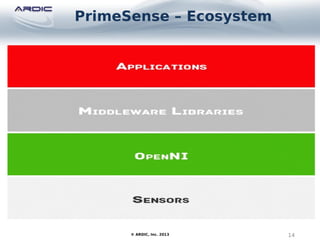

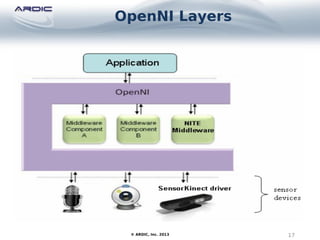

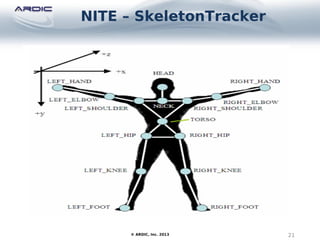

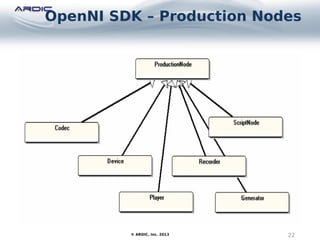

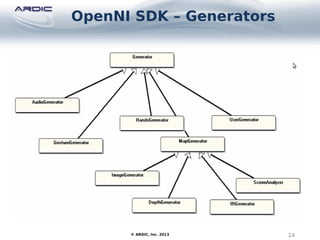

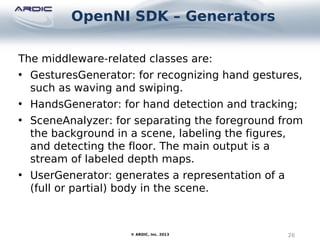

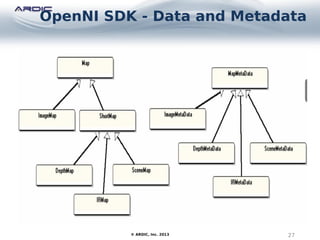

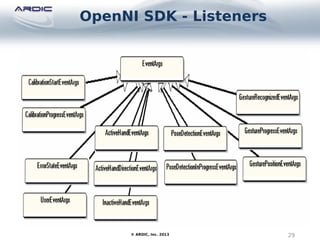

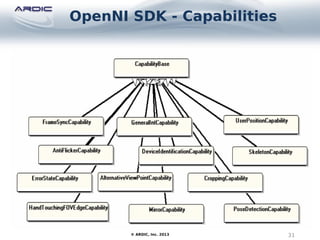

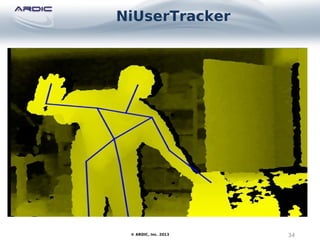

The document discusses Kinect and 3D motion-sensing technology. It introduces the Kinect sensor, the PrimeSense technology behind it, and the OpenNI framework and NITE middleware for building applications on depth-sensor data. It describes the Kinect's sensor components and how the device measures depth. It then surveys the software options for Kinect development, including OpenNI, OpenKinect, and Microsoft's Kinect SDK. Finally, it outlines the OpenNI architecture and its nodes, and the gesture and skeleton tracking that NITE provides.