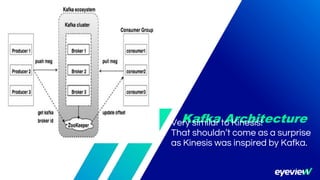

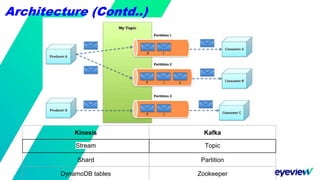

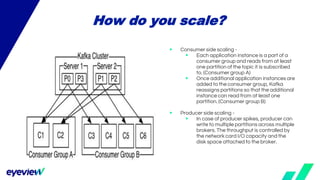

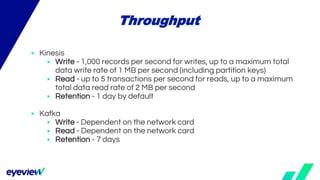

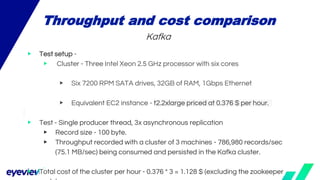

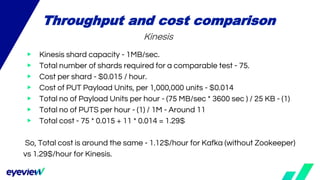

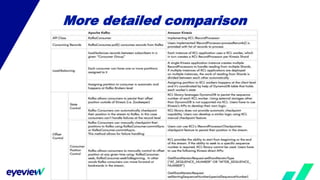

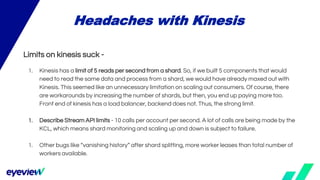

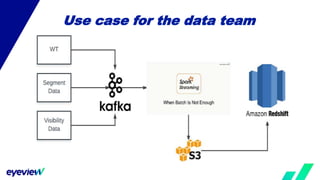

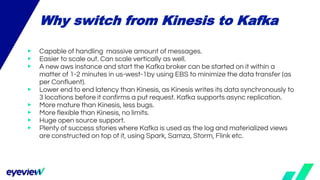

This document compares the architectures of Kafka and Kinesis. The two are broadly similar: in Kafka, brokers store messages in partitions and consumers subscribe to topics, while Kinesis divides each stream into shards that play the role of partitions. The document finds that Kafka delivers higher throughput at lower cost than Kinesis, mainly because Kinesis caps throughput per shard, so scaling a workload means provisioning, and paying for, more shards. It also describes the practical headaches of working within those limits and the associated management overhead. For these reasons, the document recommends switching from Kinesis to Kafka.
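To make the per-shard throughput limits concrete, the sketch below estimates how many Kinesis shards a workload needs. It assumes the commonly documented provisioned-mode quotas (1 MB/s and 1,000 records/s of writes per shard, 2 MB/s of reads per shard shared by all standard consumers) and that every consumer reads the whole stream. `shards_needed` is a hypothetical helper for illustration, not part of any AWS SDK, and the quota values should be checked against current AWS documentation.

```python
import math

# Assumed per-shard quotas for Kinesis Data Streams in provisioned mode.
# These are illustrative; verify against current AWS service quotas.
WRITE_MB_PER_SHARD = 1.0         # ingest: 1 MB/s per shard
WRITE_RECORDS_PER_SHARD = 1000   # ingest: 1,000 records/s per shard
READ_MB_PER_SHARD = 2.0          # egress: 2 MB/s per shard, shared by standard consumers


def shards_needed(write_mb_s: float, records_s: float, consumers: int) -> int:
    """Estimate the minimum shard count for a workload (hypothetical helper).

    Assumes each consumer reads the full stream via shared throughput
    (no enhanced fan-out), so total egress is write_mb_s * consumers.
    """
    by_bytes = math.ceil(write_mb_s / WRITE_MB_PER_SHARD)
    by_records = math.ceil(records_s / WRITE_RECORDS_PER_SHARD)
    by_reads = math.ceil(write_mb_s * consumers / READ_MB_PER_SHARD)
    # A stream always has at least one shard; the tightest limit wins.
    return max(1, by_bytes, by_records, by_reads)


if __name__ == "__main__":
    # 10 MB/s of writes, 5,000 records/s, read by 3 independent consumers.
    # Write bytes need 10 shards, records need 5, shared reads need 15.
    print(shards_needed(10, 5000, 3))
```

In this example the shared read quota, not the write rate, drives the shard count, which illustrates how adding consumers can force more shards in Kinesis. Kafka imposes no fixed per-partition byte quota by default; a partition's throughput is bounded by broker disk and network capacity instead.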