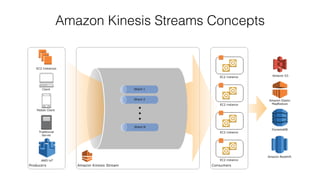

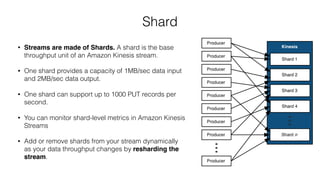

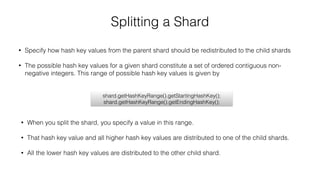

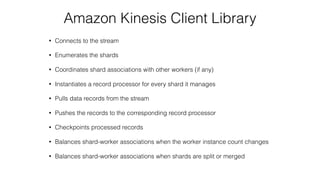

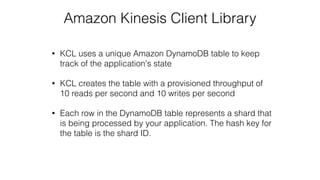

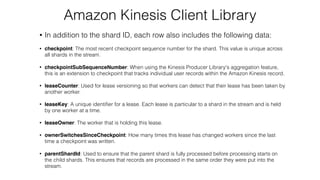

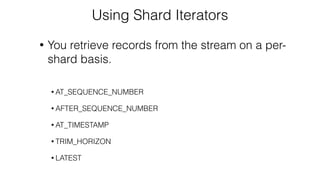

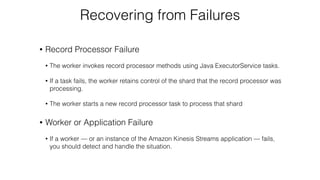

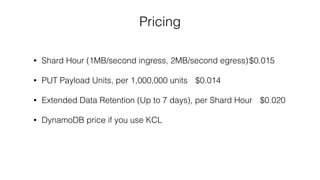

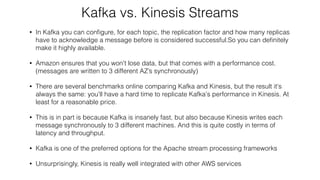

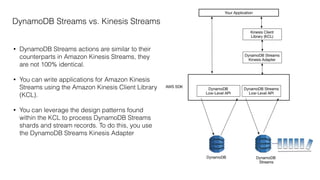

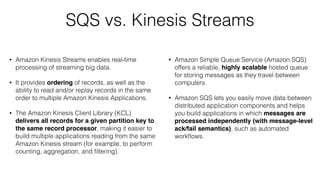

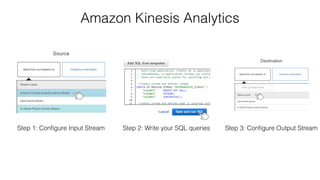

This document provides an overview of Amazon Kinesis and its components for streaming data. It describes Amazon Kinesis Streams, a service for processing real-time streaming data at large scale, and explains its key concepts: shards, data records, partition keys, sequence numbers, and resharding. It also covers the Amazon Kinesis Producer Library and the Amazon Kinesis Client Library, including how to handle failures and duplicate records. Amazon Kinesis Firehose and Kinesis Analytics are introduced for loading and analyzing streaming data. Finally, Kinesis is compared with DynamoDB Streams, SQS, and Apache Kafka (the last of which is not an AWS service).
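
To make the relationship between partition keys and shards concrete, here is a minimal sketch in Python. It relies on one documented behavior of Kinesis Streams: a record's partition key is hashed with MD5 into a 128-bit integer, and the record goes to the shard whose hash key range contains that integer. Everything else is an assumption for illustration: the function names (`even_shard_ranges`, `hash_key`, `shard_for`) are hypothetical, and the evenly split ranges are only one possible layout, since resharding can produce uneven ranges. The code does not call AWS.

```python
import hashlib

# MD5 yields a 128-bit digest, so hash keys fall in [0, 2**128 - 1].
HASH_SPACE = 2 ** 128


def even_shard_ranges(shard_count):
    """Split the hash key space into contiguous, roughly equal ranges.

    Hypothetical layout for illustration; a real stream may have
    uneven ranges after shards are split or merged.
    """
    size = HASH_SPACE // shard_count
    ranges = []
    for i in range(shard_count):
        start = i * size
        # The last range absorbs any remainder so the space is fully covered.
        end = HASH_SPACE - 1 if i == shard_count - 1 else (i + 1) * size - 1
        ranges.append((start, end))
    return ranges


def hash_key(partition_key):
    """Map a partition key to a 128-bit integer using MD5."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, "big")


def shard_for(partition_key, ranges):
    """Return the index of the range that contains the key's hash."""
    h = hash_key(partition_key)
    for index, (start, end) in enumerate(ranges):
        if start <= h <= end:
            return index
    raise ValueError("hash key outside every shard range")


if __name__ == "__main__":
    ranges = even_shard_ranges(4)
    for key in ("user-1", "user-2", "user-3"):
        print(key, "-> shard index", shard_for(key, ranges))
```

Because the mapping depends only on the partition key, records that share a key always land in the same shard, which is what preserves their relative order. A key with very high traffic can therefore overload a single shard, which is one reason to reshard a stream.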