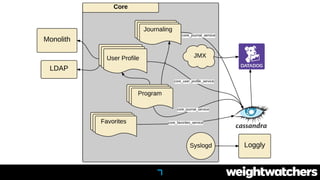

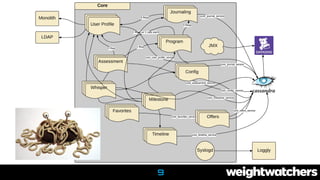

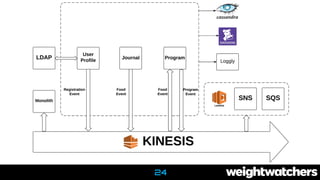

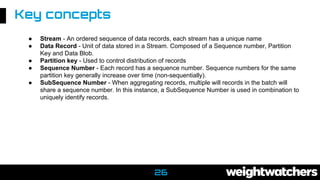

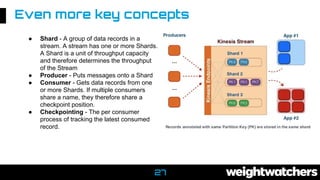

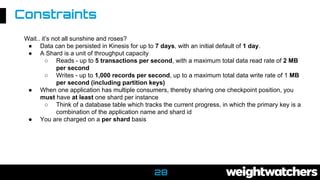

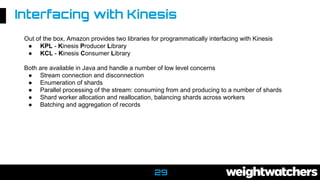

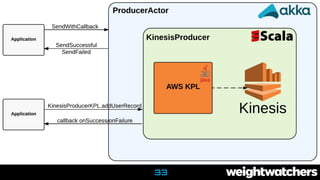

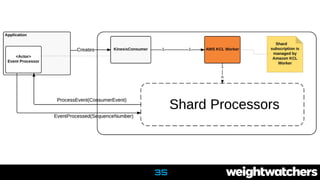

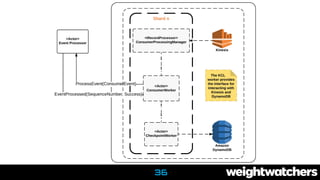

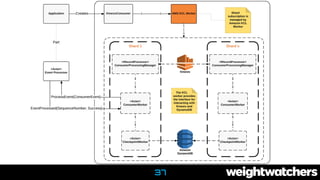

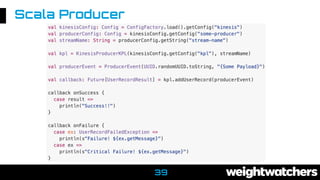

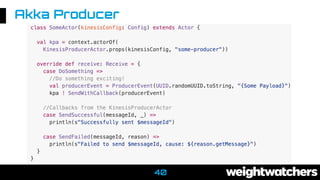

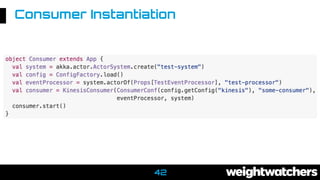

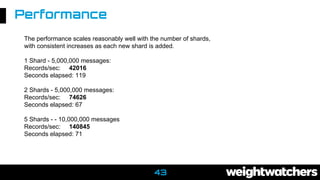

Mark Harrison presented on using Amazon Kinesis for event-driven microservice architectures. He discussed the limitations of traditional monolithic and microservice architectures and how Kinesis can help address tight coupling, high latency, and the lack of event broadcasting. He introduced the core Kinesis concepts of streams, shards, producers, and consumers, along with constraints such as throughput limits. Harrison then demonstrated a custom Scala/Akka client for Kinesis that provides asynchronous, non-blocking producers and consumers with features such as throttling and checkpointing. Performance tests showed that throughput scales linearly with additional shards. In closing, Harrison invited the audience to learn more about open source work and job opportunities at Weight Watchers.
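To make the shard and throughput-limit concepts concrete, here is a minimal sketch of how a stream's shard count might be estimated from expected traffic. It is not taken from the talk. The per-shard figures (1,000 records/s and about 1 MB/s for writes, about 2 MB/s for shared reads) are the commonly documented Kinesis limits and should be checked against current AWS quotas. The function name `shards_needed` and its parameters are hypothetical.

```python
import math

# Assumed per-shard limits (verify against current AWS quotas before relying on them).
WRITE_RECORDS_PER_SEC = 1_000      # max records written per shard per second
WRITE_BYTES_PER_SEC = 1_000_000    # ~1 MB/s write limit per shard (conservative rounding)
READ_BYTES_PER_SEC = 2_000_000     # ~2 MB/s read limit per shard, shared by all consumers


def shards_needed(records_per_sec: int, avg_record_bytes: int, consumers: int = 1) -> int:
    """Estimate the shard count that keeps traffic under each per-shard limit.

    Hypothetical helper: the stream needs enough shards to satisfy the
    tightest of three constraints (write count, write bytes, read bytes).
    """
    bytes_per_sec = records_per_sec * avg_record_bytes
    by_write_count = math.ceil(records_per_sec / WRITE_RECORDS_PER_SEC)
    by_write_bytes = math.ceil(bytes_per_sec / WRITE_BYTES_PER_SEC)
    # Every consumer application reads the full stream, so read volume multiplies.
    by_read_bytes = math.ceil(bytes_per_sec * consumers / READ_BYTES_PER_SEC)
    # A stream always has at least one shard.
    return max(1, by_write_count, by_write_bytes, by_read_bytes)


if __name__ == "__main__":
    print(shards_needed(5_000, 100))               # limited by record count
    print(shards_needed(2_000, 2_000))             # limited by write bytes
    print(shards_needed(1_000, 1_000, consumers=4))  # limited by read bytes
```

Because each limit applies per shard, aggregate capacity in this model grows in proportion to the shard count, which is consistent with the linear scaling reported in the performance tests.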