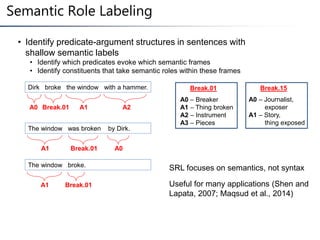

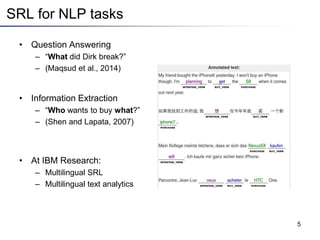

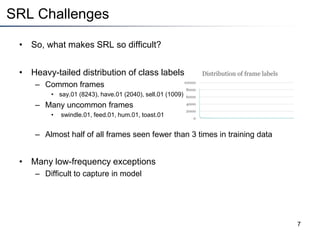

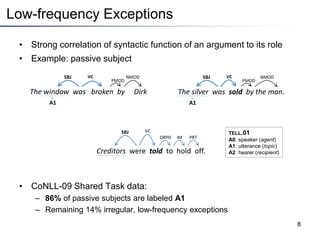

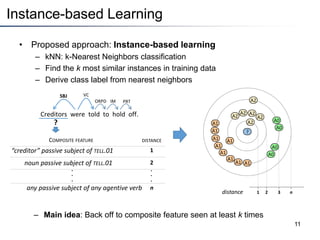

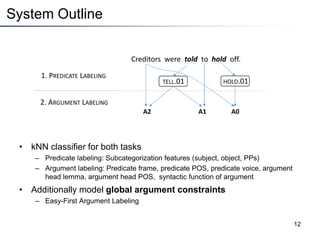

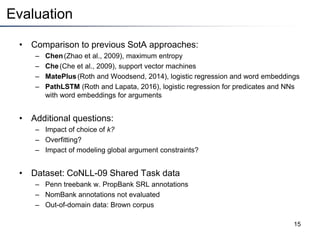

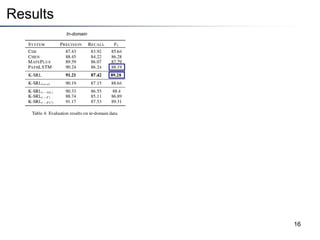

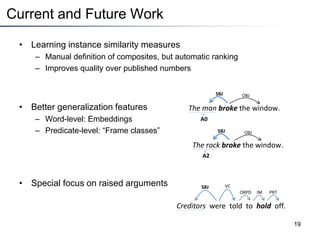

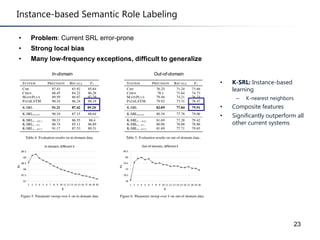

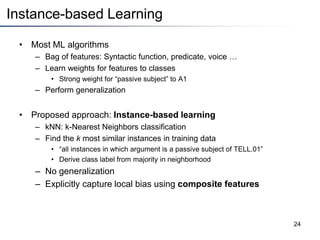

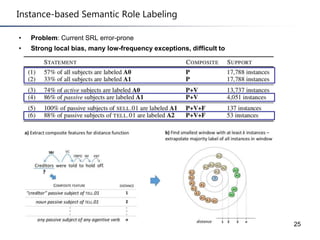

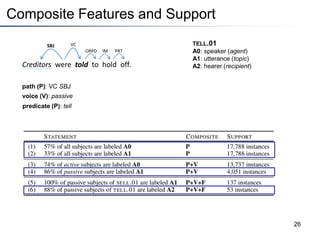

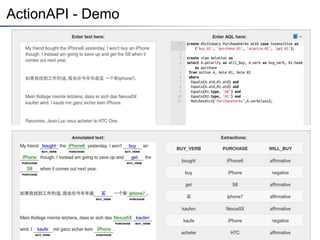

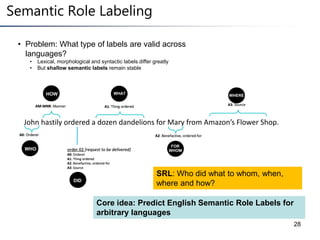

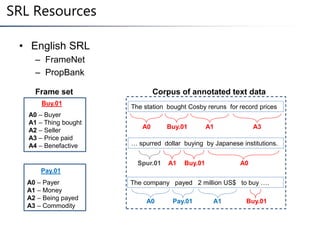

This document summarizes a research paper on instance-based learning for semantic role labeling (SRL). The paper presents a simple yet effective k-nearest-neighbors (kNN) approach built on composite features and shows that it outperforms previous SRL systems in both in-domain and out-of-domain evaluation. The approach also models global constraints on argument assignment. It addresses two SRL challenges, heavy-tailed label distributions and low-frequency exceptions, by explicitly representing local biases in its composite features.
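To make the instance-based idea concrete, here is a minimal sketch of kNN role labeling over composite features. It is not the paper's implementation. The atomic feature names (`pred`, `head`, `path`, `voice`), the particular conjunctions used as composite features, the overlap-count similarity, and the similarity-weighted vote are all assumptions chosen for illustration. The sketch shows how a conjunction such as predicate plus argument head can capture a local bias that a single atomic feature would miss.

```python
from collections import Counter
from typing import Dict, List, Set, Tuple

# Hypothetical representation: a candidate argument is a dict of atomic features.
Instance = Dict[str, str]


def composite_features(inst: Instance) -> Set[str]:
    """Build composite features by conjoining atomic features.

    The chosen conjunctions are illustrative assumptions, not the paper's feature set.
    """
    pred, head, path, voice = inst["pred"], inst["head"], inst["path"], inst["voice"]
    return {
        f"pred={pred}",
        f"head={head}",
        f"path={path}",
        f"pred+head={pred}|{head}",                   # lexicalized local bias
        f"pred+path+voice={pred}|{path}|{voice}",     # syntactic local bias
    }


def knn_label(query: Instance, memory: List[Tuple[Instance, str]], k: int = 3) -> str:
    """Label a query argument by a similarity-weighted vote of its k nearest stored instances.

    Similarity is the number of shared composite features (an assumed overlap metric).
    """
    q = composite_features(query)
    # Score every stored (instance, label) pair; sorted() is stable, so ties keep memory order.
    scored = sorted(
        ((len(q & composite_features(inst)), label) for inst, label in memory),
        key=lambda pair: pair[0],
        reverse=True,
    )
    votes: Counter = Counter()
    for sim, label in scored[:k]:
        votes[label] += sim  # closer neighbors contribute more weight
    return votes.most_common(1)[0][0]


# Hypothetical toy memory of labeled training arguments.
memory = [
    ({"pred": "give", "head": "John", "path": "NP>S<VP", "voice": "active"}, "A0"),
    ({"pred": "give", "head": "book", "path": "NP<VP", "voice": "active"}, "A1"),
    ({"pred": "give", "head": "Mary", "path": "NP<VP", "voice": "active"}, "A2"),
    ({"pred": "sell", "head": "car", "path": "NP<VP", "voice": "active"}, "A1"),
]
query = {"pred": "give", "head": "book", "path": "NP<VP", "voice": "passive"}
print(knn_label(query, memory))  # A1
```

In this toy run, the stored "give ... book" instance shares four composite features with the query, including the `pred+head` conjunction, so its label dominates the vote even though the query's voice differs. Keeping every training instance in memory, rather than compressing it into model weights, is what lets rare, low-frequency exceptions survive as exact matches.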