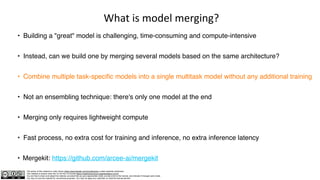

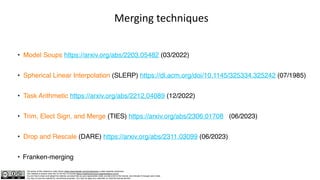

The document discusses various techniques for model merging, describing how to combine multiple models into a single multitask model without additional training, improving efficiency with lightweight compute. Techniques like Model Soups, Spherical Linear Interpolation, Task Arithmetic, and others are highlighted, along with their applications, advantages, and performance outcomes. The material is authored by Julien Simon and is shared under a Creative Commons license allowing non-commercial sharing and adaptation with proper attribution.

![The author of this material is Julien Simon https://www.linkedin.com/in/juliensimon unless explicitly mentioned.

This material is shared under the CC BY-NC 4.0 license https://creativecommons.org/licenses/by-nc/4.0/

You are free to share and adapt this material, provided that you give appropriate credit, provide a link to the license, and indicate if changes were made.

You may not use the material for commercial purposes. You may not apply any restriction on what the license permits.

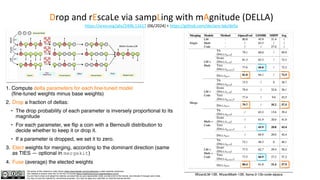

Model breadcrumbs

https://arxiv.org/abs/2312.06795 (12/2023) + https://github.com/rezazzr/breadcrumbs

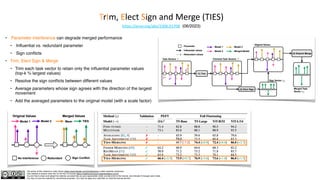

• Improvement of the Task Arithmetic technique

• Compute a task direction for each fine-tuned model

(fine-tuned weights minus base weights)

• Mask (set to zero) a percentage of tiny and large

outliers in each task direction, resp. β and ɣ

(this is equivalent to using the parameter from the base model)

• Sum the base model and the masked task

directions, with a task weight (⍺)

• This method outperforms Task Vectors. It also scales much better to a large number of tasks: « After finding

optimal hyperparameters for both Model Breadcrumbs and Task Vectors using 10 tasks, we kept these

hyperparameters and incrementally merged all 200 tasks to create a multi-task model. […] the observed trend

remains, with Model Breadcrumbs (85% sparsity) consistently outperforming Task Vectors by a significant margin

as the number of tasks increases. »

• Merging also improves the

performance of fine-tuned models

• Optionally, add sign election before

merging (TIES method).

T5-base fined tuned on 4 GLUE tasks, then merged with six other T5 variants (IMDB, etc.)](https://image.slidesharecdn.com/deepdivemodelmerging-240811121521-049c97ee/85/Julien-Simon-Deep-Dive-Model-Merging-11-320.jpg)

![The author of this material is Julien Simon https://www.linkedin.com/in/juliensimon unless explicitly mentioned.

This material is shared under the CC BY-NC 4.0 license https://creativecommons.org/licenses/by-nc/4.0/

You are free to share and adapt this material, provided that you give appropriate credit, provide a link to the license, and indicate if changes were made.

You may not use the material for commercial purposes. You may not apply any restriction on what the license permits.

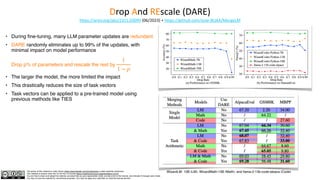

Model stock

https://arxiv.org/abs/2403.19522 + https://github.com/naver-ai/model-stock (03/2024)

• Observation 1: « Fine-tuned weights with different random

seeds reside on a very thin shell layer-wise »

3D intuition: for each layer, vectors from all fine-tuned models live on a sphere

• Observation 2: « Going closer to the center by averaging the

weights leads to improving both performances. » [ID, OOD]

• Model soups work because averaging

many single-task models gets us closer

to the center.

• However, building a large collection of

single-task models can be

computationally costly.

• By understanding the geometry of fine-

tuned weights, model stock allows us to

approximate the center coordinates from

just a few models.

• Periodic merging during fine-tuning

yields even better results.](https://image.slidesharecdn.com/deepdivemodelmerging-240811121521-049c97ee/85/Julien-Simon-Deep-Dive-Model-Merging-12-320.jpg)