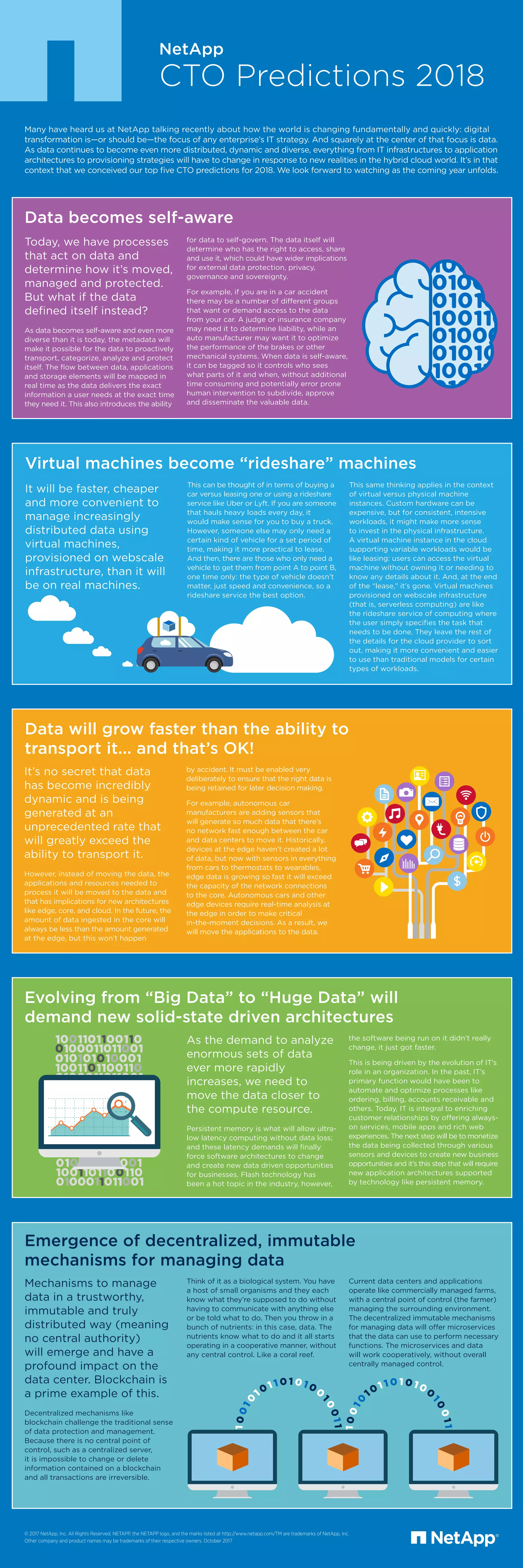

In 2018, the shift from 'big data' to 'huge data' requires new solid-state architectures for faster data processing and analysis. As data becomes self-aware and increasingly dynamic, it will begin to self-govern access and protection, leading to new application architectures, especially at the edge. The rise of decentralized, immutable data management mechanisms like blockchain will further impact traditional data centers and IT strategies, necessitating a focus on digital transformation.