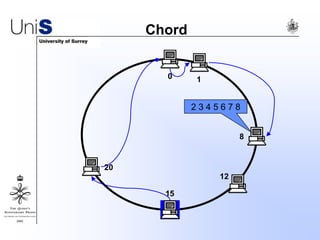

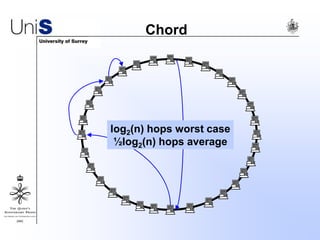

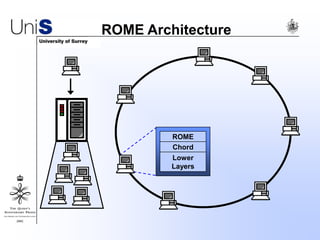

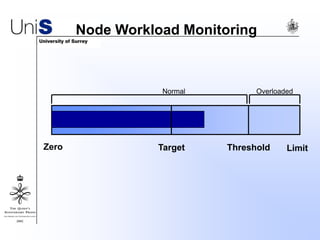

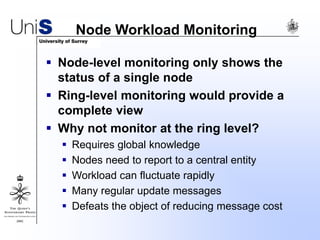

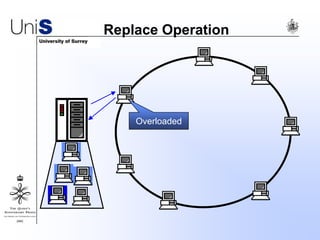

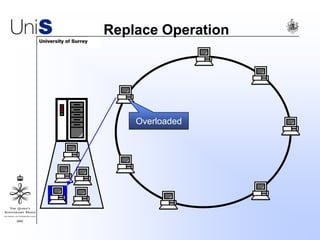

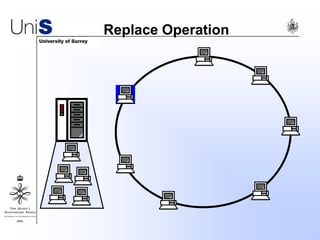

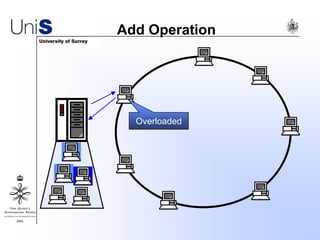

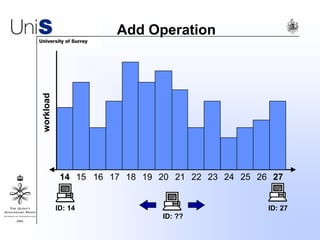

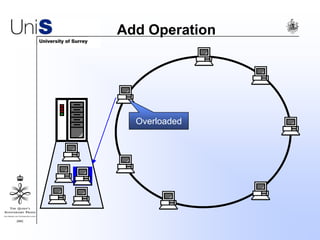

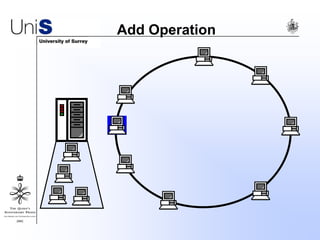

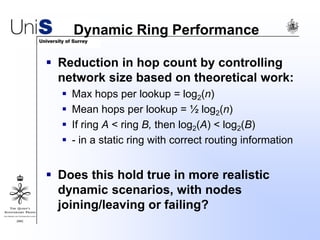

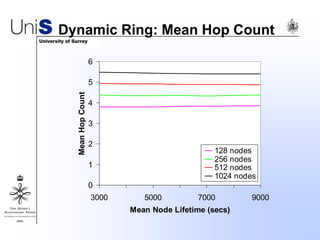

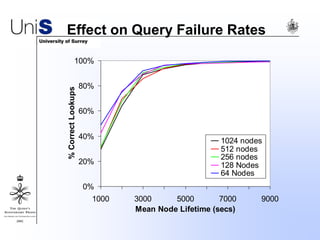

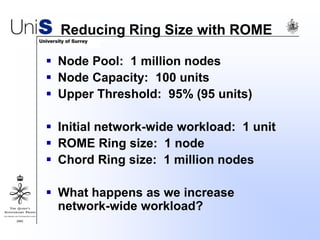

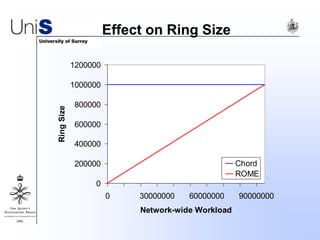

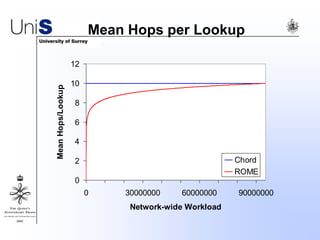

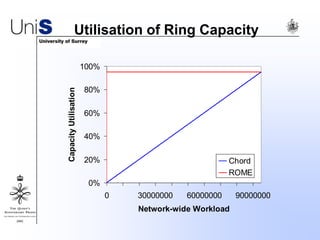

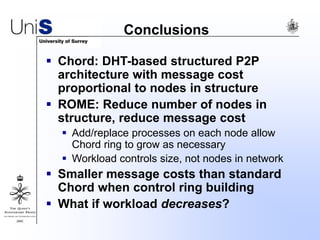

The document describes ROME, an architecture that optimizes DHT-based peer-to-peer networks such as Chord. ROME aims to reduce message costs by keeping the ring just large enough to support the current workload, rather than letting it grow with the total number of nodes in the network. Each node monitors its own workload and triggers add or replace operations to adjust the ring size dynamically as needed. Simulation results show that, under fluctuating workloads and node lifetimes, ROME achieves smaller average ring sizes, lower hop counts, and lower query failure rates than Chord.
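
The sizing idea summarized above can be illustrated with a minimal sketch. It is not ROME's actual algorithm: the pool of spare nodes, the scalar capacity values, the `threshold` parameter, and the names `adjust_ring` and `expected_hops` are all assumptions made for illustration. The sketch prefers a replace (swapping the weakest ring member for a stronger spare, which leaves the ring size unchanged) and falls back to an add only when a replace would not cover the workload. The hop estimate uses the well-known Chord approximation of about half of log2(N) hops per lookup, which is why a smaller ring lowers message cost.

```python
import math


def expected_hops(ring_size):
    """Approximate average Chord lookup path length: about 0.5 * log2(N)."""
    return 0.5 * math.log2(ring_size) if ring_size > 1 else 0.0


def adjust_ring(ring, pool, workload, threshold=0.9):
    """Grow or upgrade the ring until it can carry the workload.

    ring, pool: lists of node capacities (hypothetical units), mutated in place.
    threshold:  assumed fraction of total capacity considered safe to use.
    Returns the list of actions taken, each "replace" or "add".
    """
    actions = []
    while workload > threshold * sum(ring) and pool:
        spare = max(pool)  # strongest spare node available
        pool.remove(spare)
        weakest = min(ring) if ring else None
        if (
            weakest is not None
            and threshold * (sum(ring) - weakest + spare) >= workload
        ):
            # Replace: ring size stays the same, capacity goes up.
            ring.remove(weakest)
            ring.append(spare)
            pool.append(weakest)
            actions.append("replace")
        else:
            # Add: ring grows by one node.
            ring.append(spare)
            actions.append("add")
    return actions


if __name__ == "__main__":
    ring, pool = [10, 10], [30, 10, 10]
    print(adjust_ring(ring, pool, workload=30), ring)
    print(expected_hops(16), expected_hops(1024))
```

Under these assumptions, a ring of 16 nodes needs roughly 2 hops per lookup while a ring of 1024 nodes needs roughly 5, so holding the ring at the smallest size that carries the workload directly reduces per-query messages.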