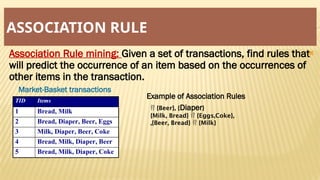

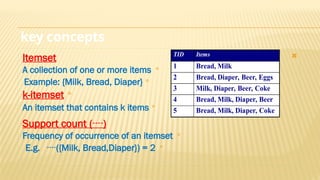

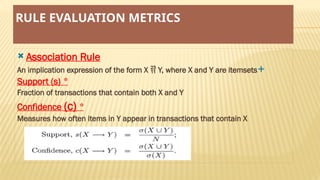

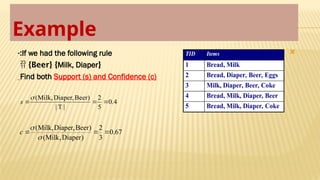

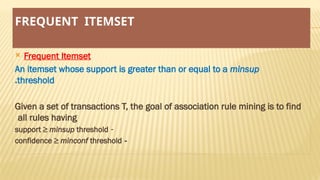

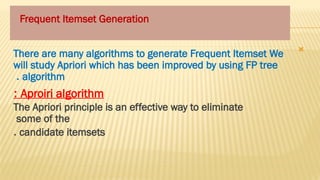

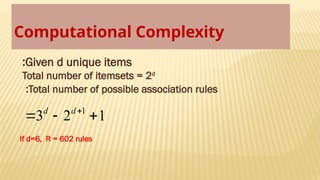

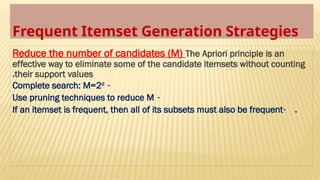

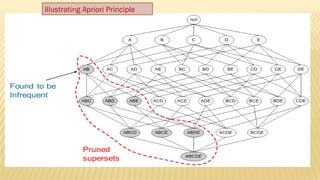

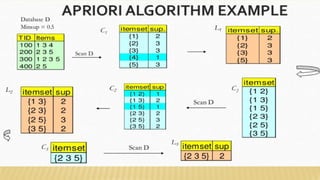

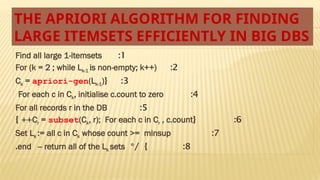

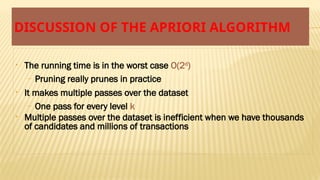

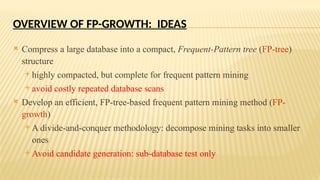

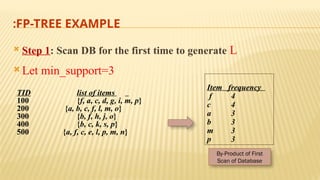

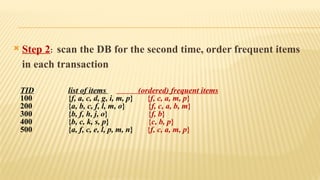

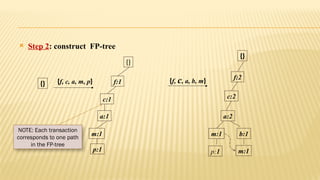

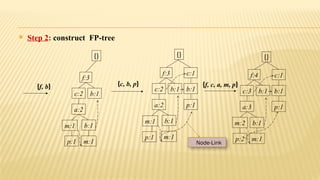

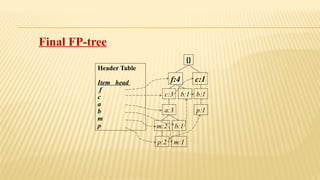

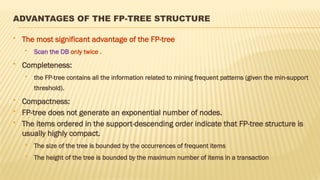

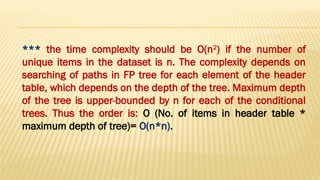

The document presents an improvised Apriori algorithm that uses a frequent pattern tree (FP-tree) for association rule mining, where the goal is to find rules that predict the occurrence of an item from the other items in a market-basket transaction. It explains key concepts such as itemsets, support count, and the Apriori principle (every subset of a frequent itemset must itself be frequent), and details how the algorithm uses that principle to prune candidate itemsets. It also describes the FP-growth method for mining frequent patterns from a database, highlighting its two-scan process: one scan to count item supports and a second to build a compact FP-tree.
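The two ideas summarized above can be sketched in a few lines of Python. This is a minimal illustration of textbook Apriori pruning and of the two-scan FP-tree construction, not the improvised algorithm from the slides. The function names (`apriori`, `build_fp_tree`), the `FPNode` class, the toy transactions, and the alphabetical tie-break for items with equal support are all assumptions made for the example.

```python
from collections import Counter
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset: support_count} for every frequent itemset."""
    transactions = [frozenset(t) for t in transactions]
    candidates = {frozenset([i]) for t in transactions for i in t}
    frequent = {}
    k = 1
    while candidates:
        # Support count: number of transactions containing the candidate.
        counts = Counter(c for t in transactions for c in candidates if c <= t)
        level = {c: n for c, n in counts.items() if n >= min_support}
        frequent.update(level)
        k += 1
        # Join step: merge frequent (k-1)-itemsets into k-item candidates.
        joined = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step (Apriori principle): drop any candidate that has an
        # infrequent (k-1)-subset, before its support is ever counted.
        candidates = {
            c for c in joined
            if all(frozenset(s) in level for s in combinations(c, k - 1))
        }
    return frequent


class FPNode:
    """One node of the FP-tree: an item, its count, and its children."""

    def __init__(self, item, parent=None):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children = {}


def build_fp_tree(transactions, min_support):
    """Build an FP-tree with exactly two passes over the transactions."""
    # Scan 1: count the support of each individual item.
    item_counts = Counter(i for t in transactions for i in set(t))
    keep = {i for i, n in item_counts.items() if n >= min_support}
    root = FPNode(None)
    # Scan 2: insert each transaction's frequent items, ordered by
    # descending support (ties broken alphabetically, an assumption here).
    for t in transactions:
        ordered = sorted((i for i in set(t) if i in keep),
                         key=lambda i: (-item_counts[i], i))
        node = root
        for item in ordered:
            if item not in node.children:
                node.children[item] = FPNode(item, node)
            node = node.children[item]
            node.count += 1
    return root


# Hypothetical market-basket data, for illustration only.
transactions = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]

frequent = apriori(transactions, min_support=3)
tree = build_fp_tree(transactions, min_support=3)
```

In this toy data, the candidate `{bread, diapers, beer}` is discarded in the prune step because its subset `{bread, beer}` is not frequent, so its support is never counted. The FP-tree, by contrast, generates no candidates at all: transactions that share a prefix of frequent items share a path in the tree, which is what keeps the structure compact.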