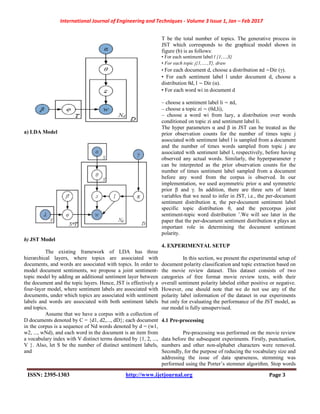

This document summarizes an article from the International Journal of Engineering and Techniques that proposes a new model called the Joint Sentiment Topic (JST) model for sentiment analysis. The JST model aims to detect sentiments and topics simultaneously from text using a Gibbs sampling algorithm. It extends the Latent Dirichlet Allocation (LDA) topic model by adding an additional sentiment layer to model how topics are generated based on sentiment distributions and words based on sentiment-topic pairs. The document describes the JST model and its generative process. It also discusses the experimental setup used to evaluate the JST model on a movie review dataset, including preprocessing, defining model priors using different subjectivity lexicons, and incorporating the model priors into the J

![International Journal of Engineering and Techniques - Volume 3 Issue 1, Jan – Feb 2017

ISSN: 2395-1303 http://www.ijetjournal.org Page 5

vocabulary and for each sentiment label{1 . . . ;S}, if w is

found in the sentiment lexicon, the element λlω is updated as

follows.

1 , i f S ( ) l

0 , o t h e r w i s e

l

5. CONCLUSION AND FUTURE WORK

General domain sentiment classification, by

incorporating a small amount of domain independent prior

knowledge, the JST model achieved either better or

comparable performance compared to existing semi-

supervised approaches despite using no labelled documents,

which demonstrates the flexibility of JST in the sentiment

classification task. Classification performance of JST is very

close to the best performance of Machine learning but save a

lot of annotation work. Topic information indeed helps in

sentiment classification as the JST model with the mixture of

topics consistently outperforms a simple LDA model

ignoring the mixture of topics.

In future, we plan to generalize the proposed

method to solve other types of domain adaptation tasks. The

JST model only incorporates prior knowledge from sentiment

lexicons for model learning. It is also possible to incorporate

other types of prior information, such as some known topical

knowledge of product reviews, for discovering more salient

topics about product features and aspects. Another possibility

is to develop a semi-supervised version of JST, with some

supervised information being incorporated into the model

parameter estimation procedure, such as use of the sentiment

labels of reviews derived automatically from the ratings

provided by users, to control the Dirichlet priors of the

sentiment distributions.

REFERENCES

[1] Chenghua Lin, Yulan He, Richard Everson,” Weakly Supervised Joint

Sentiment-Topic Detection from Text”, IEEE Transactions on Knowledge

and Data Engineering,Vol 24,no 6, pp.1134-1145,2012.

[2] A. Abbasi, H. Chen, and A. Salem.”Sentiment Analysis in Multiple

Languages: Feature Selection for Opinion Classification” in web forums.

ACM Trans. Inf. Syst., 26(3):1–34, 2008.

[3] Shenghua Bao, Shengliang Xu, Li Zhang, Rong Yan, Zhong Su, Dingyi

Han, and Yong Yu,” Mining Social Emotions from Affective Text,” IEEE

Transaction on Knowledge and Data Engineering, Vol 24,no 9, pp.1658-

1670,2012.

[4] T. Li, Y. Zhang and V. Sindhwani, “A Non-Negative Matrix Tri-

Factorization Approach to Sentiment Classification with Lexical Prior

Knowledge,” Proc. Joint Conf. 47th Ann. Meeting of the ACL and the

Fourth Int’l Joint Conf. Natural Language Processing of the AFNLP, pp.

244-252, 2009.

[5] Andreevskaia and S. BerglerOvercoming , ”Domain Dependence in

Sentiment Tagging”. In Proceedings of the Association for Computational

Linguistics and the Human Language Technology Conference (ACL-

HLT), pages 290–298, 2008.

[6] Helmut Prendinger, Alena Neviarouskaya and Mitsuru

Ishizuka“SentiFul: A Lexicon for Sentiment Analysis”, Proc. Fourth Int’l

Workshop Semantic Evaluations (SemEval’07), pp. 70-74, 2007.

[7] Argamon, K. Bloom, A. Esuli, and F. Sebastiani.”Automatically

Determining Attitude Type for Multi-Domain Sentiment Analysis”. Human

Language Technology. Challenges of the Information Society, pages 218–

231, 2009.

[8] P.C. Chang, M. Galley, and C. Manning, “Optimizing Chinese Word

Segmentation for Machine Translation Performance,” Proc.Assoc.for

Computational Linguistics (ACL) Third Workshop Statistical Machine

Translation, 2008.

[9] R. Tokuhisa, K. Inui, and Y. Matsumo “Emotion Classification Using

Massive Examples Extracted From The Web,” .Proc. 16th Int’l World

Wide Web Conf. (WWW ’07), 2007.

[10] W.H. Lin, E. Xing,and A. Hauptmann,“A Joint Topic and Perspective

Model for Ideological Discourse, ” Proc.European Conf. Machine Learning

and Principles and Practice of Knowledge Discovery in Databases

(ECML/PKDD ’08), pp. 17-32, 2008.

[11] G. Mishne, K. Balog, M. de Rijke, and B. Ernsting, “Moodviews:

Tracking and Searching Mood-Annotated Blog Posts,” Proc. Int’l AAAI

Conf. Weblogs and Social Media (ICWSM ’07), 2007.

[12] A.M. Popescu and O. Etzioni, “Extracting Product Features and

Opinions from Reviews,” Proc. Joint Conf. Human Language Technology

and Empirical Methods in Natural Language Processing (HLT/EMNLP

’05), pp. 339-346, 2005.](https://image.slidesharecdn.com/ijet-v3i1p1-180713112658/85/IJET-V3I1P1-5-320.jpg)