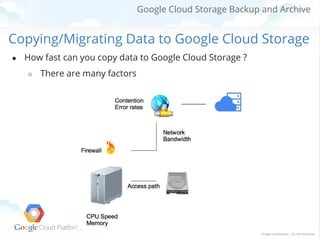

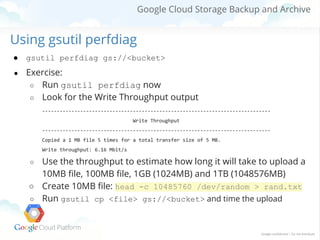

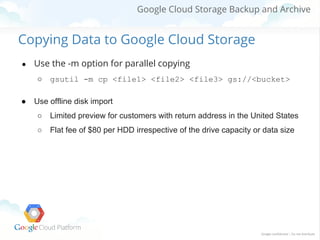

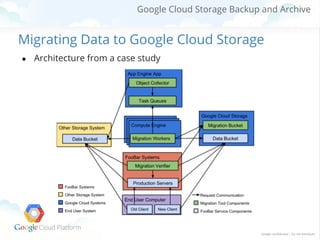

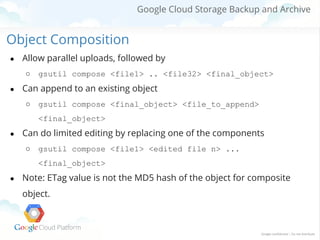

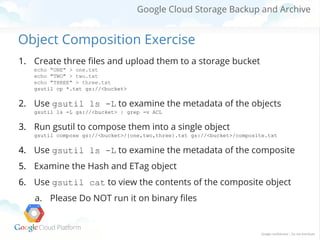

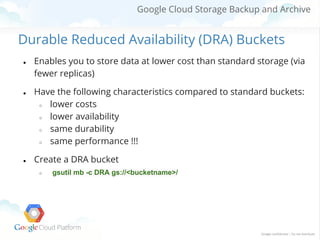

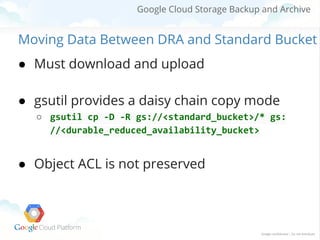

The document covers migrating, backing up, and archiving data to Google Cloud Storage (GCS), with a focus on using tools such as gsutil to transfer data and speed up uploads. It discusses strategies for moving data efficiently, including parallel uploads, and describes how Durable Reduced Availability (DRA) buckets can lower storage costs. It also provides practical exercises and case studies to help readers understand and carry out these processes.
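As a rough illustration of the parallel-upload strategy, the sketch below assembles a gsutil command line that combines two real gsutil options: the top-level `-m` flag, which runs the copy across many files in parallel, and the `parallel_composite_upload_threshold` boto setting passed with `-o`, which splits individual large files into parts that are uploaded concurrently and composed in the bucket. The helper name `build_parallel_copy_cmd`, the `150M` threshold, and the bucket `gs://example-archive-bucket` are hypothetical choices for this example, and actually running the command assumes gsutil is installed and authenticated.

```python
import shlex
from typing import List, Sequence


def build_parallel_copy_cmd(
    sources: Sequence[str],
    dest_url: str,
    composite_threshold: str = "150M",  # hypothetical example value
    recursive: bool = False,
) -> List[str]:
    """Return an argv list for a parallel gsutil copy (illustrative helper).

    -m parallelizes the copy across files; the -o override enables parallel
    composite uploads for any single file larger than composite_threshold.
    """
    if not sources:
        raise ValueError("at least one source is required")
    if not dest_url.startswith("gs://"):
        raise ValueError("destination must be a gs:// URL")

    cmd = [
        "gsutil",
        "-m",  # top-level flag: parallel (multi-threaded/multi-process) operation
        "-o",
        f"GSUtil:parallel_composite_upload_threshold={composite_threshold}",
        "cp",
    ]
    if recursive:
        cmd.append("-r")  # copy directory trees
    cmd.extend(sources)
    cmd.append(dest_url)
    return cmd


if __name__ == "__main__":
    argv = build_parallel_copy_cmd(
        ["./backups"], "gs://example-archive-bucket/2024/", recursive=True
    )
    # Print a shell-safe version of the command for inspection.
    print(shlex.join(argv))
    # To execute it (requires an installed, authenticated gsutil):
    # import subprocess; subprocess.run(argv, check=True)
```

Returning an argv list rather than a single string avoids shell-quoting problems when it is passed to `subprocess.run`. One trade-off to keep in mind is that composite objects have no MD5 hash, so gsutil needs a compiled `crcmod` module to verify their integrity on download.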