The document discusses the applications of artificial intelligence in analyzing media web content, detailing processes such as data aggregation, transformation, and refinement, as well as machine learning algorithms and evaluation methods. It highlights the structure and functioning of multilayer perceptrons (MLPs), including their training through backpropagation and their differences from linear perceptrons. Additionally, it covers various classification tasks, algorithms, feature selection techniques, and named entity recognition frameworks.

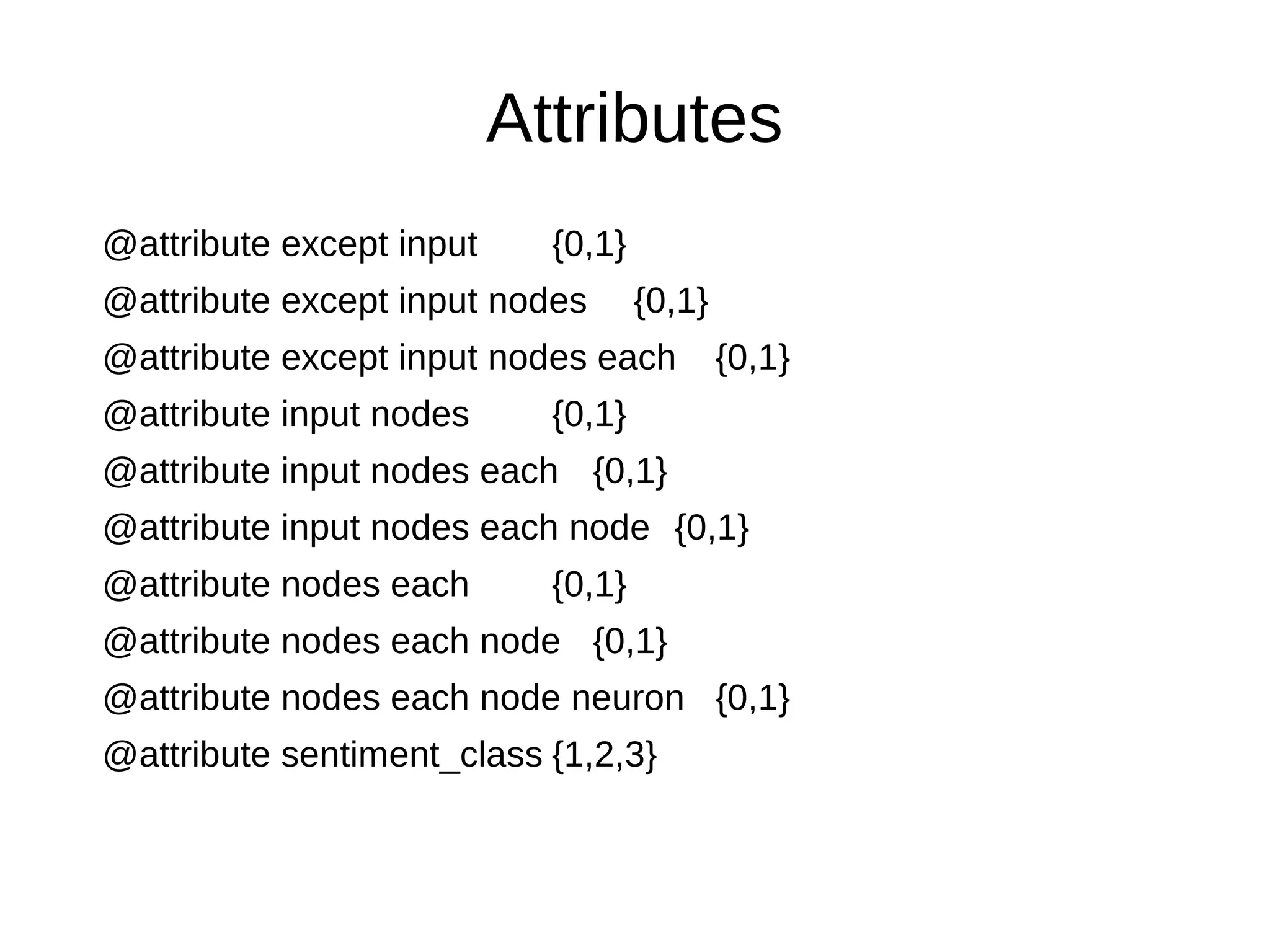

![Data transformation and refinement

Original:

A <b>multilayer perceptron</b> (MLP) is a class of <a href="/wiki/Feedforward_neural_network" title="Feedforward neural network">feedforward</a> <a href="/wiki/Artificial_neural_network" title="Artificial neural network">artificial neural network</a>. An MLP consists of at least three layers of nodes. Except for the input nodes, each node is a neuron that uses a nonlinear <a href="/wiki/Activation_function" title="Activation function">activation function</a>. MLP utilizes a <a href="/wiki/Supervised_learning" title="Supervised learning">supervised learning</a> technique called <a href="/wiki/Backpropagation" title="Backpropagation">backpropagation</a> for training.<sup id="cite_ref-1" class="reference"><a href="#cite_note-1">[1]</a></sup><sup id="cite_ref-2" class="reference"><a href="#cite_note-2">[2]</a></sup> Its multiple layers and non-linear activation distinguish MLP from a linear <a href="/wiki/Perceptron" title="Perceptron">perceptron</a>. It can distinguish data that is not <a href="/wiki/Linear_separability" title="Linear separability">linearly separable</a>.<sup id="cite_ref-Cybenko1989_3-0" class="reference"><a href="#cite_note-Cybenko1989-3">[3]</a></sup></p>

<p>Multilayer perceptrons are sometimes colloquially referred to as "vanilla" neural networks, especially when they have a single hidden layer.<sup id="cite_ref-4" class="reference">

Stripped:

A multilayer perceptron (MLP) is a class of feedforward artificial neural network. An MLP consists of at least three layers of nodes. Except for the input nodes, each node is a neuron that uses a nonlinear activation function. MLP utilizes a supervised learning technique called backpropagation for training.[1][2] Its multiple layers and non-linear activation distinguish MLP from a linear perceptron. It can distinguish data that is not linearly separable.[3]

Multilayer perceptrons are sometimes colloquially referred to as "vanilla" neural networks, especially when they have a single hidden layer.

Stemmed:

multilay perceptron class feedforward artifici neural network n consist least three layer node xcept input node each node neuron us nonlinear activ function util supervis learn techniqu call backpropag train ts multipl layer non linear activ distinguish from linear perceptron t can distinguish data linearli separ ultilay perceptron sometim colloqui refer vanilla neural network especi when have singl

hidden layer](https://image.slidesharecdn.com/plovdev-2017-identrics-deyanpeychev-171128123924/75/Identrics-3-2048.jpg)
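The three stages on this slide (original HTML, stripped text, stemmed tokens) can be approximated with a short Python sketch. This is an illustration under stated assumptions, not the pipeline used in the talk. The function names `strip_html`, `naive_stem`, and `refine` and the `STOP_WORDS` set are hypothetical. The stemmed output on the slide looks like that of a Porter-style stemmer, whereas `naive_stem` below is only a crude suffix stripper standing in for one, so its tokens will not match the slide exactly.

```python
import re
from html.parser import HTMLParser

# Assumed stop-word list for illustration only; the slide does not say which list was used.
STOP_WORDS = {"a", "an", "the", "is", "of", "that", "for", "it", "its", "and", "to", "are", "as", "at", "not"}


class _TextExtractor(HTMLParser):
    """Collects only the text nodes of an HTML fragment, ignoring tags and attributes."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def strip_html(html):
    """'Original' -> 'Stripped': drop markup and collapse runs of whitespace."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return re.sub(r"\s+", " ", "".join(parser.parts)).strip()


def naive_stem(word):
    """Toy suffix stripper (NOT the Porter algorithm): removes 'ing', 'ed', or a final 's'."""
    if word.endswith("ss"):
        return word  # keep words such as 'class' intact
    for suffix in ("ing", "ed", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def refine(text):
    """'Stripped' -> 'Stemmed': drop citation markers, lowercase, remove stop words, stem."""
    text = re.sub(r"\[\d+\]", " ", text)         # citation markers such as [1]
    tokens = re.findall(r"[a-z]+", text.lower())  # alphabetic tokens only
    return [naive_stem(t) for t in tokens if t not in STOP_WORDS]
```

Calling `refine(strip_html(fragment))` on an HTML fragment returns a list of normalized tokens. For real workloads an established stemmer would be a better fit than `naive_stem`; the hand-rolled version is used here only to keep the sketch free of third-party dependencies.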