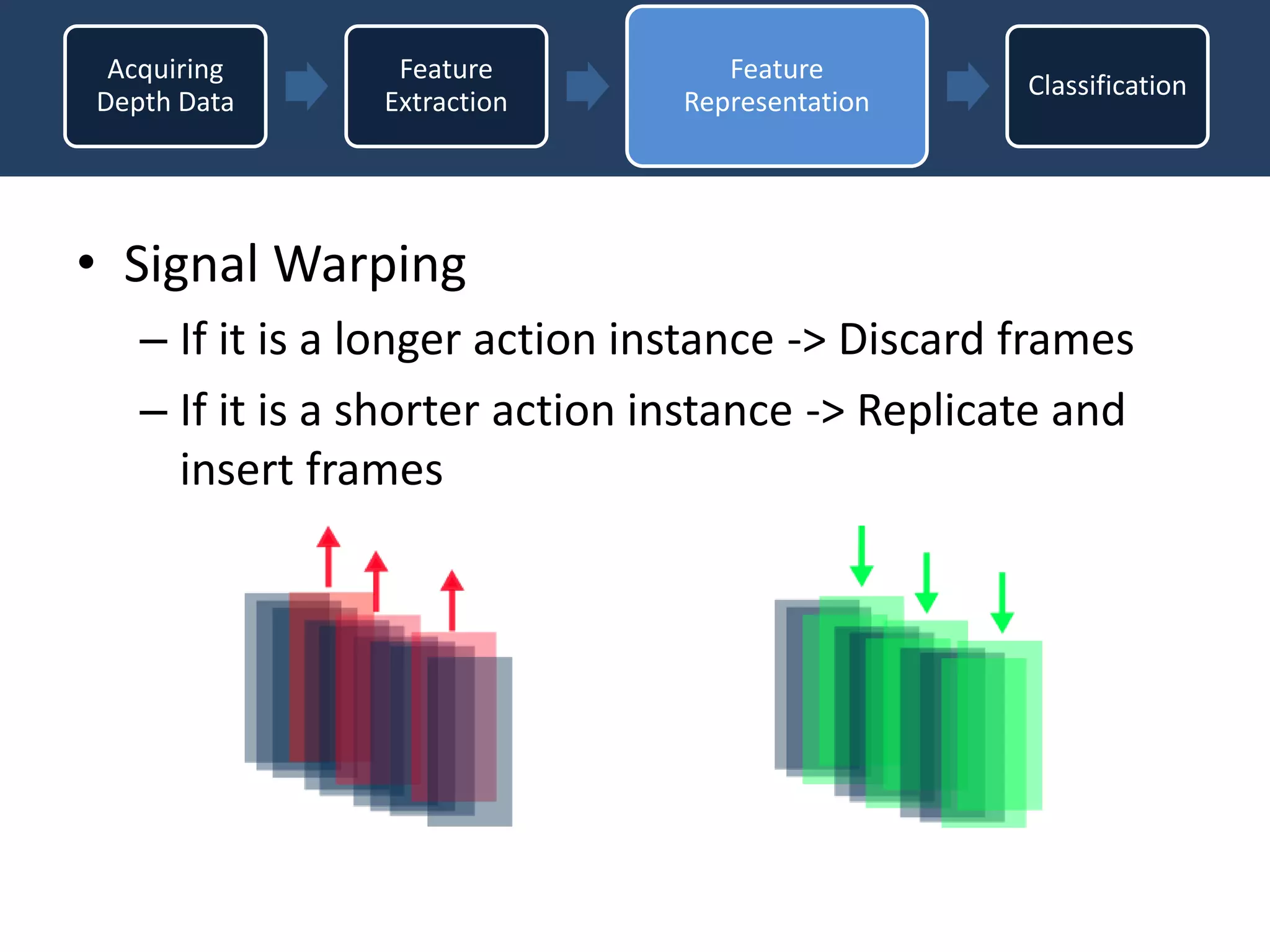

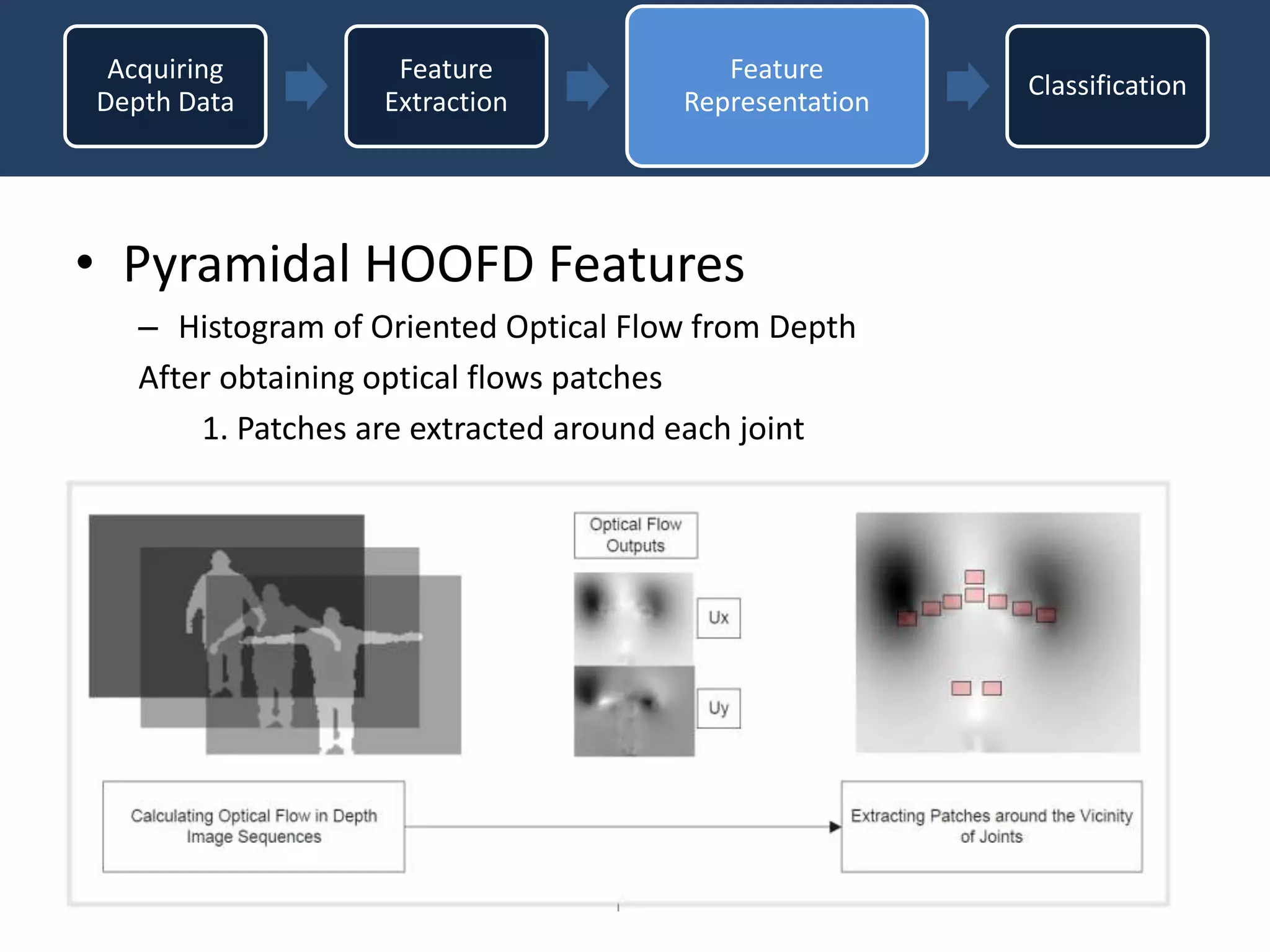

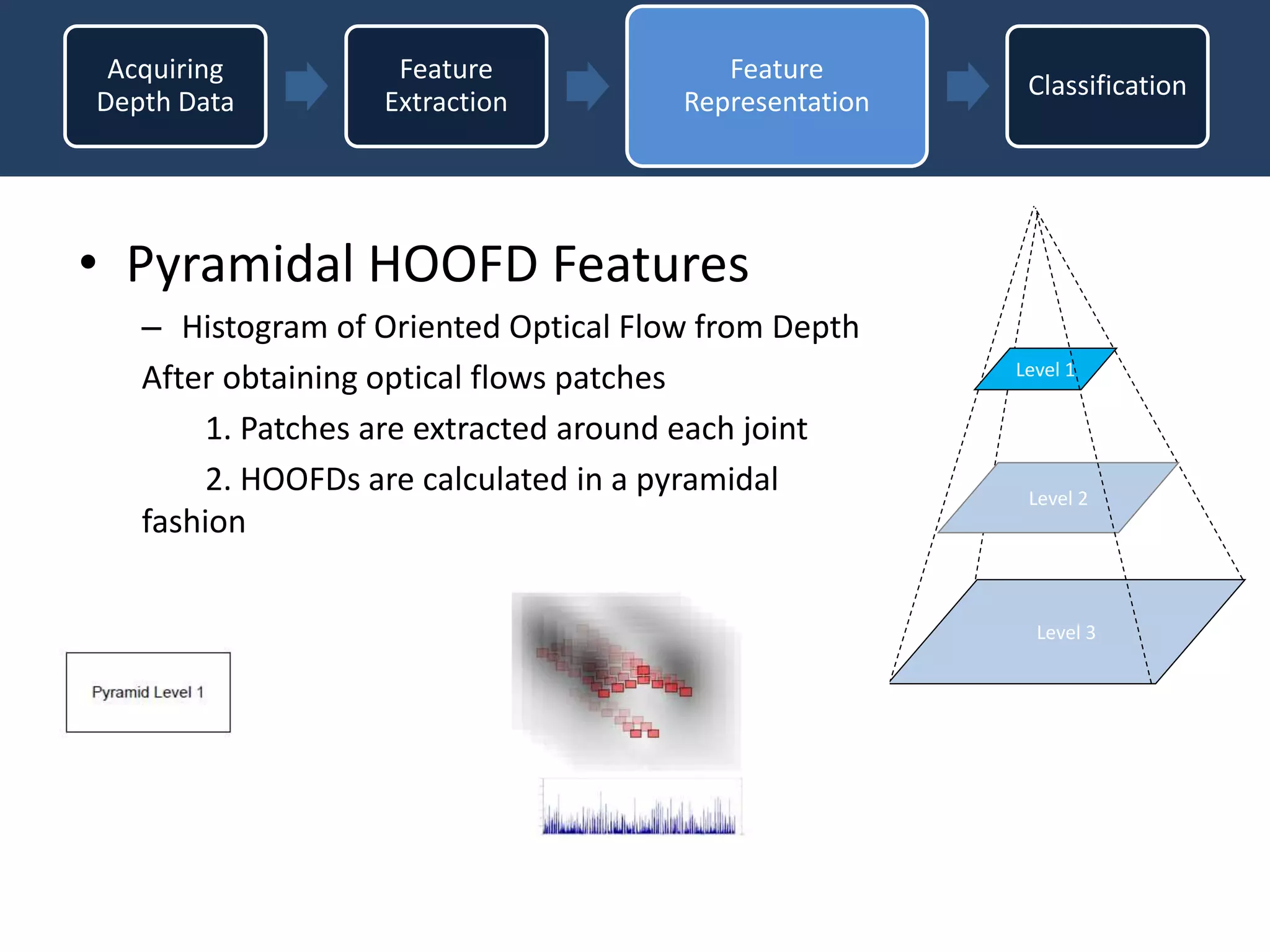

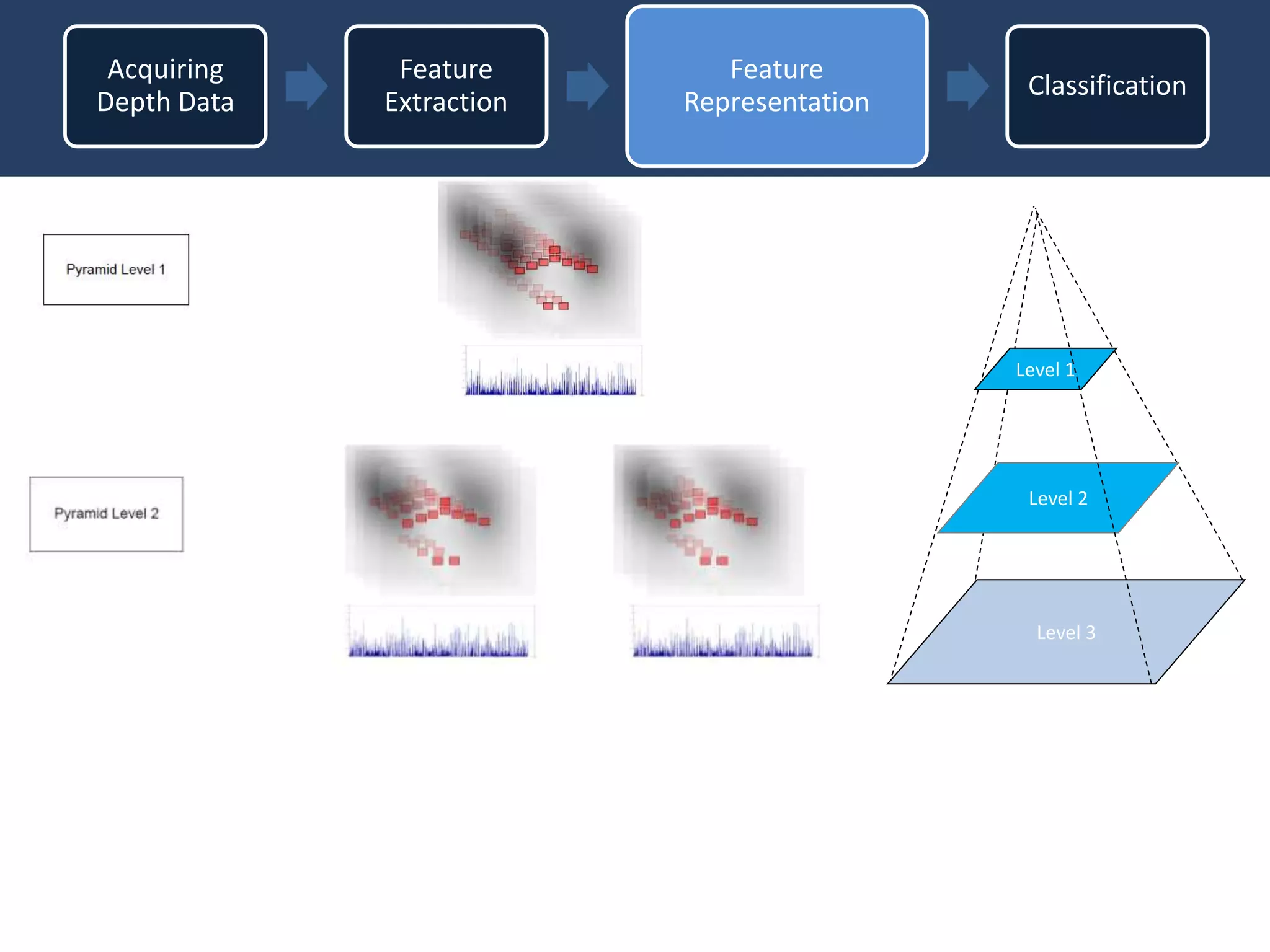

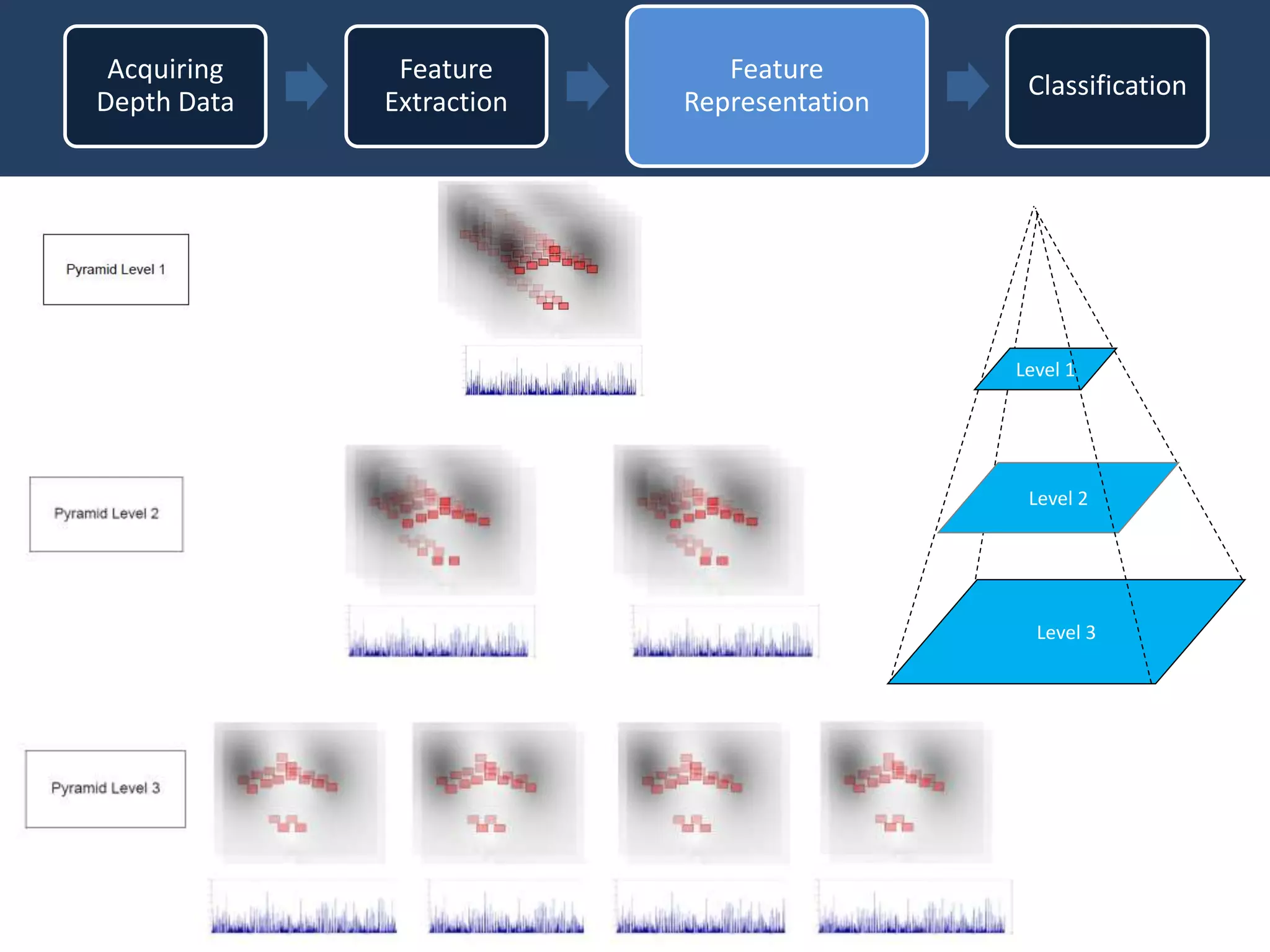

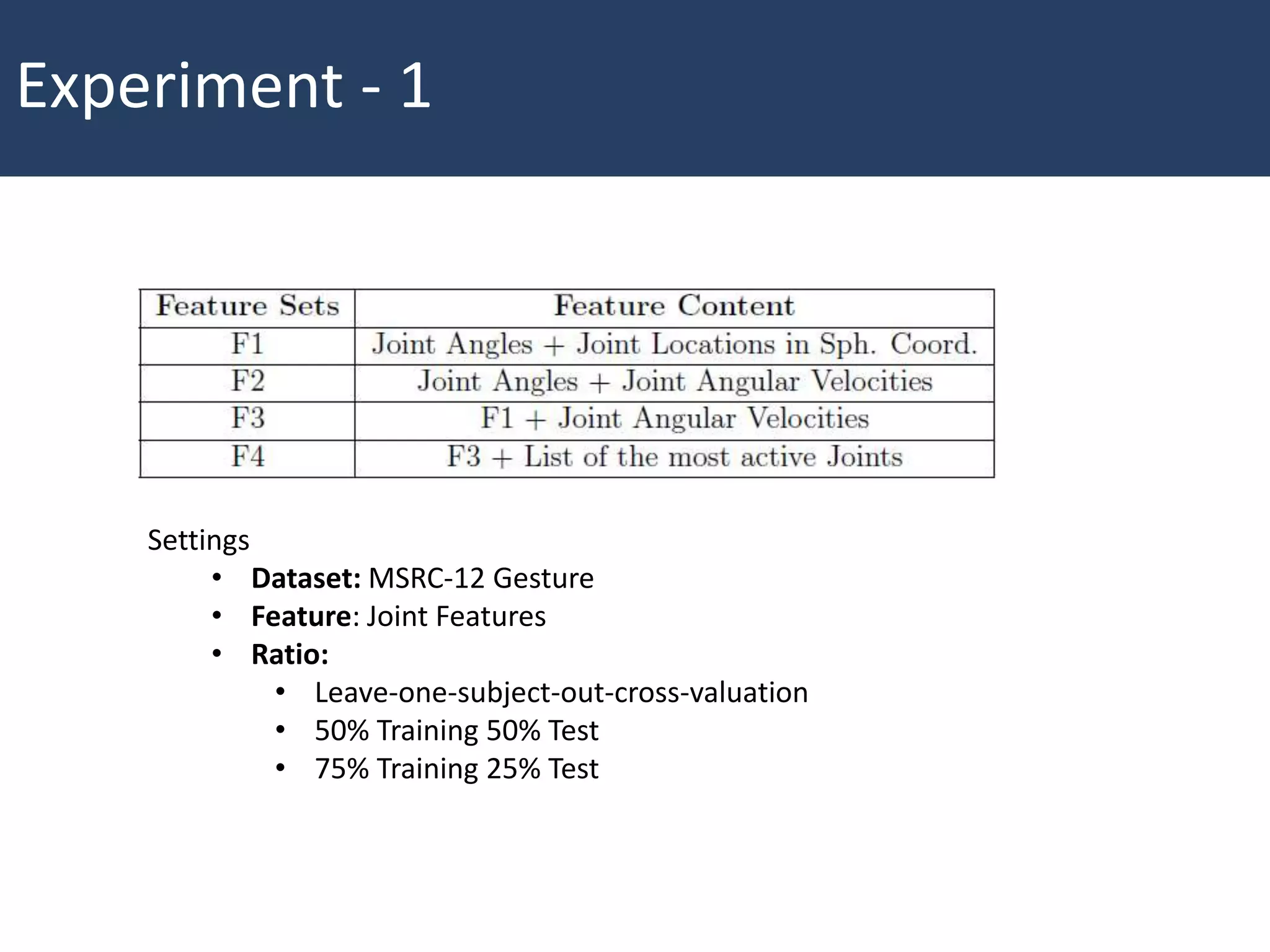

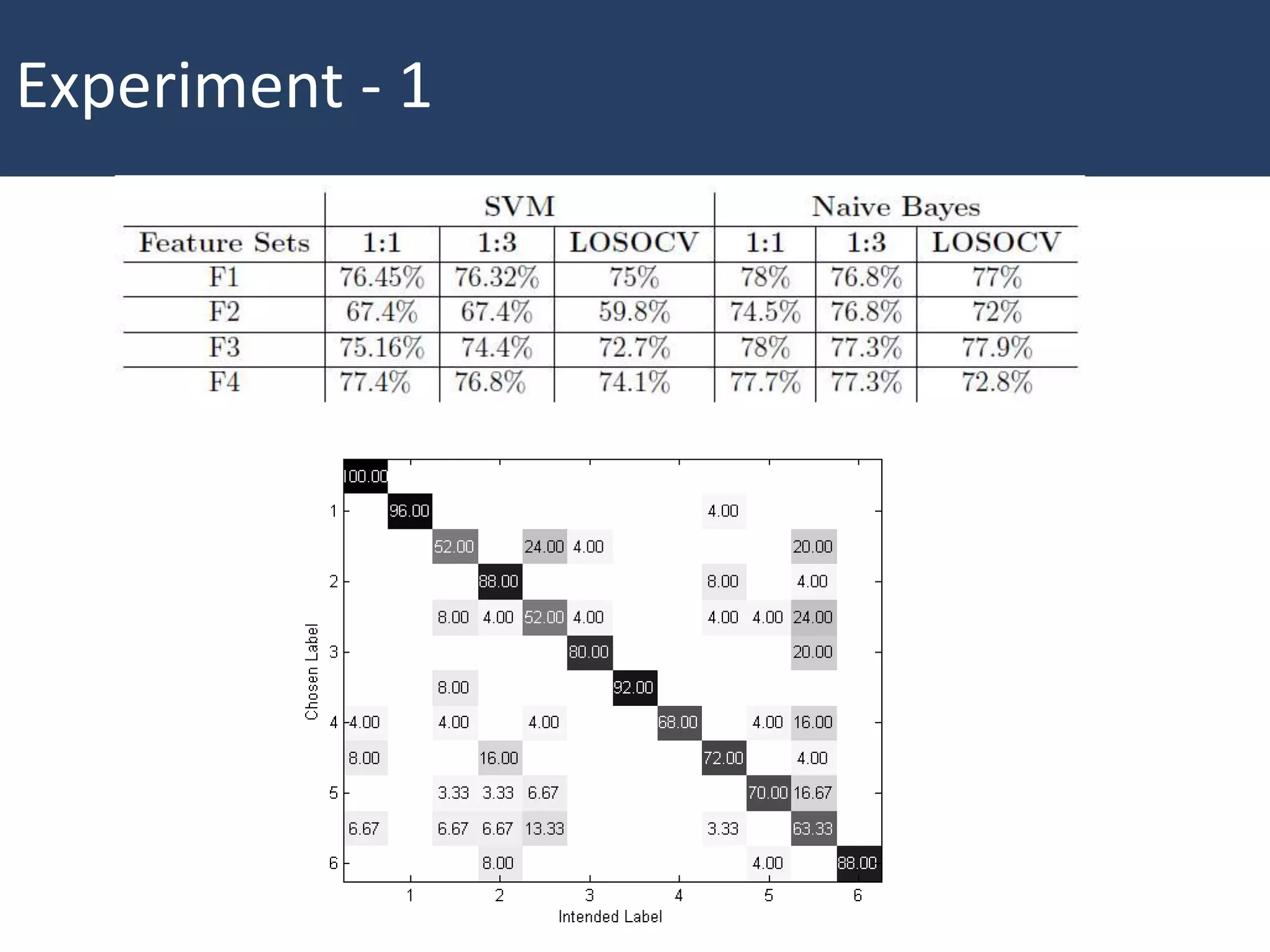

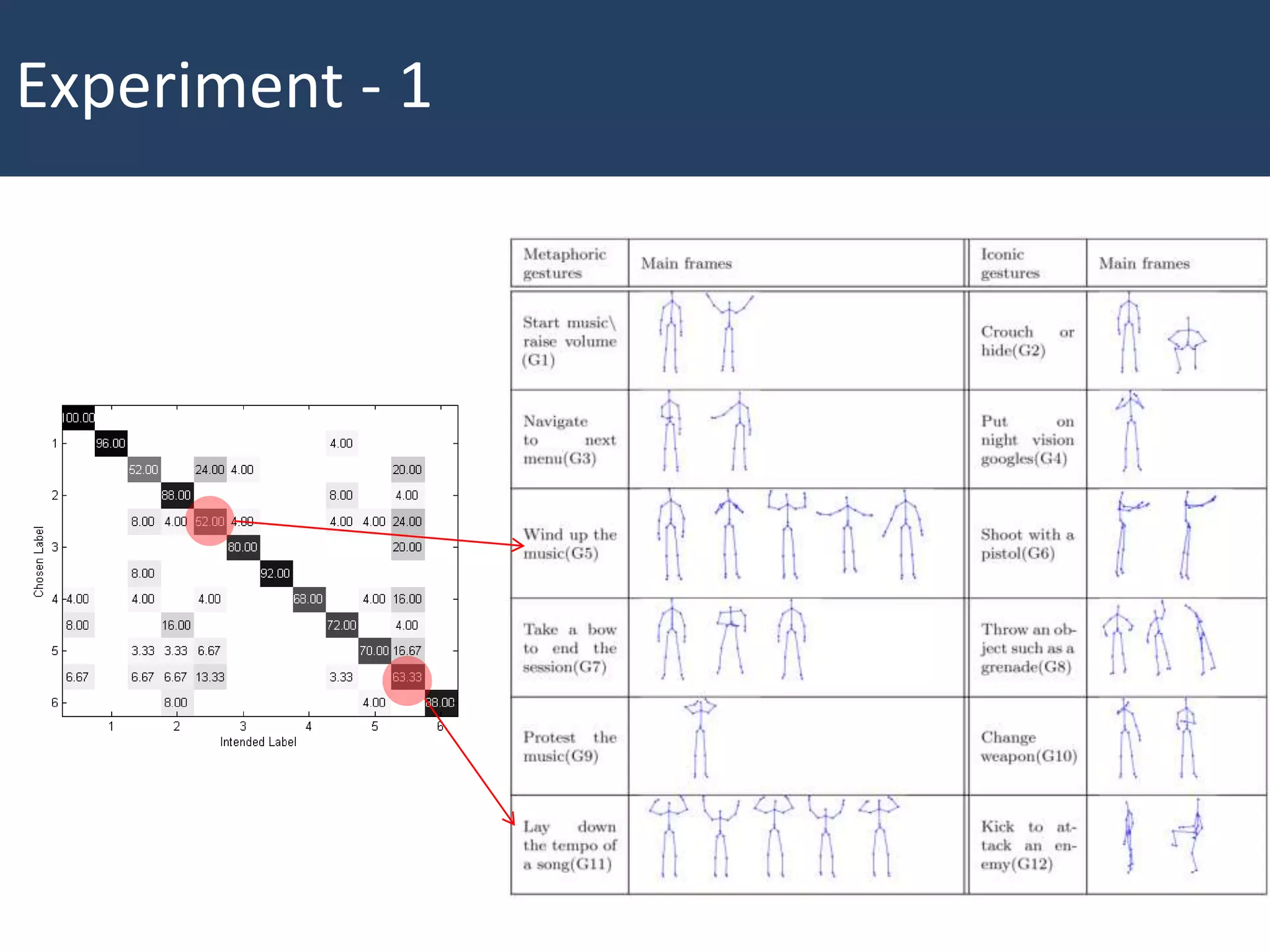

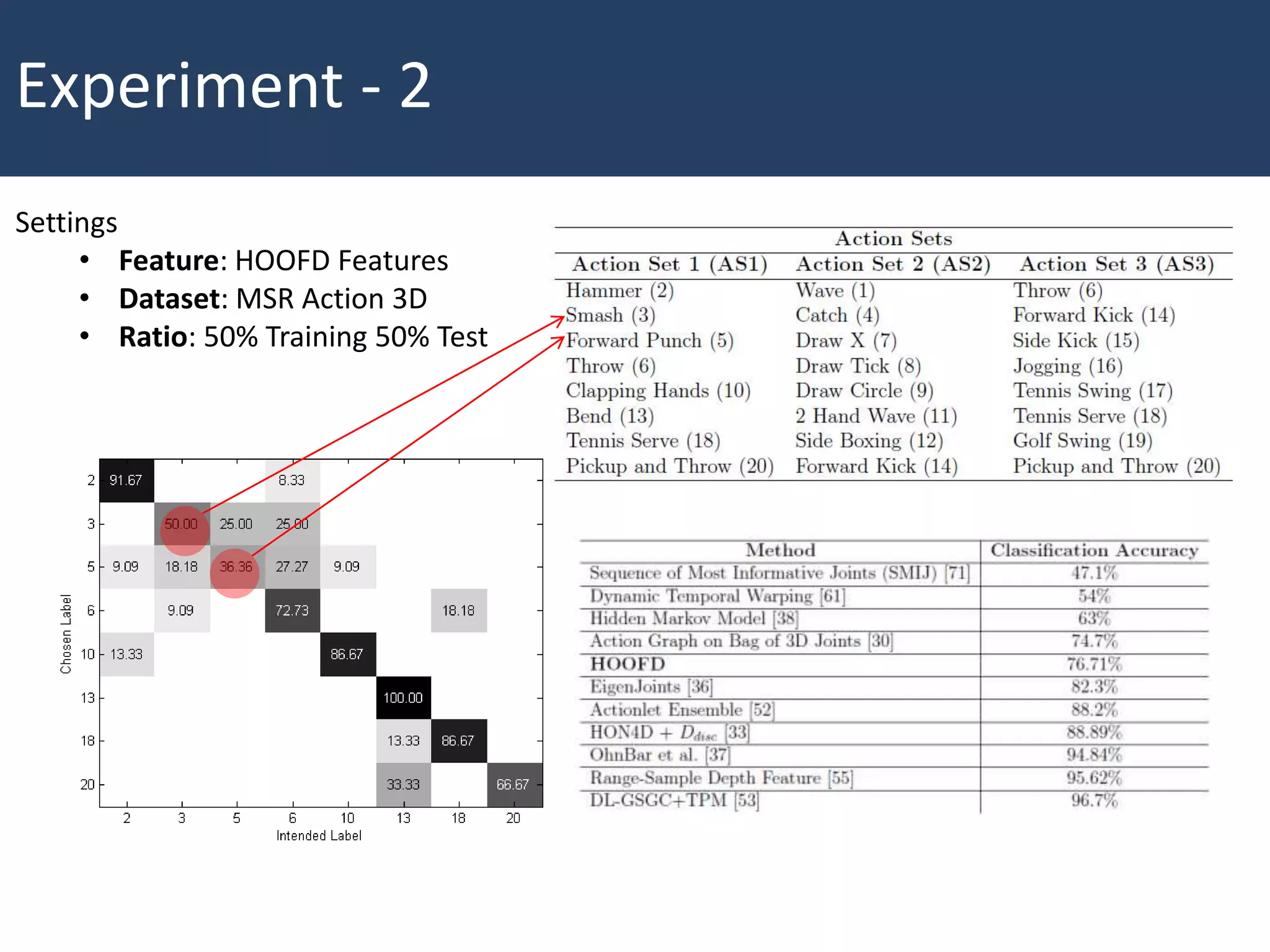

The thesis presents a novel framework for human action recognition that combines 3D joint information with Histogram of Oriented Optical Flow from Depth (HOOFD) features. It describes how depth data is acquired, how features are extracted and classified, and how the approach performs on several datasets. The results indicate that the proposed technique is effective compared with existing algorithms, and the thesis suggests future improvements in feature utilization and classification methods.
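To make the idea of an orientation histogram over optical flow concrete, here is a minimal sketch. It is not the thesis's HOOFD pipeline: it assumes the flow vectors have already been computed (for example from consecutive depth frames), it uses plain full-circle binning, and the function name `hoof_histogram` and its `num_bins` parameter are hypothetical names chosen for illustration.

```python
import math

def hoof_histogram(flow, num_bins=8):
    """Bin 2D flow vectors by orientation, weighting each by its magnitude.

    `flow` is an iterable of (dx, dy) pairs. This is an illustrative
    simplification, not the feature definition used in the thesis.
    """
    hist = [0.0] * num_bins
    bin_width = 2 * math.pi / num_bins
    for dx, dy in flow:
        magnitude = math.hypot(dx, dy)
        if magnitude == 0.0:
            continue  # a zero vector has no orientation
        angle = math.atan2(dy, dx) % (2 * math.pi)  # map angle to [0, 2*pi)
        index = min(int(angle / bin_width), num_bins - 1)  # guard the upper edge
        hist[index] += magnitude
    total = sum(hist)
    # Normalize so the histogram sums to 1 and does not depend on flow scale.
    return [h / total for h in hist] if total > 0 else hist

# Three flow vectors pointing right, up, and left, binned into 4 orientations.
example = hoof_histogram([(1.0, 0.0), (0.0, 2.0), (-1.0, 0.0)], num_bins=4)
```

Normalizing the histogram makes the descriptor insensitive to the overall amount of motion, so it could in principle be concatenated with joint-based features before classification; how the thesis actually combines the two is not specified in this summary.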

Motivation

• Motion Perception
  – Gunnar Johansson [1971]
• Sequence of images for Human Motion Analysis
• ‘Moving Light Displays’ enable identification of people and gender
• Motion Capture [2014]
  – Dawn of the Planet of the Apes

![Motivation](https://image.slidesharecdn.com/thesisrepresentation-140724033822-phpapp01/75/Human-Action-Recognition-Using-3D-Joint-Information-and-HOOFD-Features-4-2048.jpg)

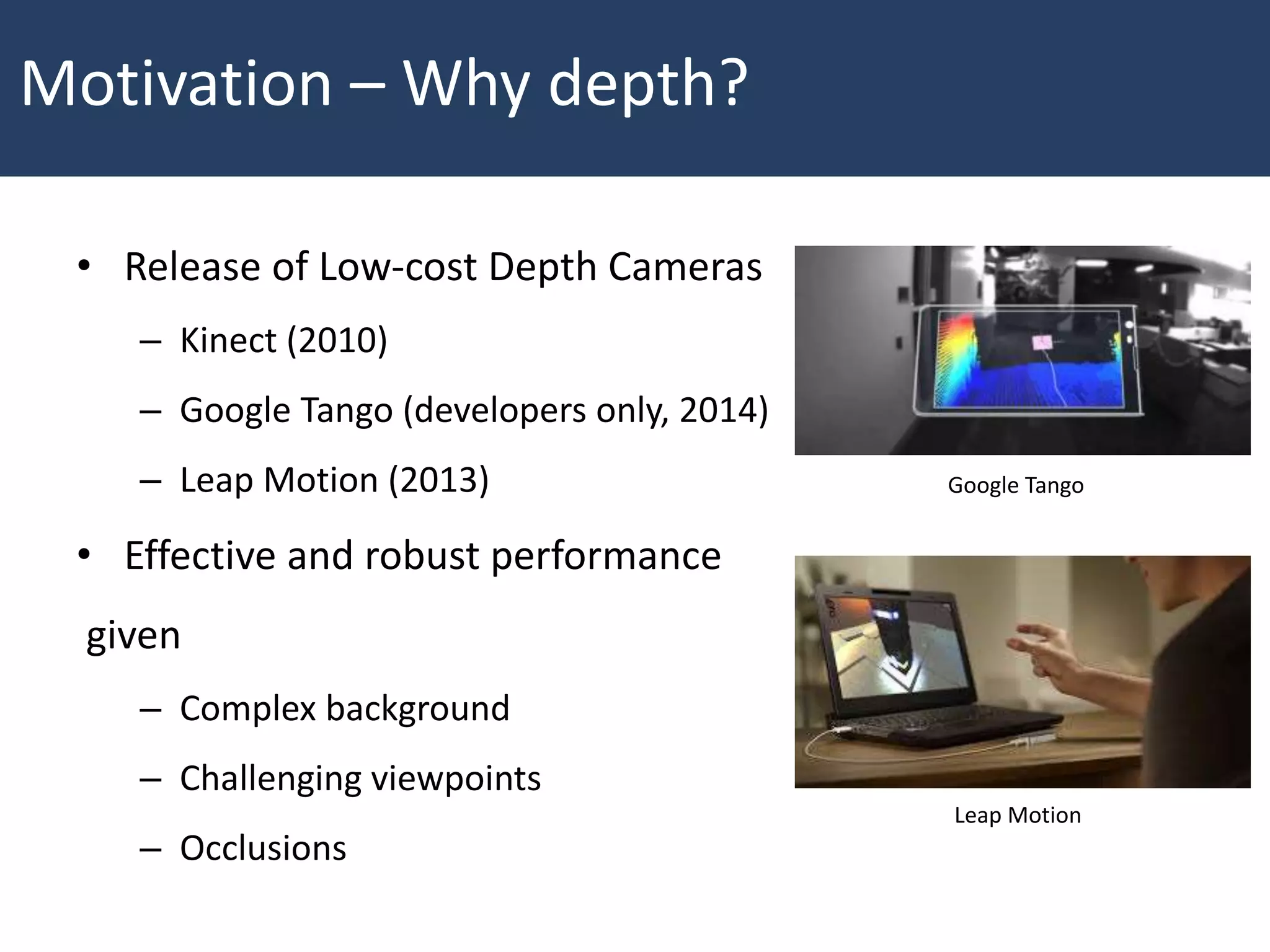

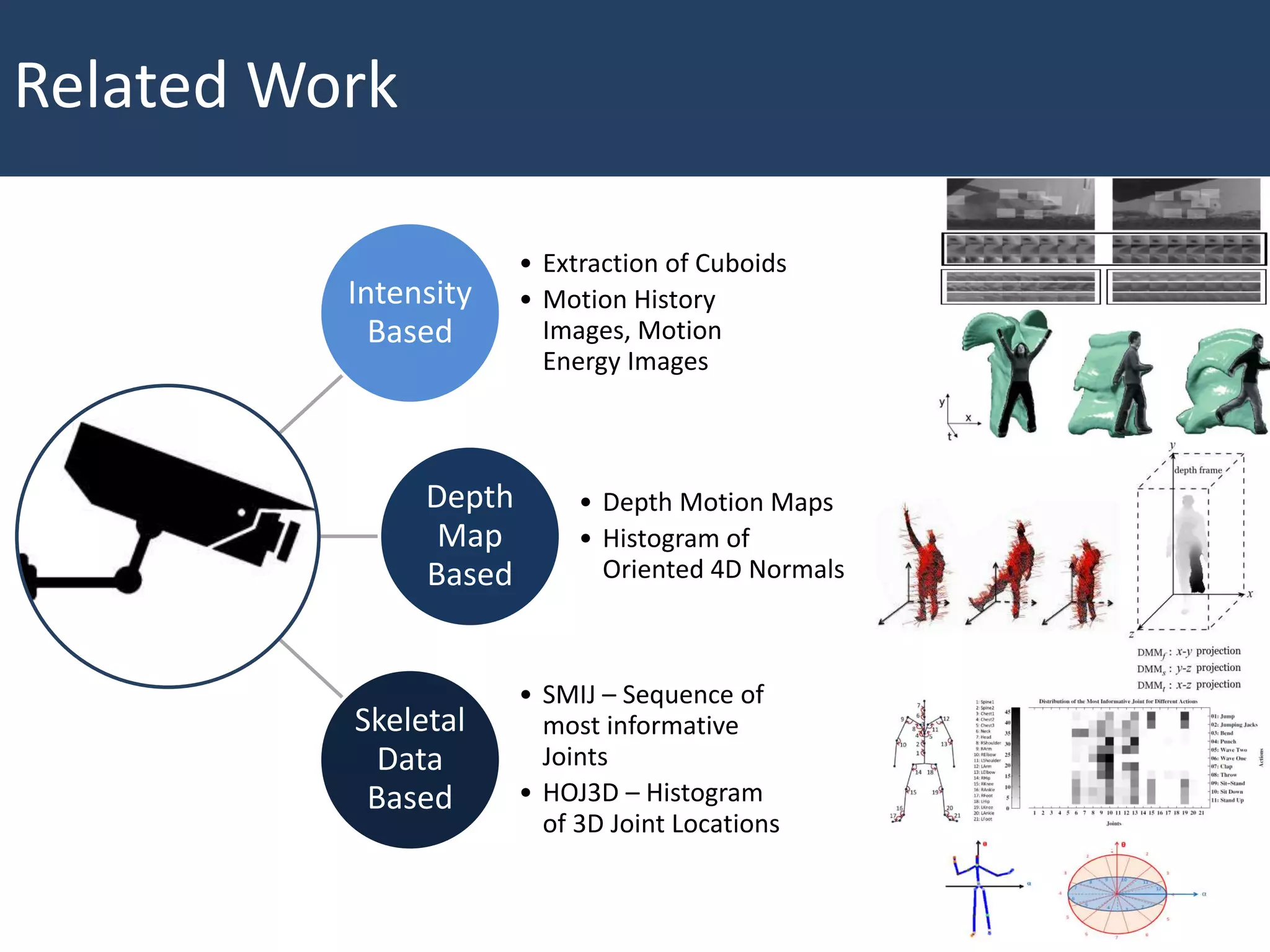

Related Work: Intensity Based

• Extraction of Cuboids, Dollar et al. [CVPR, 2005]
• Motion History Images, Motion Energy Images, Gorelick et al. [PAMI, 2007]

![Related Work: Intensity Based](https://image.slidesharecdn.com/thesisrepresentation-140724033822-phpapp01/75/Human-Action-Recognition-Using-3D-Joint-Information-and-HOOFD-Features-12-2048.jpg)

Related Work: Depth Map Based

• Histogram of Oriented 4D Normals (HON4D), Oreifej et al. [CVPR, 2013]
• Depth Motion Maps, Yang et al. [JRTIP, 2012]

![Related Work: Depth Map Based](https://image.slidesharecdn.com/thesisrepresentation-140724033822-phpapp01/75/Human-Action-Recognition-Using-3D-Joint-Information-and-HOOFD-Features-13-2048.jpg)

Related Work: Skeletal Data Based

• Sequence of Most Informative Joints (SMIJ), Ofli et al. [CVIU, 2013]
• View Invariant Human Action Recognition Using Histogram of 3D Joints, Xia et al. [CVPR, 2012]

![Related Work: Skeletal Data Based](https://image.slidesharecdn.com/thesisrepresentation-140724033822-phpapp01/75/Human-Action-Recognition-Using-3D-Joint-Information-and-HOOFD-Features-14-2048.jpg)