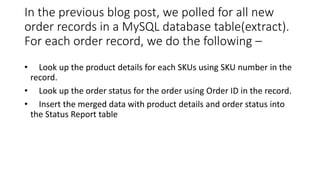

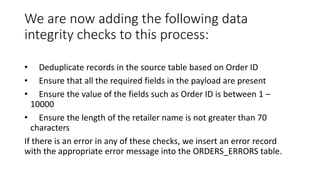

This document provides steps for adding data integrity checks to an existing ETL process on the MuleSoft Anypoint Platform. It covers checks for duplicate records, required fields, acceptable value ranges, and field length limits. Records that fail a check are inserted into an error table together with the error message. The process is demonstrated by enhancing a sample order-loading workflow to deduplicate on order IDs, validate the JSON schema, and log failed records to a separate errors table.

- Insert a DataWeave transformer to convert the JSON object into a Java HashMap so that we can extract individual values from the payload. Rename the component to "Convert JSON to Object" and configure it as shown below. You can also optionally set the metadata for the source and target to show the Order map object.
- Insert a Variable component from the palette into the Mule flow after the DataWeave transformer. Rename it to "Store exception" and configure it to store the exception in a variable called `exception`. Use the following MEL (Mule Expression Language) expression to set the variable: `#[getStepExceptions()]`

![Slide 14](https://image.slidesharecdn.com/howtodataintegritychecksinbatchprocessing-161031103139/85/How-to-data-integrity-checks-in-batch-processing-14-320.jpg)
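For readers who prefer the XML view over the graphical editor, the two components above might look roughly like the following fragment. This is a minimal sketch that assumes Mule 3 with DataWeave 1.0. The pass-through `payload` mapping is an assumption, since the slide's actual DataWeave script is not reproduced in the text, and the namespace declarations and the enclosing batch step are omitted.

```xml
<!-- Sketch only: namespace declarations and the enclosing batch step are omitted. -->

<!-- "Convert JSON to Object": DataWeave 1.0 script that emits the payload as a Java map.
     The pass-through mapping below is an assumption, not the slide's exact script. -->
<dw:transform-message doc:name="Convert JSON to Object">
    <dw:set-payload><![CDATA[%dw 1.0
%output application/java
---
payload]]></dw:set-payload>
</dw:transform-message>

<!-- "Store exception": keeps the batch step exceptions in flowVars.exception -->
<set-variable variableName="exception"
              value="#[getStepExceptions()]"
              doc:name="Store exception"/>
```

Because `getStepExceptions()` returns a map keyed by batch step name, later components can look up the exception for a given step through `flowVars.exception`.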

- Insert a database connector from the palette after "Store exception". Rename it to "Insert Error", because it is used to insert the error records into a separate table. Configure it as shown below with the following SQL statement: `INSERT INTO ORDERS_ERRORS(OrderID,RetailerName,SKUs,OrderDate,Message) VALUES (#[payload.OrderID],#[payload.RetailerName],#[payload.SKUs],#[payload.OrderDate],#[flowVars.exception.DataIntegrityCheck.message])`

![Slide 15](https://image.slidesharecdn.com/howtodataintegritychecksinbatchprocessing-161031103139/85/How-to-data-integrity-checks-in-batch-processing-15-320.jpg)
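In XML form, the "Insert Error" component could be sketched as below, assuming the Mule 3 Database connector. The `config-ref` value `Orders_Database_Configuration` is a hypothetical name for a database configuration defined elsewhere in the application. `DataIntegrityCheck` is presumably the name of the batch step whose exception is being read from the stored map.

```xml
<!-- Sketch only: "Orders_Database_Configuration" is a hypothetical config name. -->
<db:insert config-ref="Orders_Database_Configuration" doc:name="Insert Error">
    <!-- Parameterized query: each MEL expression is bound as a query parameter -->
    <db:parameterized-query><![CDATA[
        INSERT INTO ORDERS_ERRORS (OrderID, RetailerName, SKUs, OrderDate, Message)
        VALUES (#[payload.OrderID], #[payload.RetailerName], #[payload.SKUs],
                #[payload.OrderDate], #[flowVars.exception.DataIntegrityCheck.message])
    ]]></db:parameterized-query>
</db:insert>
```

Using a parameterized query rather than string concatenation means the record values are passed as bound parameters, so a malformed field in a failed record is less likely to break the insert itself.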