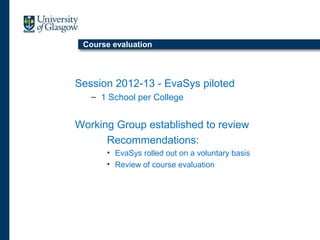

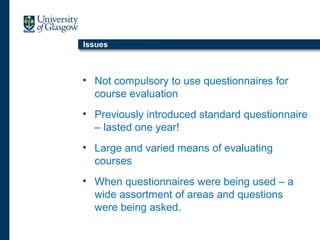

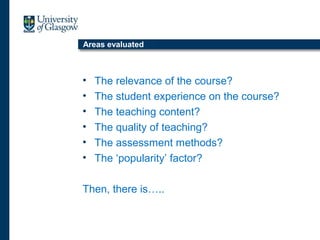

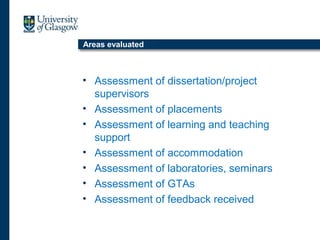

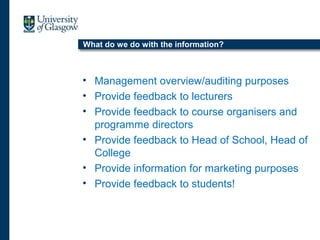

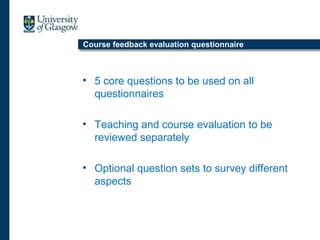

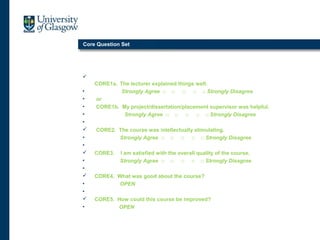

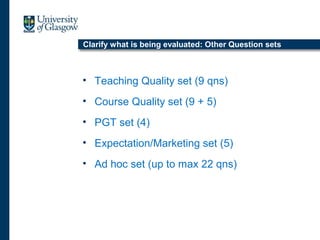

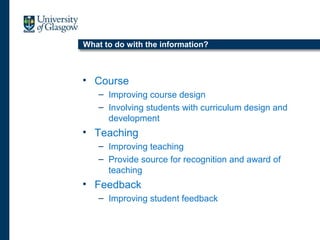

This document discusses course evaluation at a university with over 24,000 students across 19 schools and 9 research institutes. It summarizes the current state of course evaluation, including the pilot of a new online system, and describes a working group set up to review course evaluation practices. The working group identified multiple purposes of course evaluation, ranging from summative reporting to facilitating continuous improvement. It reviewed hundreds of existing evaluation questions and developed a standardized set of 5 core questions, with optional supplementary question sets. The working group aims to provide clearer guidance on the purpose and use of course evaluation in order to improve both teaching and course design across the university.