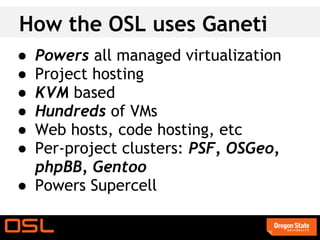

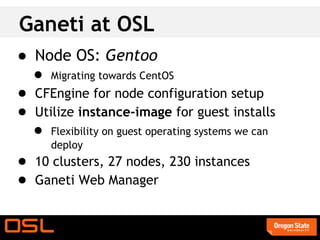

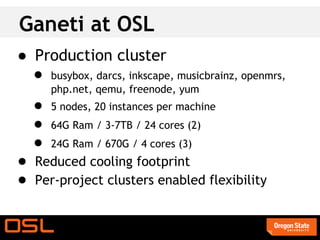

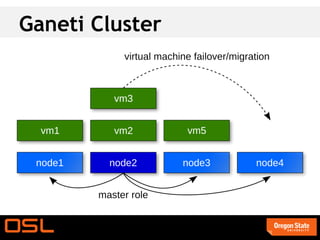

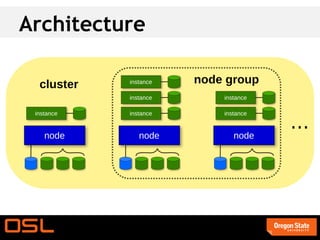

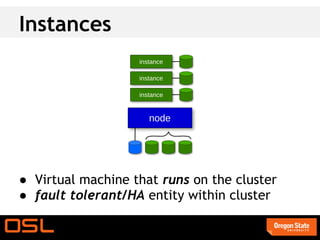

This document is part 1 of a hands-on presentation on virtualization with Ganeti. It introduces Ganeti as software for managing virtual machines across a cluster of physical hosts running Xen, KVM, or LXC. It covers Ganeti's components and architecture, features such as live migration and DRBD-based failure recovery, and how the OSU Open Source Lab uses it to run hundreds of VMs. The presentation then demonstrates initializing a Ganeti cluster, adding nodes and instances, and recovering from failures, and closes with questions.

![Initializing your cluster

The node must first be set up following the Ganeti installation guide.

gnt-cluster init [-s ip] ... --enabled-hypervisors=kvm cluster](https://image.slidesharecdn.com/handsonvirtualizationwithganetipart1-linuxcon-120902132947-phpapp02/85/Hands-on-Virtualization-with-Ganeti-part-1-LinuxCon-2012-29-320.jpg)
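
The slide shows the shape of the `init` call. Below is a minimal sketch of scripting it for a KVM-only cluster. The `run` helper and its `DRY_RUN` switch are hypothetical additions, not part of Ganeti: with `DRY_RUN=1` they print each command instead of executing it. The `-s` option sets the node's secondary IP, which Ganeti uses for replication traffic such as DRBD. The cluster name and IP in the usage line are placeholders.

```shell
#!/bin/sh
# Hypothetical helper (not part of Ganeti): print the command when DRY_RUN=1,
# otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# init_cluster CLUSTER_NAME [SECONDARY_IP]
# Initialise a cluster with KVM as the only enabled hypervisor.
# The optional secondary IP is passed with -s, as in "[-s ip]" on the slide.
init_cluster() {
  if [ $# -ge 2 ]; then
    run gnt-cluster init -s "$2" --enabled-hypervisors=kvm "$1"
  else
    run gnt-cluster init --enabled-hypervisors=kvm "$1"
  fi
}
```

For example, `DRY_RUN=1 init_cluster cluster.example.org 192.168.1.1` prints the command without touching the system. Without `DRY_RUN`, run it on the node that should become the cluster master.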

![gnt-cluster

Cluster wide operations:

gnt-cluster info

gnt-cluster modify [-B/H/N ...]

gnt-cluster verify

gnt-cluster master-failover

gnt-cluster command/copyfile ...](https://image.slidesharecdn.com/handsonvirtualizationwithganetipart1-linuxcon-120902132947-phpapp02/85/Hands-on-Virtualization-with-Ganeti-part-1-LinuxCon-2012-30-320.jpg)
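
These cluster-wide commands are easy to wrap for routine checks. The sketch below only combines `info`, `verify`, `command`, and `copyfile` from the slide, and assumes it runs on the master node. `cluster_check`, `on_all_nodes`, `push_file`, and the `run`/`DRY_RUN` helper are hypothetical names, not Ganeti commands.

```shell
#!/bin/sh
# Hypothetical helper (not part of Ganeti): print the command when DRY_RUN=1,
# otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Show the cluster configuration, then check cluster consistency.
# "verify" only runs if "info" succeeded.
cluster_check() {
  run gnt-cluster info && run gnt-cluster verify
}

# on_all_nodes CMD...: run a shell command on the cluster nodes.
# The arguments are joined and passed to "gnt-cluster command" as one string.
on_all_nodes() {
  run gnt-cluster command "$*"
}

# push_file PATH: copy a file from the master to the same path on the nodes.
push_file() {
  run gnt-cluster copyfile "$1"
}
```

For instance, `DRY_RUN=1 on_all_nodes uptime` prints `gnt-cluster command uptime`, and `push_file /etc/hosts` would distribute the master's hosts file.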

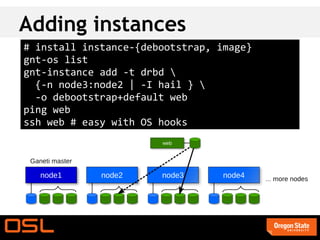

![Adding nodes

gnt-node add [-s ip] node2

gnt-node add [-s ip] node3

gnt-node add [-s ip] node4](https://image.slidesharecdn.com/handsonvirtualizationwithganetipart1-linuxcon-120902132947-phpapp02/85/Hands-on-Virtualization-with-Ganeti-part-1-LinuxCon-2012-31-320.jpg)
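
Typing one `gnt-node add` per machine is fine for three nodes; for more, a loop keeps each node name next to its secondary IP. This is a minimal sketch assuming it runs on the master and that secondary addresses are IPv4, written as `NODE:IP`. That `NODE:IP` format is a hypothetical convention for this script, not a Ganeti format, and `add_nodes` and the `run`/`DRY_RUN` helper are illustrative names. A spec without `:` adds the node without `-s`, matching the optional `[-s ip]` on the slide.

```shell
#!/bin/sh
# Hypothetical helper (not part of Ganeti): print the command when DRY_RUN=1,
# otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# add_nodes SPEC...: each SPEC is "NODE:SECONDARY_IP" or just "NODE".
# Stops at the first failed "gnt-node add".
add_nodes() {
  for spec in "$@"; do
    case $spec in
      *:*) run gnt-node add -s "${spec#*:}" "${spec%%:*}" || return 1 ;;
      *)   run gnt-node add "$spec" || return 1 ;;
    esac
  done
}
```

Stopping at the first failure, rather than continuing, avoids a partially joined set of nodes that then has to be untangled by hand.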

![gnt-node

Per node operations:

gnt-node remove node4

gnt-node modify [ --master-candidate yes|no ] [ --drained yes|no ] [ --offline yes|no ] node2

gnt-node evacuate/failover/migrate

gnt-node powercycle](https://image.slidesharecdn.com/handsonvirtualizationwithganetipart1-linuxcon-120902132947-phpapp02/85/Hands-on-Virtualization-with-Ganeti-part-1-LinuxCon-2012-33-320.jpg)
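
A common use of these per-node commands is taking a node out of service for maintenance. The sketch below assumes DRBD-backed instances, for which `gnt-node migrate` moves the instances whose primary is the given node over to their secondary nodes. Marking the node drained first keeps new instances from being placed on it. `drain_node`, `return_node`, and the `run`/`DRY_RUN` helper are hypothetical names. Depending on the Ganeti version, `gnt-node migrate` may ask for confirmation, and instances for which the node is only the secondary are not moved by this sketch.

```shell
#!/bin/sh
# Hypothetical helper (not part of Ganeti): print the command when DRY_RUN=1,
# otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# drain_node NODE: stop new instances landing on NODE, then migrate the
# instances that have NODE as their primary to their secondary nodes.
drain_node() {
  run gnt-node modify --drained yes "$1" &&
    run gnt-node migrate "$1"
}

# return_node NODE: clear the drained flag once maintenance is done.
return_node() {
  run gnt-node modify --drained no "$1"
}
```

For example, `DRY_RUN=1 drain_node node2` prints the two commands that would be run against node2.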