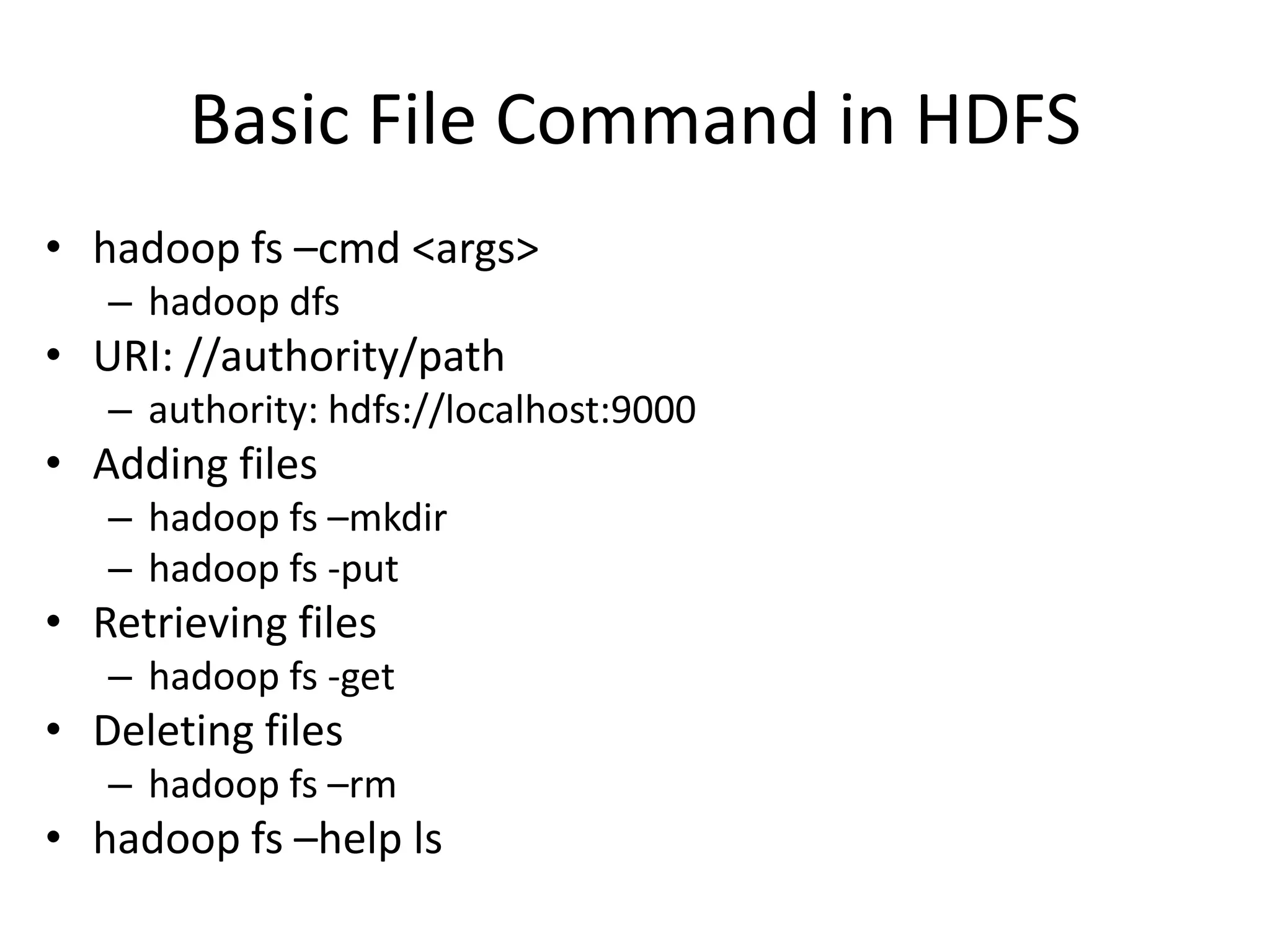

The document provides instructions for running Hadoop in standalone, pseudo-distributed, and fully distributed modes. It discusses downloading and installing Hadoop, configuring environment variables and files for pseudo-distributed mode, starting Hadoop daemons, and running a sample word count MapReduce job locally to test the installation.

![import java.io.IOException;

try {

import org.apache.hadoop.conf.Configuration; FileStatus[] inputFiles = local.listStatus(inputDir);

import org.apache.hadoop.fs.FSDataInputStream; FSDataOutputStream out = hdfs.create(hdfsFile);

import org.apache.hadoop.fs.FSDataOutputStream; for(int i = 0; i < inputFiles.length; i++) {

import org.apache.hadoop.fs.FileStatus; if(!inputFiles[i].isDir()) {

import org.apache.hadoop.fs.FileSystem; System.out.println("tnow processing <" +

import org.apache.hadoop.fs.Path;

inputFiles[i].getPath().getName() + ">");

FSDataInputStream in =

public class PutMerge { local.open(inputFiles[i].getPath());

public static void main(String[] args) throws IOException {

if(args.length != 2) { byte buffer[] = new byte[256];

System.out.println("Usage PutMerge <dir> <outfile>"); int bytesRead = 0;

System.exit(1); while ((bytesRead = in.read(buffer)) > 0) {

} out.write(buffer, 0, bytesRead);

}

Configuration conf = new Configuration(); filesProcessed++;

FileSystem hdfs = FileSystem.get(conf); in.close();

FileSystem local = FileSystem.getLocal(conf); }

int filesProcessed = 0; }

out.close();

Path inputDir = new Path(args[0]); System.out.println("nSuccessfully merged " +

Path hdfsFile = new Path(args[1]); filesProcessed + " local files and written to <" +

hdfsFile.getName() + "> in HDFS.");

} catch (IOException ioe) {

ioe.printStackTrace();

}

}

}](https://image.slidesharecdn.com/hadoop-130228001905-phpapp01/75/Hadoop-12-2048.jpg)

![import java.io.IOException;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IntWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapred.FileInputFormat;

import org.apache.hadoop.mapred.FileOutputFormat;

import org.apache.hadoop.mapred.JobClient;

import org.apache.hadoop.mapred.JobConf;

public class MaxTemperature {

public static void main(String[] args) throws IOException {

if (args.length != 2) {

System.err.println("Usage: MaxTemperature <input path> <output path>");

System.exit(-1); }

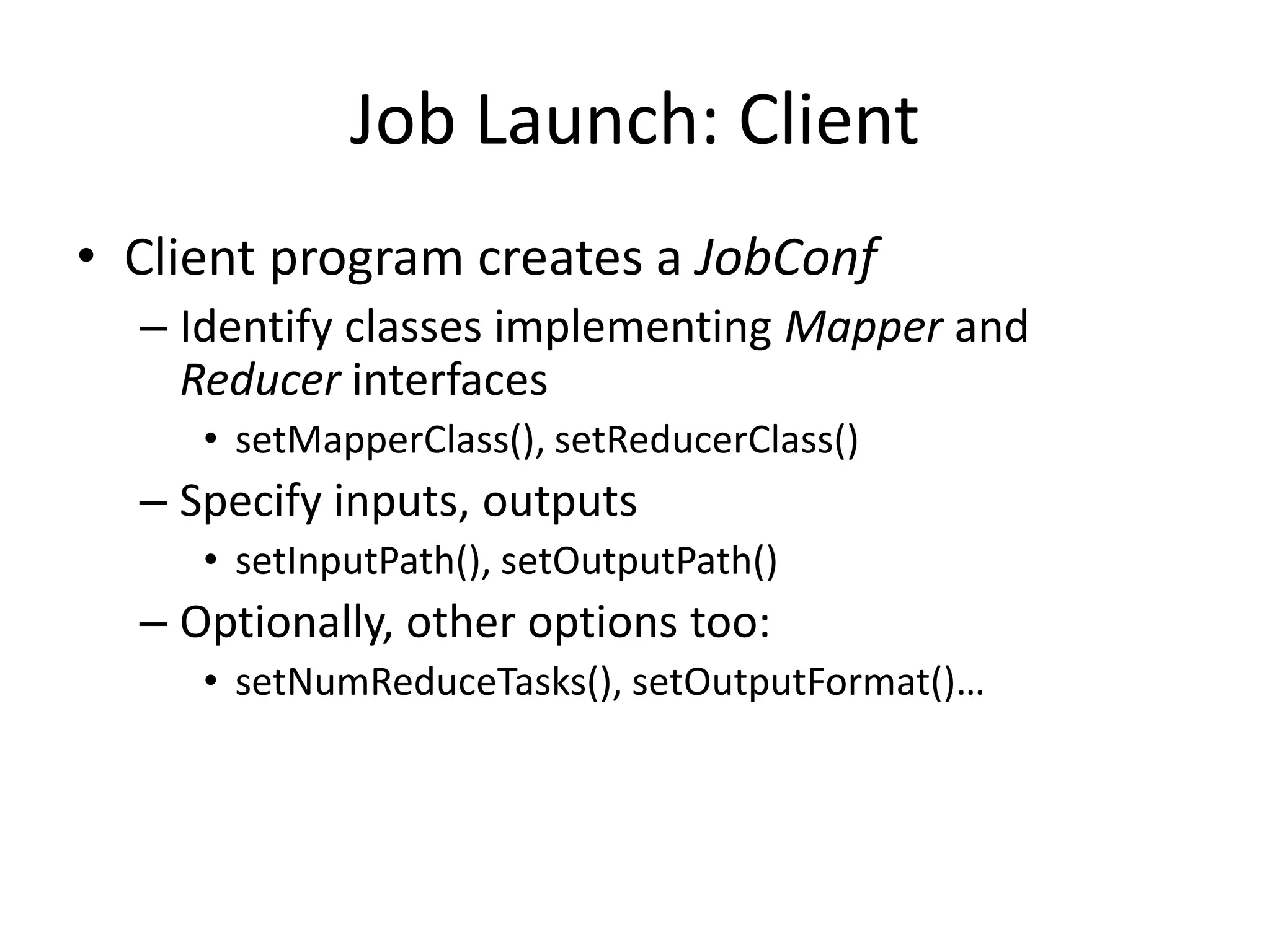

JobConf conf = new JobConf(MaxTemperature.class);

conf.setJobName("Max temperature");

FileInputFormat.addInputPath(conf, new Path(args[0]));

FileOutputFormat.setOutputPath(conf, new Path(args[1]));

conf.setMapperClass(MaxTemperatureMapper.class);

conf.setReducerClass(MaxTemperatureReducer.class);

conf.setOutputKeyClass(Text.class);

conf.setOutputValueClass(IntWritable.class);

JobClient.runJob(conf);](https://image.slidesharecdn.com/hadoop-130228001905-phpapp01/75/Hadoop-13-2048.jpg)

![public class MaxTemperature {

public static void main(String[] args) throws IOException {

if (args.length != 2) {

System.err.println("Usage: MaxTemperature <input path> <output path>");

System.exit(-1); }

JobConf conf = new JobConf(MaxTemperature.class);

conf.setJobName("Max temperature");

FileInputFormat.addInputPath(conf, new Path(args[0]));

FileOutputFormat.setOutputPath(conf, new Path(args[1]));

conf.setMapperClass(MaxTemperatureMapper.class);

conf.setReducerClass(MaxTemperatureReducer.class);

conf.setOutputKeyClass(Text.class);

conf.setOutputValueClass(IntWritable.class);

JobClient.runJob(conf);

}}](https://image.slidesharecdn.com/hadoop-130228001905-phpapp01/75/Hadoop-24-2048.jpg)

![public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if (otherArgs.length != 2) {

System.err.println("Usage: wordcount <in> <out>");

System.exit(2);

}

Job job = new Job(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}](https://image.slidesharecdn.com/hadoop-130228001905-phpapp01/75/Hadoop-25-2048.jpg)

![public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if (otherArgs.length != 2) {

System.err.println("Usage: wordcount <in> <out>");

System.exit(2);

}

Job job = new Job(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}](https://image.slidesharecdn.com/hadoop-130228001905-phpapp01/75/Hadoop-33-2048.jpg)

![public class ObjectPositionInputFormat extends

FileInputFormat<Text, Point3D> {

public RecordReader<Text, Point3D> getRecordReader(

InputSplit input, JobConf job, Reporter reporter)

throws IOException {

reporter.setStatus(input.toString());

return new ObjPosRecordReader(job, (FileSplit)input);

}

InputSplit[] getSplits(JobConf job, int numSplits) throuw IOException;

}](https://image.slidesharecdn.com/hadoop-130228001905-phpapp01/75/Hadoop-39-2048.jpg)

![public static void main(String[] args) throws Exception {

Configuration conf = new Configuration();

String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();

if (otherArgs.length != 2) {

System.err.println("Usage: wordcount <in> <out>");

System.exit(2);

}

Job job = new Job(conf, "word count");

job.setJarByClass(WordCount.class);

job.setMapperClass(TokenizerMapper.class);

job.setCombinerClass(IntSumReducer.class);

job.setReducerClass(IntSumReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(IntWritable.class);

FileInputFormat.addInputPath(job, new Path(otherArgs[0]));

FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}](https://image.slidesharecdn.com/hadoop-130228001905-phpapp01/75/Hadoop-51-2048.jpg)