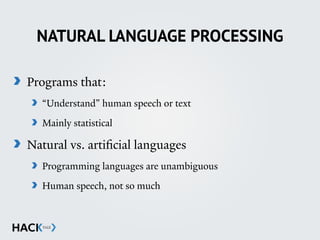

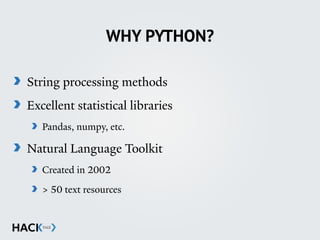

The document is an introduction to a Python course on Natural Language Processing (NLP), covering concepts like text manipulation, tokenization, and model evaluation techniques. It includes resources for further study and discusses applications such as text classification and sentiment analysis. Key programming libraries and corpus information are also highlighted, alongside practical examples of manipulating text corpora.

![STRING MANIPULATION

string'='“This'is'a'great'sentence.”'

string'='string.split()'

string[:4]'

=>'[“This”,'“is”,'“a”,'“great”]'

string[;1:]'

=>'“sentence.”'

string[::;1]'

=>'[“sentence.”,'“great”,'“a”,'“is”,'“This”]'](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-21-320.jpg)

![FILE I/O

f'='open(‘sample.txt’,'‘r’)'

for'line'in'f:

' line'='line.split()'

' for'word'in'line:

' ' if'word'[;2:]'=='‘ly’:'

' ' ' print'word](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-22-320.jpg)

![BUILT-IN CORPORA

Sentences:

[“I”, “hate”, “cats”, “.”]

Paragraphs:

[[“I”, “hate”, “cats”, “.”], [“Dogs”, “are”, “worse”, “.”]]

Entire file:

List of paragraphs, which are lists of sentences](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-29-320.jpg)

![NAVIGATING CORPORA

from'nltk.corpus'import'brown'

brown.categories()'

len(brown.categories())'#'num'categories'

brown.words(categories=[‘news’,'‘editorial’])'

brown.sents()'

brown.paras()'

brown.fileids()'

brown.words(fileids=[‘cr01’])'](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-32-320.jpg)

![TEXT METHODS

from'nltk.corpus'import'some_corpus'

from'nltk.text'import'Text'

text'='Text(some_corpus.words())'

text.concordance(“word”)'

text.similar(“word”)'

text.common_contexts([“word1”,'“word2”])'

text.count(“word”)'

text.collocations()](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-44-320.jpg)

![FREQUENCY DISTRIBUTIONS

from'nltk'import'FreqDist'

from'nltk.text'import'Text'

import'matplotlib'

text'='Text(some_corpus.words())'

fdist'='FreqDist(text)'

=>'dict'of'{‘token1’:count1,'‘token2’:count2,…}'

fdist[‘the’]''

=>'returns'the'#'of'times'‘the’'appears'in'text'](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-50-320.jpg)

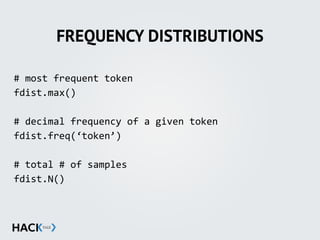

![FREQUENCY DISTRIBUTIONS

#'top'50'words'

fdst.most_common(50)'

#'frequency'plot'

fdist.plot(25,'cumulative=[True'or'False])'

#'list'of'all'1;count'tokens'

fdist.hapaxes()](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-51-320.jpg)

![STOPWORDS

from'nltk.corpus'import'stopwords'

sw'='stopwords.words(‘english’)'

old_brown'='brown.words()'

new'='[w'for'w'in'old_brown'if'w.lower()'not'in'sw]'

#'better,'but'we’re'still'counting'punctuation'

#'sample'code';'brown.py](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-55-320.jpg)

![STOPWORDS & PUNCTUATION

from'nltk.corpus'import'stopwords'

sw'='stopwords.words(‘english’)'

old_brown'='brown.words()'

new'='[w'for'w'in'old_brown'if'w.lower()'not'in'sw'

' ' ' ' ' ' ' ' ' ' ' and$w.isalnum()]'](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-57-320.jpg)

![INAUGURAL CORPUS

from

nltk.corpus

import

inaugural

import

matplotlib

from

nltk

import

Text

inaug

=

Text(inaugural.words())

inaug.collocations()

inaug.concordance(‘freedom’)

inaug.dispersion_plot([‘freedom’,

‘government’,

‘liberty’,

‘hope’])](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-65-320.jpg)

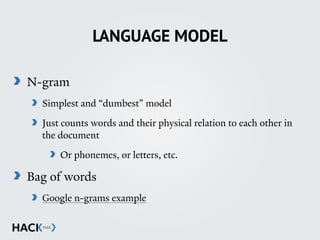

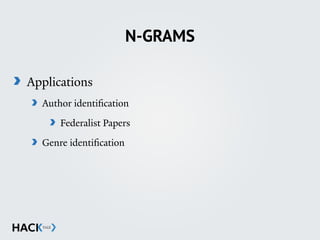

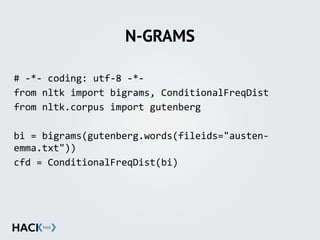

![N-GRAMS

Unigram (simple count):

“This movie is great!”

{‘this’: 1, ‘movie’: 1, …}

Bigram:

“# This movie is great!”

[(‘#’, ‘this’), (‘this’, ‘movie’), (‘movie’, ‘is’), …]](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-80-320.jpg)

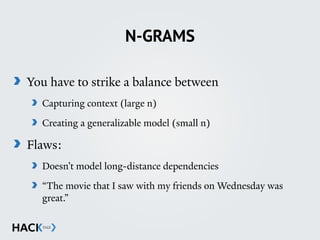

![N-GRAMS

Trigram:

“# # This movie is great”

[(‘#’, ‘#’, ‘this’), (‘#’, ‘this’, ‘movie’), …]

4-grams, 5-grams, etc, etc.

Trigrams are usually good for English](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-81-320.jpg)

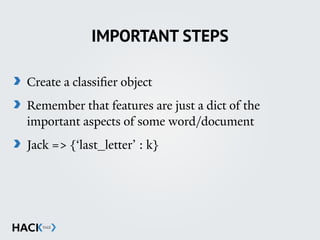

![IMPORTANT STEPS

Define a features function

{‘feature’ : count/bool, ‘feature2’ : count/bool}

Label words in your corpus with their categories

[(category, word), (category, word), (category, word)]

Create a features set

[(list_of_features, category), …]](https://image.slidesharecdn.com/hackyale-allslides-180822221225/85/HackYale-Natural-Language-Processing-All-Slides-91-320.jpg)