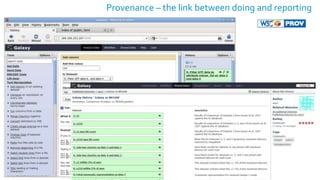

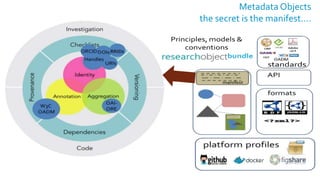

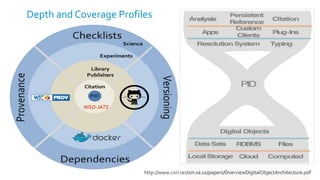

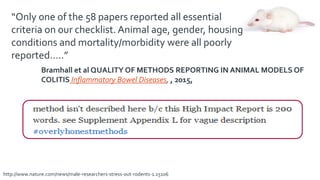

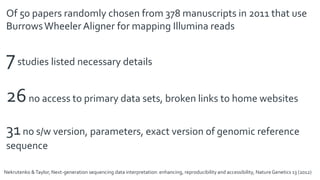

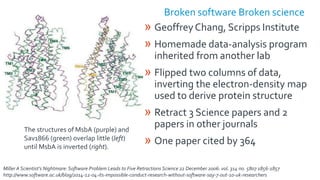

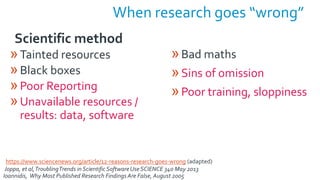

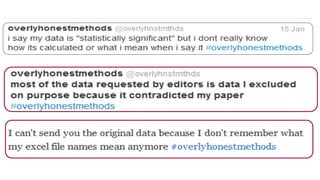

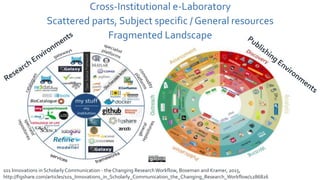

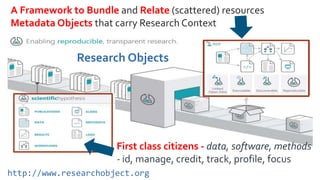

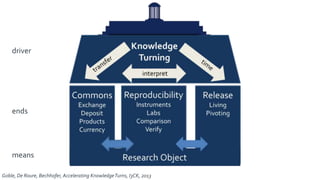

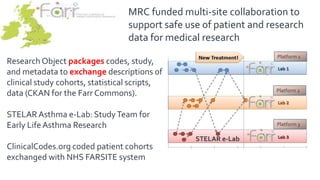

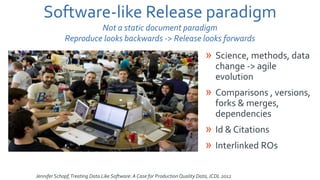

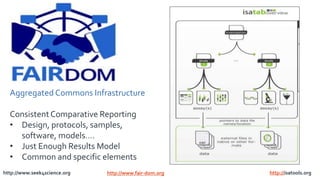

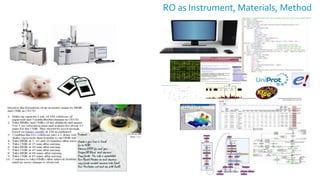

The document discusses the challenges of reproducibility in scientific research, emphasizing the importance of transparent reporting of data, methods, and software used in studies. It highlights the shortcomings in the research environment that contribute to flawed results and the need for improved practices and tools to enhance reproducibility. The text also presents a vision for research objects that bundle data, software, and methodologies to create a comprehensive framework for facilitating reproducible research.
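
The idea of a research object that bundles data, software, and methods can be illustrated with a small manifest. The following is a minimal sketch, not the format proposed in the slides: the function names, the role labels (`data`, `software`, `method`), and the `aggregates` key are all assumptions made for illustration.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file's bytes."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def build_manifest(root: Path, roles: dict[str, str]) -> dict:
    """List each bundled file with its relative path, role, and checksum.

    `roles` maps a relative path to a hypothetical role label;
    the labels are illustrative, not part of any standard.
    """
    entries = []
    for rel_path, role in sorted(roles.items()):
        entries.append({
            "path": rel_path,
            "role": role,
            "sha256": sha256_of(root / rel_path),
        })
    return {"aggregates": entries}


def demo() -> dict:
    """Bundle three throwaway files (data, software, method) into a manifest."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "measurements.csv").write_bytes(b"sample,value\na,1\n")
        (root / "analysis.py").write_bytes(b"print('analysis')\n")
        (root / "protocol.txt").write_bytes(b"Step 1: measure.\n")
        return build_manifest(root, {
            "measurements.csv": "data",
            "analysis.py": "software",
            "protocol.txt": "method",
        })


if __name__ == "__main__":
    print(json.dumps(demo(), indent=2))
```

Recording a checksum per file lets a later reader confirm that the data and code they received are the ones the manifest describes.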

![Knowledge Turning, Flow

Barriers to Cure

» Access to scientific resources

» Coordination and Collaboration

» Flow of Information

http://fora.tv/2010/04/23/Sage_Commons_Josh_Sommer_Chordoma_Foundation

[Josh Sommer]](https://image.slidesharecdn.com/goble-jisc-digifest15-150312125603-conversion-gate01/85/RARE-and-FAIR-Science-Reproducibility-and-Research-Objects-2-320.jpg)

![[Pettifer, Attwood]

http://getutopia.com](https://image.slidesharecdn.com/goble-jisc-digifest15-150312125603-conversion-gate01/85/RARE-and-FAIR-Science-Reproducibility-and-Research-Objects-4-320.jpg)

![Virtual Witnessing*

Scientific publications:

» announce a result

» convince readers the result is correct

“papers in experimental [and computational science] should describe the results and provide a clear enough protocol [algorithm] to allow successful repetition and extension”

Jill Mesirov, Broad Institute, 2010**

**Accessible Reproducible Research, Science 22 January 2010, Vol. 327 no. 5964 pp. 415-416, DOI: 10.1126/science.1179653

*Leviathan and the Air-Pump: Hobbes, Boyle, and the Experimental Life (1985) Shapin and Schaffer.](https://image.slidesharecdn.com/goble-jisc-digifest15-150312125603-conversion-gate01/85/RARE-and-FAIR-Science-Reproducibility-and-Research-Objects-5-320.jpg)

![“An article about computational science in a scientific publication is not the scholarship itself, it is merely advertising of the scholarship. The actual scholarship is the complete software development environment, [the complete data] and the complete set of instructions which generated the figures.”

David Donoho, “Wavelab and Reproducible Research,” 1995

datasets

data collections

standard operating procedures

software

algorithms

configurations

tools and apps

codes

workflows, scripts

code libraries

services

system software

infrastructure

compilers, hardware

Morin et al., Shining Light into Black Boxes, Science 13 April 2012: 336(6078) 159-160

Ince et al., The case for open computer programs, Nature 482, 2012](https://image.slidesharecdn.com/goble-jisc-digifest15-150312125603-conversion-gate01/85/RARE-and-FAIR-Science-Reproducibility-and-Research-Objects-7-320.jpg)

![Focus on the figure: F1000Research Living Figures, versioned articles, in-article data manipulation

R Lawrence Force2015, Vision Award Runner Up http://f1000.com/posters/browse/summary/1097482

Simply data + code

Can change the definition of a figure, and ultimately the journal article

Colomb J and Brembs B. Sub-strains of Drosophila Canton-S differ markedly in their locomotor behavior [v1; ref status: indexed, http://f1000r.es/3is] F1000Research 2014, 3:176

Other labs can replicate the study, or contribute their data to a meta-analysis or disease model - figure automatically updates.

Data updates time-stamped.

New conclusions added via versions.](https://image.slidesharecdn.com/goble-jisc-digifest15-150312125603-conversion-gate01/85/RARE-and-FAIR-Science-Reproducibility-and-Research-Objects-30-320.jpg)

![https://doi.org/10.15490/seek.1.investigation.56

[Snoep, 2015]](https://image.slidesharecdn.com/goble-jisc-digifest15-150312125603-conversion-gate01/85/RARE-and-FAIR-Science-Reproducibility-and-Research-Objects-32-320.jpg)

![recompute

replicate

rerun

repeat

re-examine

repurpose

recreate

reuse

restore

reconstruct review

regenerate

revise

recycle

redo

What IS reproducibility?

Re: “do again”, “return to original state”

regenerate figure

“show A is true by doing B”

verify but not falsify

[Yong, Nature 485, 2012]

robustness tolerance

verification compliance

validation assurance](https://image.slidesharecdn.com/goble-jisc-digifest15-150312125603-conversion-gate01/85/RARE-and-FAIR-Science-Reproducibility-and-Research-Objects-40-320.jpg)

![[Adapted Freire, 2013]

transparency

dependencies

steps

provenance

portability

robustness

preservation

access

available

description

intelligible

standards

common APIs

licensing

standards

common

metadata

change management

versioning

packaging

Machine

actionable

Machine

actionable](https://image.slidesharecdn.com/goble-jisc-digifest15-150312125603-conversion-gate01/85/RARE-and-FAIR-Science-Reproducibility-and-Research-Objects-46-320.jpg)
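
Several of the terms on the slide above (dependencies, steps, provenance, versioning, machine actionable) can be made concrete with a small machine-readable record. This is a sketch under stated assumptions: the function name `provenance_record` and its field names are hypothetical and do not come from the slides or from any provenance standard.

```python
import json
import platform
from datetime import datetime, timezone


def provenance_record(steps: list[str], dependencies: dict[str, str]) -> dict:
    """Build a JSON-serializable record of how a result was produced.

    `steps` is an ordered list of human-readable step descriptions;
    `dependencies` maps a package name to the version that was used.
    All field names here are illustrative assumptions.
    """
    return {
        "created": datetime.now(timezone.utc).isoformat(),
        "python": platform.python_version(),
        "platform": platform.platform(),
        "dependencies": dict(sorted(dependencies.items())),
        "steps": [
            {"order": i, "description": text}
            for i, text in enumerate(steps, start=1)
        ],
    }


if __name__ == "__main__":
    record = provenance_record(
        steps=["load measurements", "fit model", "regenerate figure"],
        dependencies={"example-package": "1.0.0"},  # hypothetical dependency
    )
    print(json.dumps(record, indent=2))
```

Storing such a record next to the results gives a later reader the environment and the ordered steps in one place, in a form that a program can check as well as a person.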