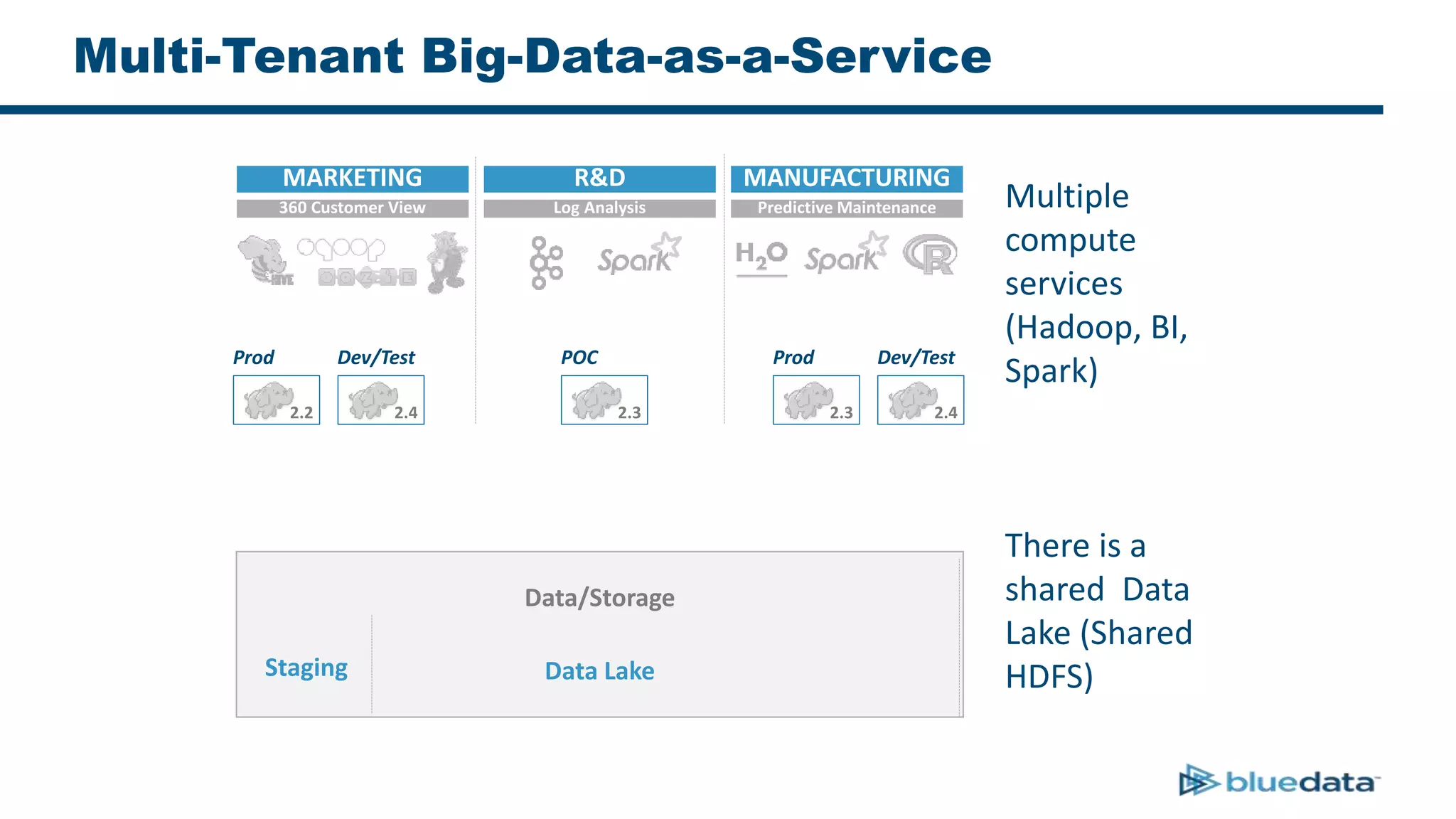

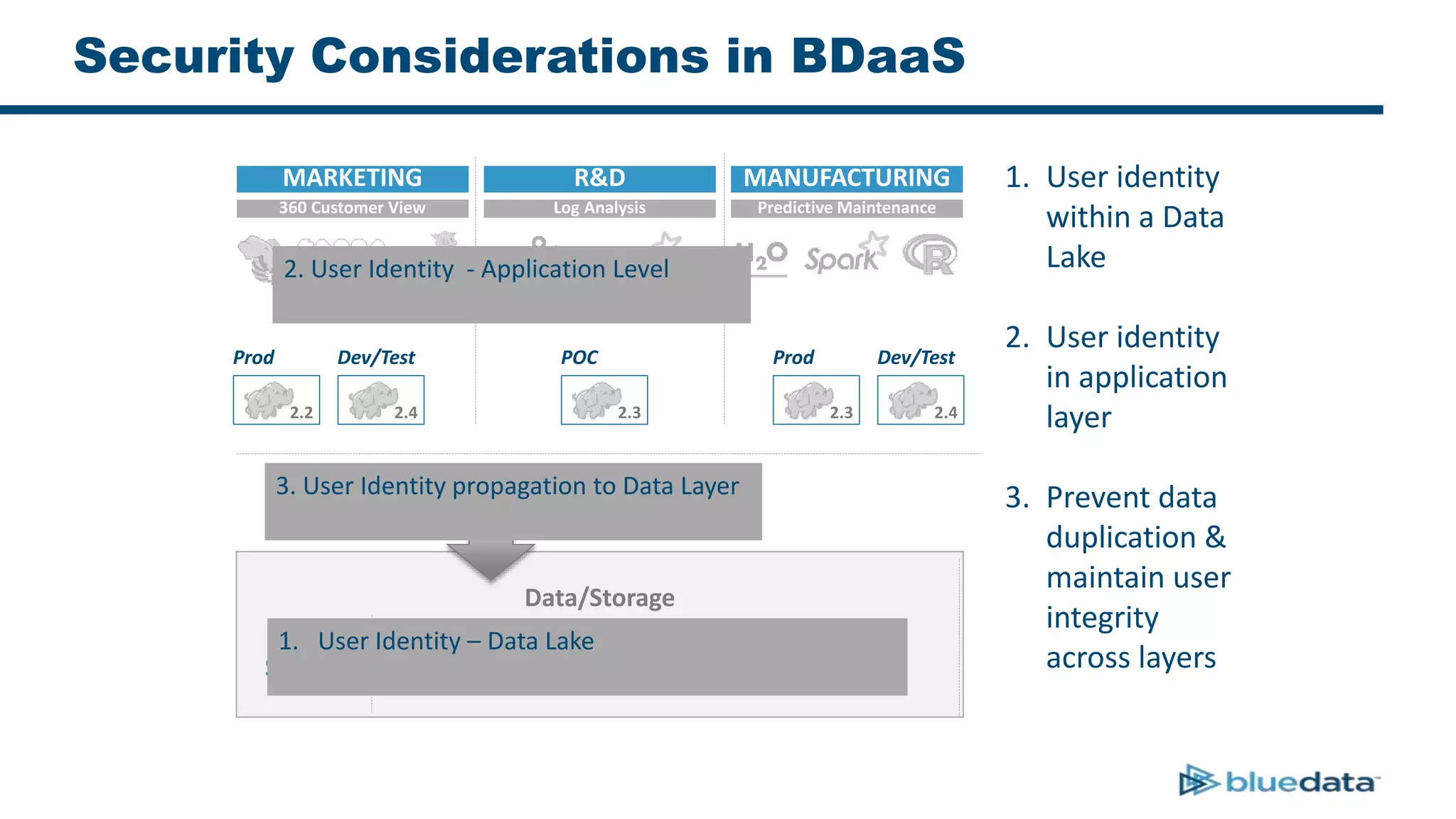

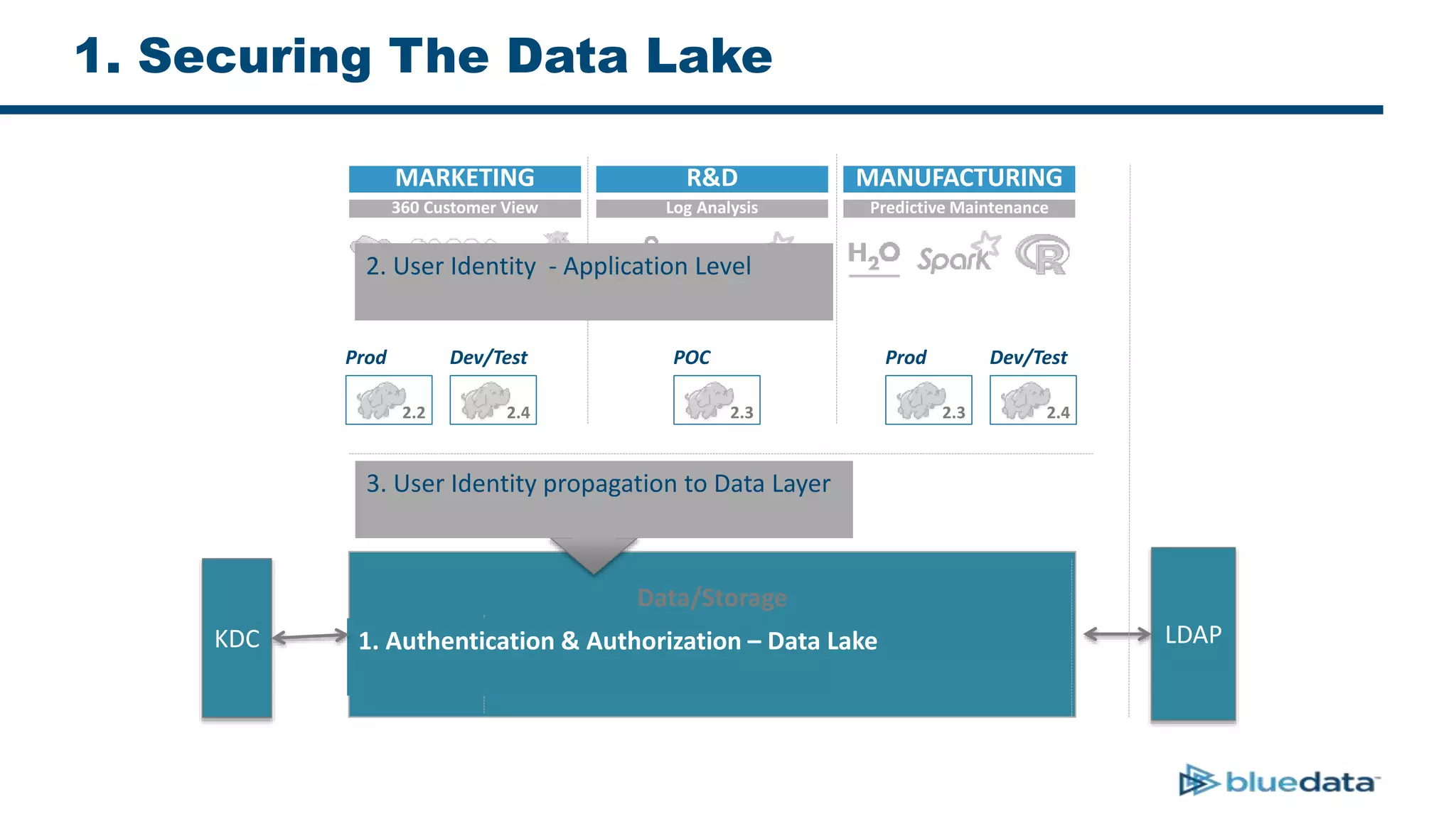

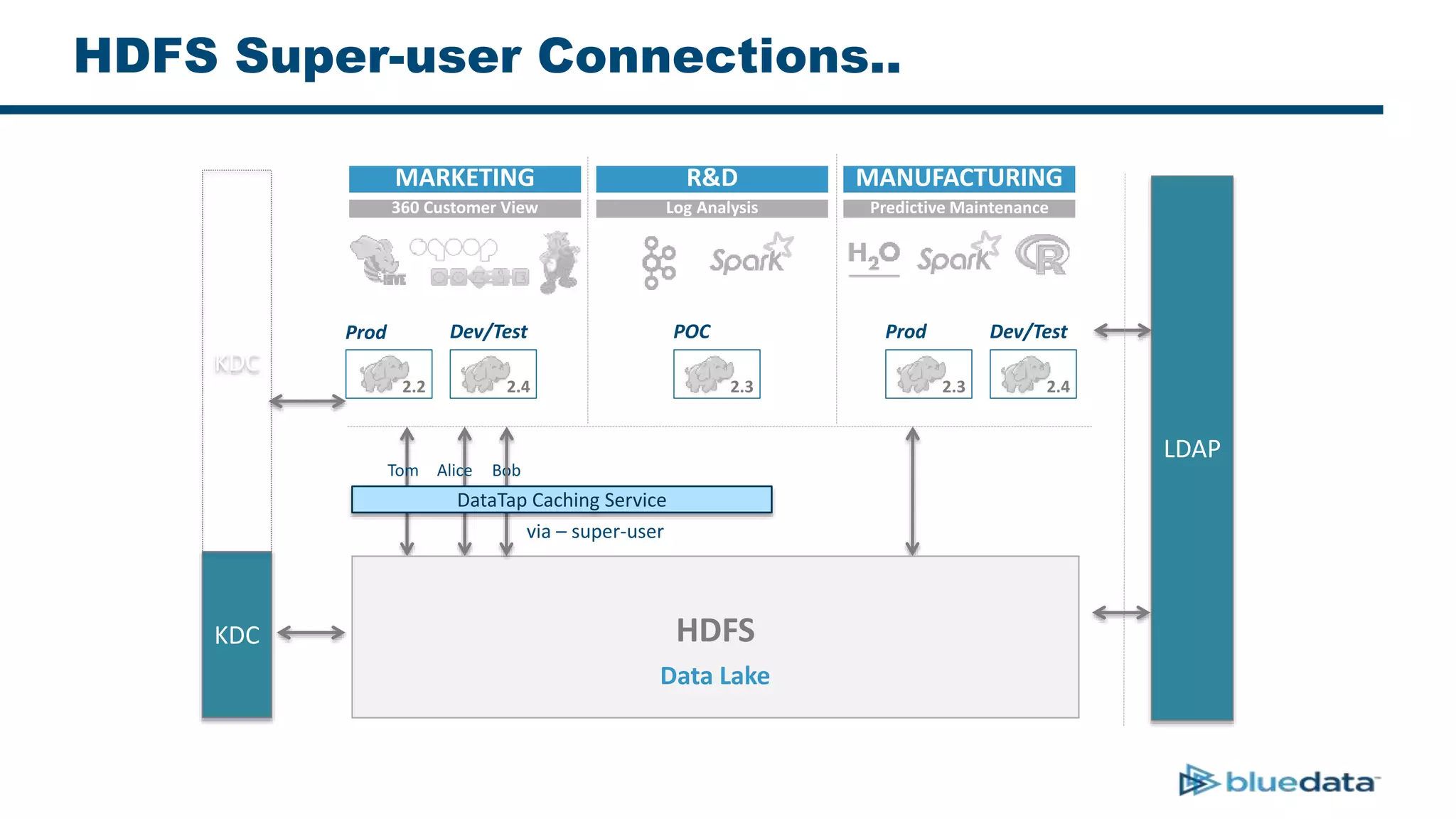

1. The document discusses security considerations for deploying big data as a service (BDaaS) across multiple tenants and applications. It focuses on maintaining a single user identity across those tenants and applications, which avoids duplicating data and lets access policies be enforced consistently.

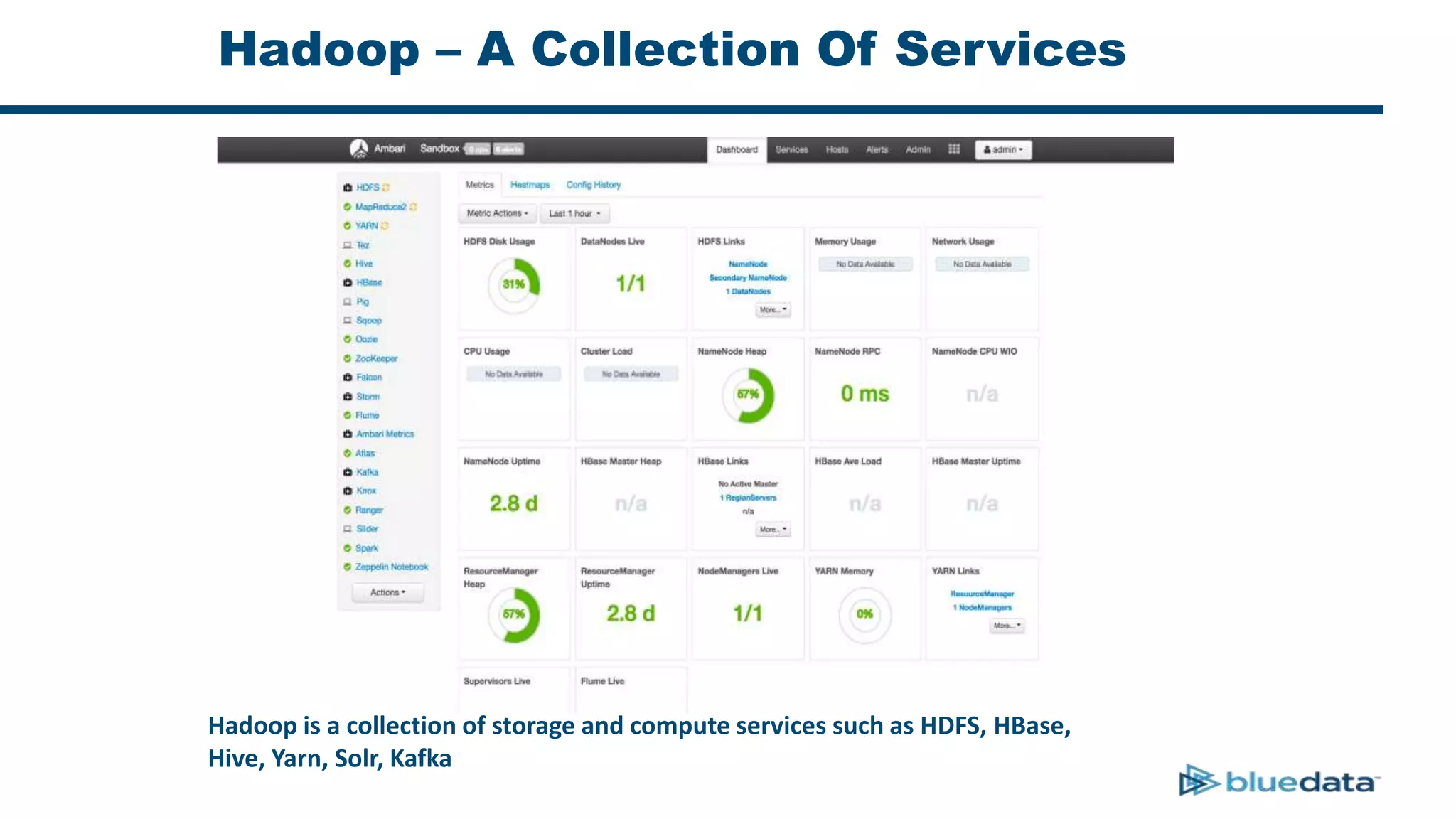

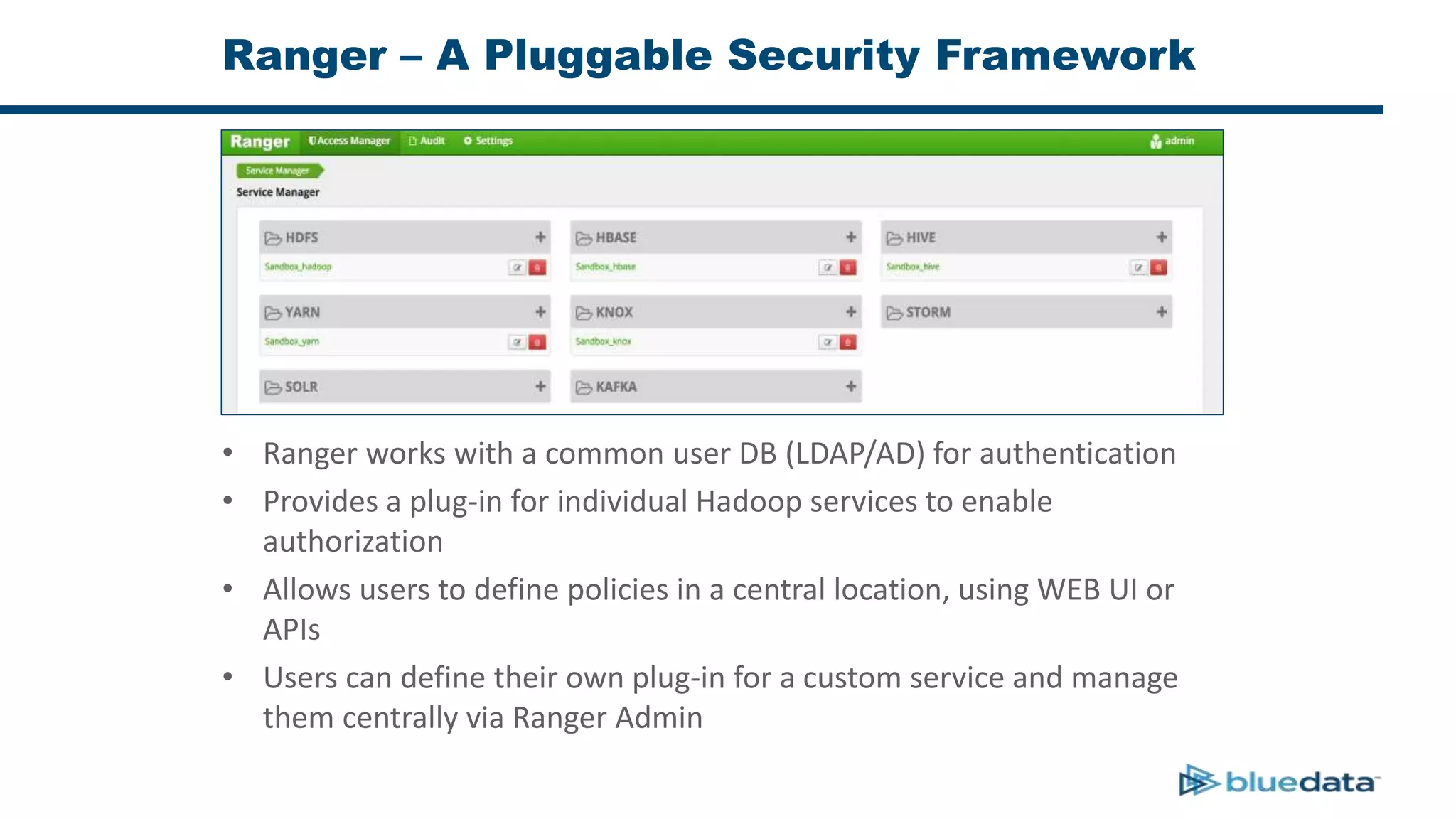

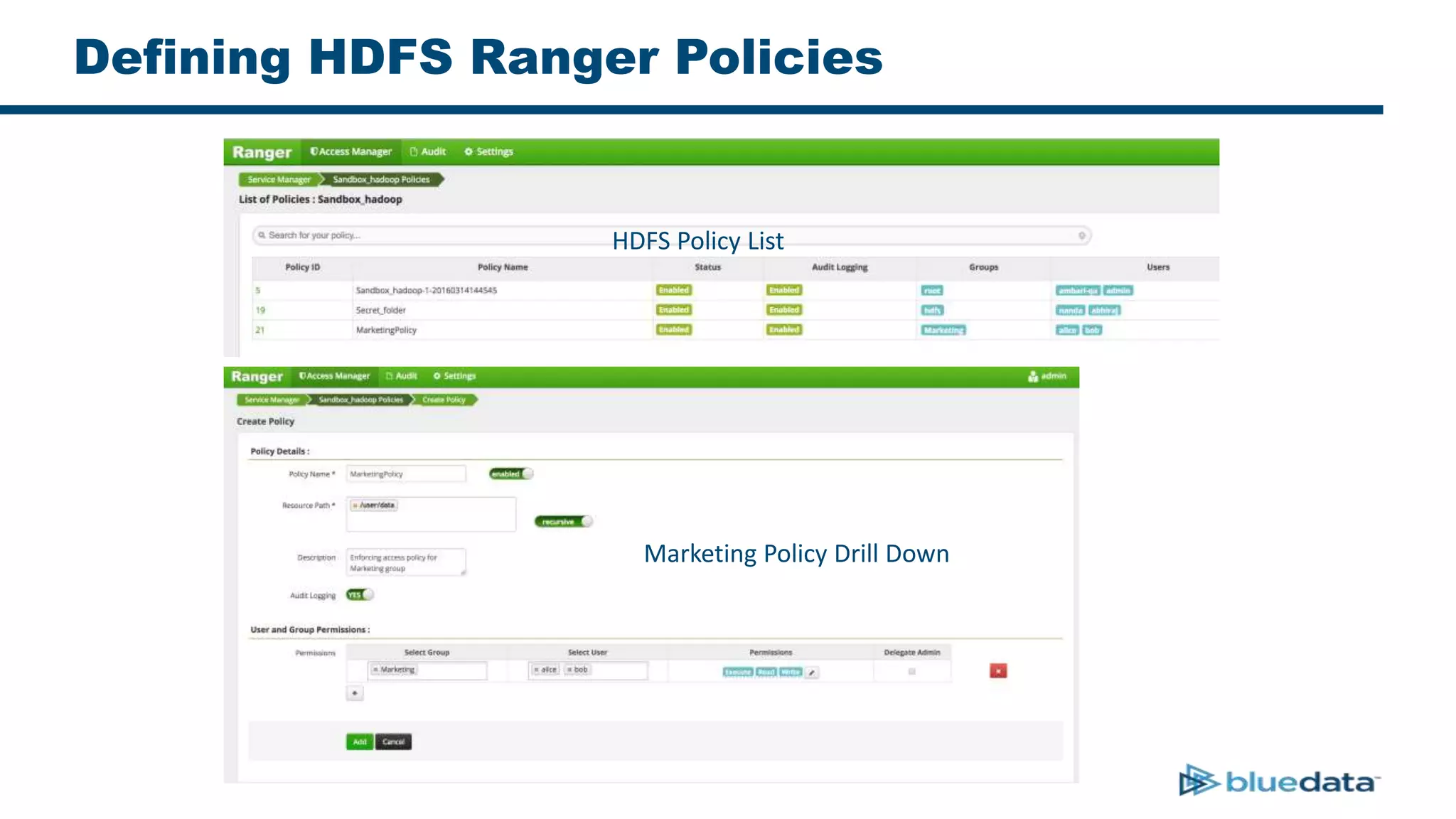

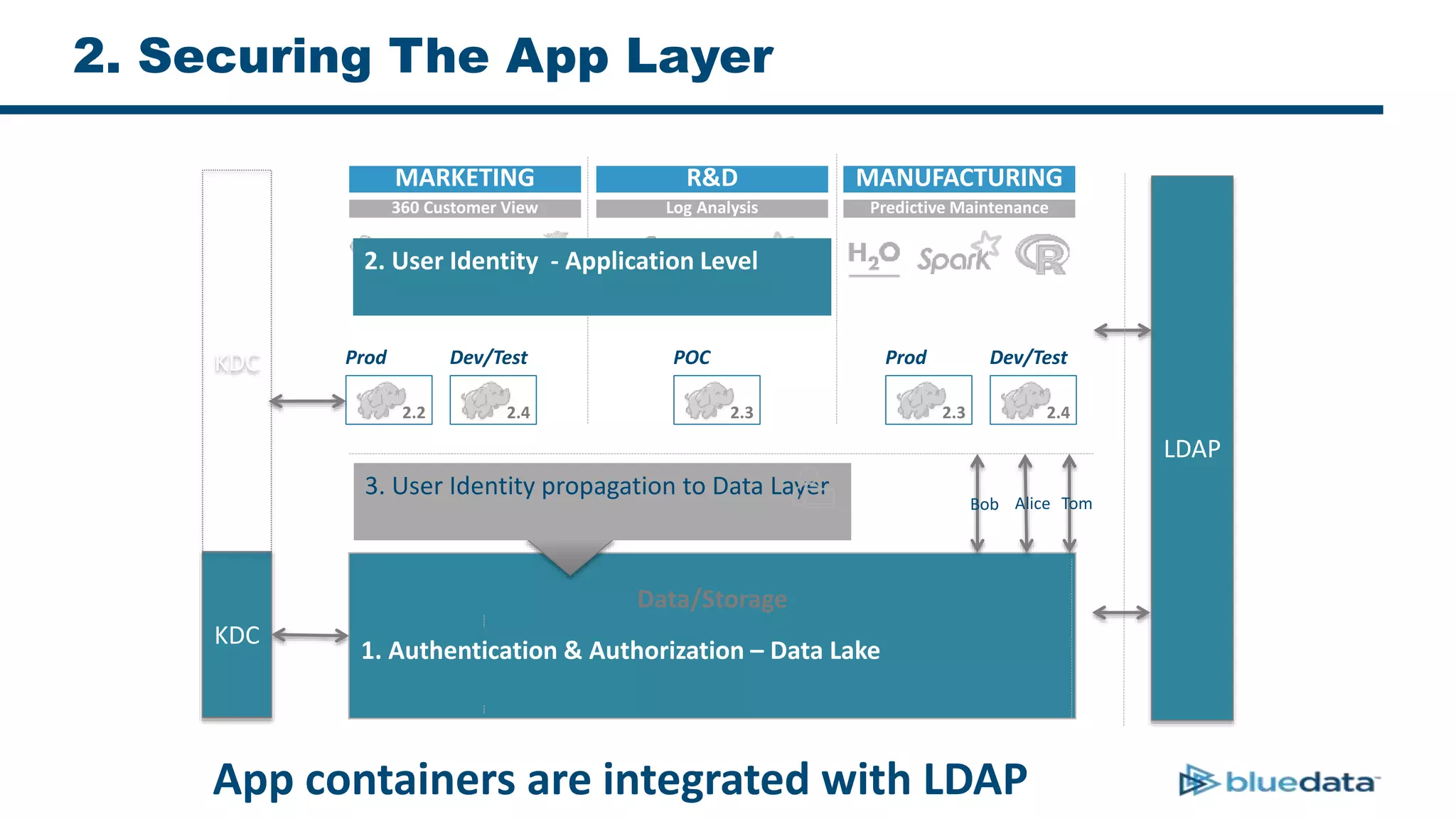

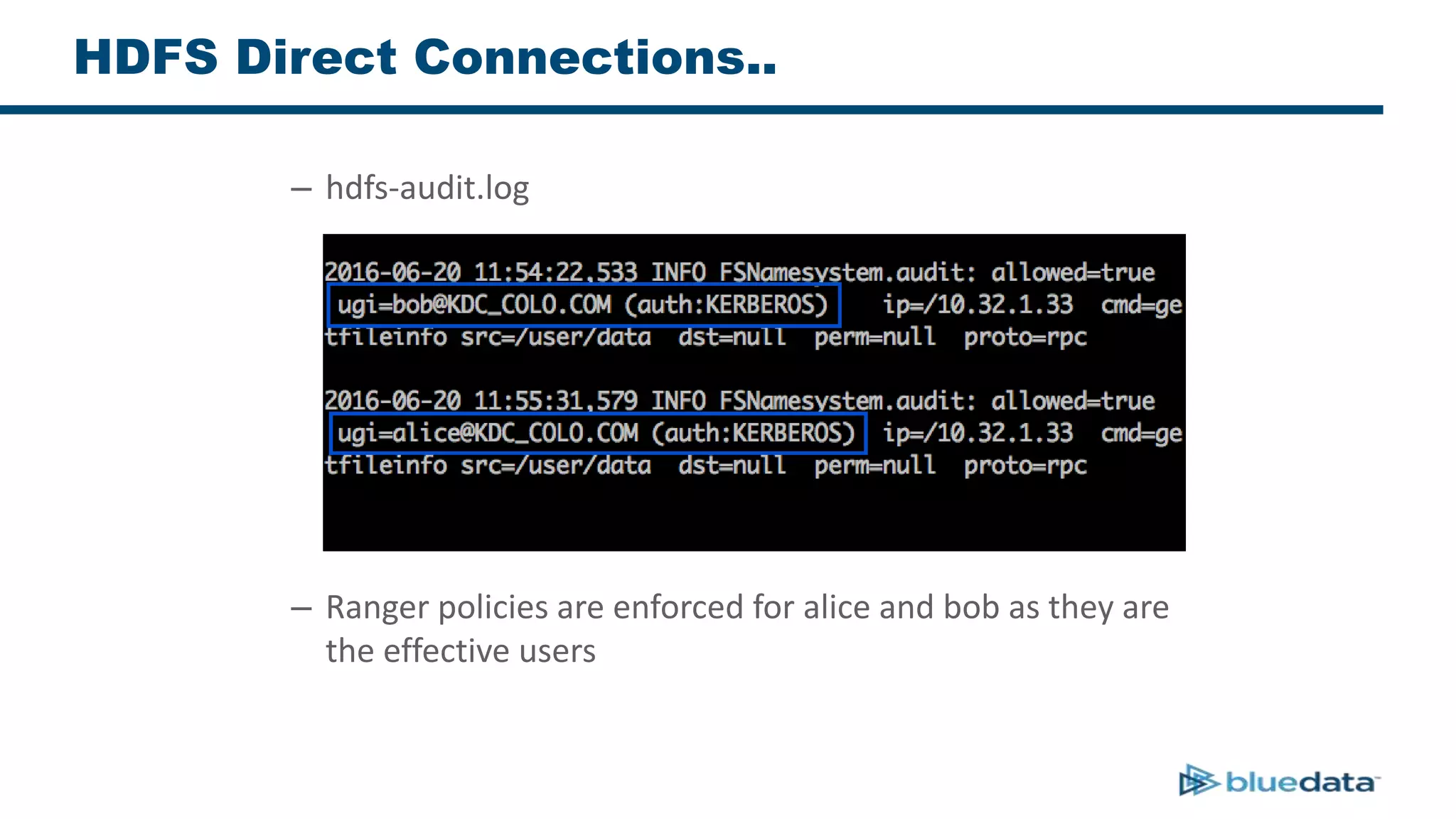

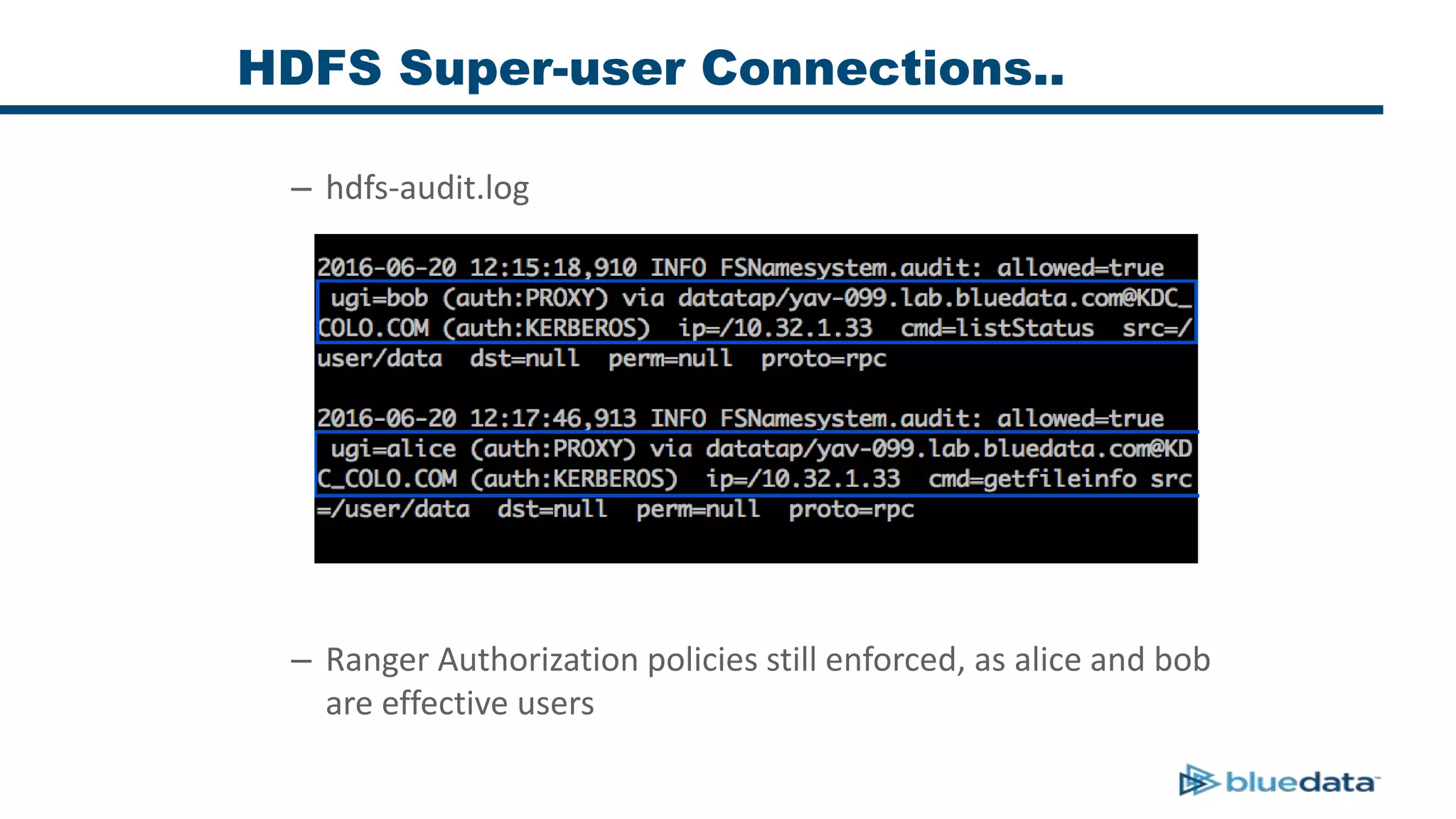

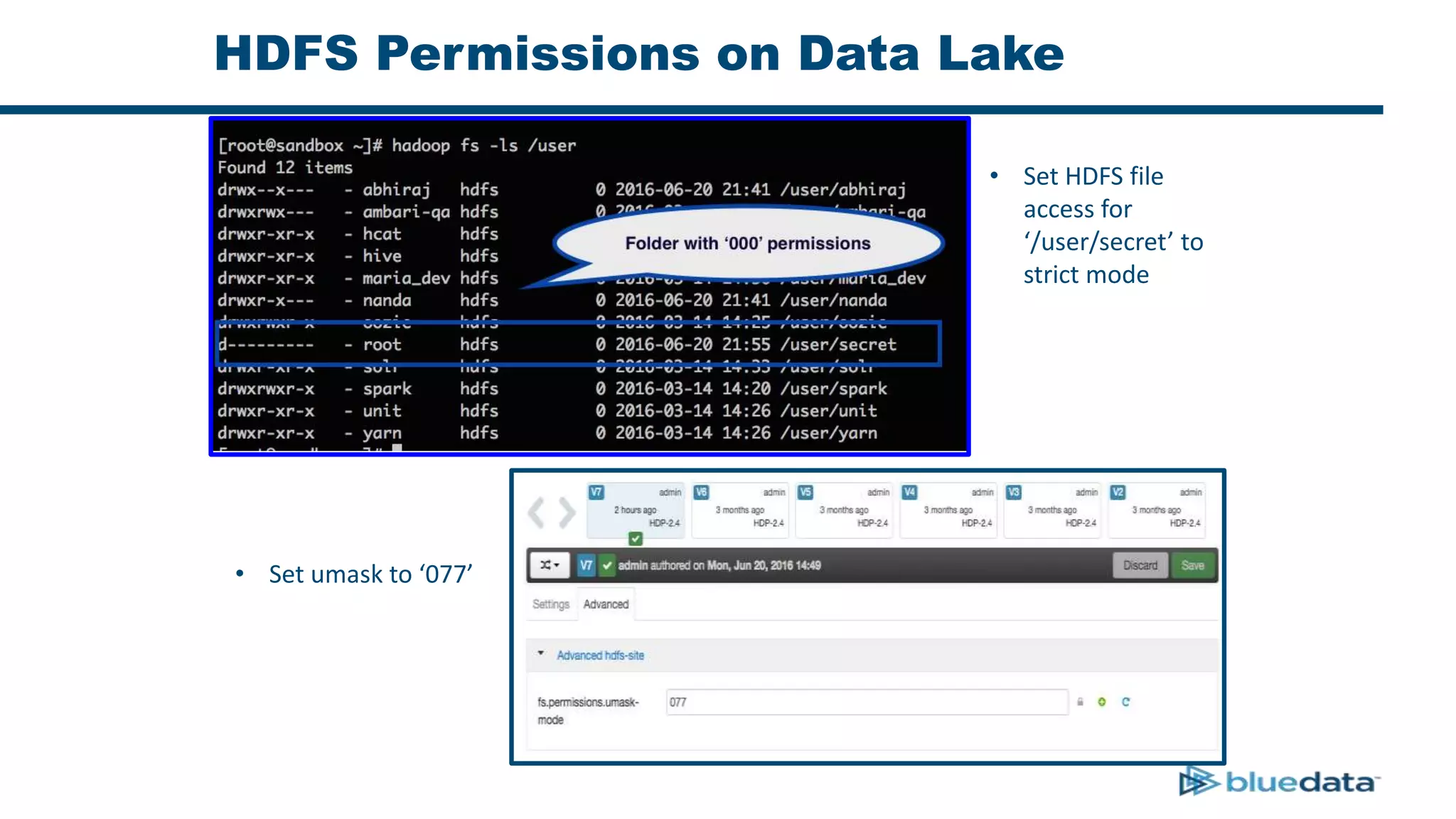

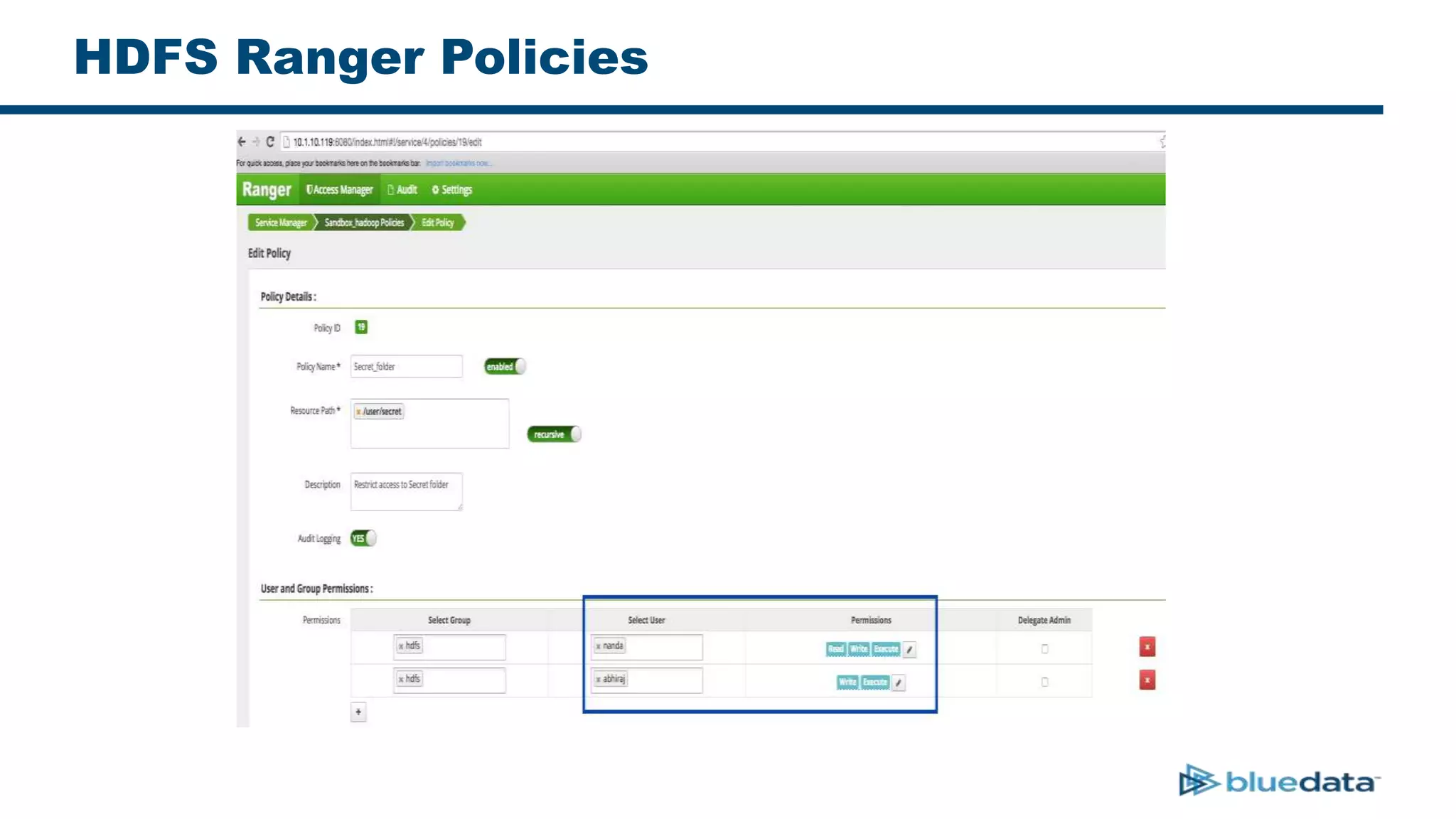

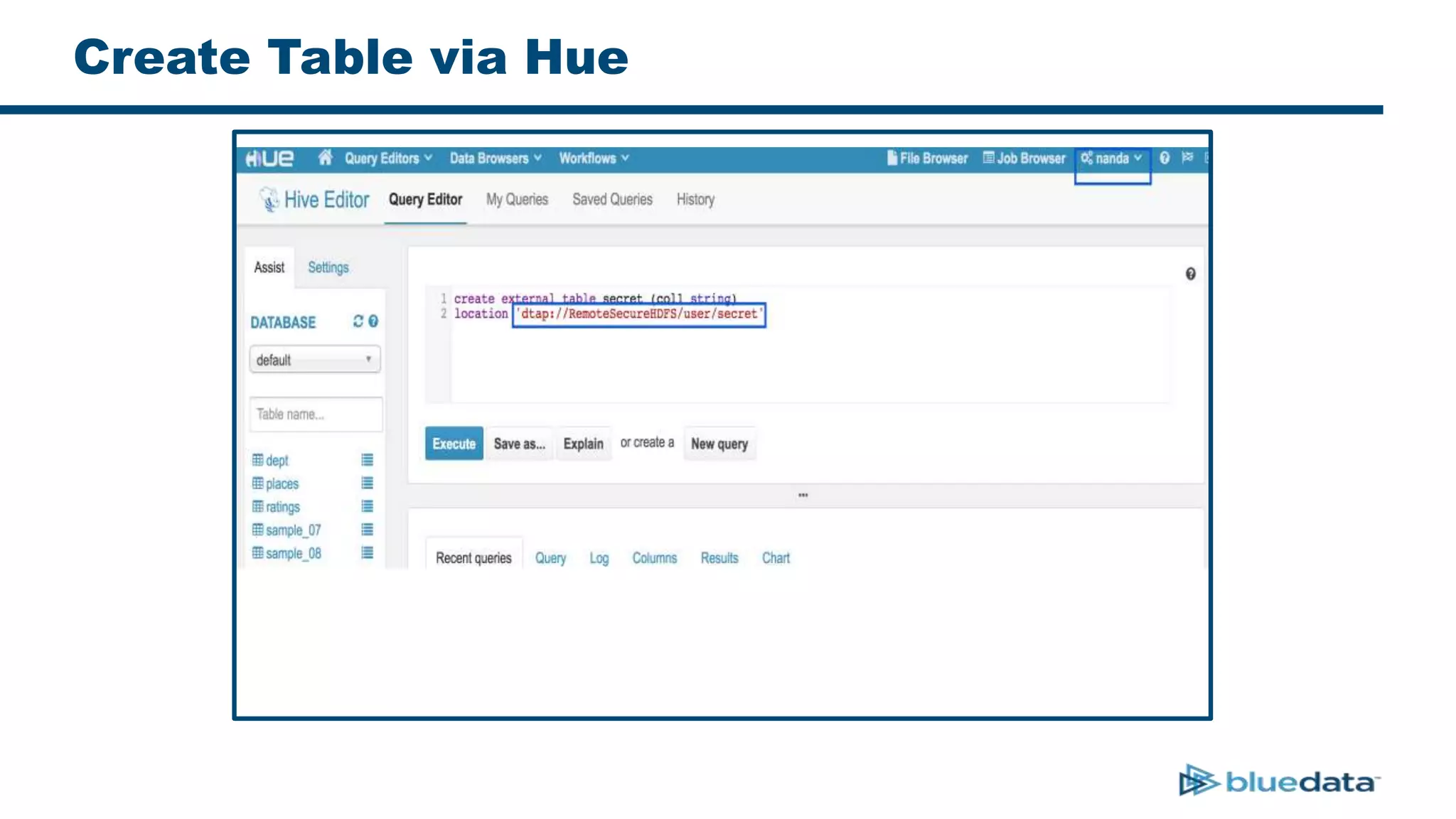

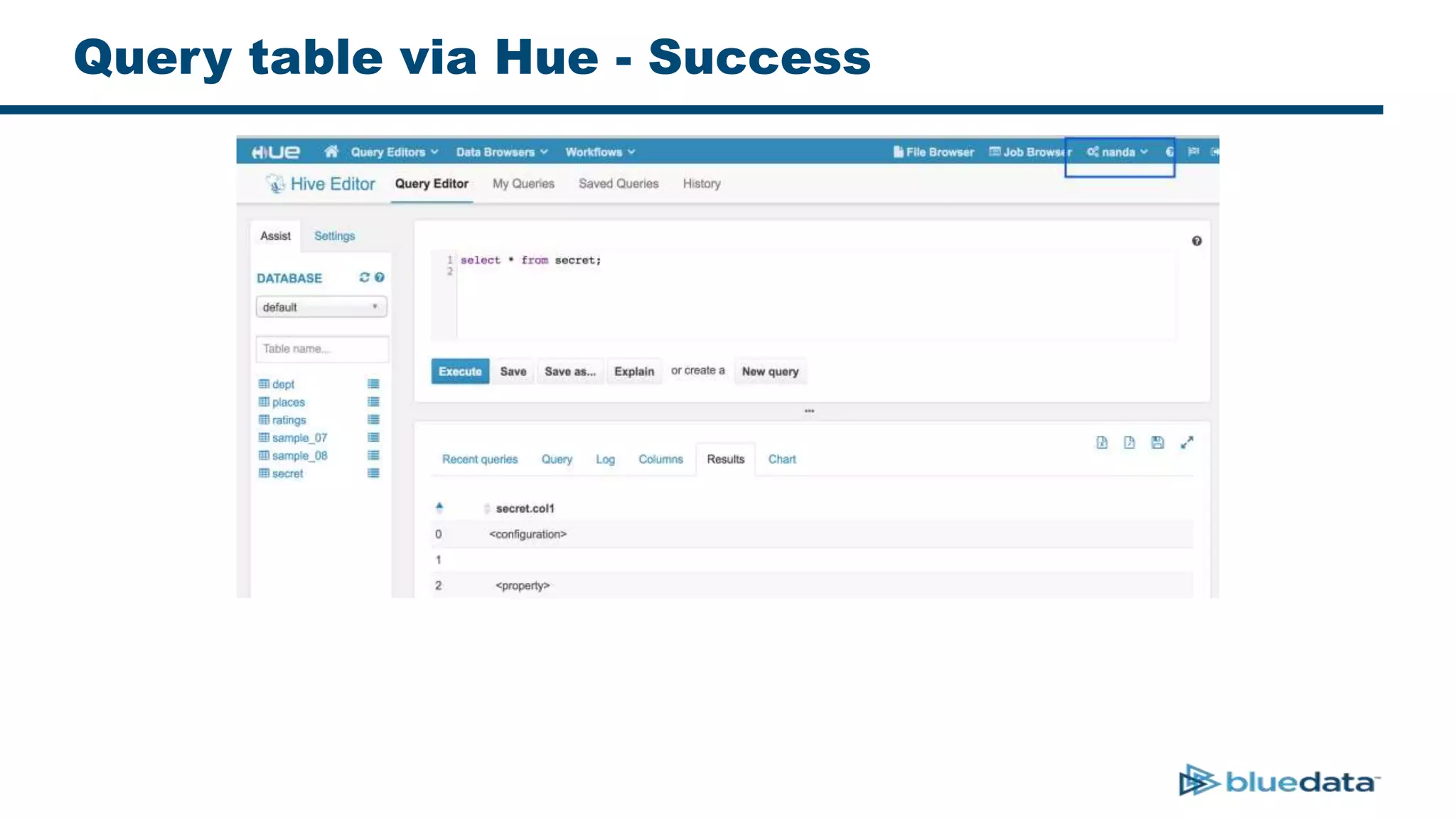

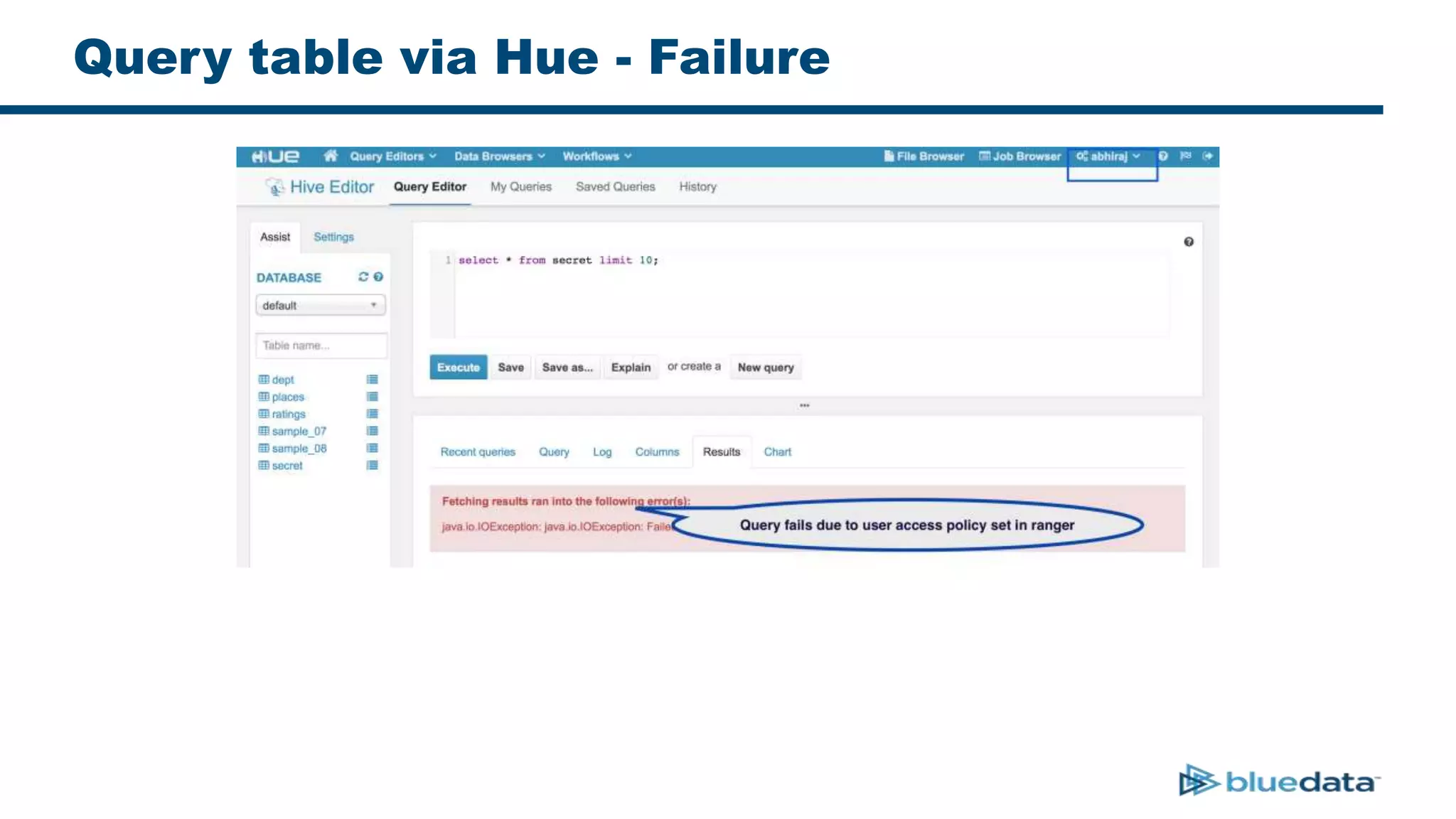

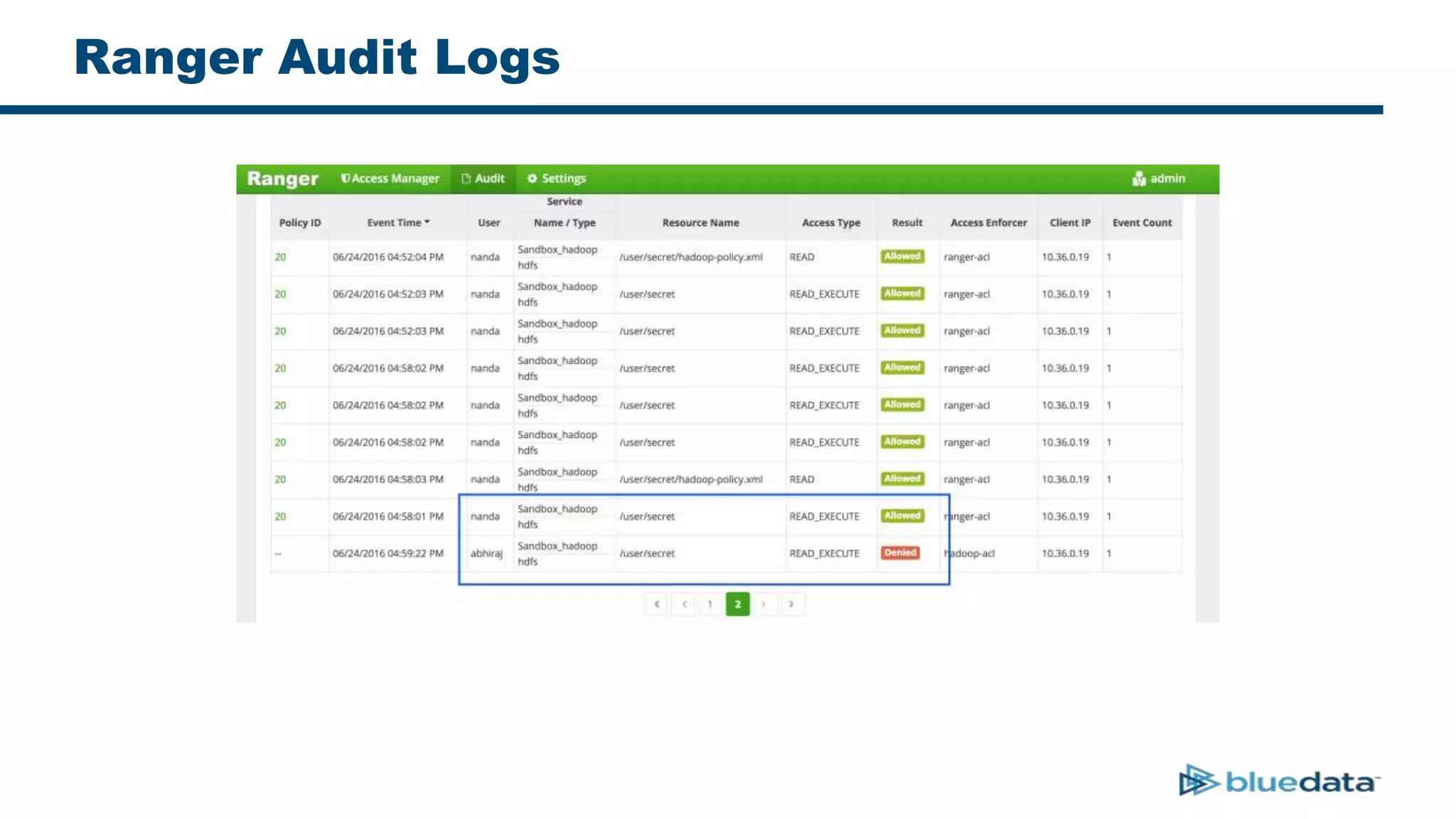

2. It describes using Apache Ranger to centrally define and enforce access policies across Hadoop services such as HDFS, HBase, and Hive. Ranger integrates with LDAP/Active Directory (AD) for user and group information and for authentication.
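To make centrally defined policies concrete, the Python sketch below evaluates a Ranger-style access check for an HDFS path. It is a simplified model, not Ranger's plugin API. The following are illustrative assumptions:

- the `Policy` and `PolicyItem` classes;
- the `USER_GROUPS` table, which stands in for users and groups synced from LDAP/AD;
- prefix-based path matching;
- the rule that a matching deny item overrides any allow item.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical stand-in for users and groups synced from LDAP/AD.
USER_GROUPS: dict[str, set[str]] = {
    "alice": {"analysts"},
    "bob": {"engineers"},
    "carol": {"analysts", "contractors"},
}


@dataclass
class PolicyItem:
    """Users/groups and the access types (e.g. 'read', 'write') the item applies to."""
    users: set[str] = field(default_factory=set)
    groups: set[str] = field(default_factory=set)
    accesses: set[str] = field(default_factory=set)

    def matches(self, user: str, access: str) -> bool:
        # The item applies if the user is listed directly or through any group.
        in_scope = user in self.users or bool(USER_GROUPS.get(user, set()) & self.groups)
        return in_scope and access in self.accesses


@dataclass
class Policy:
    """Hypothetical HDFS policy: a path plus allow and deny items."""
    path: str
    recursive: bool = True
    allow: list[PolicyItem] = field(default_factory=list)
    deny: list[PolicyItem] = field(default_factory=list)

    def covers(self, path: str) -> bool:
        if path == self.path:
            return True
        # A recursive policy also covers everything below its path.
        prefix = self.path.rstrip("/") + "/"
        return self.recursive and path.startswith(prefix)


def is_allowed(policies: list[Policy], user: str, path: str, access: str) -> bool:
    """Deny if any matching deny item applies; otherwise allow only if an allow item applies."""
    relevant = [p for p in policies if p.covers(path)]
    if any(item.matches(user, access) for p in relevant for item in p.deny):
        return False
    # In this sketch, no matching allow item means no access; a real deployment
    # might instead fall back to native HDFS permissions.
    return any(item.matches(user, access) for p in relevant for item in p.allow)


# Example: analysts may read /data/sales, but contractors are explicitly denied.
policies = [
    Policy(
        path="/data/sales",
        allow=[PolicyItem(groups={"analysts"}, accesses={"read", "execute"})],
        deny=[PolicyItem(groups={"contractors"}, accesses={"read"})],
    )
]
print(is_allowed(policies, "alice", "/data/sales/2023/q1.csv", "read"))  # True
print(is_allowed(policies, "carol", "/data/sales/2023/q1.csv", "read"))  # False: deny wins
print(is_allowed(policies, "bob", "/data/sales", "read"))                # False: no allow item
```

The design point is that authorization is decided against one shared set of policies, keyed on the user's identity. Updating a policy in one place therefore affects every application that reaches HDFS as that user.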

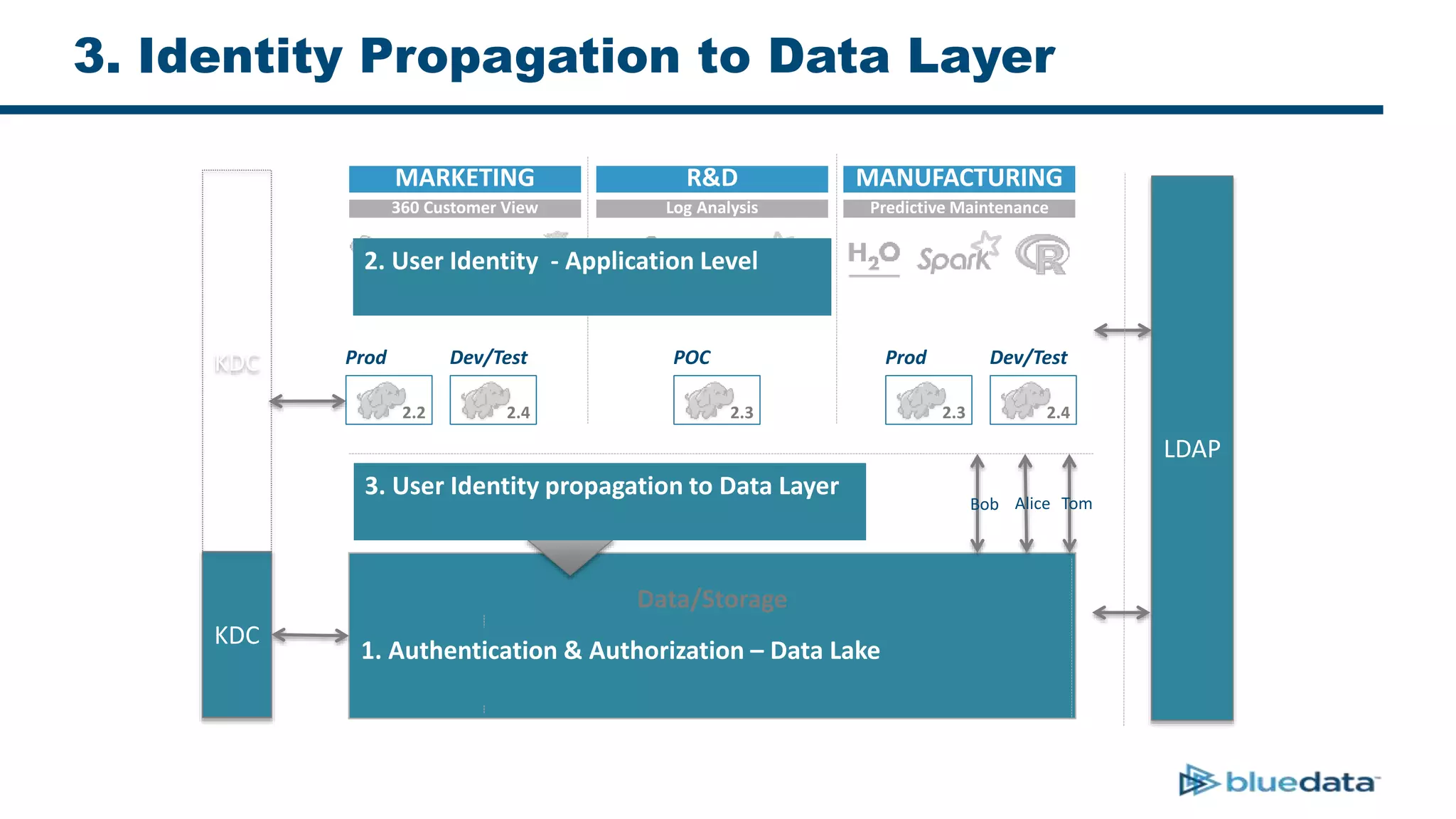

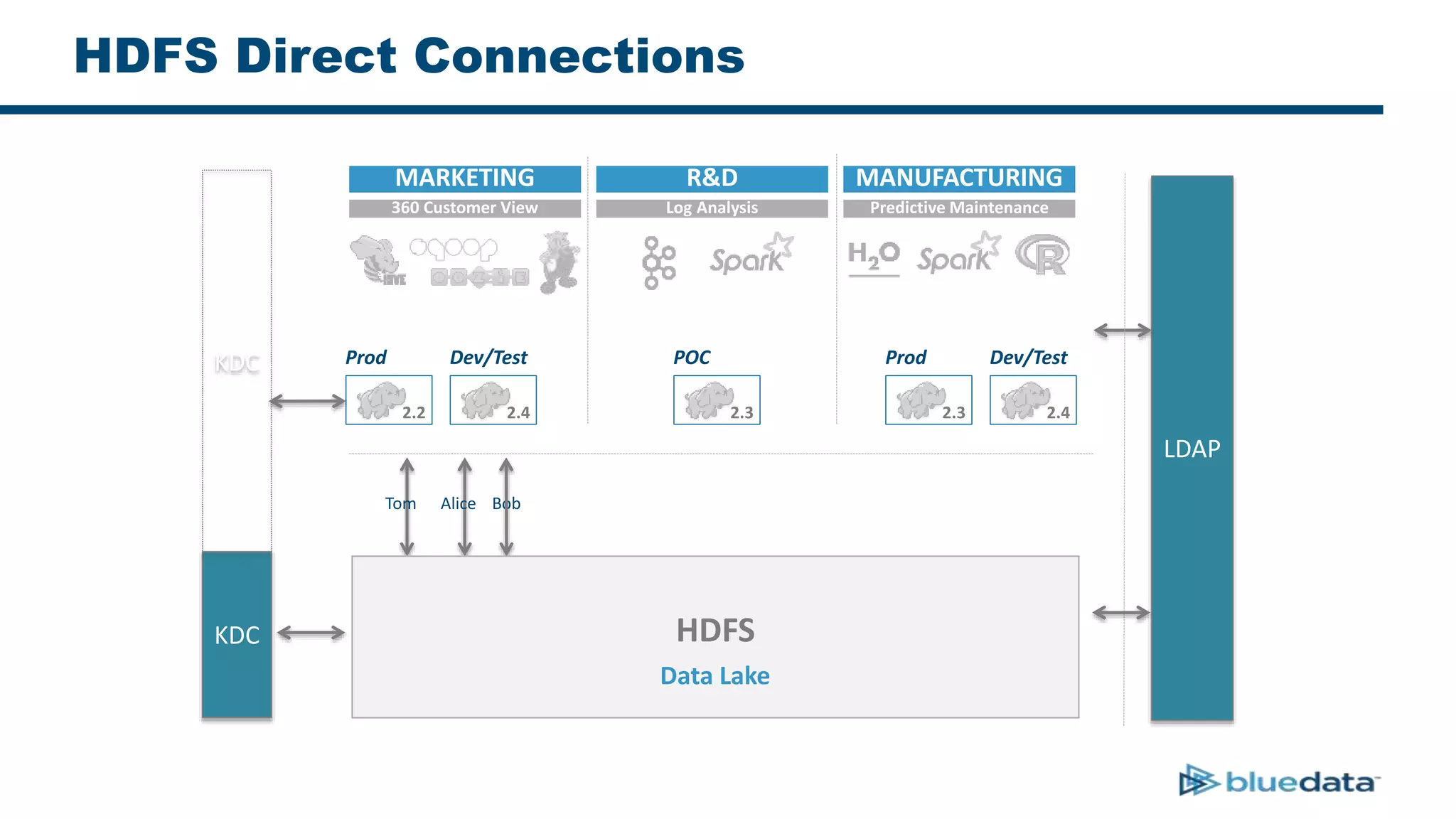

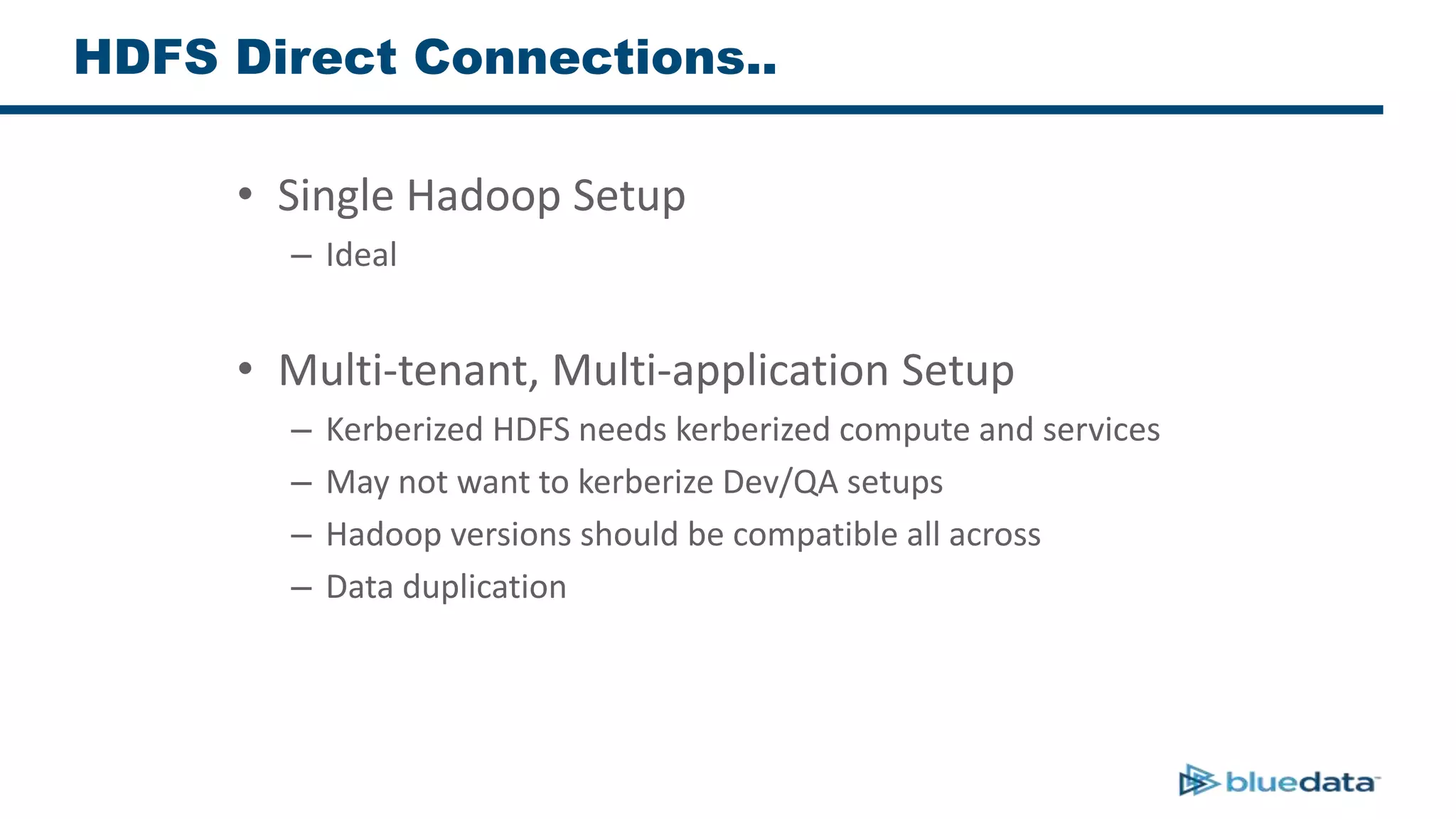

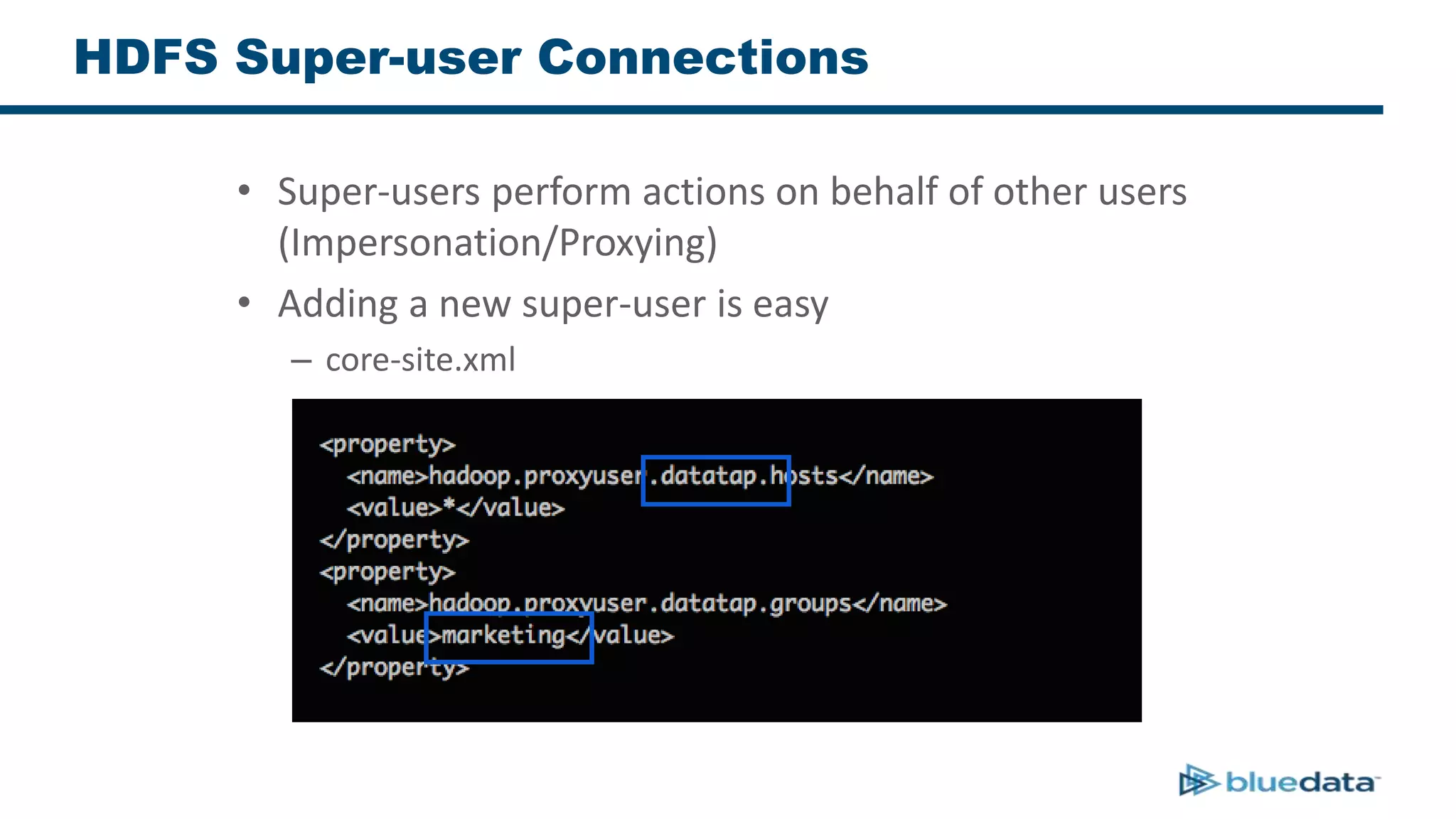

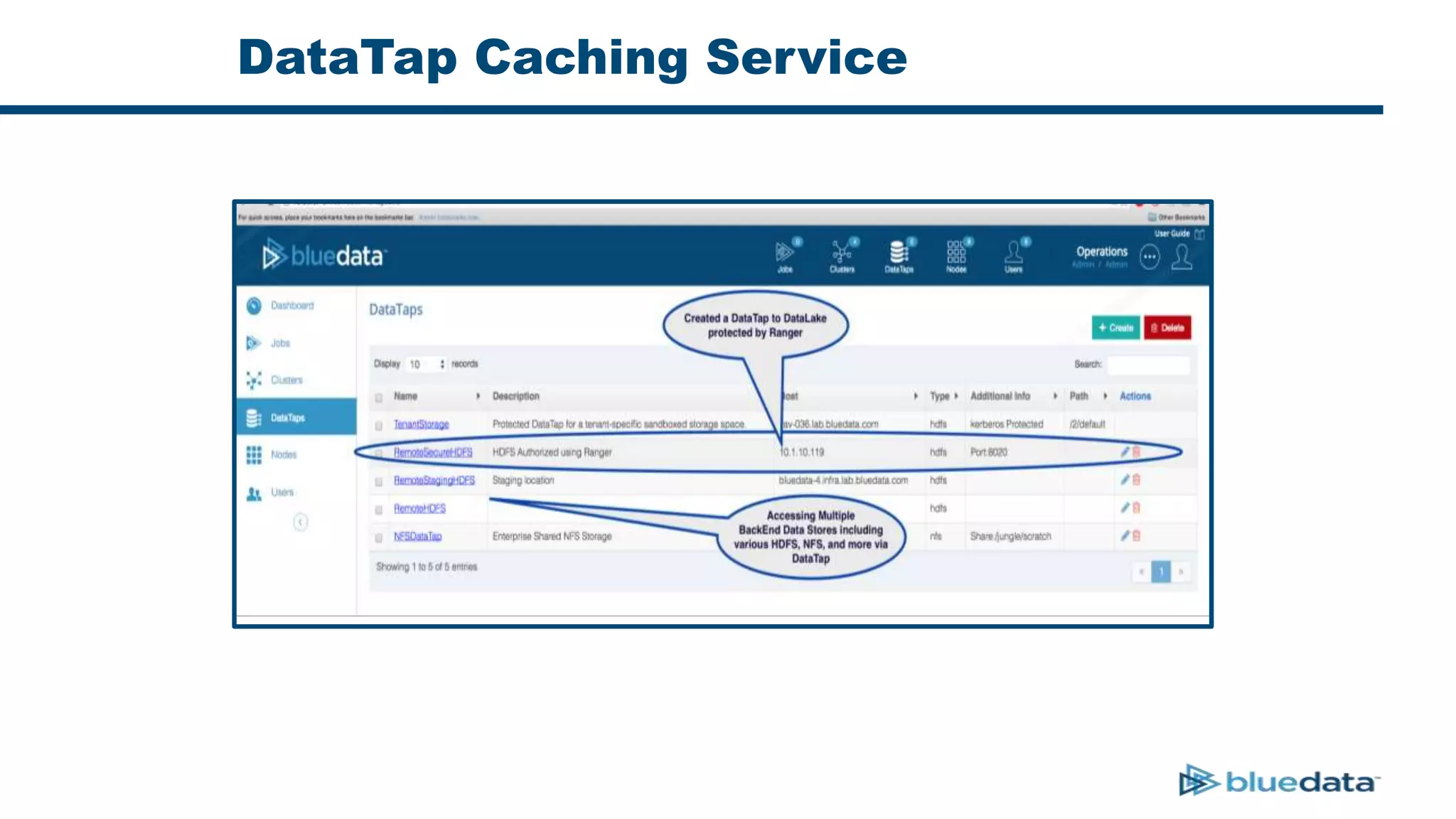

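3. The key challenge is propagating user identities from the application layer to the data layer. This can be done in two ways. Each user can connect to HDFS directly with their own Kerberos credentials. Alternatively, a "super-user" (a proxy user) can impersonate end users when accessing HDFS, so that access is checked against the end user's identity rather than the service account's.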
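Hadoop calls the super-user approach a proxy user, or secure impersonation. A trusted service authenticates with its own Kerberos credentials and then acts on behalf of an end user. This is constrained by the `hadoop.proxyuser.<superuser>.hosts`, `.groups`, and `.users` settings in `core-site.xml`.

The Python sketch below models only that authorization decision; it is not Hadoop's implementation. The `ProxyUserRule` class, the service account name `appsvc`, the example addresses, and the `USER_GROUPS` lookup are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from ipaddress import ip_address, ip_network

# Hypothetical directory lookup (in practice, groups would come from LDAP/AD).
USER_GROUPS: dict[str, set[str]] = {
    "alice": {"analysts"},
    "mallory": {"admins"},
}


@dataclass
class ProxyUserRule:
    """Models hadoop.proxyuser.<superuser>.{hosts,groups,users}; '*' means any."""
    hosts: set[str] = field(default_factory=set)   # IPs or CIDR ranges; hostnames not modeled
    groups: set[str] = field(default_factory=set)
    users: set[str] = field(default_factory=set)

    def host_allowed(self, remote_addr: str) -> bool:
        if "*" in self.hosts:
            return True
        addr = ip_address(remote_addr)
        return any(addr in ip_network(h, strict=False) for h in self.hosts)

    def user_allowed(self, end_user: str) -> bool:
        if "*" in self.users or end_user in self.users or "*" in self.groups:
            return True
        return bool(USER_GROUPS.get(end_user, set()) & self.groups)


# Hypothetical configuration for an application service account "appsvc".
PROXY_RULES: dict[str, ProxyUserRule] = {
    "appsvc": ProxyUserRule(hosts={"10.0.0.0/24"}, groups={"analysts"}),
}


def authorize_impersonation(real_user: str, end_user: str, remote_addr: str) -> bool:
    """Return True if the authenticated real_user may act as end_user from remote_addr."""
    rule = PROXY_RULES.get(real_user)
    if rule is None:
        return False  # real_user is not configured as a proxy user at all
    return rule.host_allowed(remote_addr) and rule.user_allowed(end_user)


print(authorize_impersonation("appsvc", "alice", "10.0.0.17"))    # True
print(authorize_impersonation("appsvc", "mallory", "10.0.0.17"))  # False: group not allowed
print(authorize_impersonation("appsvc", "alice", "192.168.1.5"))  # False: host not allowed
print(authorize_impersonation("alice", "mallory", "10.0.0.17"))   # False: not a proxy user
```

Once impersonation is authorized, downstream checks such as Ranger policies are evaluated against `end_user` rather than the service account. This is what lets one user identity govern access from the application layer down to HDFS. In Java, Hadoop exposes impersonation through `UserGroupInformation.createProxyUser(...)` combined with `doAs(...)`.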