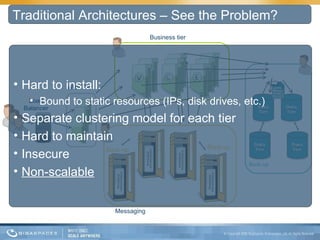

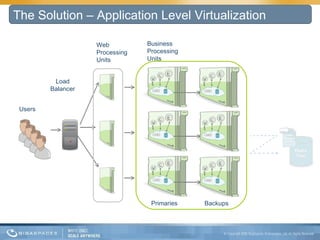

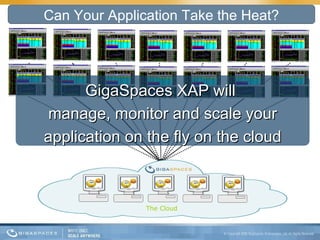

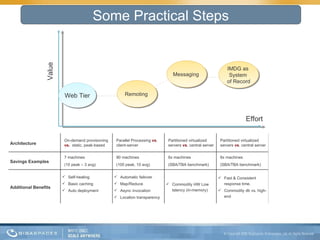

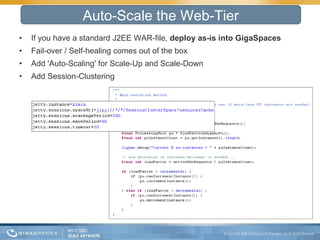

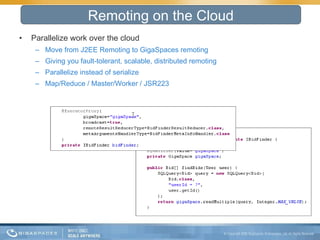

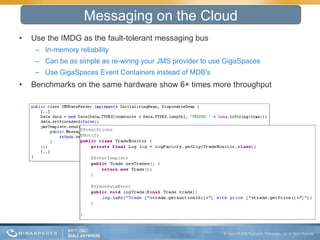

The document discusses the challenges of building applications for the cloud and best practices for addressing them. Traditional architectures are poorly suited to the cloud: they are bound to static resources, hard to maintain, insecure, and do not scale. Cloud applications need an elastic architecture that grows and shrinks with demand, with no downtime and no loss of data or transactions. They should also be memory-based and easy to operate in the cloud. According to the document, GigaSpaces XAP addresses these needs through application-level virtualization that is linearly scalable, secure, fast, and easy to deploy and monitor in the cloud.
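To make the idea of an architecture that grows and shrinks with demand concrete, here is a minimal sketch of a scaling rule in Python. It is not GigaSpaces XAP's API or algorithm; the function name `desired_instances`, its parameters, and the default limits are all hypothetical and chosen only for illustration. It assumes load can be measured in requests per second and that each instance handles a fixed, known capacity.

```python
import math


def desired_instances(requests_per_sec: float,
                      capacity_per_instance: float,
                      min_instances: int = 1,
                      max_instances: int = 10) -> int:
    """Return the instance count for the current load (hypothetical policy).

    Assumes every instance can serve `capacity_per_instance` requests
    per second and that the load is spread evenly across instances.
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Round up so the last partial "slice" of load still gets an instance.
    needed = math.ceil(requests_per_sec / capacity_per_instance)
    # Clamp to the allowed range: never below the floor (to stay available),
    # never above the ceiling (to cap cost).
    return max(min_instances, min(max_instances, needed))
```

Called periodically with a fresh load measurement, a rule like this would add instances as demand rises and release them as it falls. Keeping a floor of at least one instance is one simple way to reflect the no-downtime requirement, although a real deployment would also need state replication to avoid data or transaction loss.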