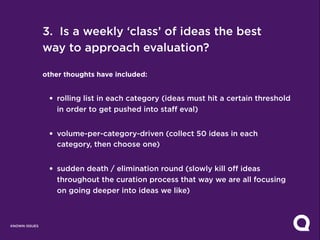

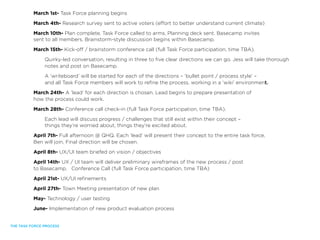

This document outlines the process and membership of a task force formed to review and improve a company's product evaluation process. The task force is led by the COO/CFO and includes community members and staff from various departments. Over several weeks, the group will brainstorm new directions, choose a lead for each direction, present concepts, and finalize a new approach. User experience design and testing will then inform the implementation of improvements intended to better surface the best ideas and make the evaluation process more useful, engaging, and constructive.