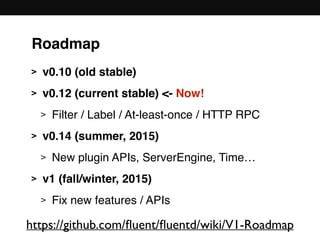

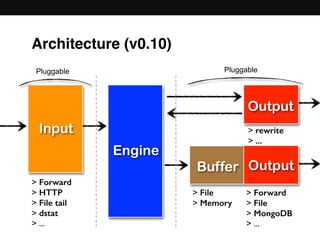

This document provides an overview of Fluentd, an open source data collector. It discusses the key features of Fluentd including structured logging, reliable forwarding, and a pluggable architecture. The document then summarizes the architectures and new features of different Fluentd versions, including v0.10, v0.12, and the upcoming v0.14 and v1 releases. It also discusses Fluentd's ecosystem and plugins as well as Treasure Data's use of Fluentd in its log data collection and analytics platform.

![v1 configuration

> hash, array and enum types are added

> hash and array values are written as JSON

> Embed Ruby code using "#{}"

> e.g. set values from environment variables: "#{ENV['KEY']}"

> Add :secret option to mask parameters

> "@" prefix for built-in parameters

> @type, @id and @log_level](https://image.slidesharecdn.com/fluentdv0-150601094442-lva1-app6891/85/Fluentd-v0-12-master-guide-9-320.jpg)
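The typed parameters above can be illustrated with a small plain-Ruby sketch. It assumes only what the slide states, that `array` and `hash` values are written as JSON. The helper name `parse_param` and the enum list `[:json, :ltsv]` are hypothetical, and this is not Fluentd's own config parser.

```ruby
require 'json'

# Hypothetical helper: converts a raw config string into a typed value.
# A sketch of the v1 typing rules, not Fluentd's implementation.
def parse_param(raw, type, list: [])
  case type
  when :array, :hash
    value = JSON.parse(raw) # array and hash values are JSON
    expected = type == :array ? Array : Hash
    raise ArgumentError, "#{raw} is not a JSON #{type}" unless value.is_a?(expected)
    value
  when :enum
    sym = raw.to_sym
    raise ArgumentError, "#{raw} must be one of #{list.join(', ')}" unless list.include?(sym)
    sym
  else
    raw # anything else is kept as a plain string
  end
end

keys     = parse_param('["k1", "k2", "k3"]', :array)
add_keys = parse_param('{"k1" : "v1"}', :hash)
fmt      = parse_param('json', :enum, list: [:json, :ltsv])
p keys, add_keys, fmt
```

Because JSON already has a syntax for nested values, no extra quoting rules are needed for the common cases.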

![New v1 formats

> Easy to write complex values

> No tricks or extra work needed for common cases

<source>
  @type my_tail
  keys ["k1", "k2", "k3"]
</source>

<match **>
  @type my_filter
  add_keys {"k1" : "v1"}
</match>

<filter **>
  @type my_filter
  env "#{ENV['KEY']}"
</filter>

Hash, Array, etc.

Embedded Ruby code:

• Socket.gethostname

• `command`

• etc...](https://image.slidesharecdn.com/fluentdv0-150601094442-lva1-app6891/85/Fluentd-v0-12-master-guide-10-320.jpg)
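Embedded Ruby in a double-quoted value such as `"#{ENV['KEY']}"` can be pictured as "find each `#{...}` and replace it with the result of evaluating the Ruby code inside". The sketch below assumes that model; the helper `expand_embedded_ruby` is hypothetical, ignores nested braces, and is not Fluentd's actual parser.

```ruby
require 'socket'

# Hypothetical helper: expands each "#{...}" fragment in a config value by
# evaluating the enclosed Ruby code. Sketch only: nested braces are not handled.
def expand_embedded_ruby(value)
  value.gsub(/\#\{([^}]*)\}/) { eval(Regexp.last_match(1)).to_s }
end

ENV['KEY'] = 'secret-key'
p expand_embedded_ruby('#{ENV["KEY"]}')
p expand_embedded_ruby('host-#{Socket.gethostname}')
```

Since the code is evaluated with `eval`, anything in a configuration file can run arbitrary Ruby, so configuration files must come from a trusted source.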

![:secret option

> For masking sensitive parameters

> In Fluentd logs and in_monitor_agent output

2015-05-29 19:50:10 +0900 [info]: using configuration file: <ROOT>
  <source>
    @type forward
  </source>
  <match http.**>
    @type test
    sensitive_param xxxxxx
  </match>
</ROOT>

config_param :sensitive_param, :string, :secret => true](https://image.slidesharecdn.com/fluentdv0-150601094442-lva1-app6891/85/Fluentd-v0-12-master-guide-11-320.jpg)
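The masking shown in the log dump can be sketched in plain Ruby: when a section is printed, any parameter flagged as secret is replaced by a fixed mask. The helper `dump_section`, the sample value `p@ssw0rd`, and the output layout are hypothetical; only the parameter name and the `xxxxxx` mask come from the slide.

```ruby
# Mask string as it appears in the log dump on the slide.
MASK = 'xxxxxx'

# Hypothetical helper: renders a config section, masking secret parameters.
def dump_section(name, arg, params, secret_keys)
  header = arg ? "<#{name} #{arg}>" : "<#{name}>"
  lines = [header]
  params.each do |key, value|
    shown = secret_keys.include?(key) ? MASK : value
    lines << "  #{key} #{shown}"
  end
  lines << "</#{name}>"
  lines.join("\n")
end

puts dump_section('match', 'http.**',
                  { '@type' => 'test', 'sensitive_param' => 'p@ssw0rd' },
                  ['sensitive_param'])
```

In this sketch masking happens only when the section is rendered; the plugin itself would still receive the real value.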

![> Override filter method

module Fluent
  class AddTagFilter < Filter
    # Same as other plugins: initialize, configure, start, shutdown
    # Define configurations with the config_param utilities

    def filter(tag, time, record)
      # Process the record
      record["tag"] = tag
      # Return the processed record.
      # If nil is returned, the record is dropped.
      record
    end
  end
end

Filter: Plugin development 1](https://image.slidesharecdn.com/fluentdv0-150601094442-lva1-app6891/85/Fluentd-v0-12-master-guide-17-320.jpg)
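The contract above (return the record to keep it, return nil to drop it) can be tried without installing Fluentd. The sketch below assumes a plain-Ruby stand-in, `SketchFilter`, whose `filter_stream` loop calls `filter` and skips nil results; both class names are hypothetical and `SketchFilter` is not `Fluent::Filter`.

```ruby
# Hypothetical stand-in for Fluent::Filter (no fluentd gem required).
# filter_stream calls filter for each event and drops nil results.
class SketchFilter
  def filter(tag, time, record)
    record
  end

  def filter_stream(tag, events)
    events.each_with_object([]) do |(time, record), out|
      filtered = filter(tag, time, record)
      out << [time, filtered] unless filtered.nil?
    end
  end
end

# Hypothetical filter: adds the tag and drops debug-level records.
class AddTagDropDebugFilter < SketchFilter
  def filter(tag, time, record)
    return nil if record['level'] == 'debug'
    record['tag'] = tag
    record
  end
end

events = [[1, { 'level' => 'info' }], [2, { 'level' => 'debug' }]]
p AddTagDropDebugFilter.new.filter_stream('app.access', events)
```

Overriding only `filter` keeps a plugin short, because the per-record loop lives in the base class.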

![> Override filter_stream method

module Fluent
  class AddTagFilter < Filter
    def filter_stream(tag, es)
      new_es = MultiEventStream.new
      es.each { |time, record|
        begin
          record["tag"] = tag
          new_es.add(time, record)
        rescue => e
          # Route the failing record to the error stream
          router.emit_error_event(tag, time, record, e)
        end
      }
      new_es
    end
  end
end

Filter: Plugin development 2](https://image.slidesharecdn.com/fluentdv0-150601094442-lva1-app6891/85/Fluentd-v0-12-master-guide-18-320.jpg)
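The key point of `filter_stream` is that each record gets its own `begin`/`rescue`, so one bad record is sent to the error stream while the rest of the chunk is still emitted. A plain-Ruby sketch of that pattern follows; the class `AddTagStreamSketch` is hypothetical, an array of events stands in for `MultiEventStream`, and an `errors` list stands in for `router.emit_error_event`.

```ruby
# Hypothetical sketch of per-record error handling in filter_stream.
# @errors stands in for router.emit_error_event.
class AddTagStreamSketch
  attr_reader :errors

  def initialize
    @errors = []
  end

  def filter_stream(tag, events)
    new_es = []
    events.each do |time, record|
      begin
        record['tag'] = tag
        new_es << [time, record]
      rescue => e
        @errors << [tag, time, record, e] # stand-in for emit_error_event
      end
    end
    new_es
  end
end

frozen = { 'msg' => 'cannot modify' }.freeze # assigning a key raises an error
events = [[1, { 'msg' => 'ok' }], [2, frozen], [3, { 'msg' => 'ok too' }]]
sketch = AddTagStreamSketch.new
p sketch.filter_stream('app', events).map(&:first) # => [1, 3]
p sketch.errors.size                               # => 1
```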