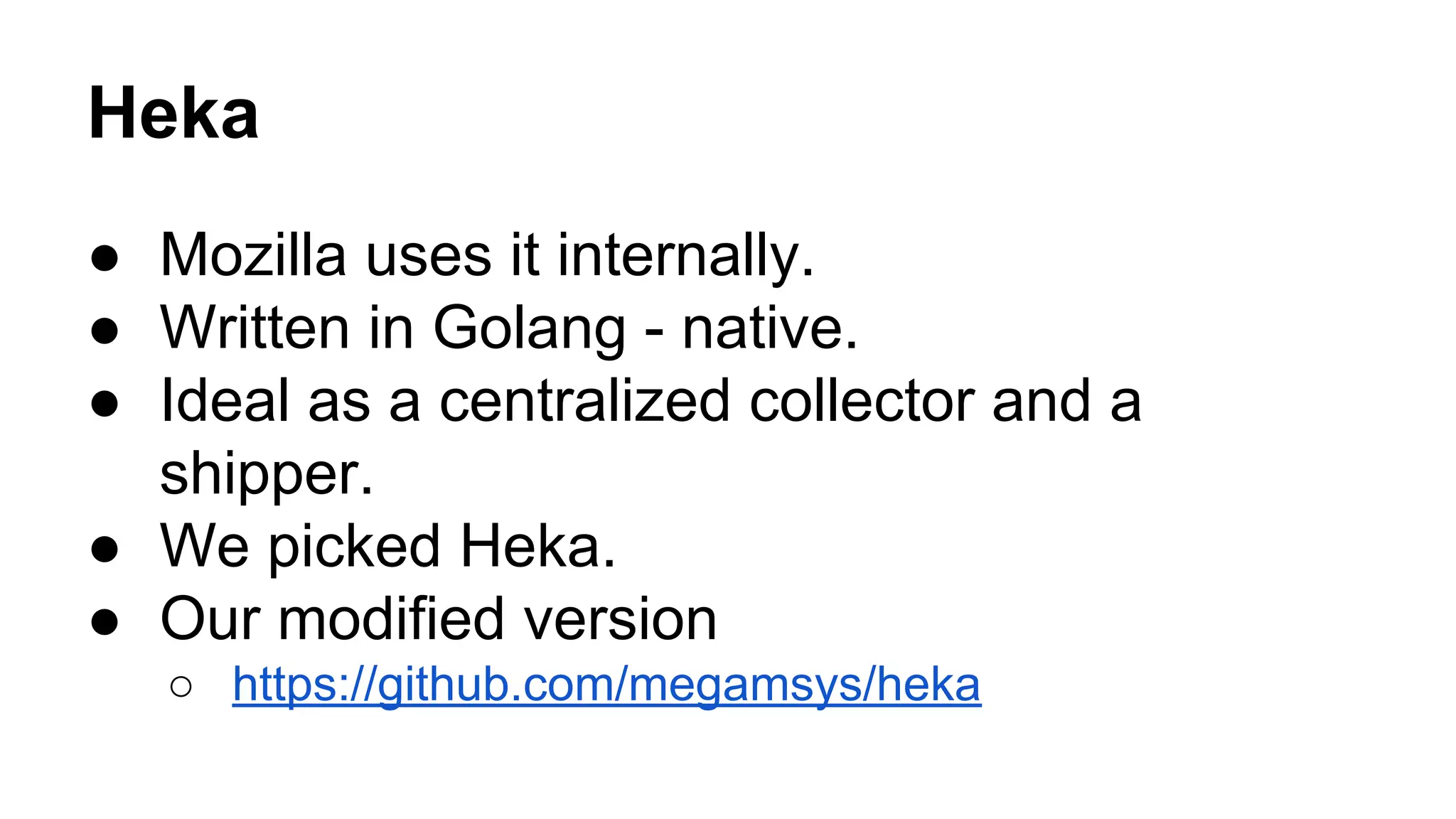

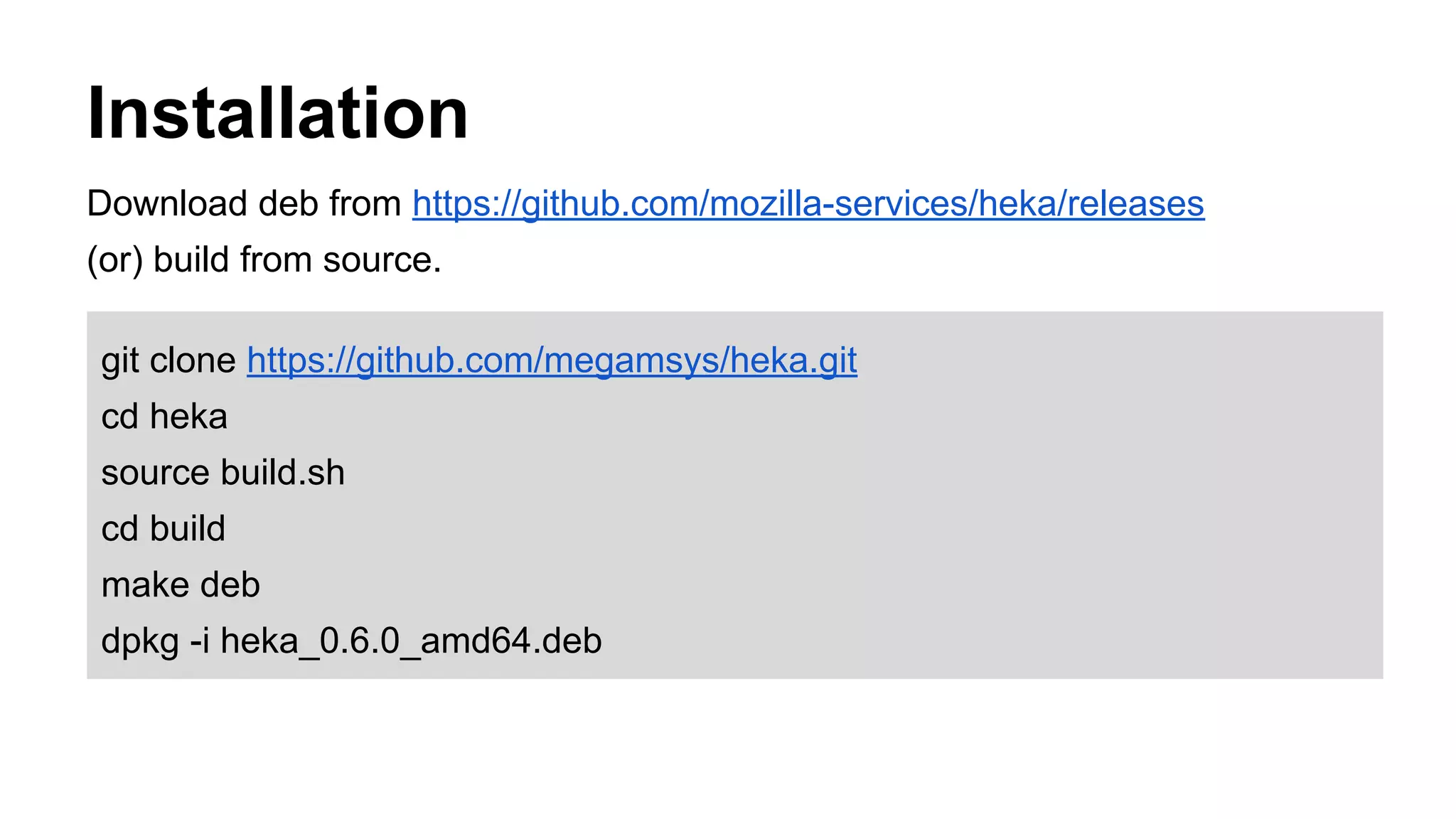

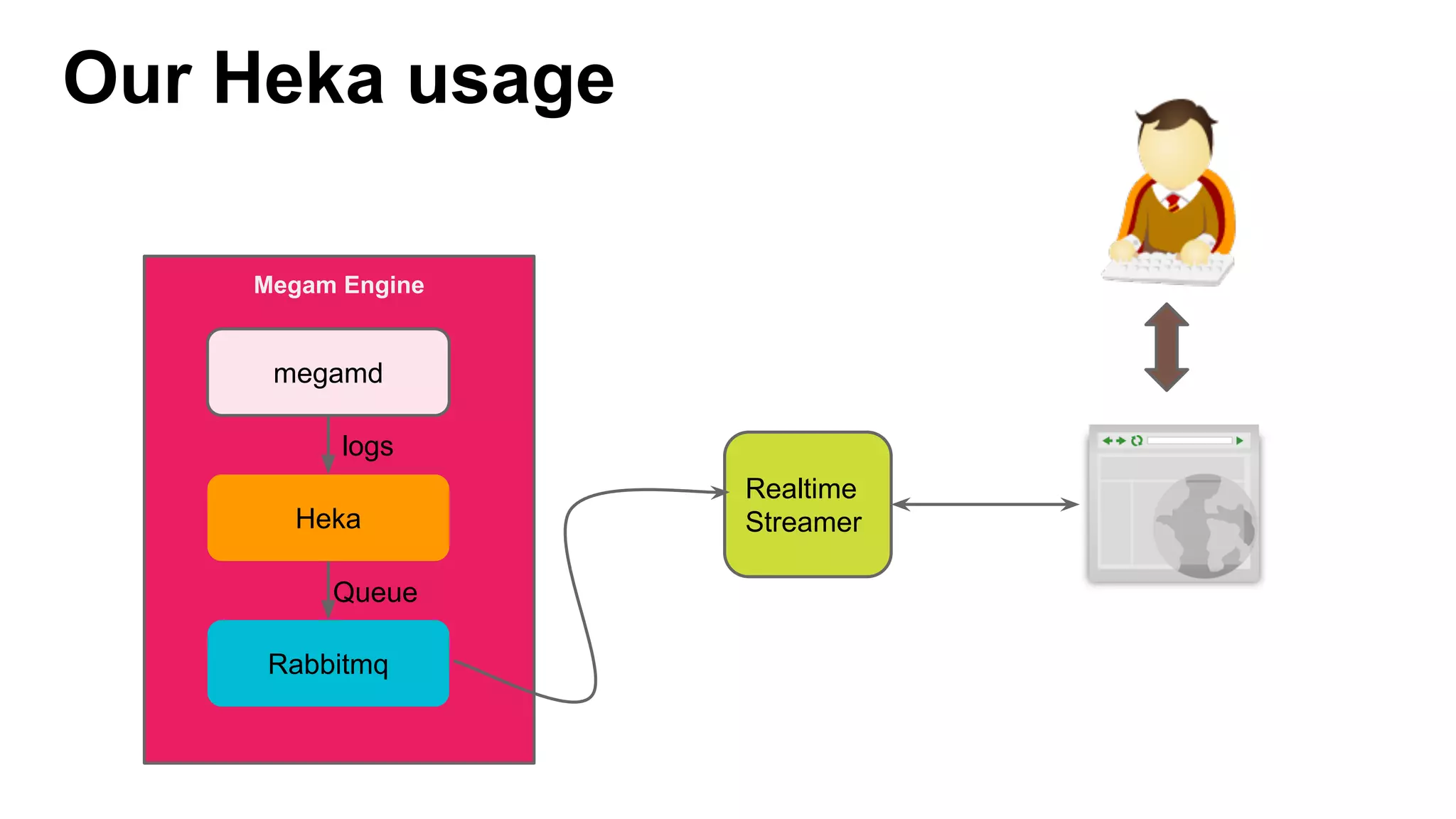

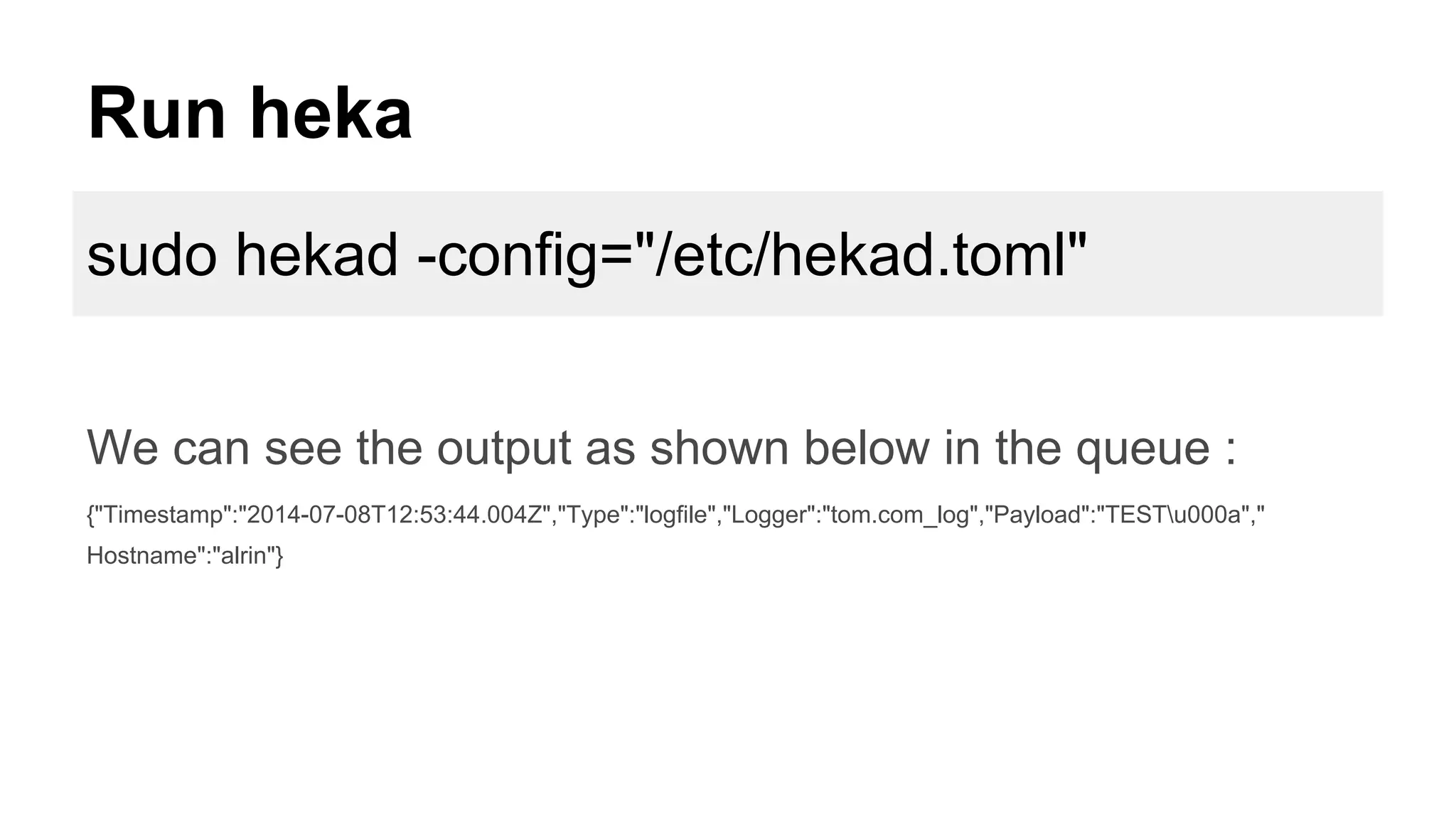

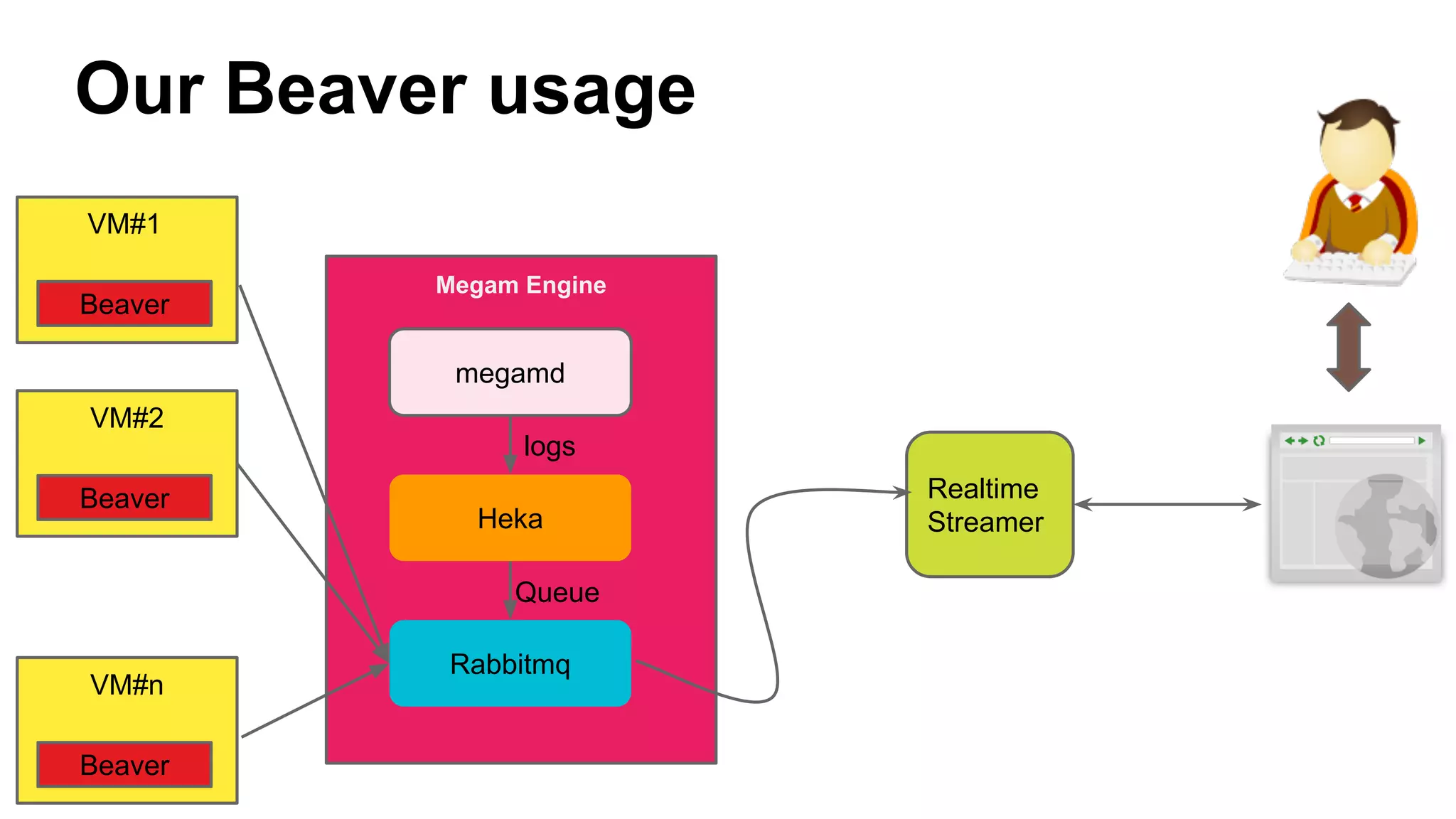

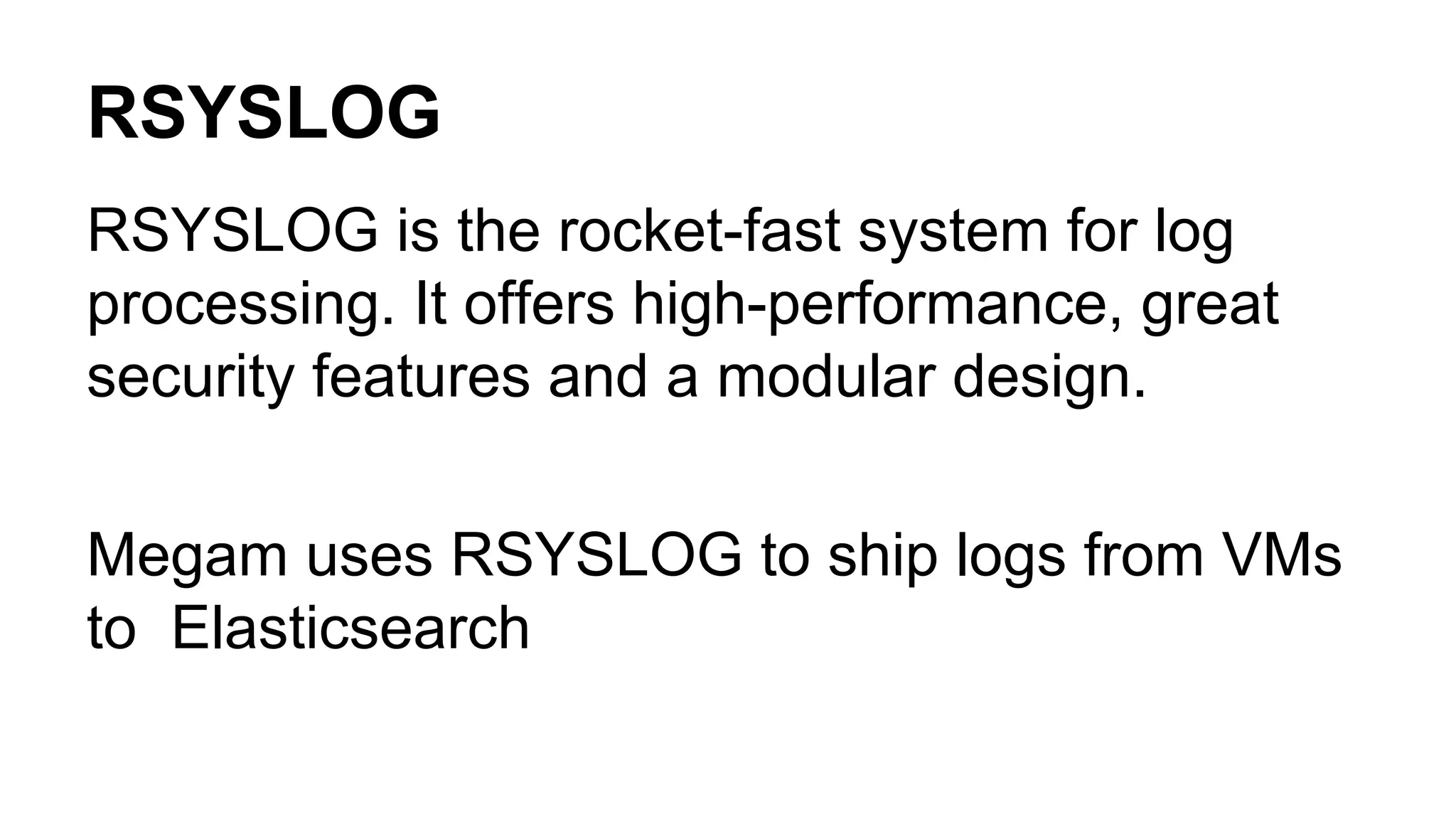

This document discusses options for streaming logs and metrics from cloud VMs in real time. It evaluates the log shippers Logstash, Fluentd, Beaver, Logstash-Forwarder, rsyslog, and Heka, and recommends Heka and Beaver for shipping logs from VMs to a central queue because of their lightweight footprint. It also provides configuration examples for collecting logs on VMs with Heka and Beaver and streaming them to RabbitMQ in real time for further processing. Chef recipes configure the log shippers on each VM dynamically.

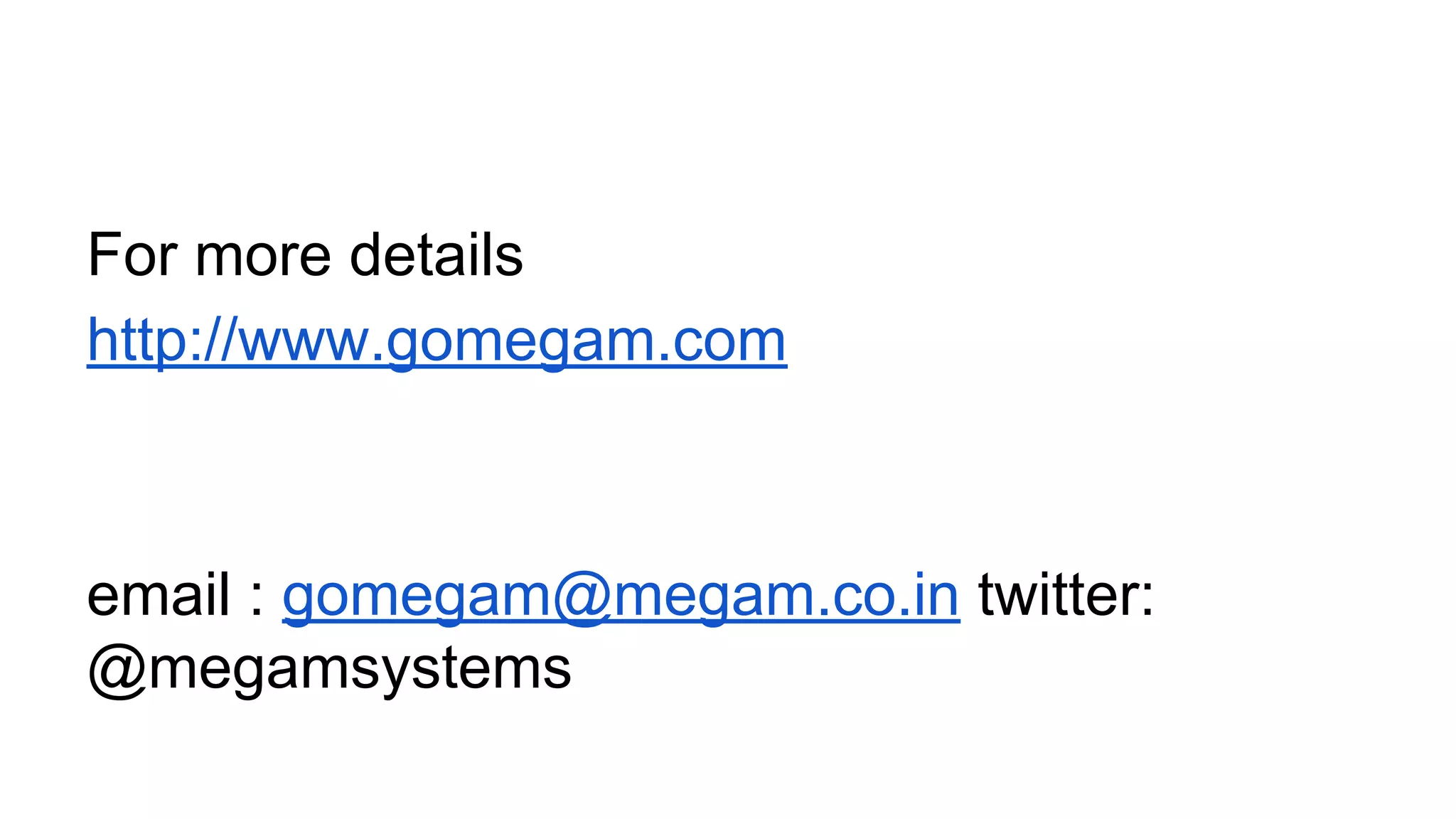

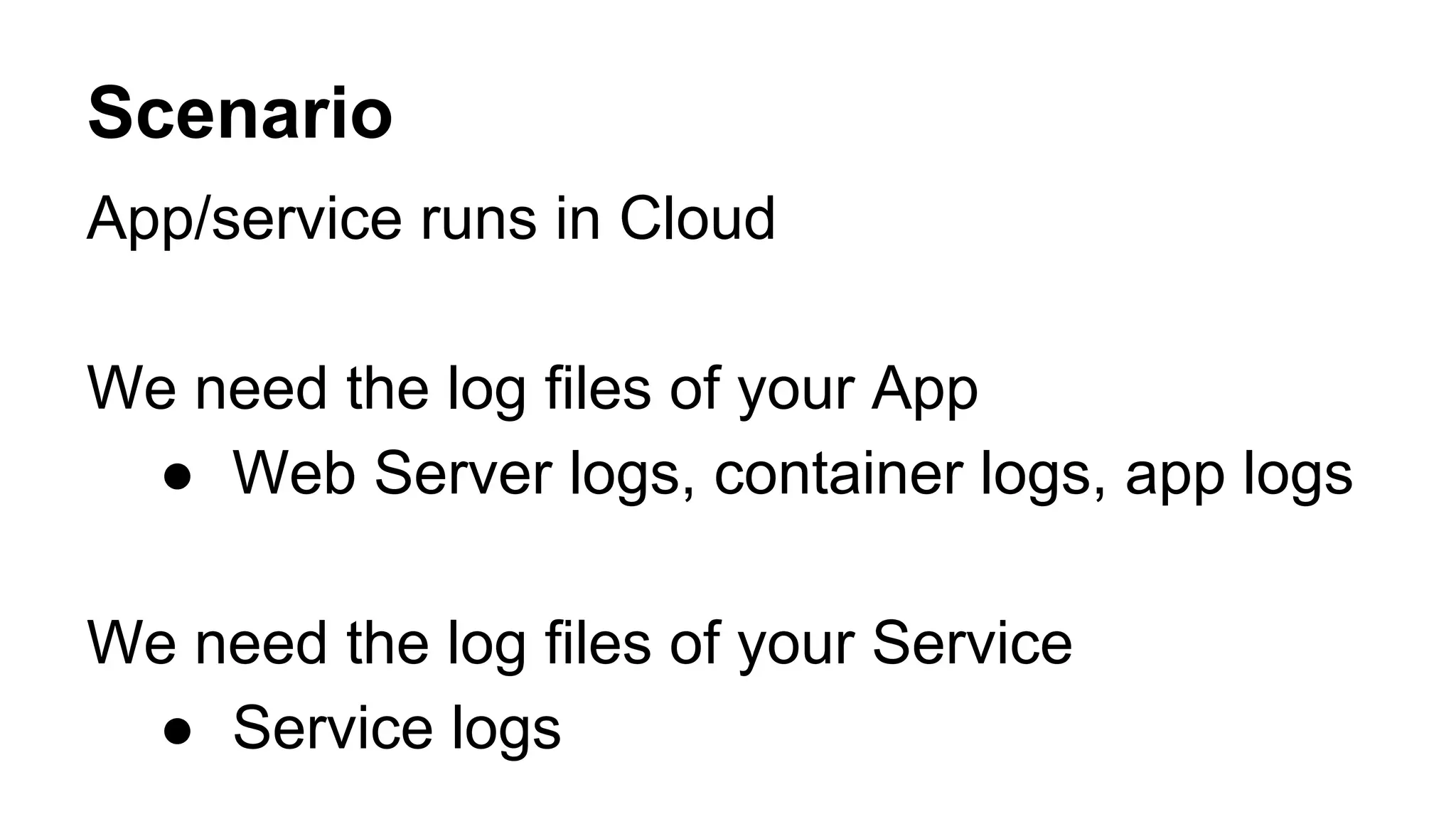

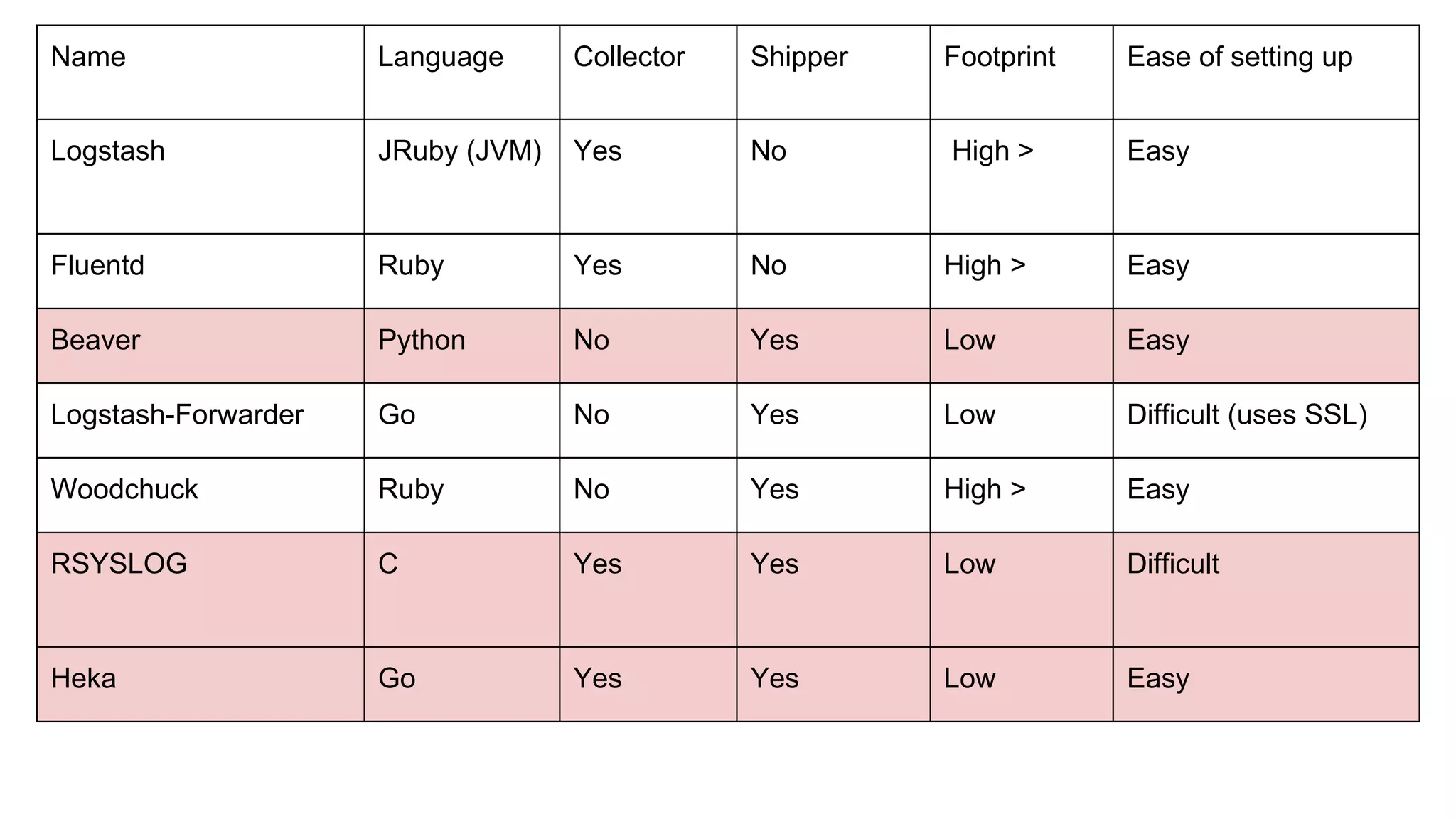

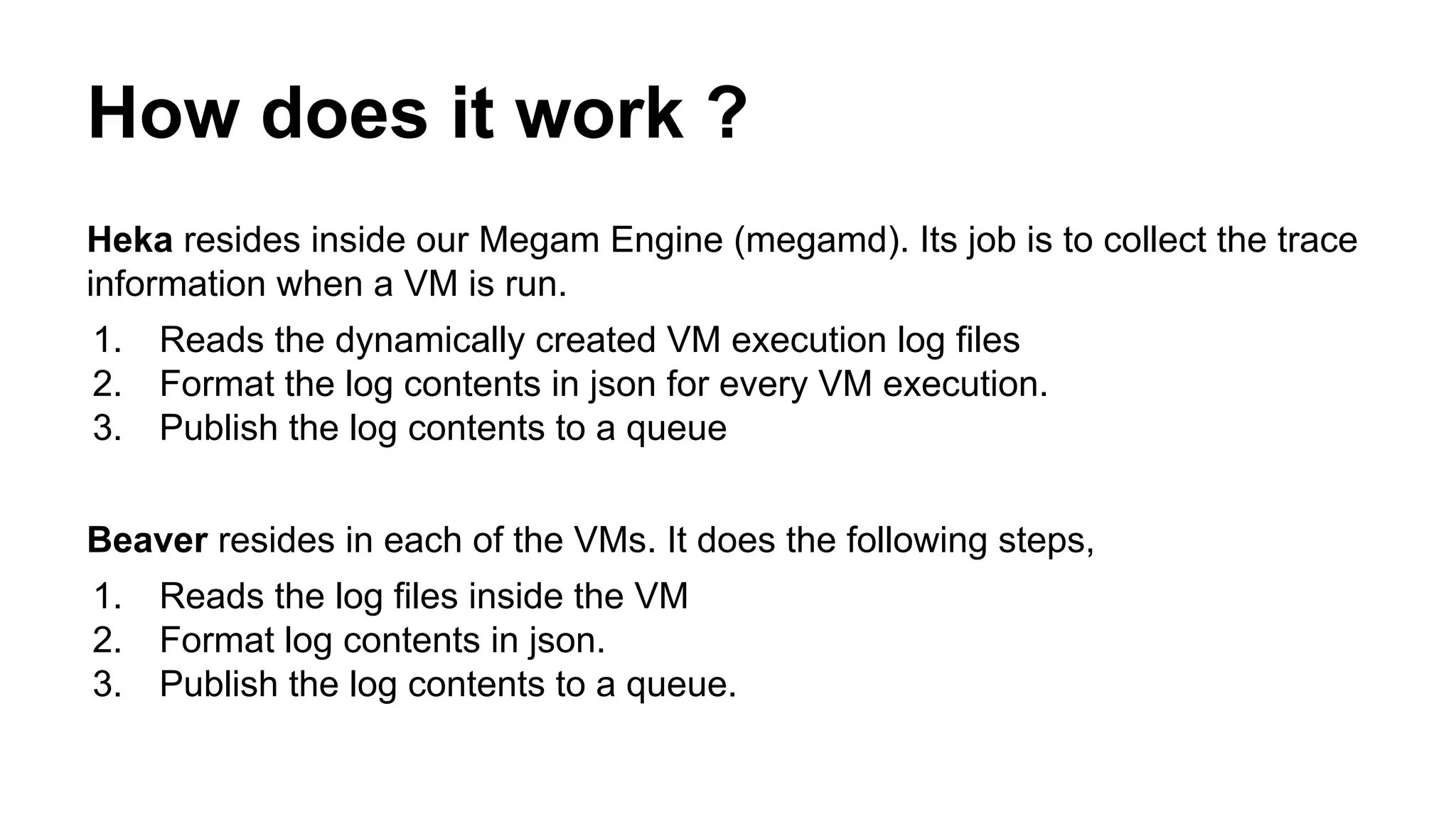

![Logstash Shipper - Sample conf](https://image.slidesharecdn.com/likelogglyusingopensource-140711030330-phpapp01/75/Like-loggly-using-open-source-15-2048.jpg)

    /opt/logstash/agent/etc$ sudo cat shipper.conf

    input {
      file {
        type => "access-log"
        path => [ "/usr/local/share/megam/megamd/logs/*/*" ]
      }
    }

    filter {
      grok {
        type => "access-log"
        match => [ "@source_path", "(//usr/local/share/megam/megamd/logs/)(?<source_key>.+)(//*)" ]
      }
    }

    output {
      stdout { debug => true debug_format => "json"}
      redis {
        key => '%{source_key}'
        type => "access-log"
        data_type => "channel"
        host => "my_redis_server.com"
      }
    }

Logs inside a `<source_key>` directory are shipped to the Redis key named `<source_key>`.
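The grok filter in the shipper configuration derives the Redis key from the directory a log file lives in. The following Python sketch illustrates that extraction outside Logstash. It is not the grok engine itself: the function name `source_key` is hypothetical, Python needs `(?P<name>...)` where grok uses `(?<name>...)`, and the pattern assumes the intent is "the first directory under the log root" (the doubled slashes in the transcribed pattern look like escapes lost in extraction, so they are not reproduced here).

```python
import re

# Log root taken from the file input's path setting in the slide.
LOG_ROOT = "/usr/local/share/megam/megamd/logs/"

# Assumed intent of the grok pattern: capture the first directory below
# LOG_ROOT as source_key, followed by at least a file name.
SOURCE_KEY = re.compile(re.escape(LOG_ROOT) + r"(?P<source_key>[^/]+)/.+")


def source_key(path):
    """Return the directory name used as the Redis key, or None if no match."""
    match = SOURCE_KEY.fullmatch(path)
    return match.group("source_key") if match else None
```

With this sketch, a file at `/usr/local/share/megam/megamd/logs/app1/access.log` maps to the key `app1`, while a path outside the log root yields `None`.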

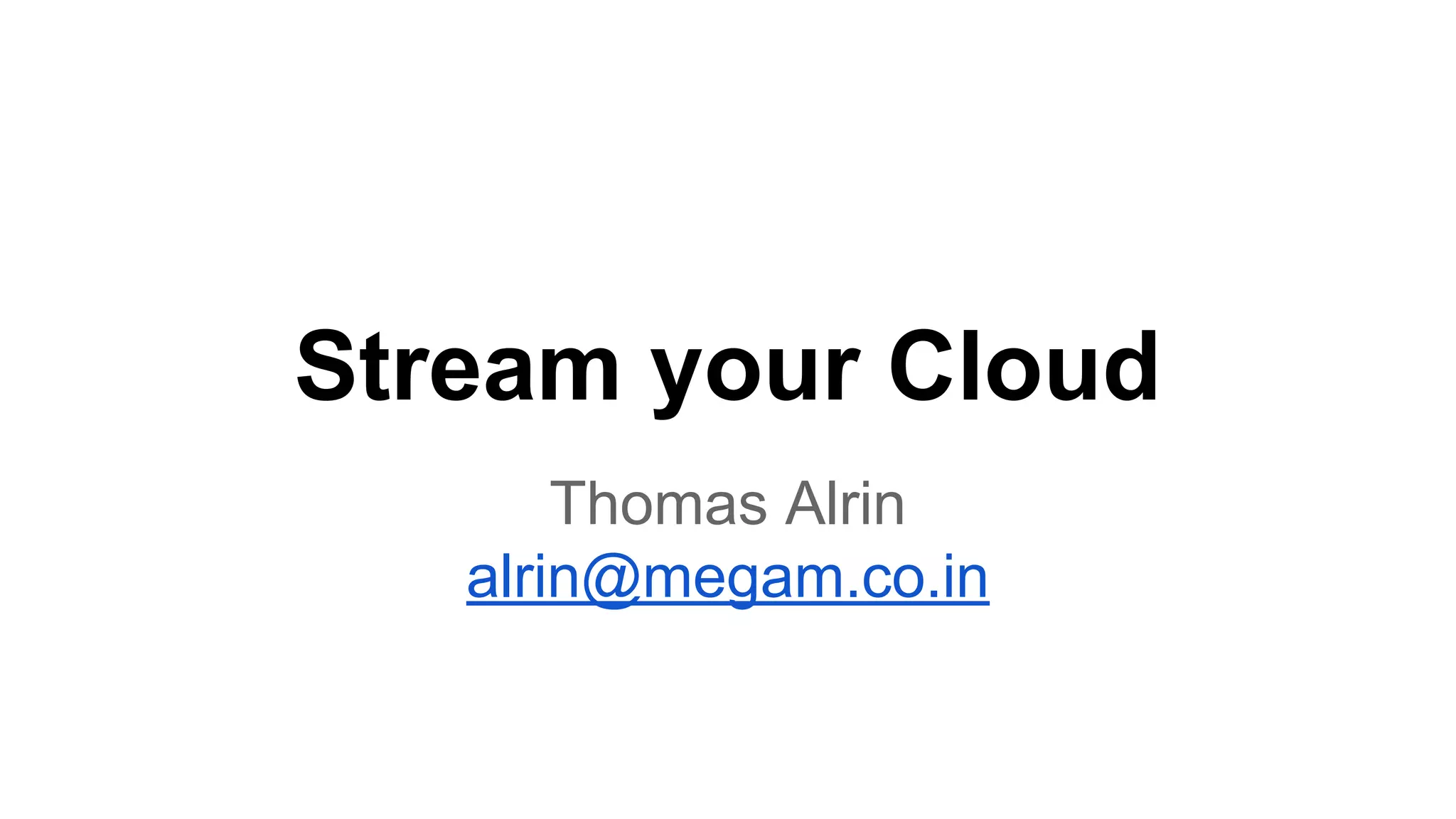

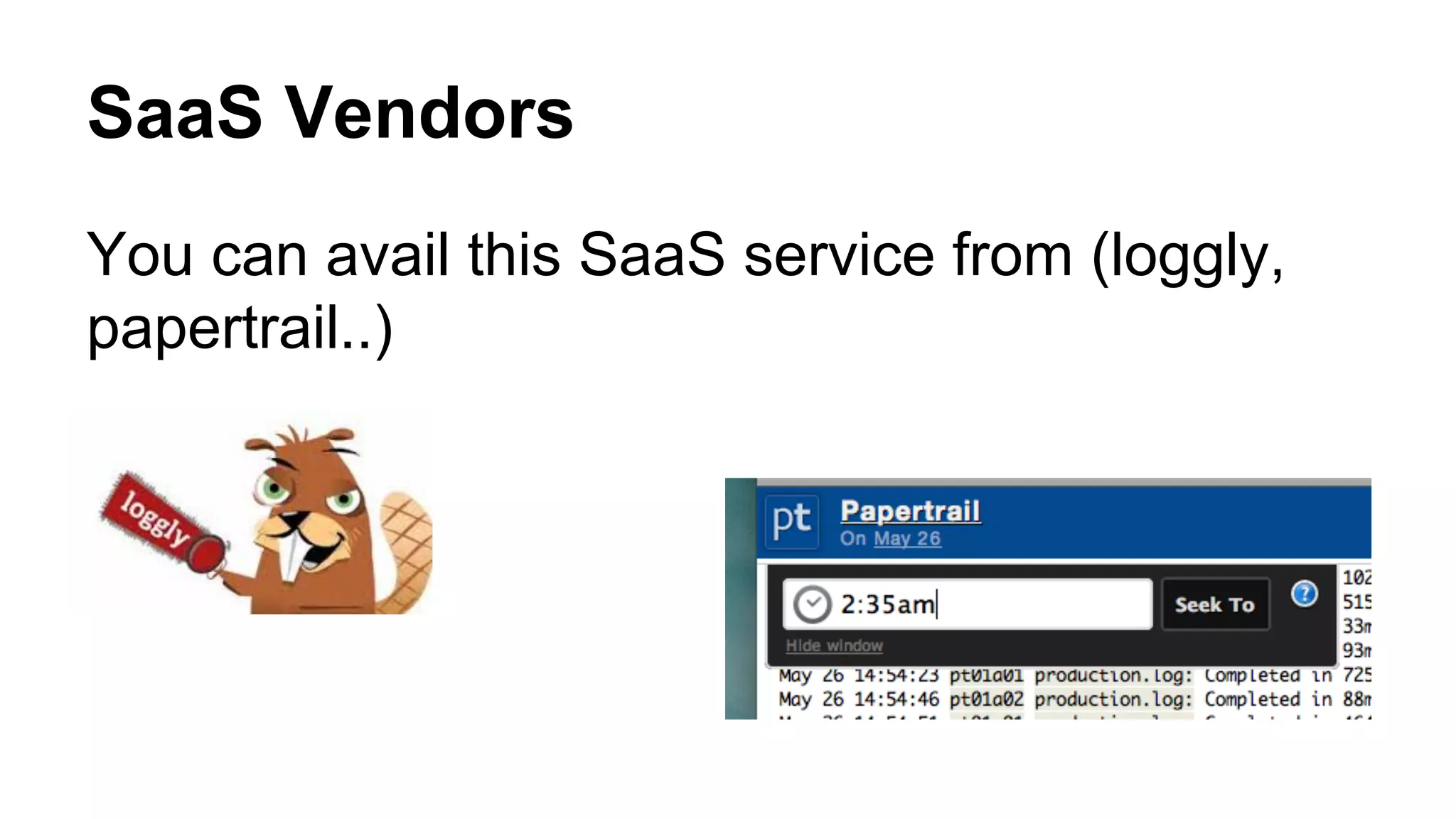

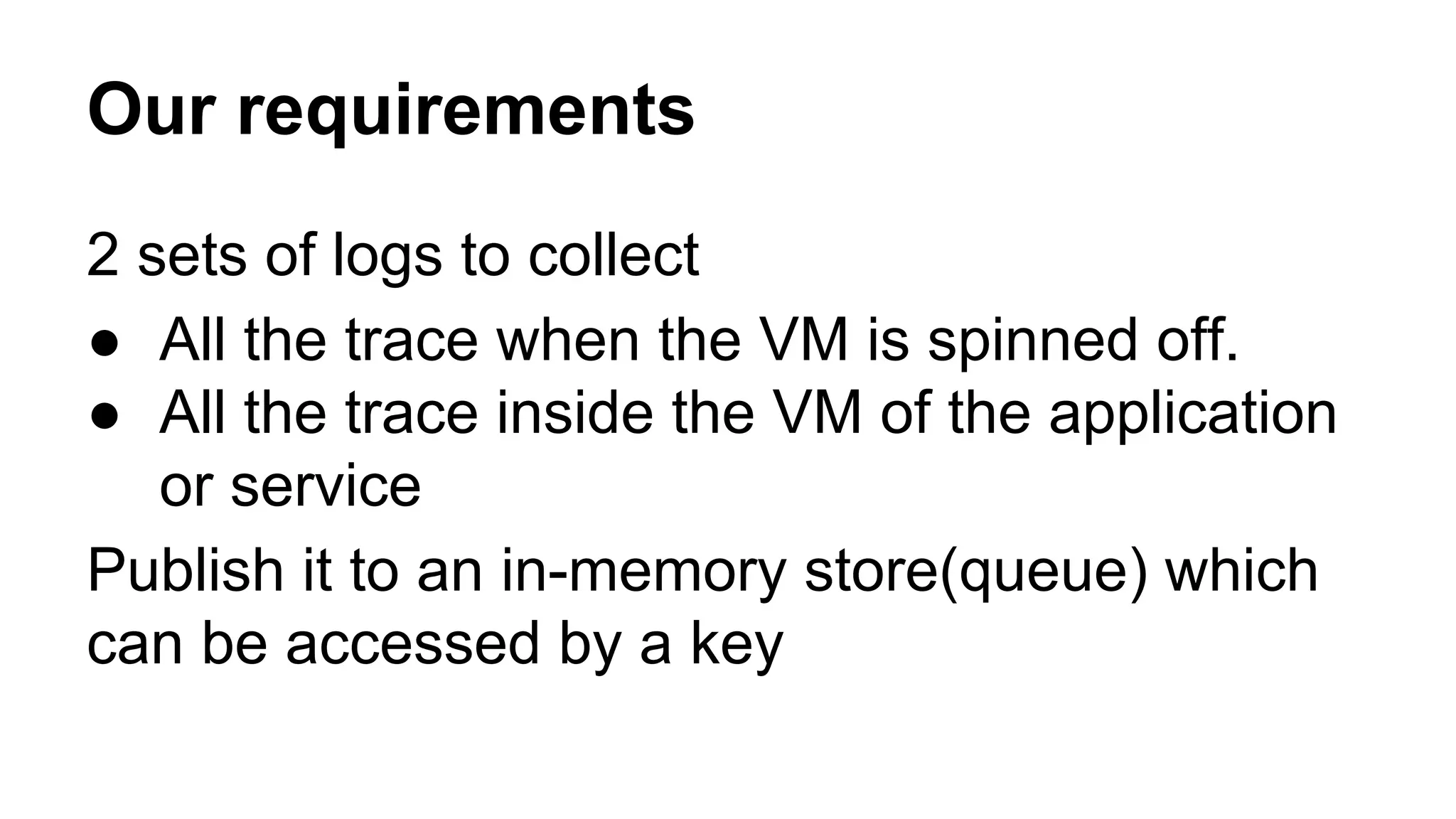

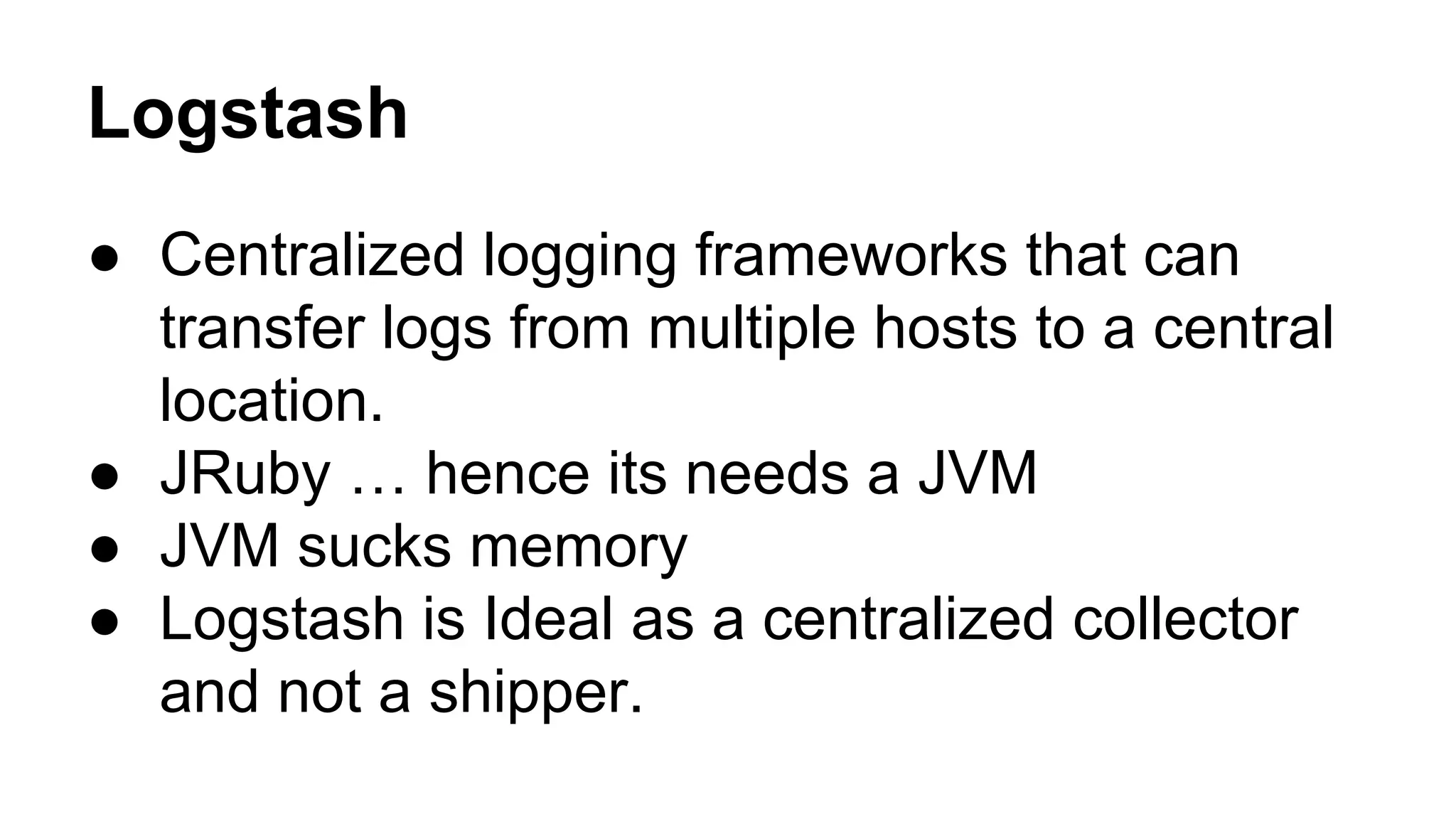

![Heka configuration](https://image.slidesharecdn.com/likelogglyusingopensource-140711030330-phpapp01/75/Like-loggly-using-open-source-20-2048.jpg)

    nano /etc/hekad.toml

    [TestWebserver]
    type = "LogstreamerInput"
    log_directory = "/usr/share/megam/heka/logs/"
    file_match = '(?P<DomainName>[^/]+)/(?P<FileName>[^/]+)'
    differentiator = ["DomainName", "_log"]

    [AMQPOutput]
    url = "amqp://guest:guest@localhost/"
    exchange = "test_tom"
    queue = true
    exchangeType = "fanout"
    message_matcher = 'TRUE'
    encoder = "JsonEncoder"

    [JsonEncoder]
    fields = [ "Timestamp", "Type", "Logger", "Payload", "Hostname" ]
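In the Heka configuration, `file_match` and `differentiator` together decide how each log file is named as a stream: differentiator entries that name a capture group are replaced by the captured text, and the remaining entries are used literally. The Python sketch below mimics that naming rule for illustration only. The function name `logger_name` is hypothetical, and matching the whole relative path with `fullmatch` is an assumption about how Heka anchors the pattern.

```python
import re

# Same pattern as file_match in the slide; Go's regexp package and Python's
# re module share the (?P<name>...) named-group syntax.
FILE_MATCH = re.compile(r"(?P<DomainName>[^/]+)/(?P<FileName>[^/]+)")
DIFFERENTIATOR = ["DomainName", "_log"]


def logger_name(relative_path, pattern=FILE_MATCH, differentiator=DIFFERENTIATOR):
    """Return the stream name for a path relative to log_directory, or None."""
    match = pattern.fullmatch(relative_path)
    if match is None:
        return None
    groups = match.groupdict()
    # Entries naming a capture group are substituted; others are literal text.
    return "".join(groups.get(part, part) for part in differentiator)
```

Under these assumptions, `app1.example.com/access.log` yields the name `app1.example.com_log`, so every domain directory gets its own stream.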

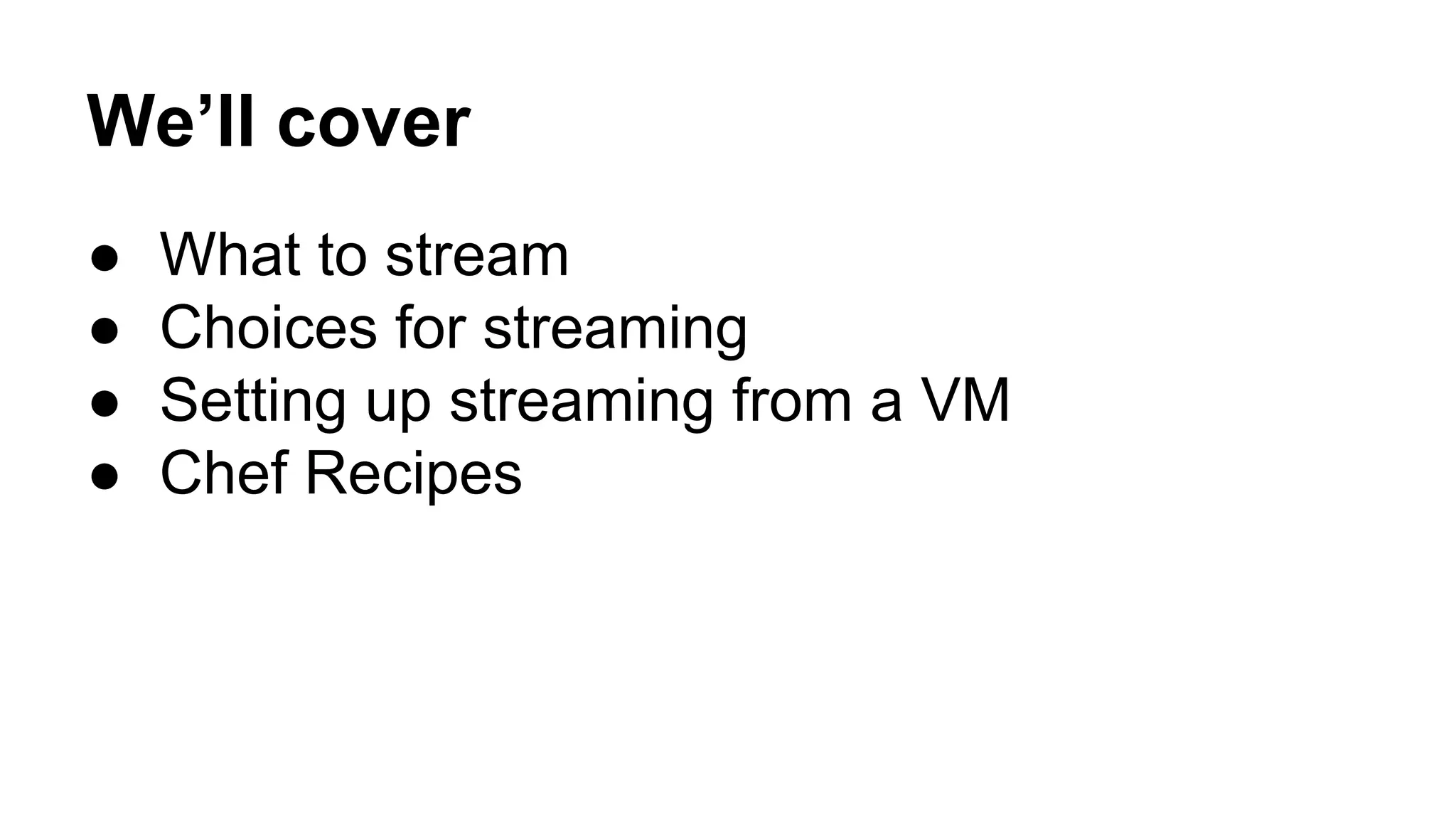

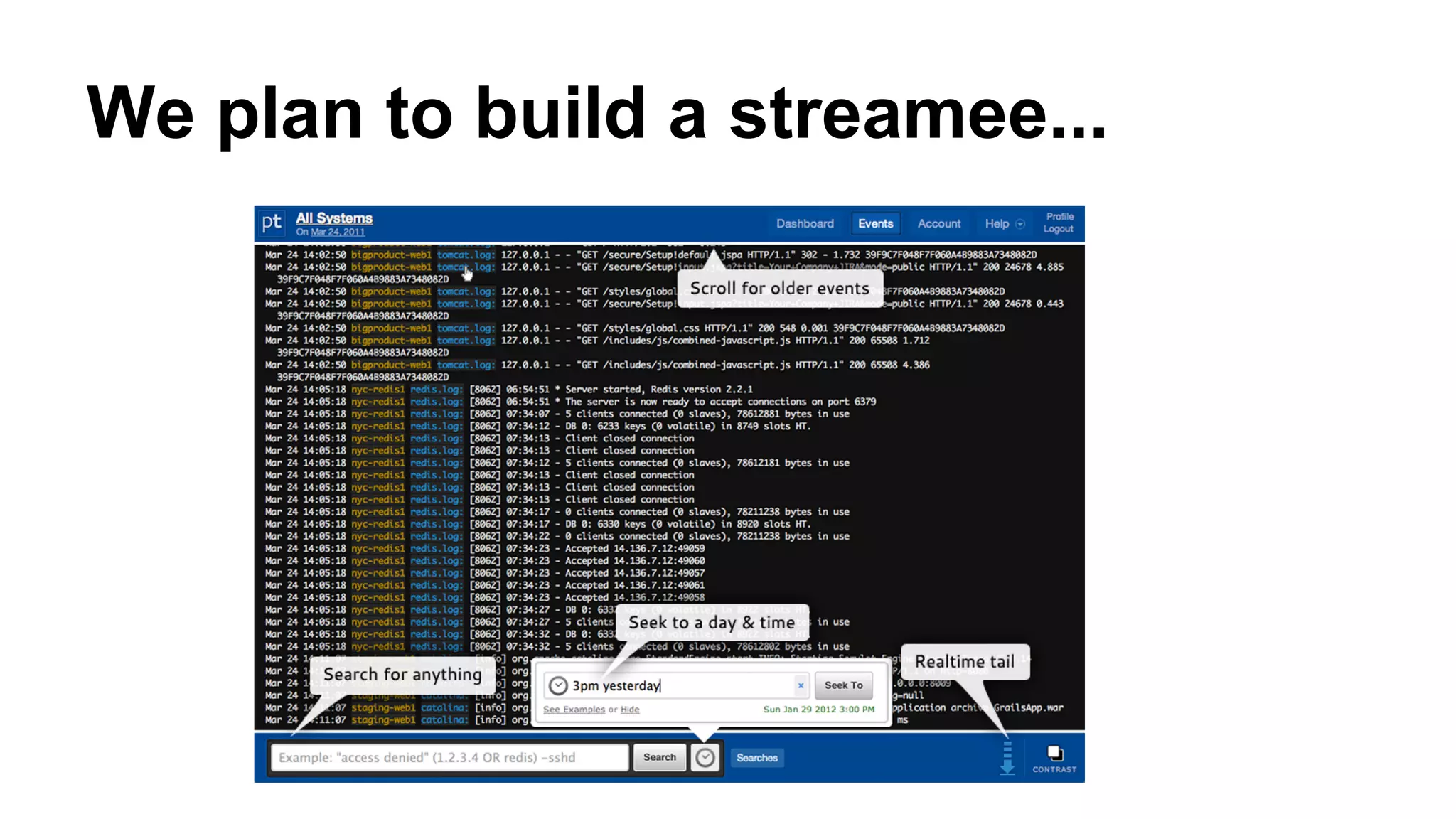

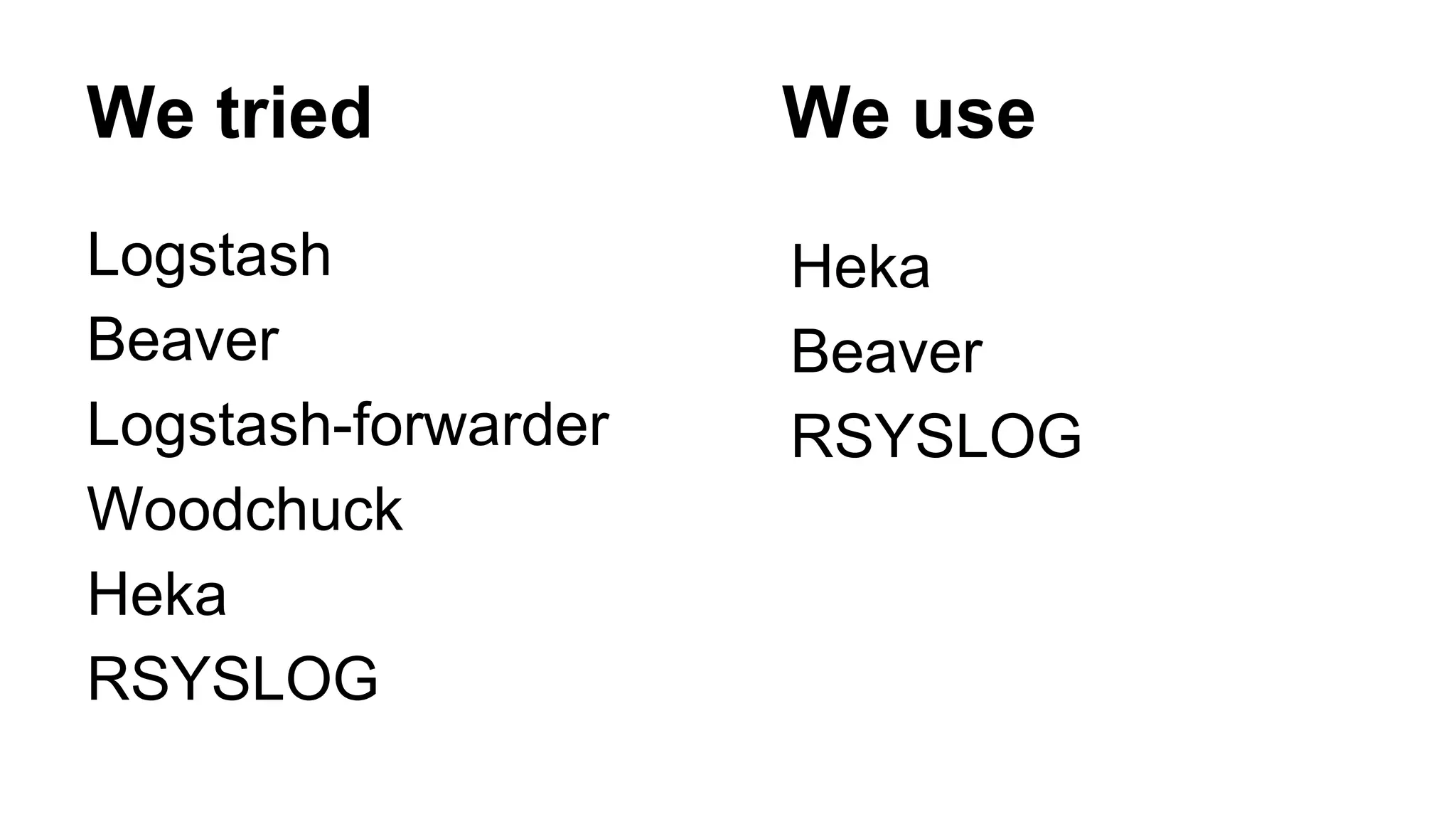

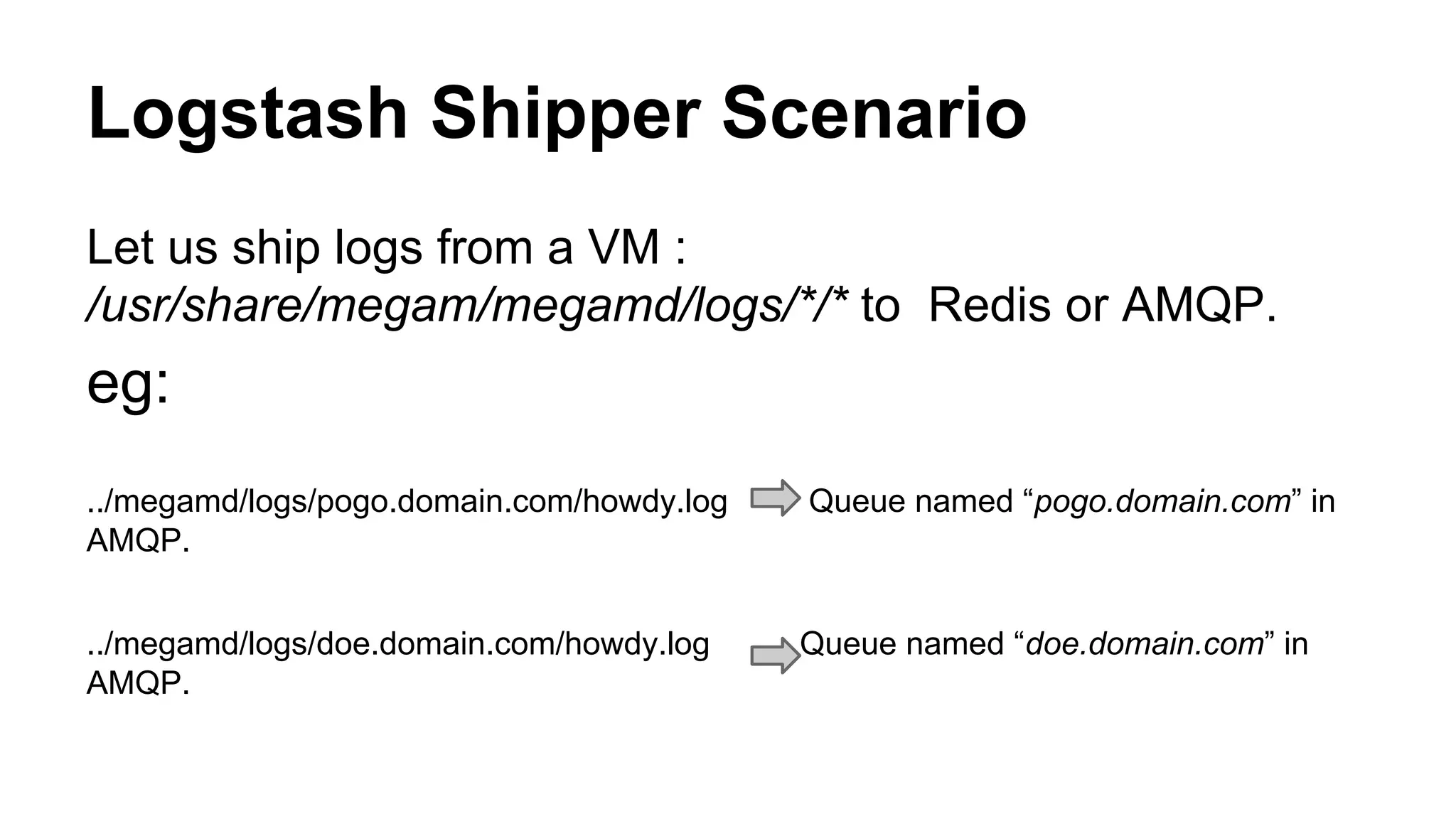

![Chef Recipe : Beaver](https://image.slidesharecdn.com/likelogglyusingopensource-140711030330-phpapp01/75/Like-loggly-using-open-source-24-2048.jpg)

When a VM is launched, the recipe `megam_logstash::beaver` is included.

    node.set['logstash']['key'] = "#{node.name}"
    node.set['logstash']['amqp'] = "#{node.name}_log"
    node.set['logstash']['beaver']['inputs'] = [ "/var/log/upstart/nodejs.log",
                                                 "/var/log/upstart/gulpd.log" ]

    include_recipe "megam_logstash::beaver"

Attributes such as the node name and the log files are set dynamically.
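The Beaver recipe sets three attributes per node and leaves the rest to the cookbook. The Python sketch below shows one way such attributes could be turned into a Beaver-style INI file. The slides do not show the cookbook's template, so everything past the attribute values is an assumption: the function names are hypothetical, the mapping of `key` and `amqp` onto `rabbitmq_key` and `rabbitmq_queue` is a guess, and `access-log` is borrowed from the Logstash example as a placeholder type.

```python
import configparser
import io


def beaver_attributes(node_name, log_files):
    """Mirror the attribute values the recipe derives from the node name."""
    return {
        "key": node_name,
        "amqp": f"{node_name}_log",
        "inputs": list(log_files),
    }


def render_beaver_conf(attrs, log_type="access-log"):
    """Render an INI config: one [beaver] section plus one section per file."""
    parser = configparser.ConfigParser(interpolation=None)
    # Assumed mapping; the real cookbook template may use other options.
    parser["beaver"] = {
        "rabbitmq_key": attrs["key"],
        "rabbitmq_queue": attrs["amqp"],
    }
    for path in attrs["inputs"]:
        parser[path] = {"type": log_type}
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()
```

Calling `render_beaver_conf(beaver_attributes("web1", ["/var/log/upstart/nodejs.log"]))` produces a `[beaver]` section with a queue named `web1_log` and one section for the watched file.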

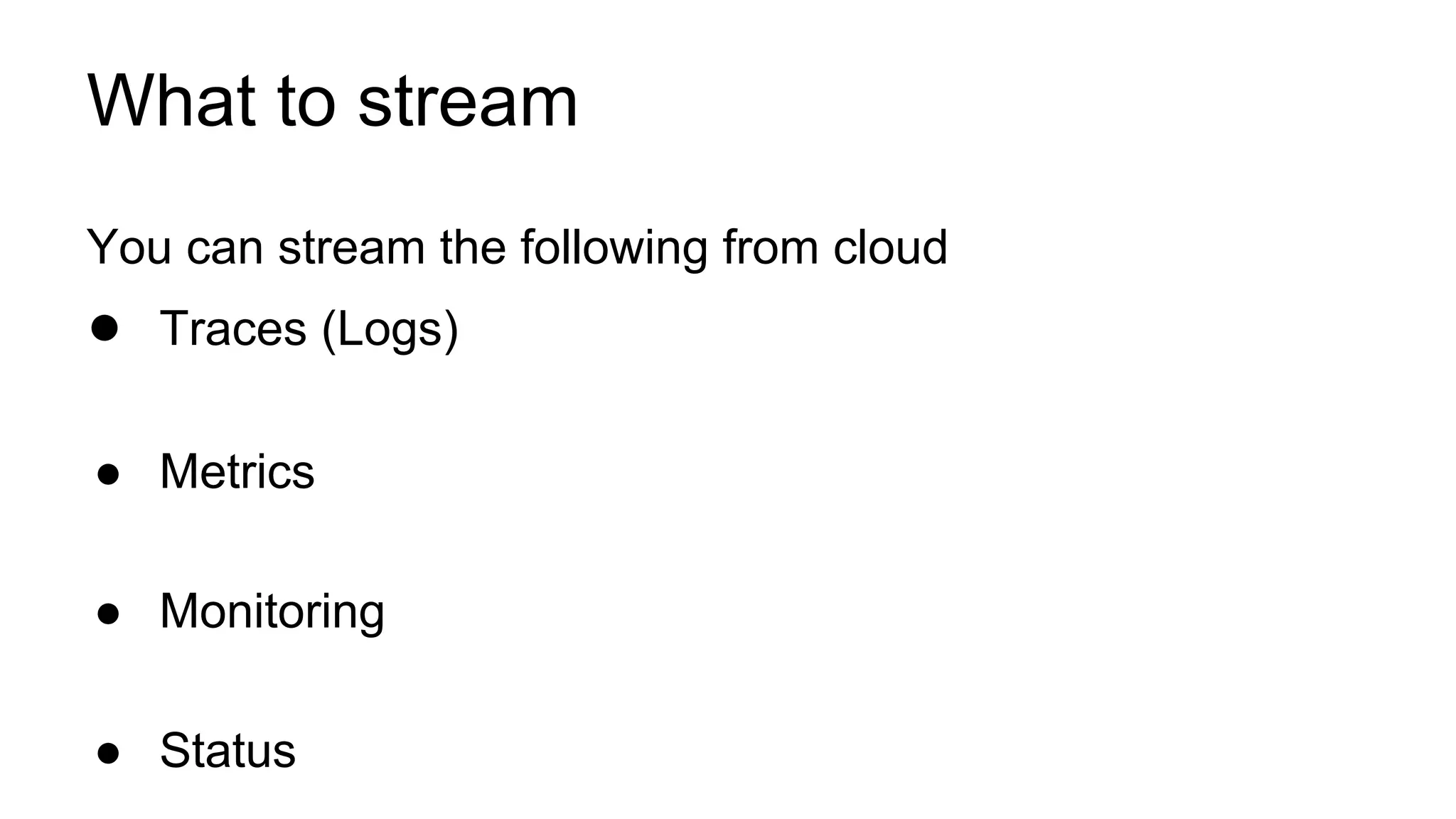

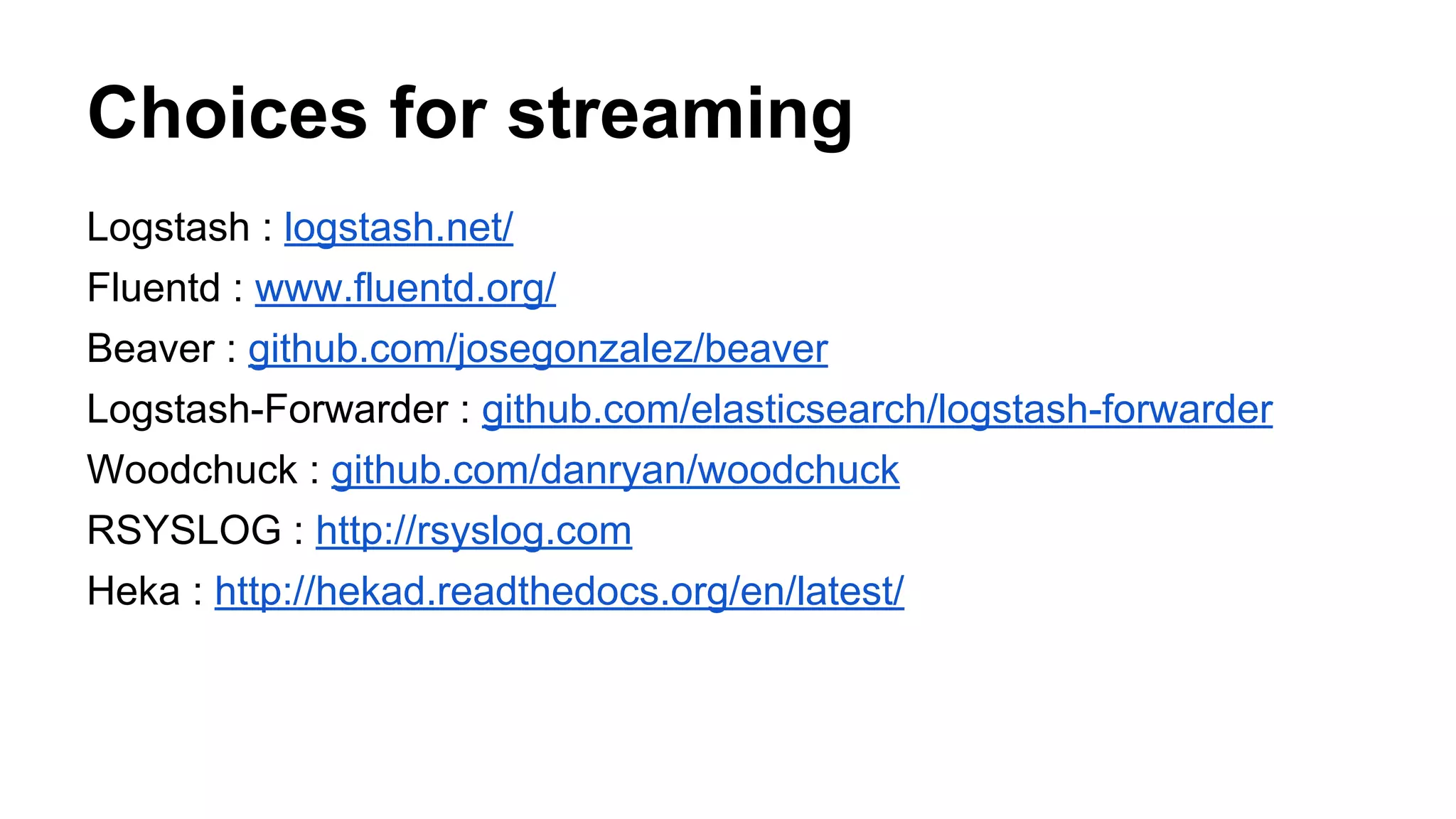

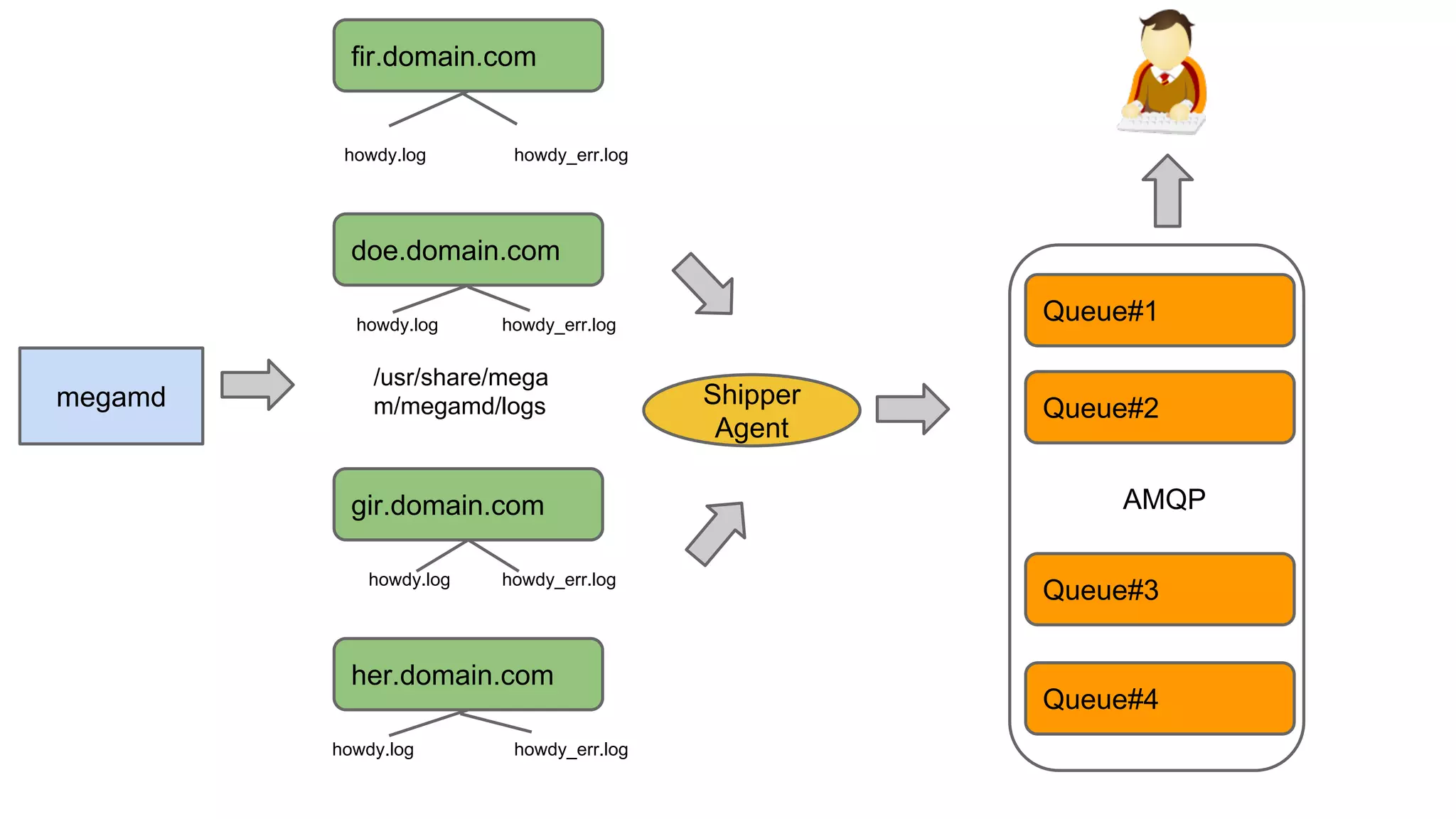

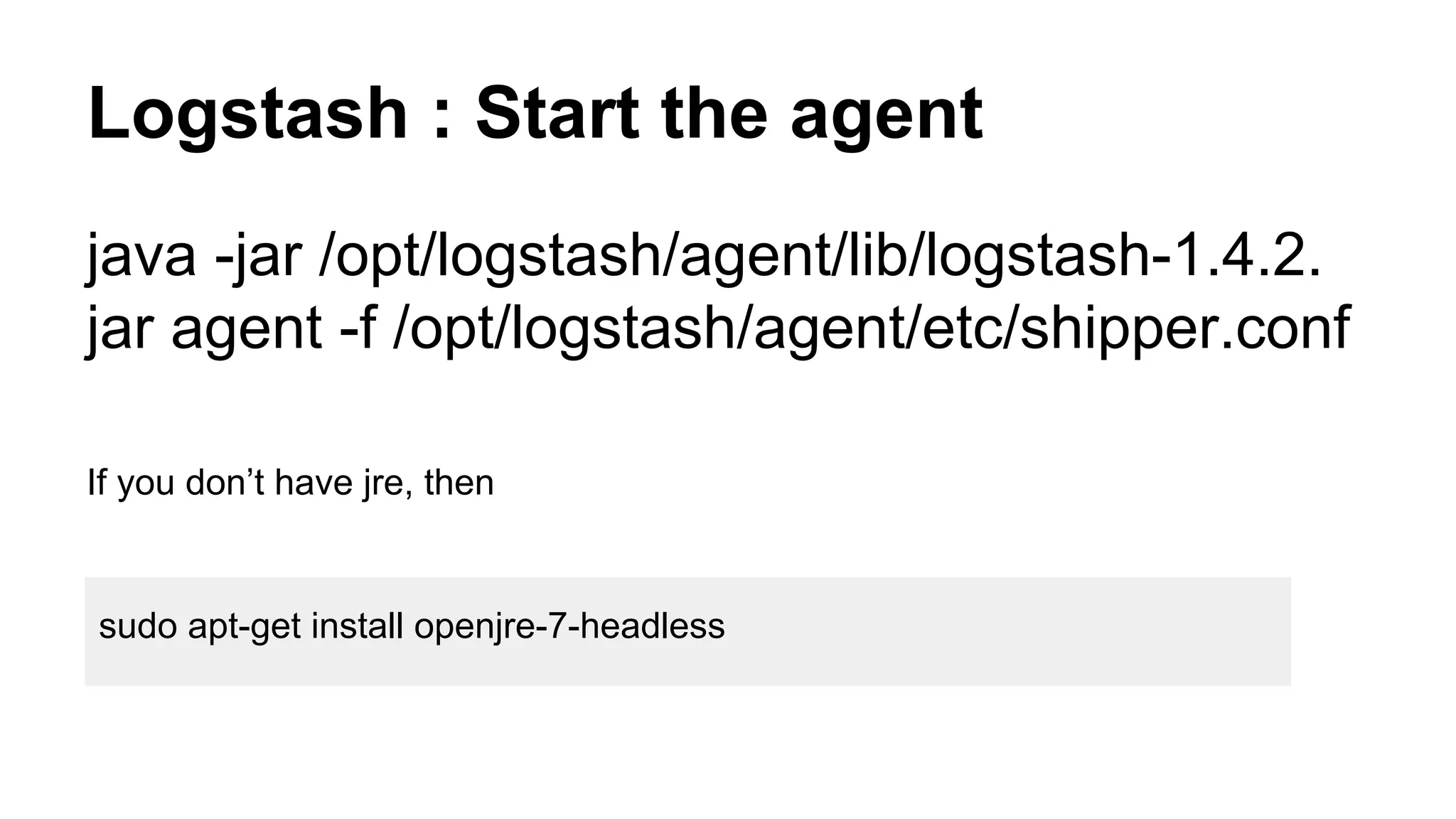

![Chef Recipe : Rsyslog](https://image.slidesharecdn.com/likelogglyusingopensource-140711030330-phpapp01/75/Like-loggly-using-open-source-26-2048.jpg)

When a VM is launched, the recipe `megam_logstash::rsyslog` is included.

    node.set['rsyslog']['index'] = "#{node.name}"
    node.set['rsyslog']['elastic_ip'] = "monitor.megam.co.in"
    node.set['rsyslog']['input']['files'] = [ "/var/log/upstart/nodejs.log",
                                              "/var/log/upstart/gulpd.log" ]

    include_recipe "megam_logstash::rsyslog"

Attributes such as the node name and the log files are set dynamically.