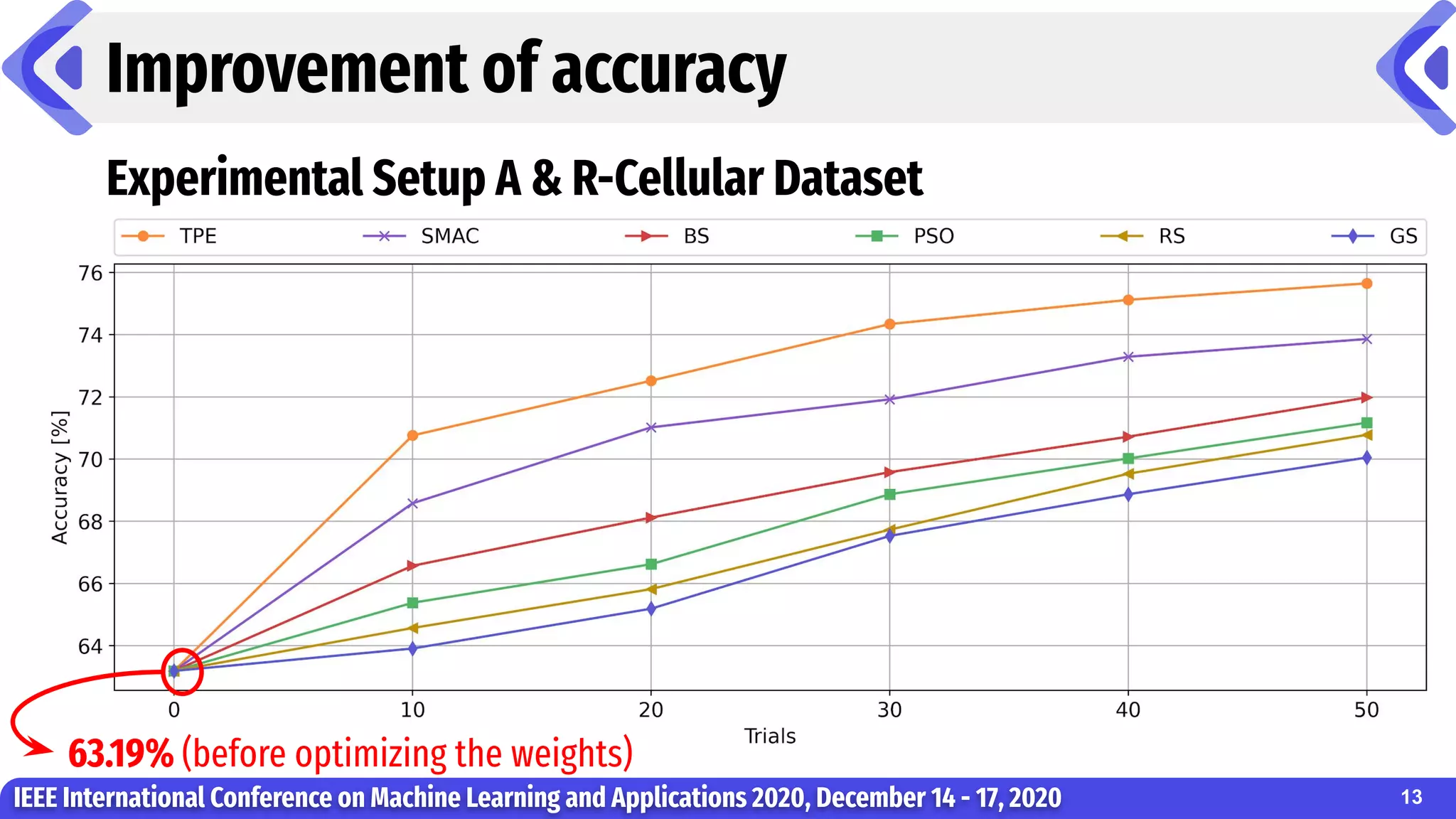

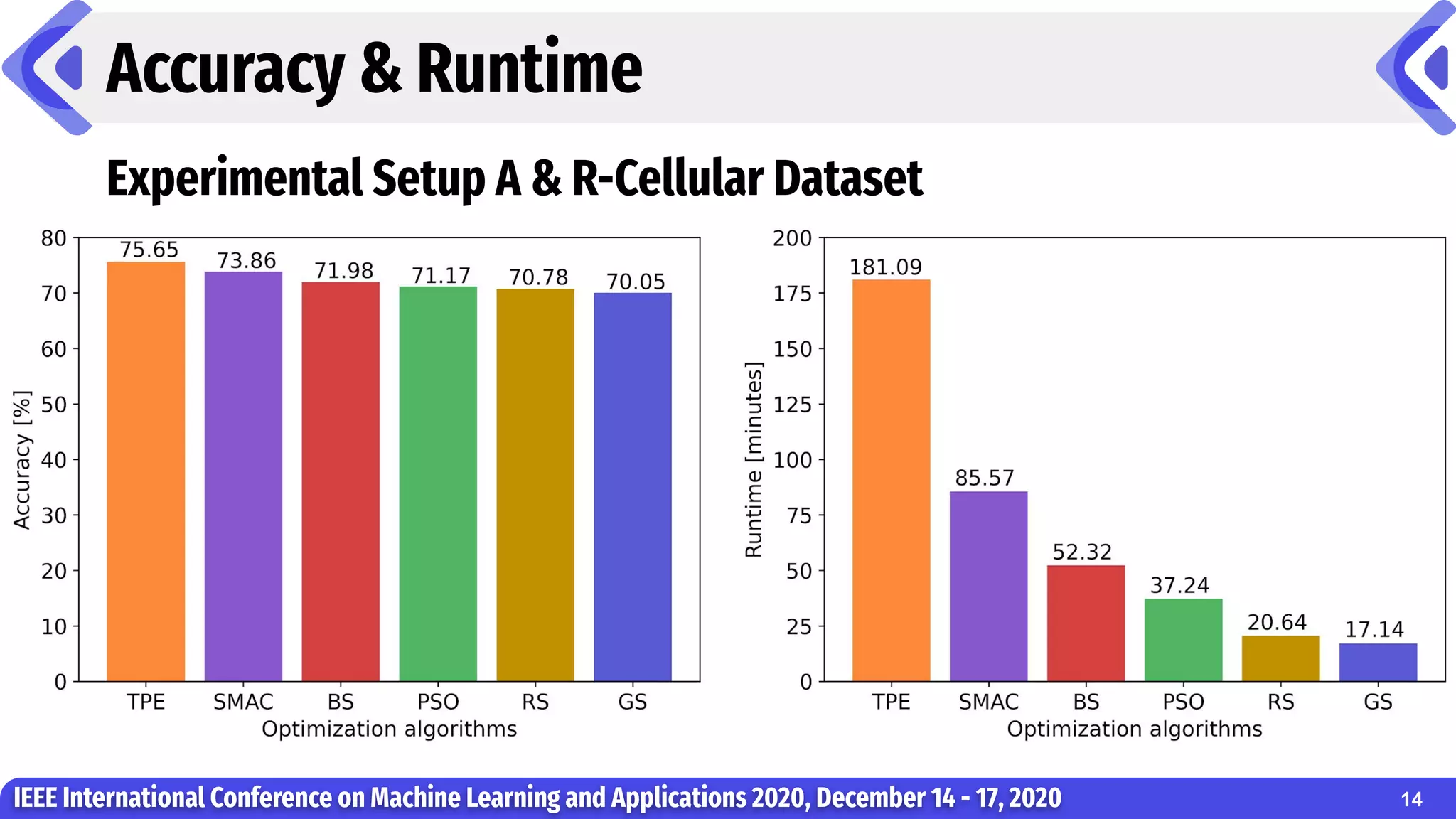

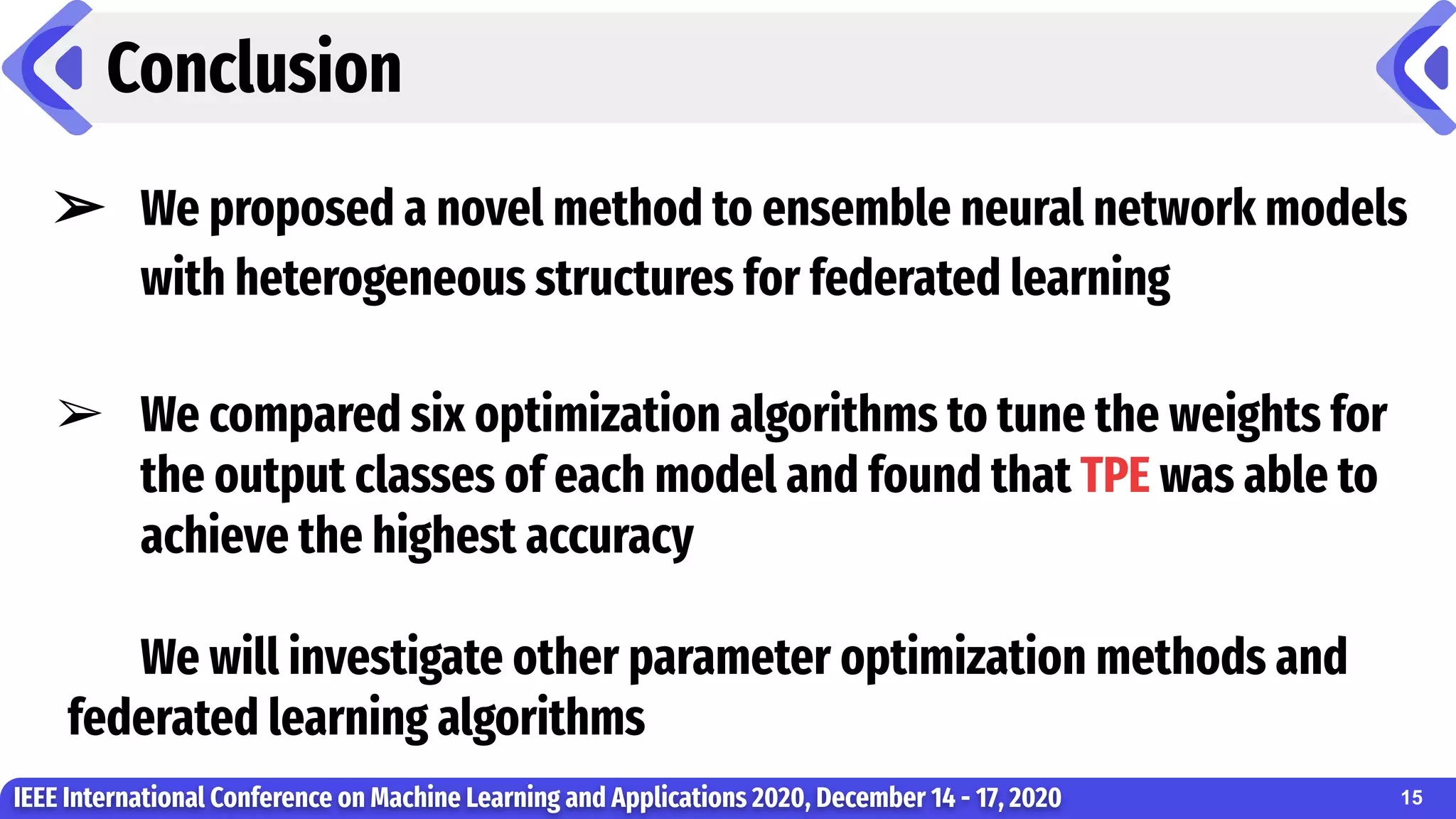

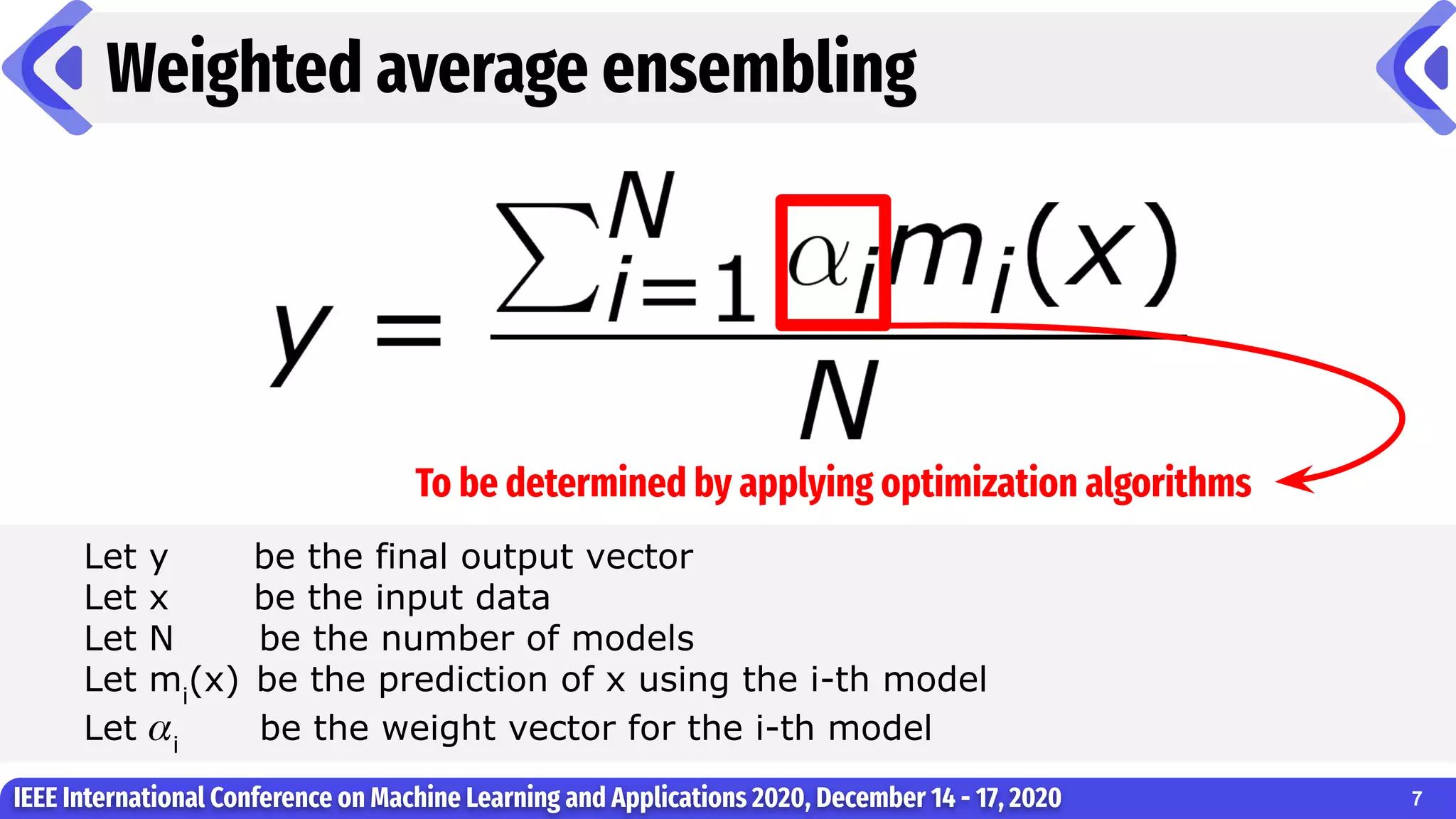

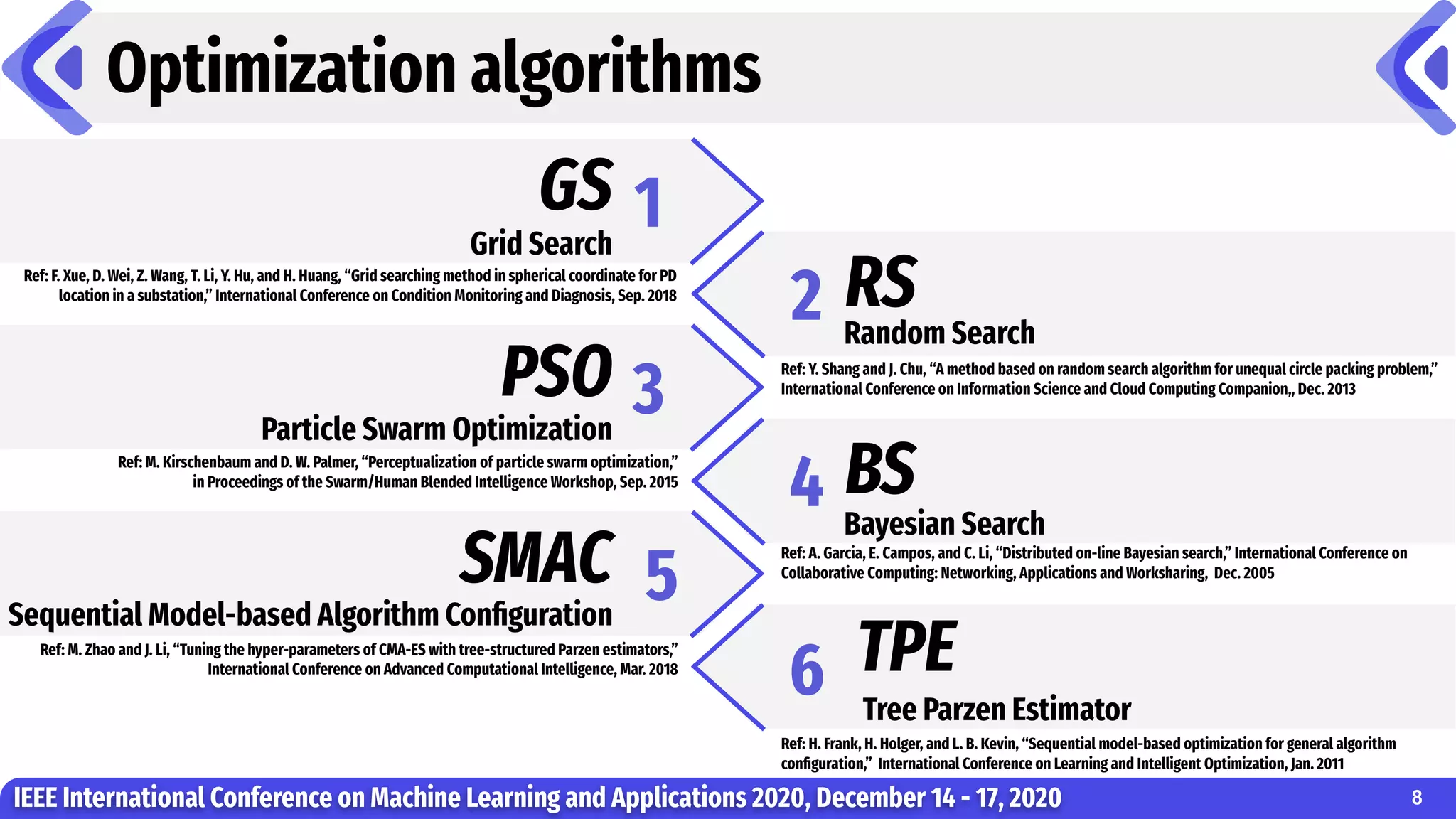

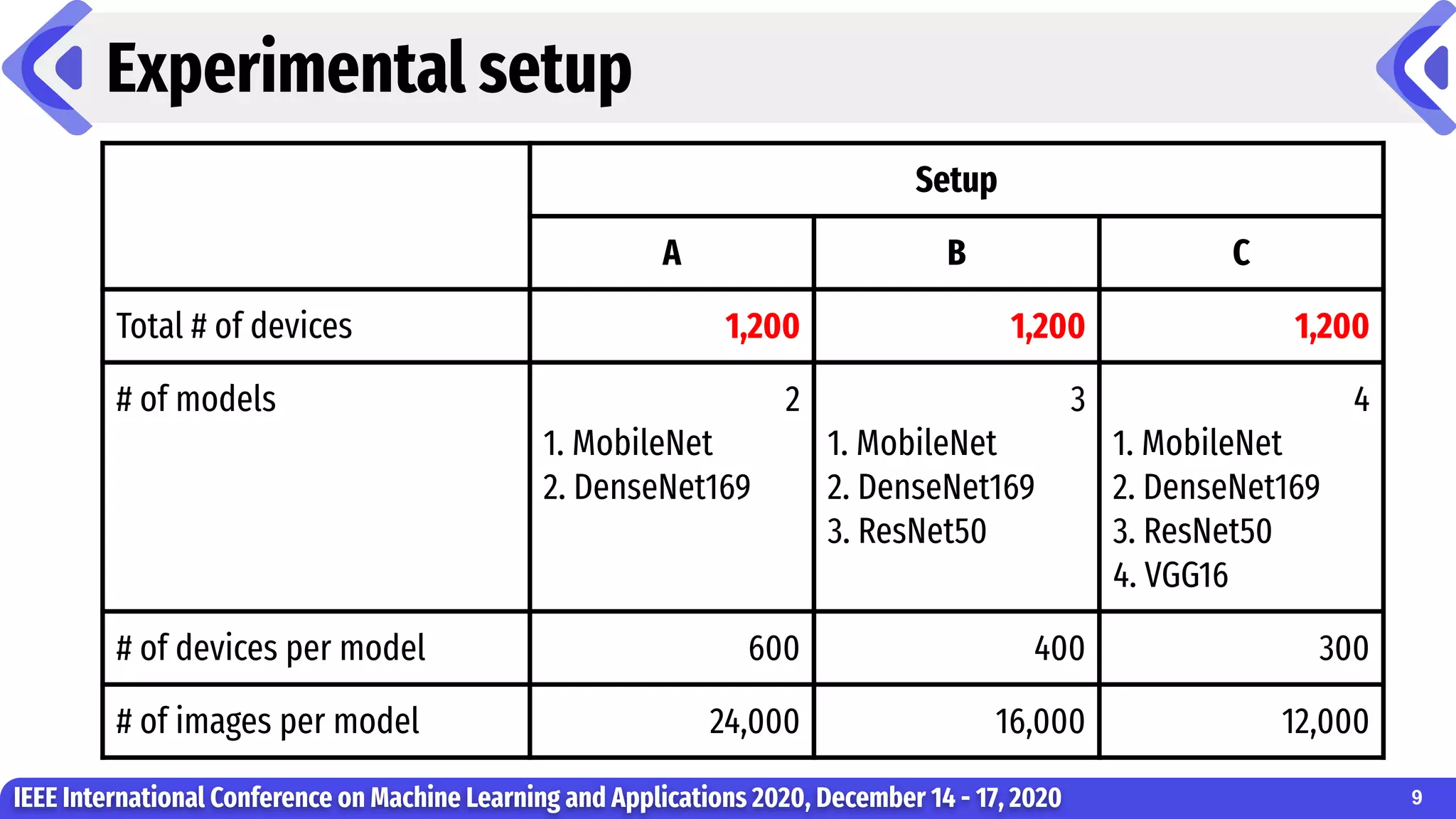

The document presents a federated learning method that ensembles neural network models with heterogeneous structures. It compares six optimization algorithms for tuning the weights of the weighted-average ensemble. The Tree-structured Parzen Estimator (TPE) yields the highest accuracy. The study also highlights two benefits of federated learning, better data privacy and shorter response time. It addresses the challenges posed by model heterogeneity and limited resources.
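TPE proposes each new set of ensemble weights from past trials. It sorts the trials by loss and fits one density l(x) to the best fraction and another density g(x) to the rest. It then picks the candidate that maximizes l(x)/g(x). The sketch below is a simplified TPE in plain Python, not the study's implementation. Each dimension is modeled independently, as in the standard TPE formulation. The kernel bandwidth is fixed, which is a simplification. The weights live in [0, 1]. The function names and the toy objective are hypothetical. In the described setting, the objective would instead be a loss derived from the ensemble's validation accuracy.

```python
import math
import random


def parzen_logpdf(x, centers, bandwidth):
    """Log-density at x of a 1-D Gaussian Parzen estimator (one kernel per center)."""
    norm = 1.0 / (len(centers) * bandwidth * math.sqrt(2.0 * math.pi))
    total = sum(math.exp(-0.5 * ((x - c) / bandwidth) ** 2) for c in centers)
    return math.log(norm * total + 1e-300)  # tiny floor avoids log(0)


def tpe_minimize(objective, dim, n_trials=60, n_startup=10, gamma=0.25,
                 n_candidates=24, bandwidth=0.1, seed=0):
    """Simplified TPE over [0, 1]^dim; returns the best (params, loss) seen.

    Assumed simplifications: independent per-dimension densities and a
    fixed kernel bandwidth instead of an adaptive one.
    """
    rng = random.Random(seed)
    history = []  # (params, loss) for every trial so far
    for trial in range(n_trials):
        if trial < max(n_startup, 2):
            # Warm-up phase: uniform random sampling.
            x = [rng.random() for _ in range(dim)]
        else:
            # Split past trials into the best gamma fraction ("good") and the rest ("bad").
            ranked = sorted(history, key=lambda h: h[1])
            n_good = min(len(ranked) - 1, max(1, math.ceil(gamma * len(ranked))))
            good = [p for p, _ in ranked[:n_good]]
            bad = [p for p, _ in ranked[n_good:]]
            best_score, x = -math.inf, None
            for _ in range(n_candidates):
                # Sample a candidate from l(x): jitter a randomly chosen good point.
                base = rng.choice(good)
                cand = [min(1.0, max(0.0, rng.gauss(b, bandwidth))) for b in base]
                # Keep the candidate maximizing l(x) / g(x), i.e. log l - log g.
                score = sum(
                    parzen_logpdf(cand[d], [p[d] for p in good], bandwidth)
                    - parzen_logpdf(cand[d], [p[d] for p in bad], bandwidth)
                    for d in range(dim)
                )
                if score > best_score:
                    best_score, x = score, cand
        history.append((x, objective(x)))
    return min(history, key=lambda h: h[1])


# Hypothetical stand-in objective: pretend the best ensemble weights are (0.3, 0.7).
def toy_loss(w):
    return (w[0] - 0.3) ** 2 + (w[1] - 0.7) ** 2


best_w, best_loss = tpe_minimize(toy_loss, dim=2)
print(best_w, best_loss)
```

The first trials are purely random so that the good and bad densities have data to fit. Production code would more likely rely on an established implementation, for example Optuna's `TPESampler`.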

![Federated Learning

Algorithm

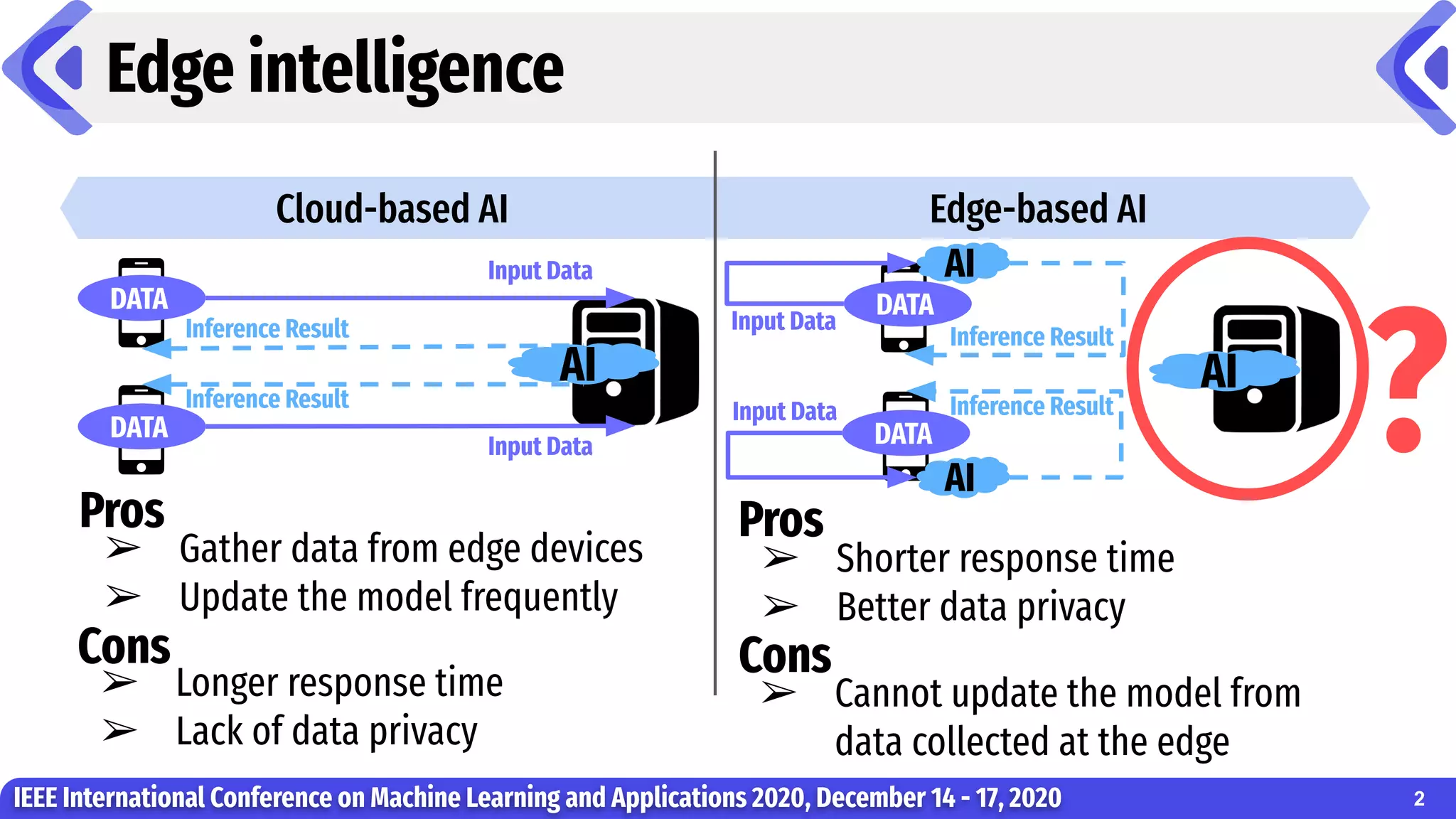

Federated learning for heterogeneous models

IEEE International Conference on Machine Learning and Applications 2020, December 14 - 17, 2020

DATA

DATA

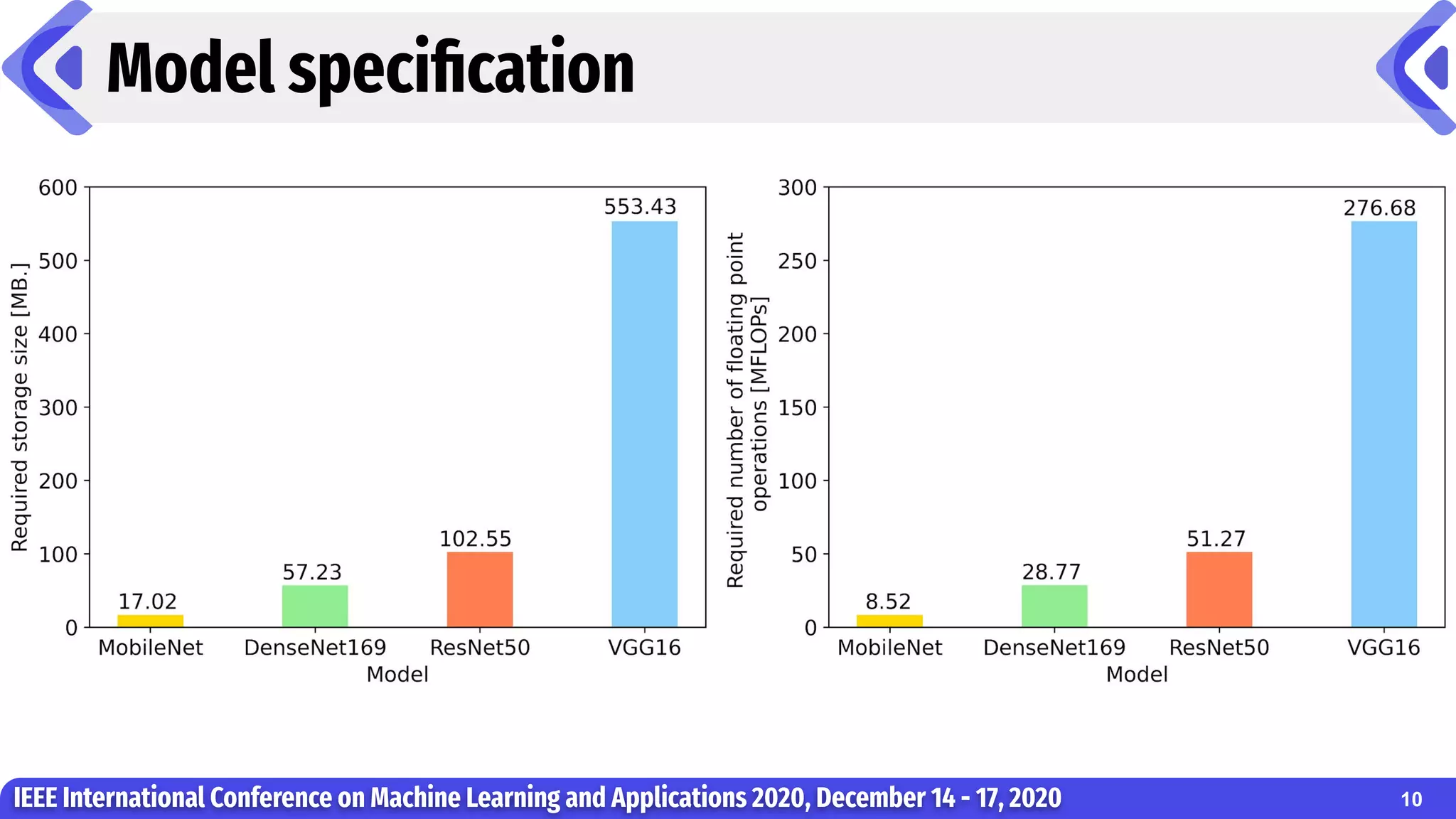

MobileNet

[17.02 MB.]

VGG16

[553.43 MB.]

Proposed

Method

5

Updated parameters of MobileNet

Updated parameters of VGG16](https://image.slidesharecdn.com/federatedlearningofneuralnetworkmodelswithheterogeneousstructures-220502101732/75/Federated-Learning-of-Neural-Network-Models-with-Heterogeneous-Structures-pdf-5-2048.jpg)
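Standard FedAvg builds the global model as the sample-weighted average of the client parameters, $w = \sum_k \frac{n_k}{n} w_k$. Here $n_k$ is the number of local samples on client $k$ and $n = \sum_k n_k$. This element-wise average is only defined when every client shares the same architecture. A MobileNet and a VGG16 therefore cannot be averaged directly. The minimal sketch below assumes a hypothetical representation in which parameters are a dict from layer name to a flattened list of floats. This is not a real framework API. The sketch rejects updates whose architectures differ.

```python
from typing import Dict, List, Tuple

# Hypothetical toy representation: layer name -> flattened weights.
Params = Dict[str, List[float]]


def fedavg(updates: List[Tuple[Params, int]]) -> Params:
    """Average client parameters, weighting each client by its local sample count."""
    total = sum(n for _, n in updates)
    ref = updates[0][0]
    # Element-wise averaging requires identical layer names and sizes.
    for params, _ in updates:
        if params.keys() != ref.keys() or any(len(params[k]) != len(ref[k]) for k in ref):
            raise ValueError("FedAvg needs identical architectures across clients")
    return {
        name: [sum(p[name][i] * n for p, n in updates) / total
               for i in range(len(ref[name]))]
        for name in ref
    }


client_a = {"layer": [1.0, 2.0]}  # toy model trained on 1 local sample
client_b = {"layer": [3.0, 4.0]}  # same architecture, 3 local samples
print(fedavg([(client_a, 1), (client_b, 3)]))  # {'layer': [2.5, 3.5]}
```

The same averaging could only be applied to heterogeneous clients by running it separately for each architecture.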

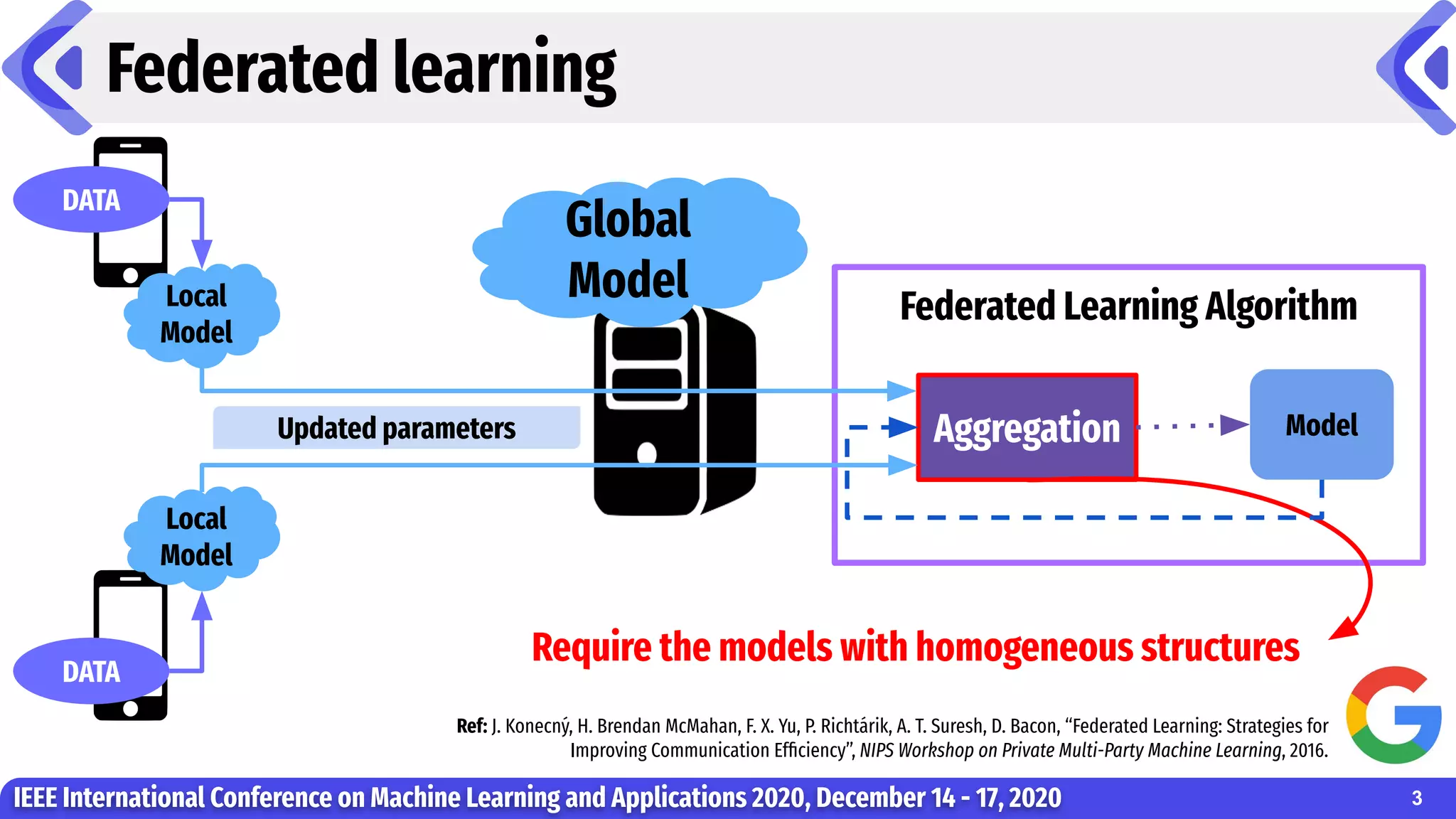

![Proposed method: clients holding local data train either Model 1 or Model 2. The federated learning algorithm FedAVG aggregates each model separately, and the centralized server combines the aggregated Model 1 and Model 2 by weighted average ensembling.](https://image.slidesharecdn.com/federatedlearningofneuralnetworkmodelswithheterogeneousstructures-220502101732/75/Federated-Learning-of-Neural-Network-Models-with-Heterogeneous-Structures-pdf-6-2048.jpg)
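Weighted average ensembling combines the predictions of the separately aggregated Model 1 and Model 2. The sketch below rests on three assumptions the slide does not state. Each model outputs per-class probabilities. The weights are non-negative. The weights are normalized to sum to one. The helper names `weighted_ensemble` and `accuracy` and the probability values are hypothetical.

```python
from typing import List, Sequence


def weighted_ensemble(probs_per_model: Sequence[Sequence[Sequence[float]]],
                      weights: Sequence[float]) -> List[List[float]]:
    """Weighted average of class probabilities; probs_per_model[m][i][c] is
    model m's probability for class c on sample i."""
    total = sum(weights)
    if total <= 0 or any(w < 0 for w in weights):
        raise ValueError("weights must be non-negative with a positive sum")
    norm = [w / total for w in weights]  # normalize so the weights sum to 1
    n_samples = len(probs_per_model[0])
    n_classes = len(probs_per_model[0][0])
    return [
        [sum(norm[m] * probs_per_model[m][i][c] for m in range(len(norm)))
         for c in range(n_classes)]
        for i in range(n_samples)
    ]


def accuracy(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Fraction of samples whose highest-probability class matches the label."""
    preds = [max(range(len(p)), key=p.__getitem__) for p in probs]
    return sum(int(p == y) for p, y in zip(preds, labels)) / len(labels)


model1 = [[0.9, 0.1], [0.4, 0.6], [0.6, 0.4]]  # hypothetical Model 1 outputs
model2 = [[0.6, 0.4], [0.2, 0.8], [0.3, 0.7]]  # hypothetical Model 2 outputs
labels = [0, 1, 1]
print(accuracy(weighted_ensemble([model1, model2], [1, 1]), labels))  # 1.0
print(accuracy(weighted_ensemble([model1, model2], [3, 1]), labels))  # 0.6666666666666666
```

On this toy data, moving the weights from (1, 1) to (3, 1) lowers the ensemble's accuracy. This is why the ensemble weights are worth tuning with an optimizer rather than fixing by hand.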

![Datasets](https://image.slidesharecdn.com/federatedlearningofneuralnetworkmodelswithheterogeneousstructures-220502101732/75/Federated-Learning-of-Neural-Network-Models-with-Heterogeneous-Structures-pdf-11-2048.jpg)

Datasets

| Name | # of images | # of output classes |
|---|---|---|
| CIFAR-10 [1] | 70,000 | 10 |
| CIFAR-100 [2] | 70,000 | 100 |
| ImageNet [3] | 100,000 | 1,000 |
| R-Cellular [4] | 73,000 | 1,108 |

References:

[1] CIFAR-10: https://www.cs.toronto.edu/~kriz/cifar.html#CIFAR-10

[2] CIFAR-100: https://www.cs.toronto.edu/~kriz/cifar.html#CIFAR-100

[3] ImageNet: http://image-net.org/download

[4] Recursion Cellular Image Classification (Kaggle): https://www.kaggle.com/c/recursion-cellular-image-classification