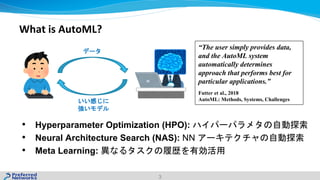

The document discusses two papers presented at NeurIPS 2018 on AutoML:

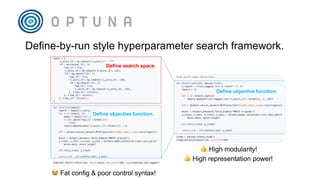

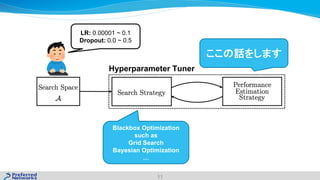

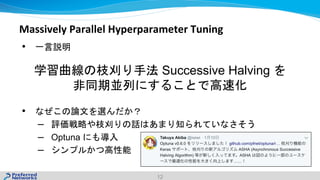

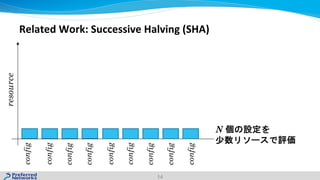

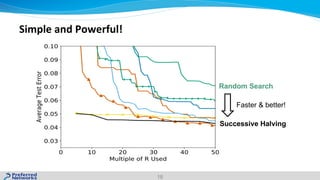

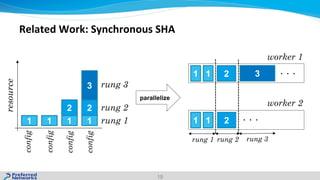

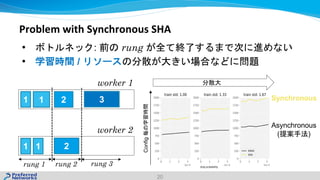

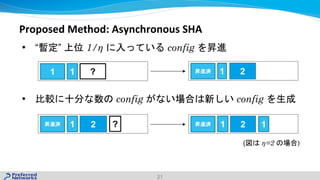

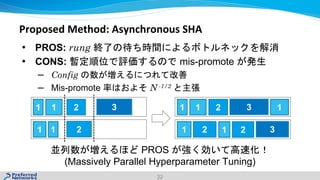

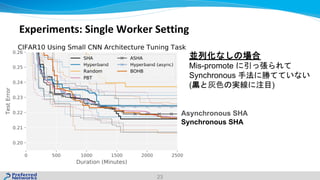

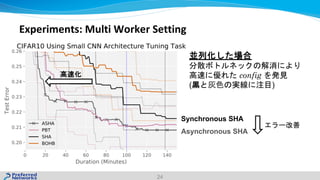

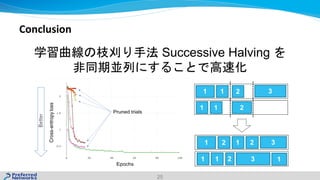

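1) "Massively Parallel Hyperparameter Tuning", which proposes an asynchronous successive halving algorithm (ASHA) for parallel hyperparameter tuning. Instead of waiting for a whole rung of configurations to finish, as synchronous successive halving does, it promotes a configuration as soon as it ranks in the top fraction of the results seen so far. This keeps workers busy, at the cost of occasionally mis-promoting a configuration.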

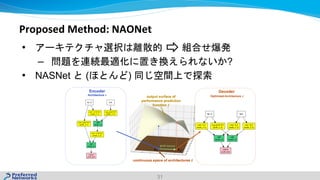

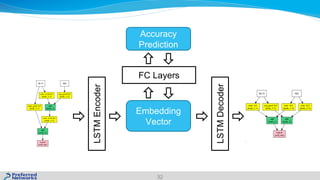

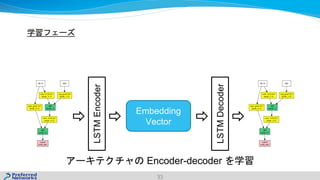

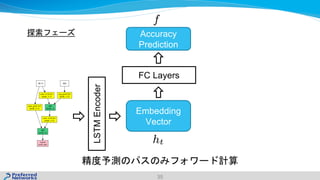

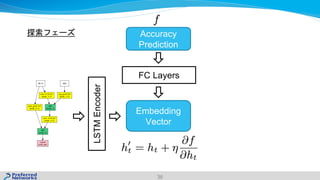

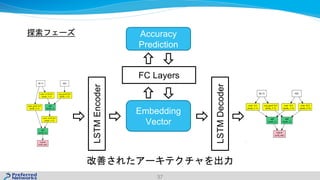

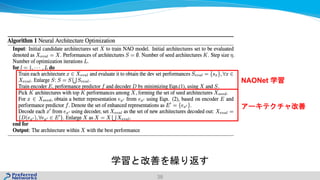

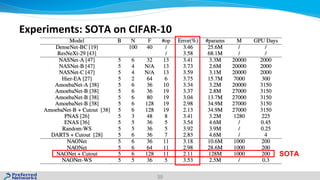

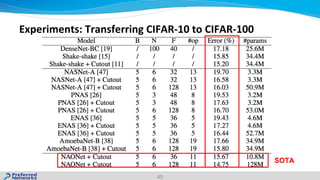

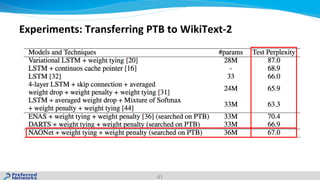

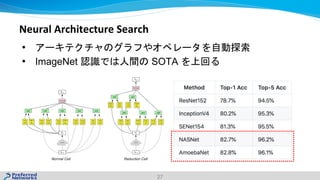

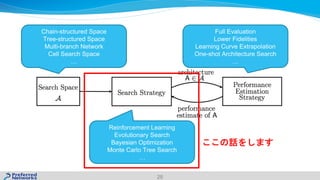

2) "Neural Architecture Optimization", which uses an encoder network to embed neural network architectures in a continuous space, improves the embedding by gradient steps on a learned performance predictor, and decodes the result back into an architecture. The paper reports state-of-the-art results on CIFAR-10 and good transferability to other tasks.
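
The asynchronous promotion rule from the first paper can be illustrated with a minimal sketch. It is not the authors' implementation: the class `AshaScheduler`, its methods `get_job` and `report`, and the sampled `lr` hyperparameter are hypothetical names chosen for illustration, and the reduction factor `eta=3` is an assumed default. A free worker calls `get_job()`, which promotes any configuration in the top `1/eta` of a rung that has not been promoted yet, and otherwise starts a new configuration at the bottom rung.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Rung:
    results: dict = field(default_factory=dict)  # config id -> loss (lower is better)
    promoted: set = field(default_factory=set)   # ids already promoted out of this rung


class AshaScheduler:
    """Hypothetical sketch of asynchronous successive halving."""

    def __init__(self, eta=3, max_rung=3, seed=0):
        self.eta = eta                # assumed reduction factor
        self.max_rung = max_rung      # index of the top rung
        self.rungs = [Rung() for _ in range(max_rung + 1)]
        self.rng = random.Random(seed)
        self.configs = {}             # config id -> sampled hyperparameters
        self.next_id = 0

    def get_job(self):
        """Return (config id, rung index) for a free worker, without waiting."""
        # Look for a promotable configuration, scanning from the highest rung down.
        for k in range(self.max_rung - 1, -1, -1):
            rung = self.rungs[k]
            n_top = len(rung.results) // self.eta
            ranked = sorted(rung.results, key=rung.results.get)
            for cid in ranked[:n_top]:
                if cid not in rung.promoted:
                    rung.promoted.add(cid)
                    return cid, k + 1
        # Nothing to promote: grow the bottom rung with a fresh random configuration.
        cid = self.next_id
        self.next_id += 1
        self.configs[cid] = {"lr": 10 ** self.rng.uniform(-4, -1)}  # illustrative only
        return cid, 0

    def report(self, cid, rung_index, loss):
        """Record a finished evaluation so later get_job calls can use it."""
        self.rungs[rung_index].results[cid] = loss
```

Because promotion decisions use only the results reported so far, a configuration can be promoted that would not have made the cut once the rung filled up; this is the mis-promotion trade-off mentioned above.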

![Agenda. AutoML @ NeurIPS 2018; 1. “Massively Parallel Hyperparameter Tuning” [Li, et al.]; 2. “Neural Architecture Optimization” [Luo, et al.]](https://image.slidesharecdn.com/neuripsautoml-190126062134/85/AutoML-in-NeurIPS-2018-2-320.jpg)

![Today’s Papers. Hyperparameter Optimization: “Massively Parallel Hyperparameter Tuning” [Li, et al.]; Neural Architecture Search: “Neural Architecture Optimization” [Luo, et al.]](https://image.slidesharecdn.com/neuripsautoml-190126062134/85/AutoML-in-NeurIPS-2018-9-320.jpg)

![Related Work: Successive Halving (SHA). Hyperband [16, Li, et al.]](https://image.slidesharecdn.com/neuripsautoml-190126062134/85/AutoML-in-NeurIPS-2018-13-320.jpg)
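
For reference, synchronous successive halving can be sketched in a few lines. This is a simplified illustration rather than the slide's or the paper's exact procedure: the function name `successive_halving`, the `evaluate(config, resource)` callback, the toy objective `toy_loss`, and the defaults `min_resource=1` and `eta=3` are all assumptions. Each round evaluates every surviving configuration with the current resource, keeps the best `1/eta`, and multiplies the resource by `eta`. The outer loop over brackets that Hyperband adds is not shown.

```python
def successive_halving(configs, evaluate, min_resource=1, eta=3):
    """Synchronous SHA sketch: returns the single surviving configuration."""
    survivors = list(configs)
    resource = min_resource
    while len(survivors) > 1:
        # Synchronization barrier: every survivor is evaluated at this rung
        # before any configuration is promoted to the next one.
        ranked = sorted(survivors, key=lambda c: evaluate(c, resource))
        keep = max(1, len(survivors) // eta)
        survivors = ranked[:keep]
        resource *= eta  # survivors get eta times more resource next round
    return survivors[0]


def toy_loss(config, resource):
    """Hypothetical objective: best at config == 0.3, improves with more resource."""
    return (config - 0.3) ** 2 + 1.0 / resource


best = successive_halving([i / 10 for i in range(9)], toy_loss)
```

The barrier inside the loop is what the asynchronous variant removes: here a single slow evaluation delays the promotion of every other configuration in the rung.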

![Related Work: NASNet Search Space. [16, Zoph et al.]; NASNet Space, ImageNet SOTA [17, Zoph et al.]; ResNet, ResBlock](https://image.slidesharecdn.com/neuripsautoml-190126062134/85/AutoML-in-NeurIPS-2018-30-320.jpg)