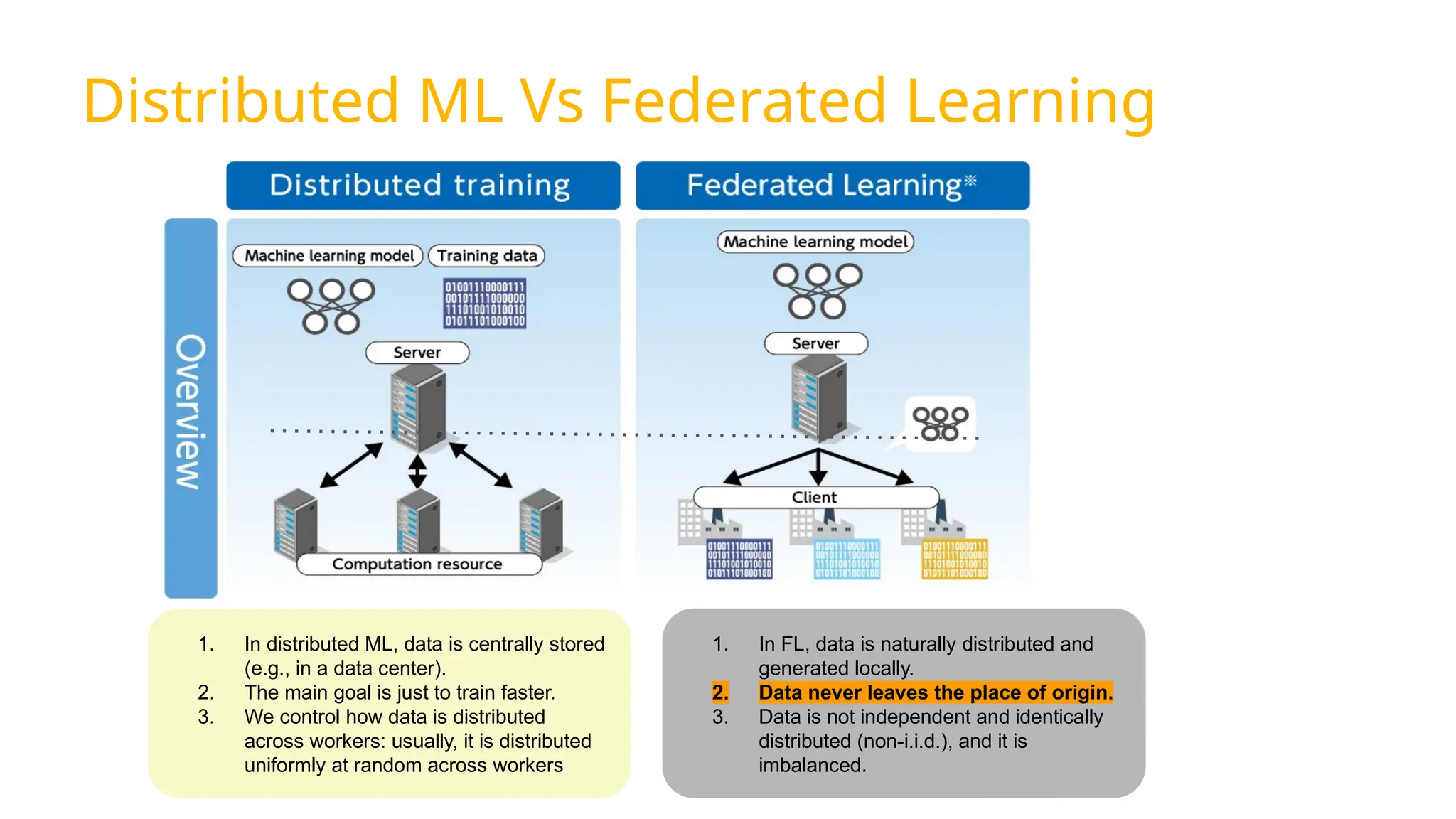

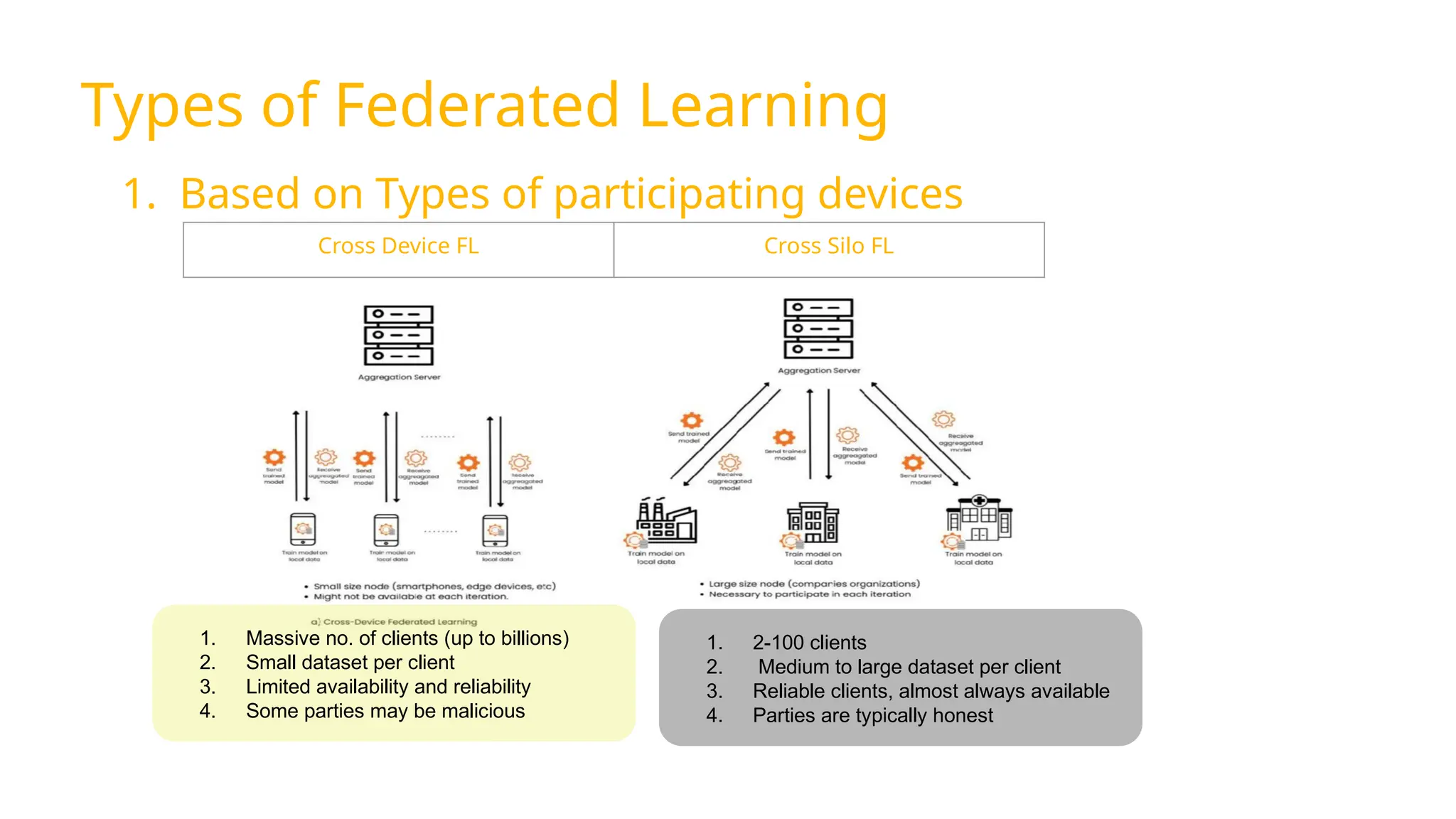

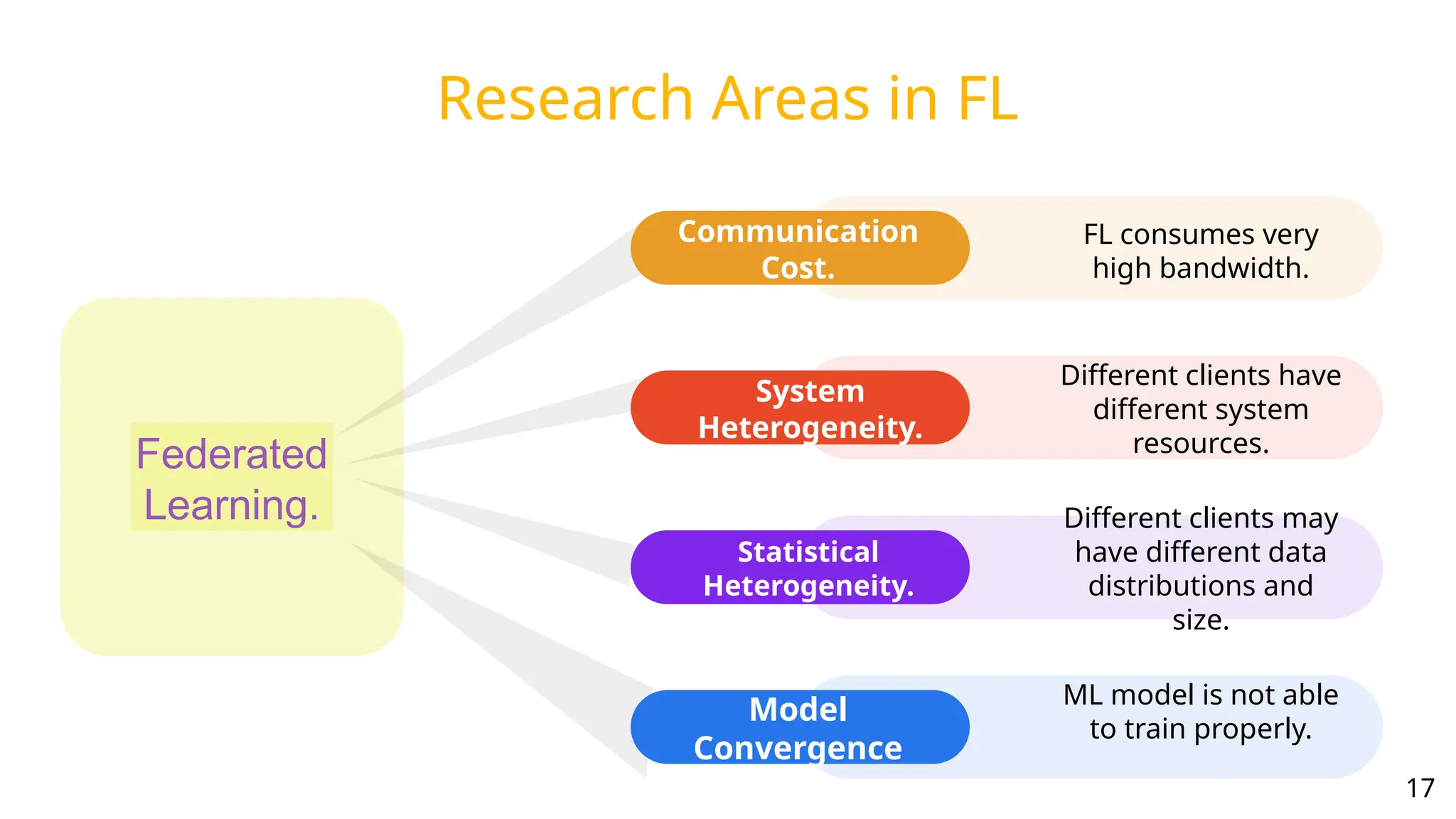

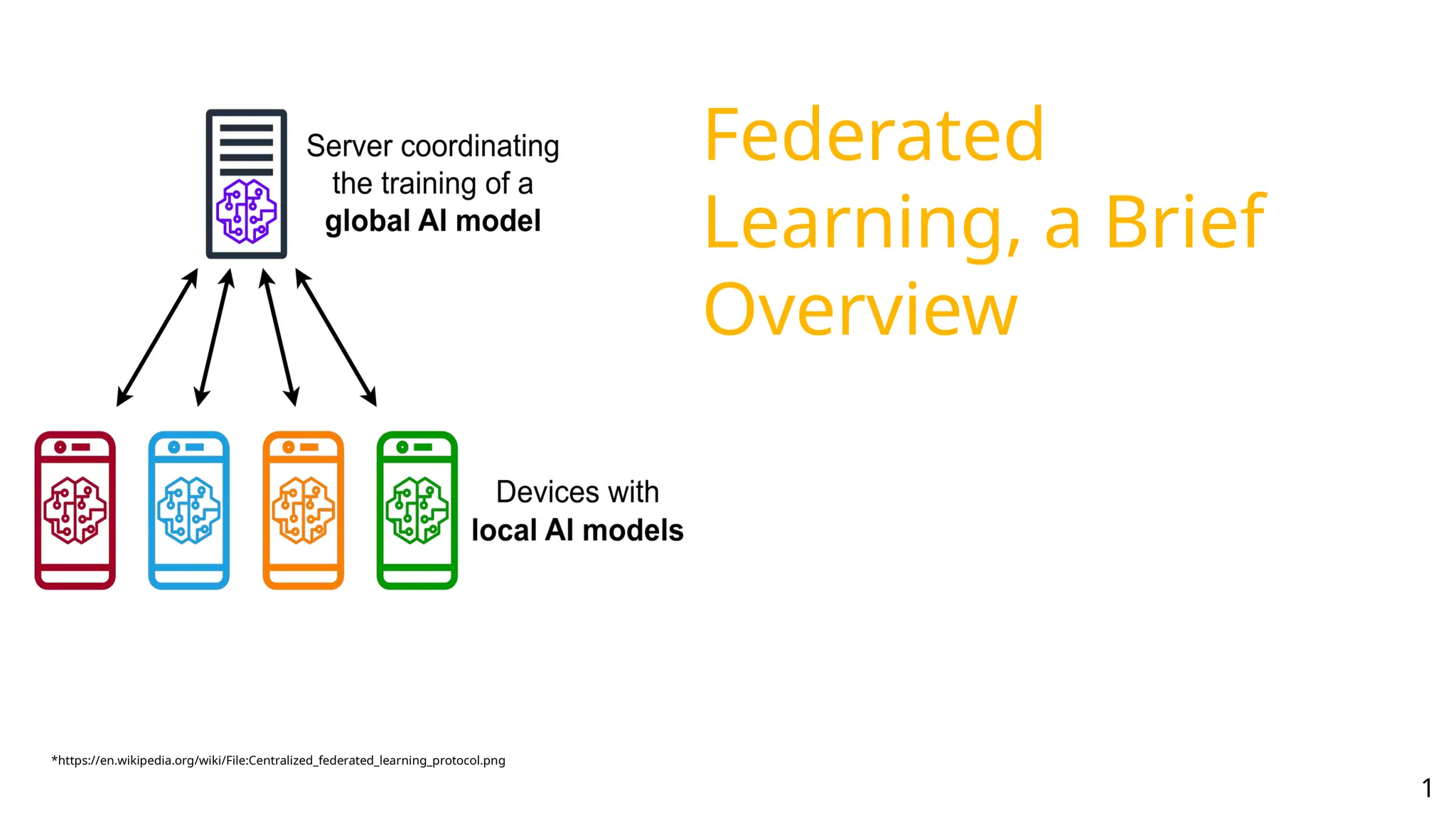

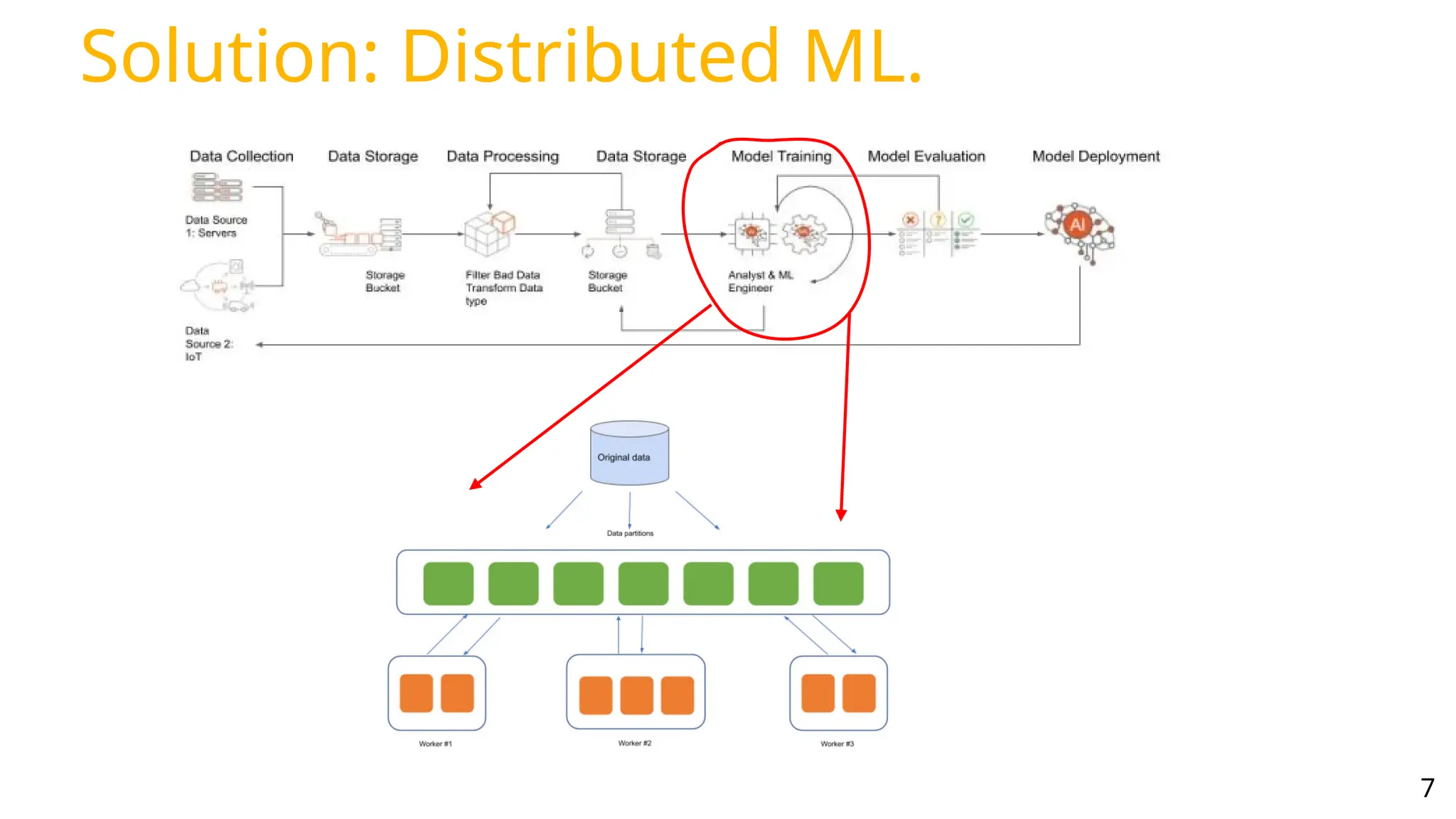

Federated learning is a distributed machine learning approach that trains models on decentralized data held on edge devices while preserving data privacy and security. Unlike traditional distributed machine learning, the data never leaves its source, and constraints such as regulatory compliance govern how it may be handled. Key challenges include high bandwidth consumption and communication costs, along with variation across clients in resource availability and data distributions.

![Solution: Federated Learning

Federated Learning (FL) [1] is a distributed machine learning approach in which large decentralized datasets, residing on edge devices like mobile phones and IoT devices, are used to train a Machine Learning (ML) model.

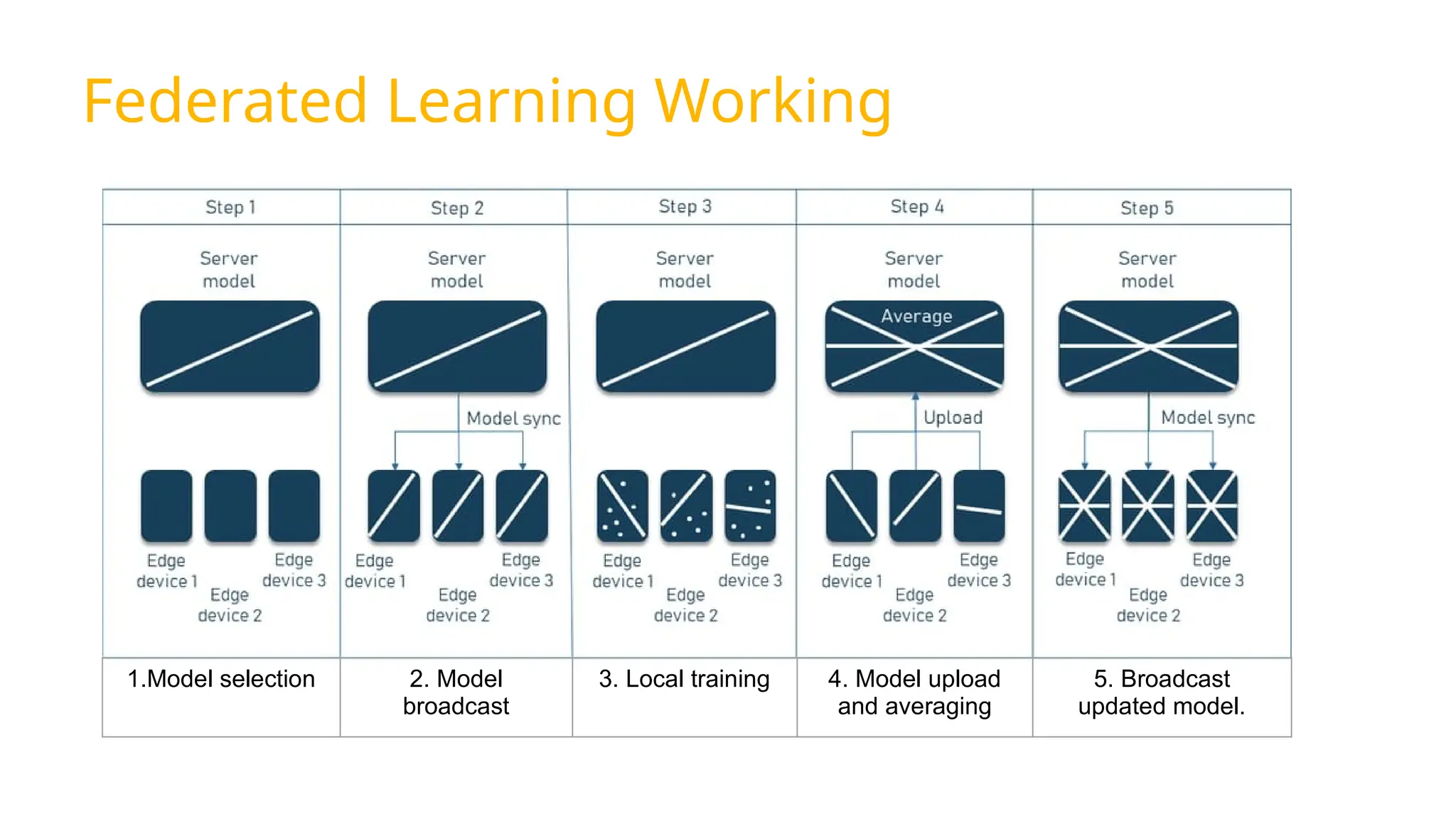

Some important standard terms in FL:

1. Server: A computational device that orchestrates the whole FL process and is responsible for weight aggregation.
2. Client: A device that has some computational resources and local data associated with it, e.g., mobile phones, IoT devices, and personal computers.
3. Round: A round, or communication round, is one round trip of the model weights from the server to the clients and back to the server.

*https://blog.ml.cmu.edu/wp-content/uploads/2019/11/Screen-Shot-2019-11-12-at-10.42.34-AM-1024x506.png

9](https://image.slidesharecdn.com/federatedlearningoverviewfinal-250203093720-723c2e55/75/Federated-Learning-Overview-and-New-Research-Areas-9-2048.jpg)
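
The three terms on this slide (server, client, round) can be made concrete with a small sketch. The slide only says that the server is responsible for weight aggregation; the sample-count-weighted average used here (in the style of FedAvg), the toy linear model, and the names `client_update`, `aggregate`, and `run_round` are all assumptions made for illustration, not something the slides specify.

```python
from typing import List, Sequence, Tuple

Weights = List[float]          # [slope, intercept] of a toy linear model
Sample = Tuple[float, float]   # one (x, y) training pair


def client_update(weights: Weights, data: Sequence[Sample],
                  lr: float = 0.05, epochs: int = 5) -> Weights:
    """Client: train locally on private data, starting from the server's weights."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y   # prediction error for one sample
            w -= lr * err * x       # gradient step for the slope
            b -= lr * err           # gradient step for the intercept
    return [w, b]


def aggregate(updates: Sequence[Tuple[Weights, int]]) -> Weights:
    """Server: average client weights, weighted by each client's sample count.

    Assumption: FedAvg-style weighting; the slide does not name a rule.
    """
    total = sum(n for _, n in updates)
    size = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(size)]


def run_round(global_weights: Weights,
              clients: Sequence[Sequence[Sample]]) -> Weights:
    """One round: server -> clients (local training) -> server (aggregation)."""
    updates = [(client_update(list(global_weights), data), len(data))
               for data in clients]
    return aggregate(updates)


if __name__ == "__main__":
    # Hypothetical local datasets drawn from y = 2x + 1.
    # Only weights are exchanged; the raw samples stay with each client.
    clients = [
        [(0.0, 1.0), (1.0, 3.0)],
        [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
    ]
    weights = [0.0, 0.0]
    for _ in range(50):
        weights = run_round(weights, clients)
    print(weights)
```

Note that `run_round` passes only weights and sample counts back to `aggregate`, which mirrors the point that the data never leaves its source. A real deployment would also need to handle client dropouts, unequal compute budgets, and communication limits, none of which this sketch attempts.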