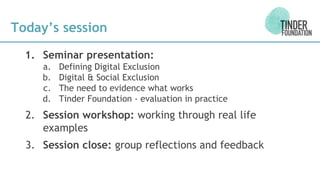

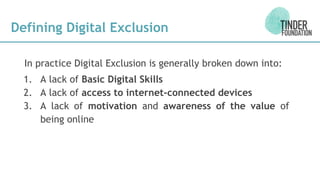

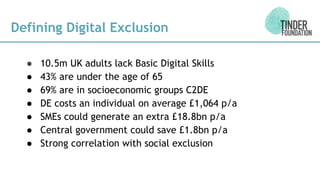

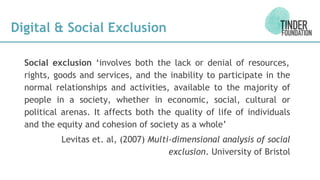

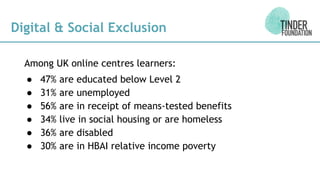

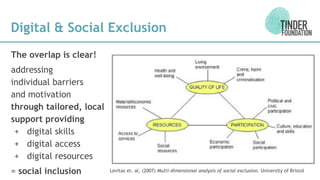

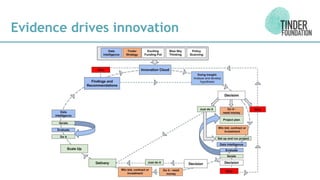

This document outlines a seminar presentation on evaluating digital inclusion projects, given by Dr. Alice Mathers and James Richardson of Tinder Foundation. It discusses how to define digital and social exclusion and where the two overlap. It also covers the need to gather evidence on which intervention strategies are effective. Two case studies are presented: an eReading Rooms project and a Vodafone mobile devices initiative. Attendees then take part in a workshop in which they design evaluation frameworks for sample digital inclusion projects, focusing on aims, methods, outcomes, and added value.