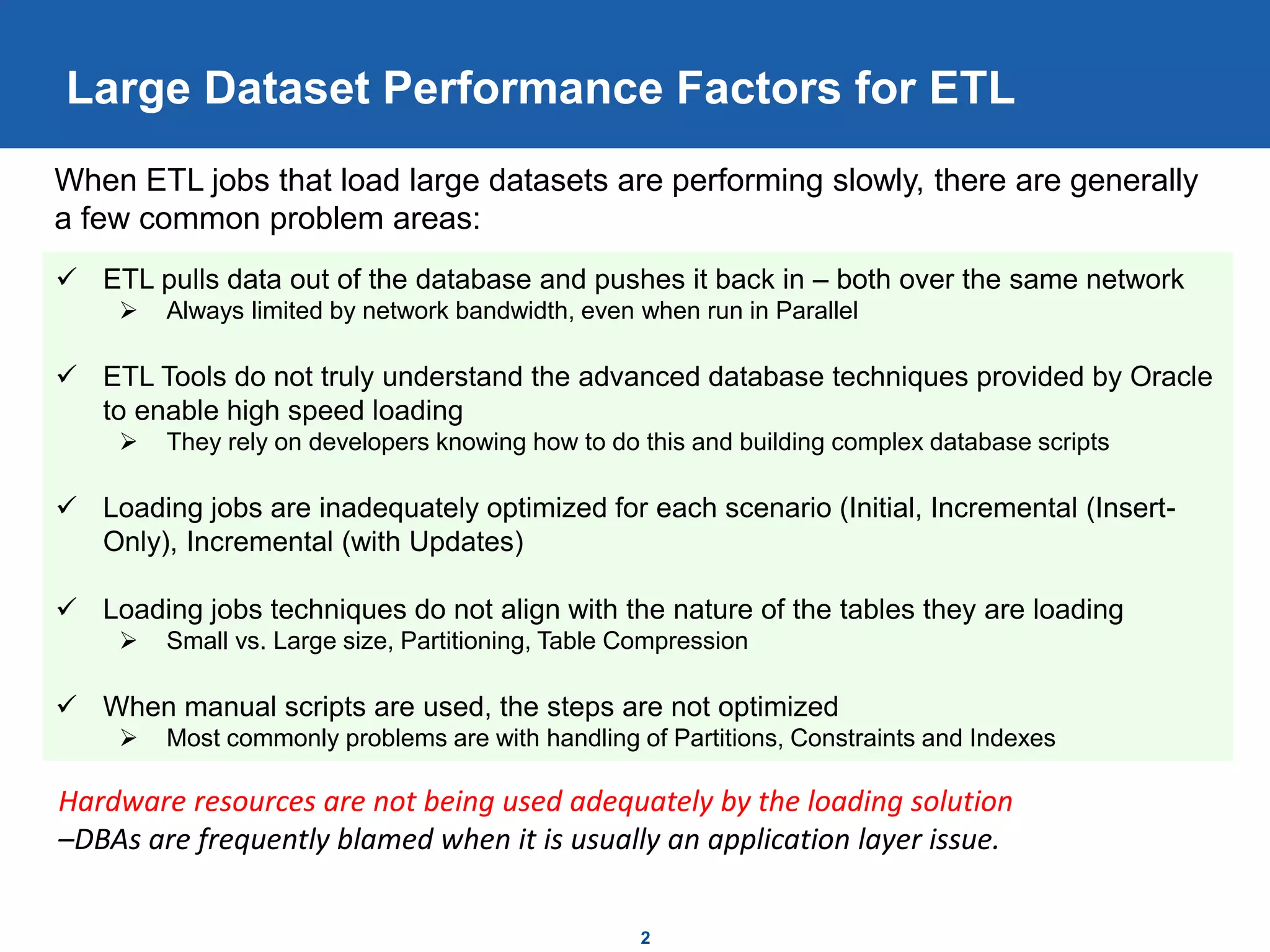

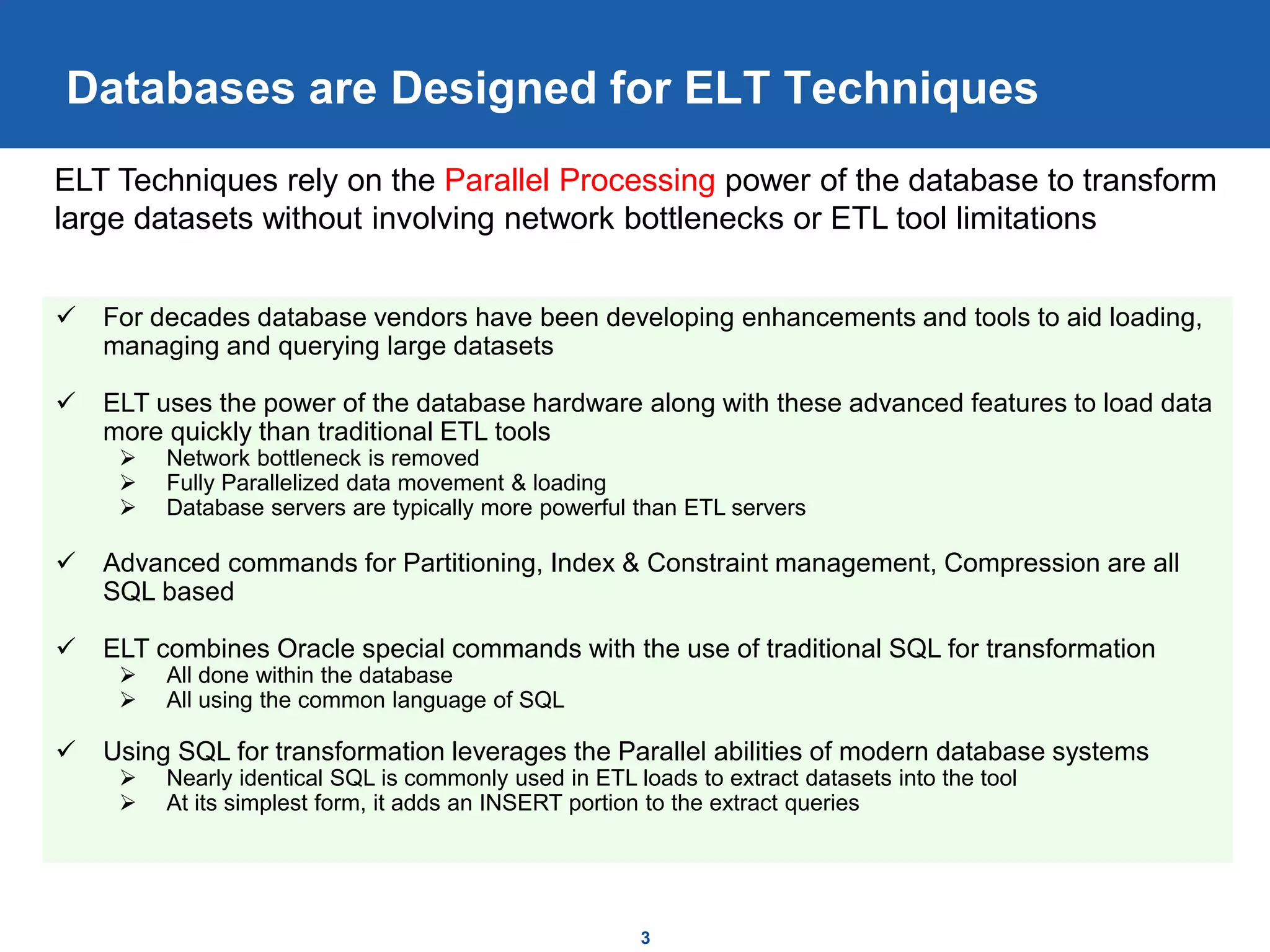

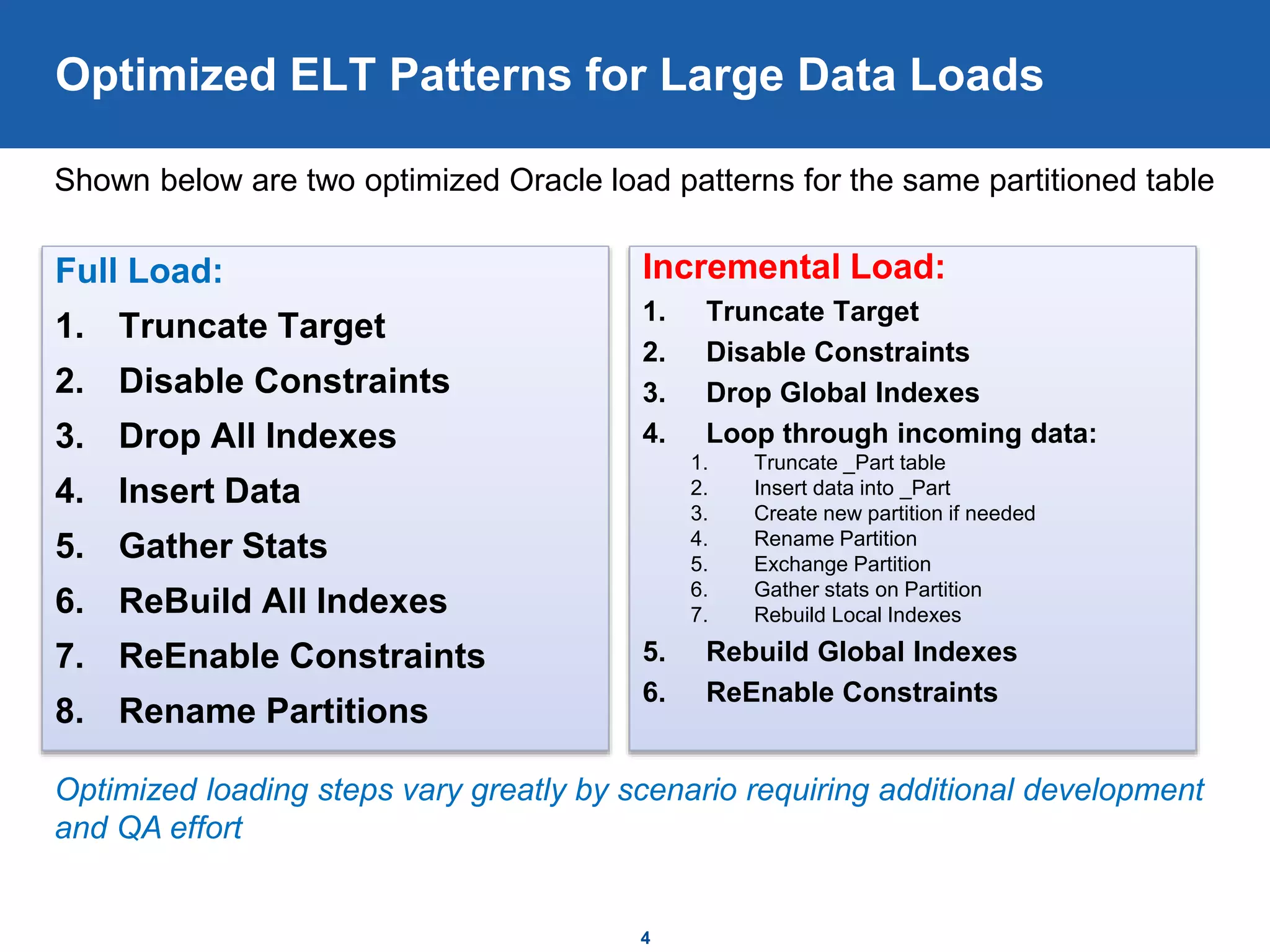

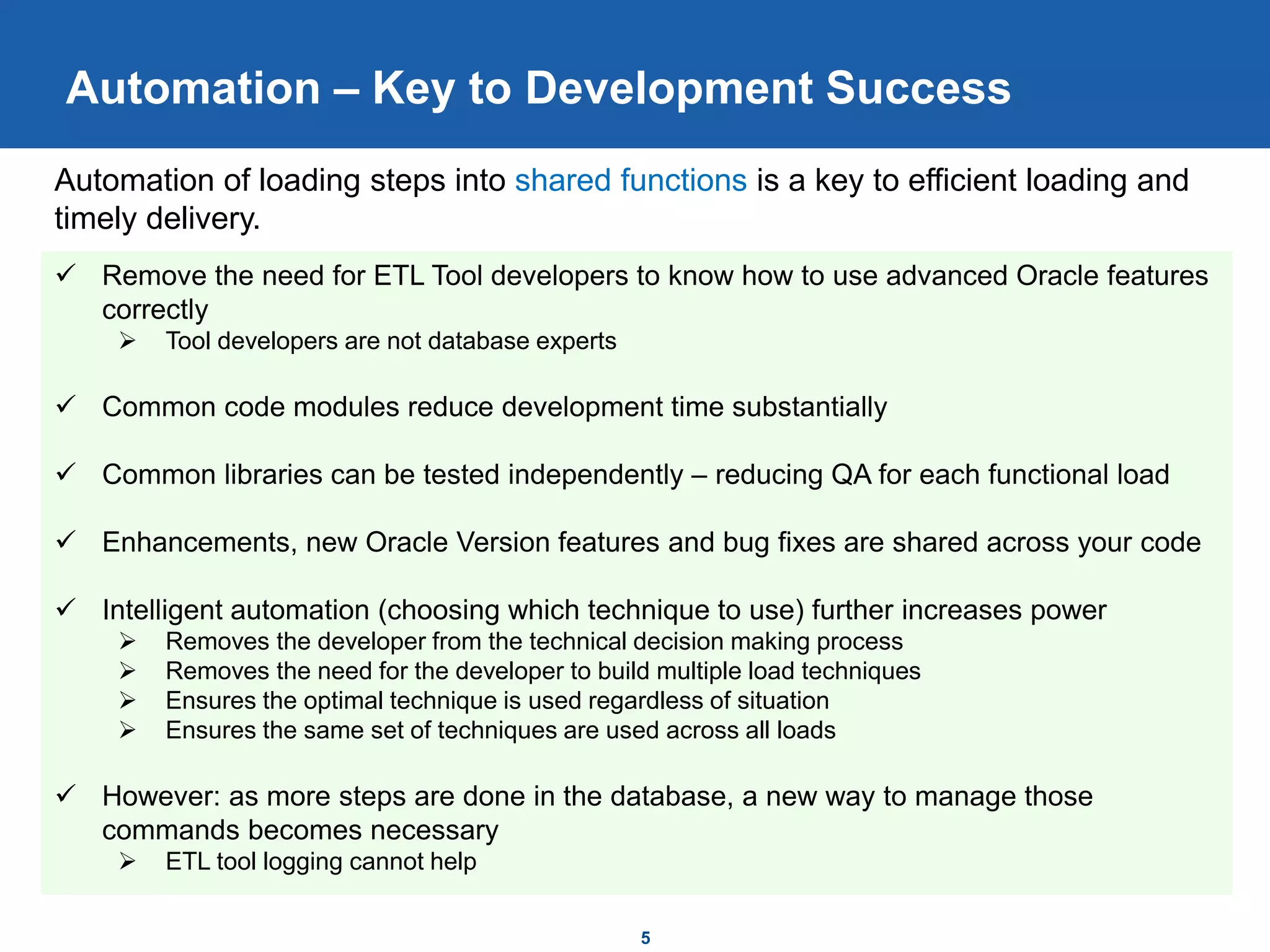

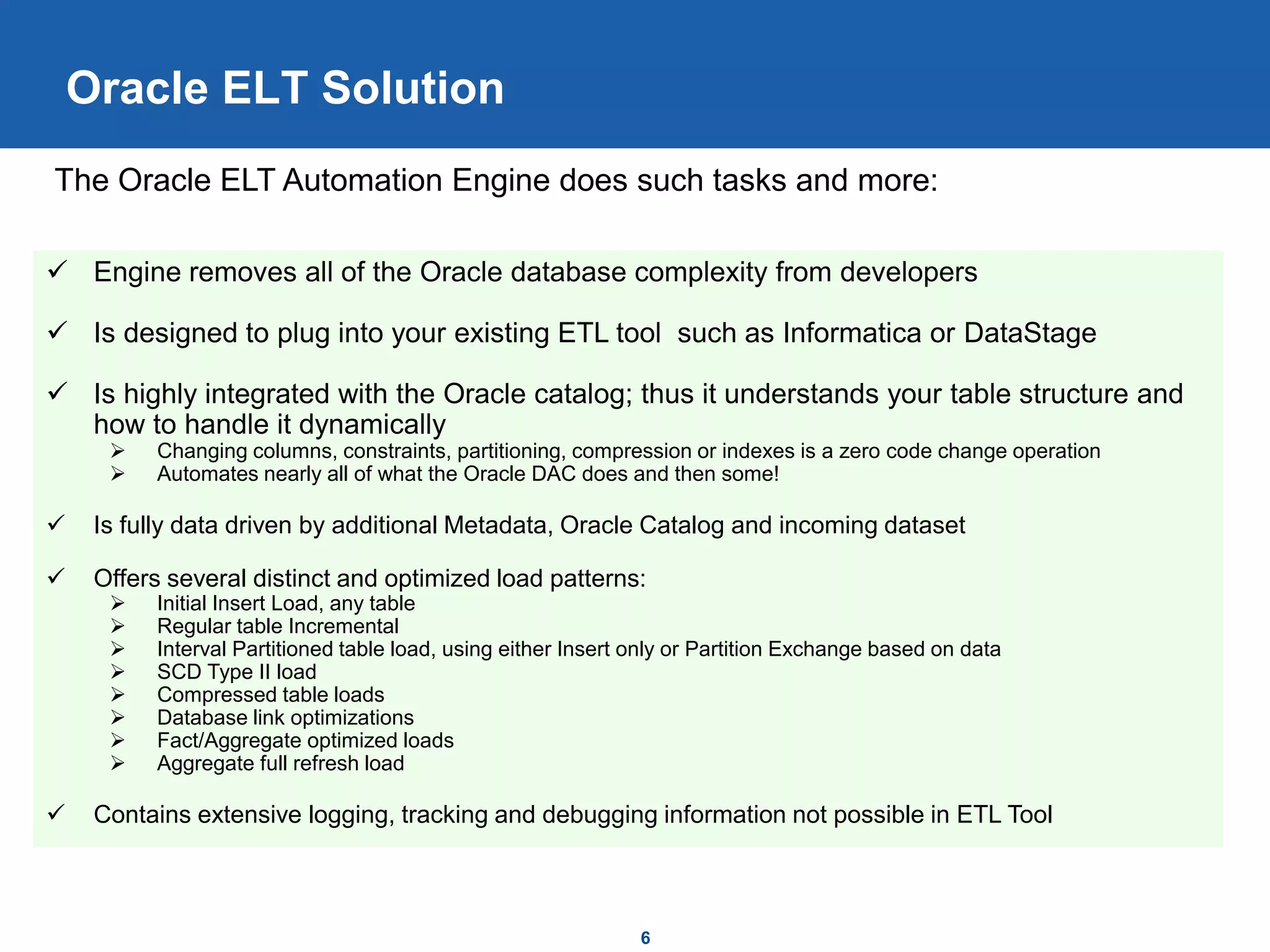

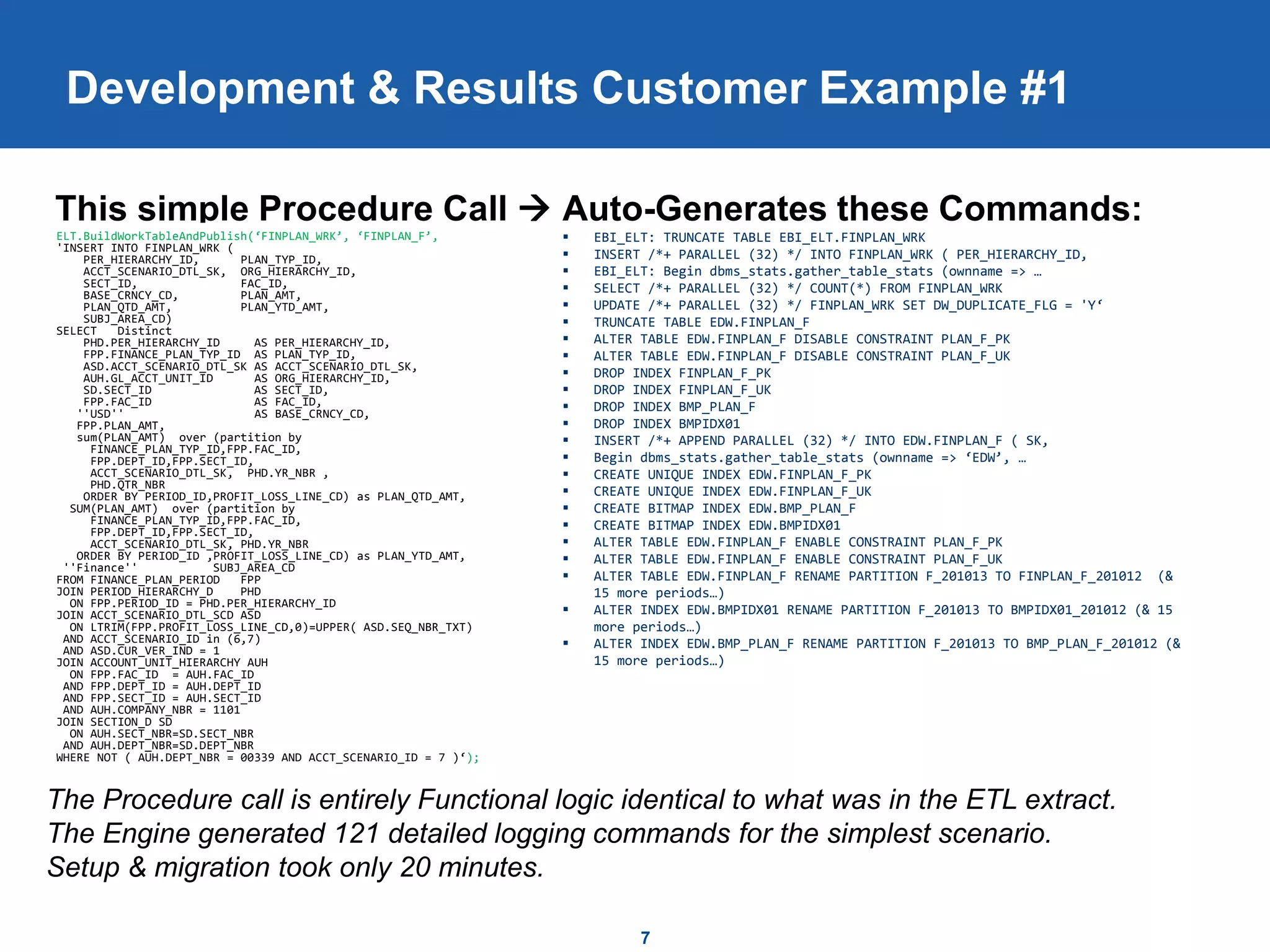

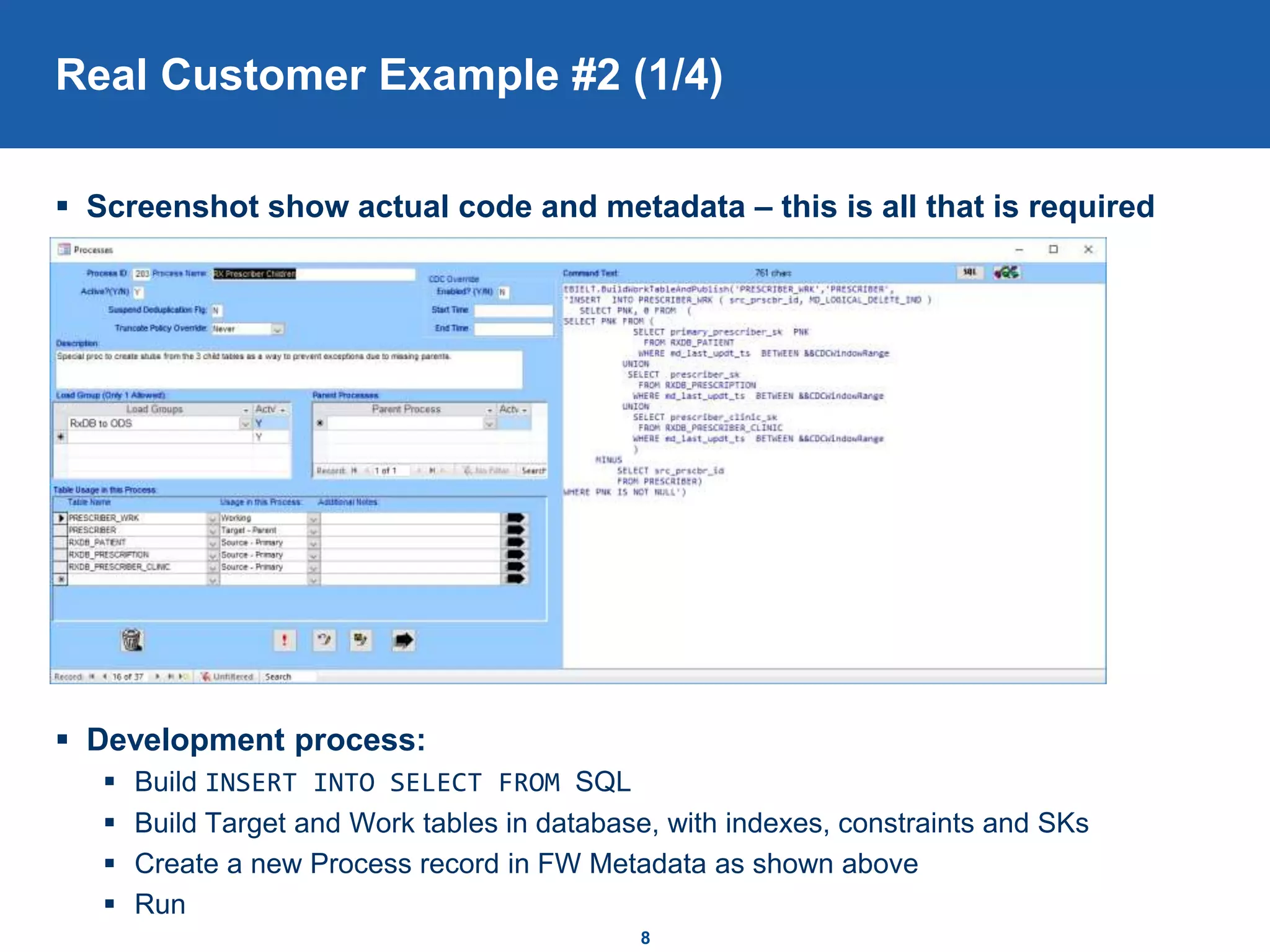

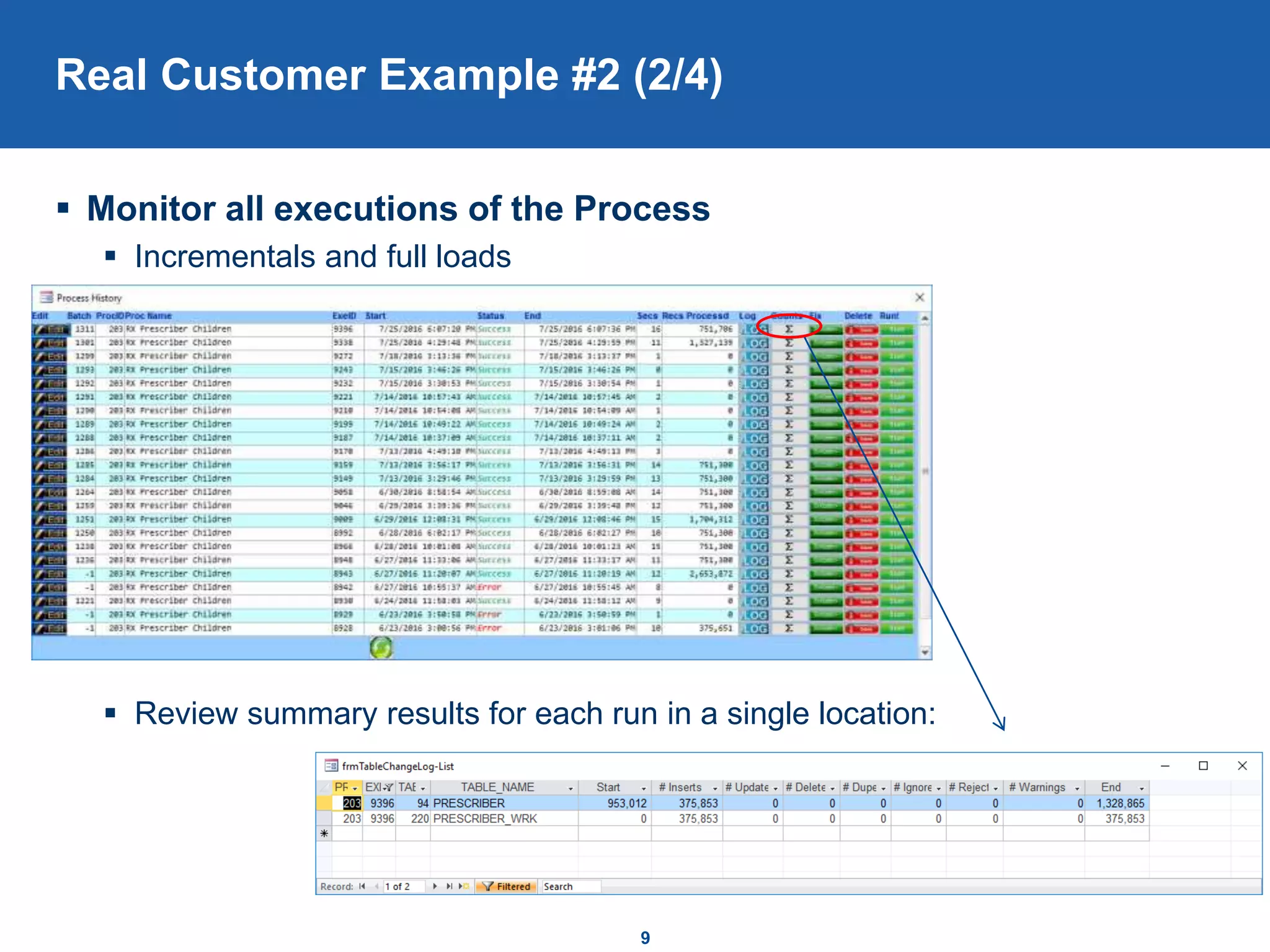

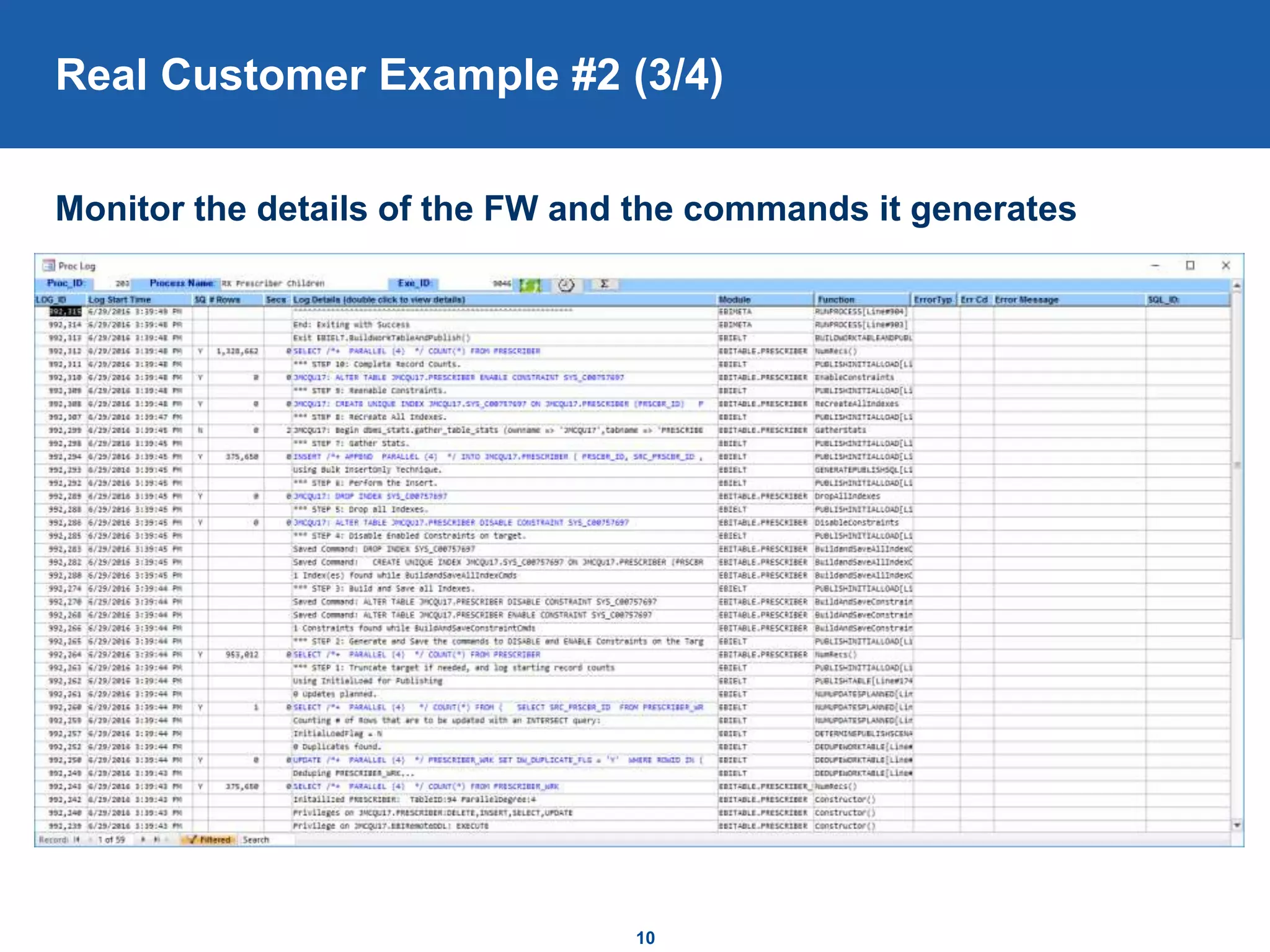

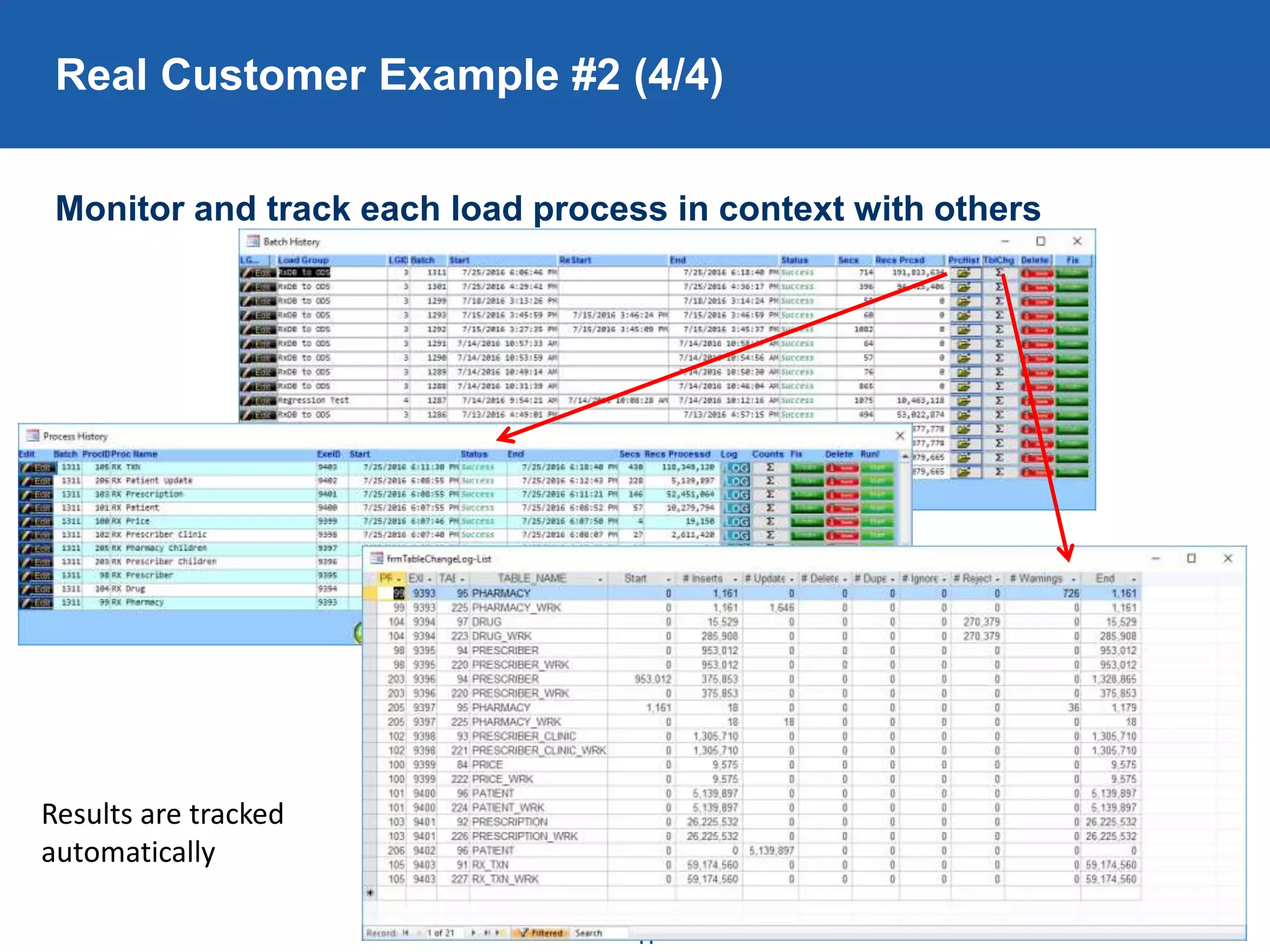

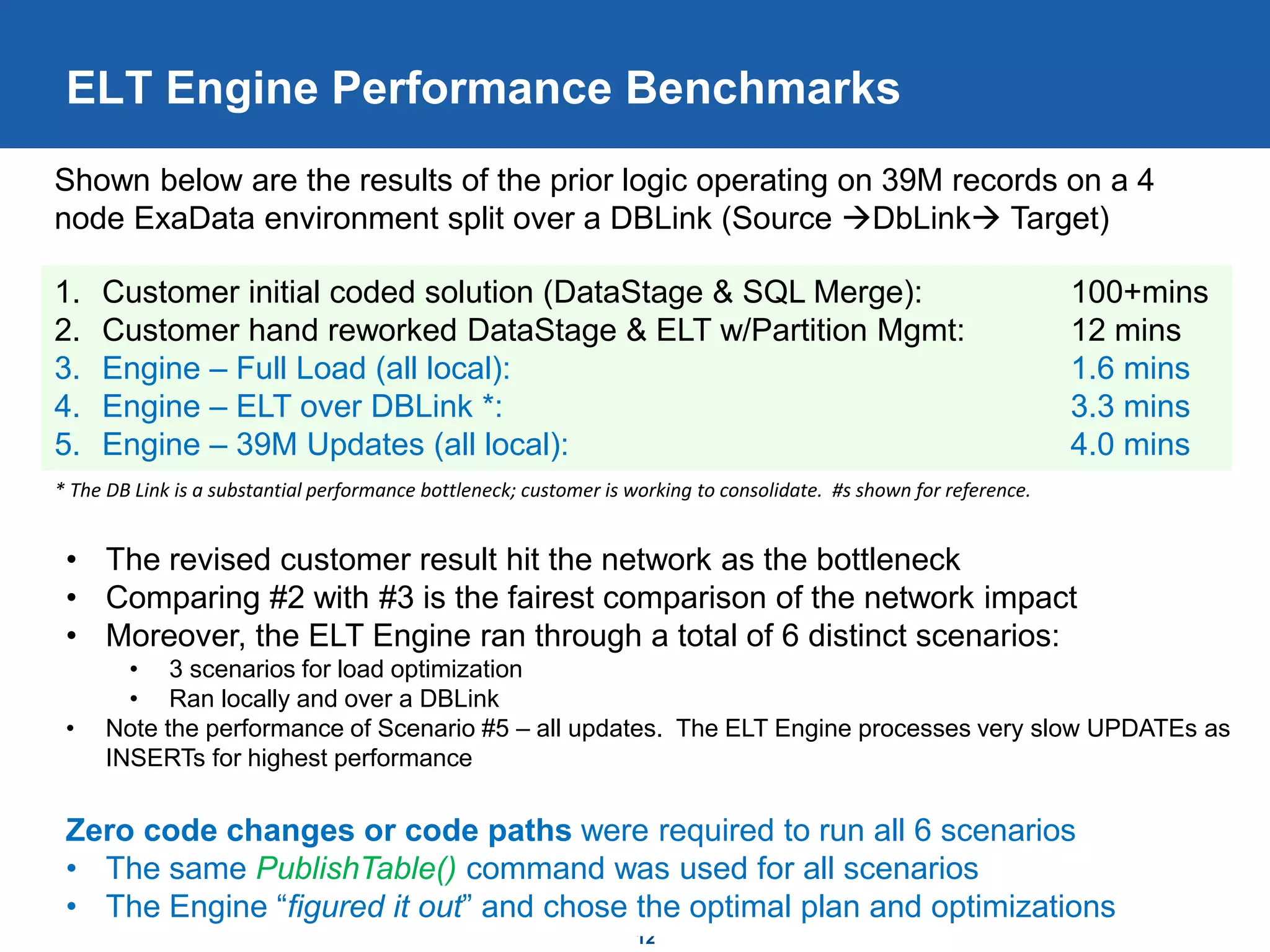

The document discusses the Oracle ELT Engine, a solution that automates Extract, Load, and Transform (ELT) processes inside Oracle databases. It shields ETL tool developers from the complexity of Oracle-specific load techniques by encoding knowledge of those techniques and optimizing each load. The Engine generates SQL statements that load and manage large data volumes efficiently, using techniques such as partitioning, parallel processing, and indexing. It provides logging and supports a range of scenarios, including initial loads, incremental loads, and loads into compressed tables. Customers saw improvements over hand-coded ETL solutions; in one case, a load dropped from over 100 minutes to 1.6 minutes.
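To make the idea of "generated load SQL" concrete, here is a minimal Python sketch of what such a generator might emit for one common pattern: a parallel, direct-path load into a staging table, followed by a partition exchange into the target. This is not the Engine's actual interface. `LoadPlan`, `generate_partition_load`, and all table, partition, and index names are hypothetical, and partition exchange as the load strategy is an assumption chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class LoadPlan:
    """Hypothetical description of a single partition load (illustrative only)."""
    target_table: str          # partitioned target table
    partition: str             # partition to replace
    staging_table: str         # empty, non-partitioned table with the same columns
    source_query: str          # SELECT that produces the new rows
    degree: int = 8            # degree of parallelism for the insert and index builds
    # (index_name, column_list) pairs mirroring the target's LOCAL indexes
    staging_indexes: List[Tuple[str, str]] = field(default_factory=list)


def generate_partition_load(plan: LoadPlan) -> List[str]:
    """Return Oracle SQL statements for a parallel, direct-path partition-exchange load."""
    stmts: List[str] = []

    # Parallel DML is disabled by default and must be enabled per session.
    stmts.append("ALTER SESSION ENABLE PARALLEL DML")

    # APPEND requests a direct-path insert above the high-water mark; direct-path
    # loads are also what let basic table compression apply to the new rows.
    stmts.append(
        f"INSERT /*+ APPEND PARALLEL({plan.staging_table}, {plan.degree}) */ "
        f"INTO {plan.staging_table} {plan.source_query}"
    )
    # A direct-path insert must be committed before the table can be read again.
    stmts.append("COMMIT")

    # Build indexes once, after the load, instead of maintaining them row by row.
    # They mirror the target's local indexes so INCLUDING INDEXES can swap them in.
    for index_name, columns in plan.staging_indexes:
        stmts.append(
            f"CREATE INDEX {index_name} ON {plan.staging_table} ({columns}) "
            f"PARALLEL {plan.degree} NOLOGGING"
        )

    # Swap the loaded segment into the target partition (a data dictionary
    # update, not a data copy). WITHOUT VALIDATION assumes every staged row
    # already falls within the partition's bounds.
    stmts.append(
        f"ALTER TABLE {plan.target_table} EXCHANGE PARTITION {plan.partition} "
        f"WITH TABLE {plan.staging_table} INCLUDING INDEXES WITHOUT VALIDATION"
    )
    return stmts


if __name__ == "__main__":
    # Hypothetical example: replace one monthly partition of a SALES table.
    example = LoadPlan(
        target_table="SALES",
        partition="P_2024_01",
        staging_table="SALES_STG",
        source_query="SELECT * FROM EXT_SALES",
        degree=4,
        staging_indexes=[("SALES_STG_CUST_IX", "CUST_ID")],
    )
    for sql in generate_partition_load(example):
        print(sql + ";")
```

The design choice here is to do the heavy work (bulk insert, index build) on a table no query is reading, then make it visible in one metadata operation, so the target partition is replaced almost instantly and its indexes need no rebuild afterward.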