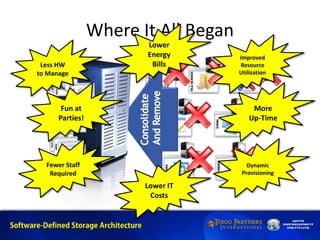

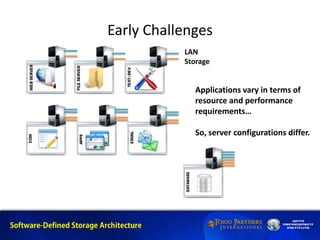

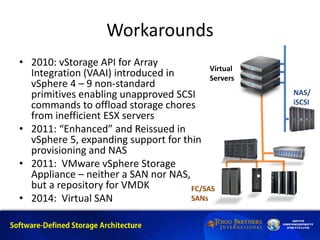

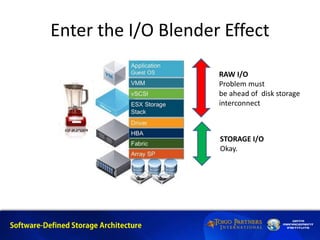

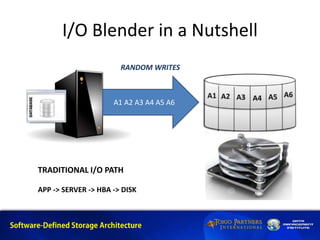

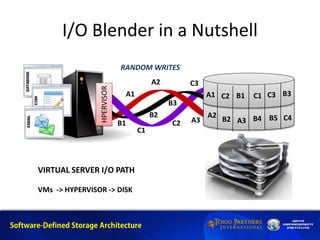

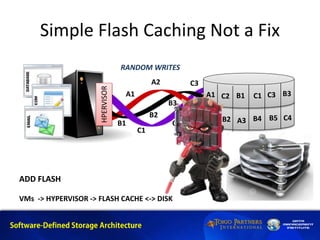

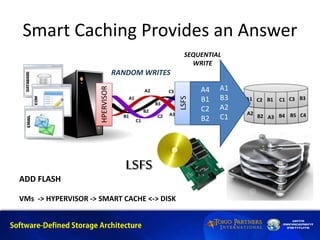

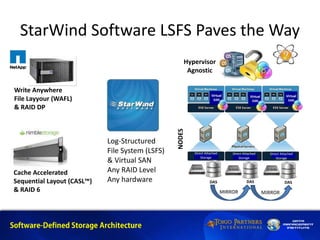

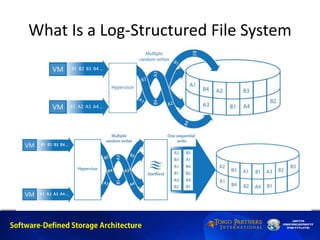

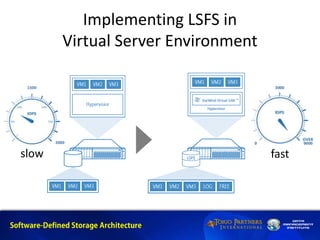

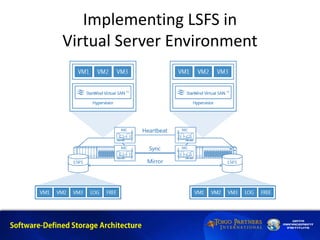

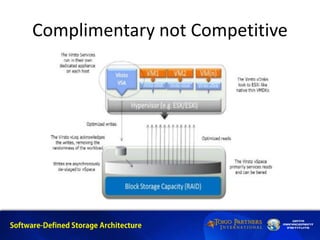

The document discusses the challenges of achieving optimal storage performance in virtual server environments, focusing on the I/O blender effect: the hypervisor merges the I/O streams of many virtual machines into a largely random pattern, which makes it hard for the underlying storage to use its resources efficiently. It presents StarWind Software's log-structured file system (LSFS) as a solution that improves performance and integrates with hypervisors without competing against the major vendors. The document also provides background on StarWind Software and its virtualization and storage management products.
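
To make the I/O blender effect concrete, the following is a minimal sketch of a toy model, not StarWind's implementation. It assumes three hypothetical VMs that each write sequential blocks in their own region, a round-robin merge standing in for the hypervisor's shared queue, and a simplified log that appends every write at the next free block. The function names (`blend`, `seek_distance`, `log_structured_layout`) and the block addresses are illustrative assumptions.

```python
from itertools import zip_longest


def blend(streams):
    """Interleave per-VM request streams round-robin (a stand-in for a shared hypervisor queue)."""
    merged = []
    for batch in zip_longest(*streams):
        merged.extend(lba for lba in batch if lba is not None)
    return merged


def seek_distance(addresses):
    """Sum of absolute jumps between consecutive block addresses (a rough proxy for randomness)."""
    return sum(abs(b - a) for a, b in zip(addresses, addresses[1:]))


def log_structured_layout(lbas, log_start=0):
    """Append each write at the log head; return the physical addresses and a logical-to-physical map."""
    mapping = {}
    physical = []
    head = log_start
    for lba in lbas:
        mapping[lba] = head  # remember where the latest copy of this logical block lives
        physical.append(head)
        head += 1
    return physical, mapping


# Hypothetical workload: three VMs, each writing 4 sequential blocks in its own region.
vm_streams = [list(range(base, base + 4)) for base in (0, 1000, 2000)]

blended = blend(vm_streams)
physical, mapping = log_structured_layout(blended)

print("per-VM seek distance:  ", seek_distance(vm_streams[0]))
print("blended seek distance: ", seek_distance(blended))
print("log-structured writes: ", seek_distance(physical))
```

In this toy model each VM's stream is perfectly sequential on its own, the blended stream jumps across the whole address range, and the log-structured layout turns those scattered writes back into one sequential run at the cost of maintaining a mapping table. A real log-structured design also has to handle reads, overwrites, and space reclamation, which this sketch leaves out.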