- Virtualization is increasing storage demands: server consolidation and I/O-dense workloads such as virtual desktop infrastructure (VDI) require consistently high performance from shared storage.

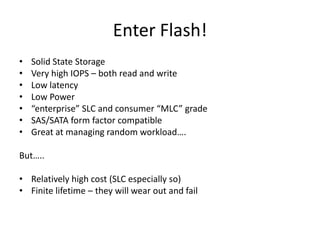

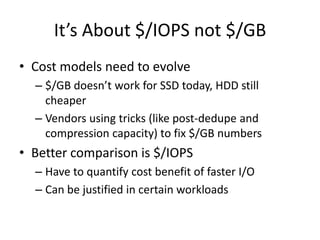
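A rough sketch of why consolidation raises I/O density: once many VMs share one array, their IOPS add up, and the number of HDDs required can end up being set by IOPS rather than by capacity. All figures below (desktops per host, IOPS per desktop, IOPS per HDD) are hypothetical placeholders, not measurements.

```python
import math

# Illustrative, hypothetical figures; real values vary widely by workload and hardware.
def required_iops(vms_per_host: int, iops_per_vm: float, hosts: int) -> float:
    """Aggregate steady-state IOPS the shared storage must sustain."""
    return vms_per_host * iops_per_vm * hosts


def hdd_spindles_needed(total_iops: float, iops_per_hdd: float) -> int:
    """Number of HDDs required if IOPS, not capacity, is the binding constraint."""
    return math.ceil(total_iops / iops_per_hdd)


if __name__ == "__main__":
    # Hypothetical VDI cluster: 4 hosts, 100 desktops each, ~10 IOPS per desktop.
    total = required_iops(vms_per_host=100, iops_per_vm=10, hosts=4)
    print(f"aggregate IOPS: {total:.0f}")
    # Assumed ~180 IOPS per HDD (a placeholder rule of thumb, not a measured value).
    print(f"HDDs needed for IOPS alone: {hdd_spindles_needed(total, 180)}")
```

With these placeholder inputs the cluster needs 4,000 IOPS, which at 180 IOPS per drive means 23 HDDs regardless of how little capacity the desktops actually use.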

- Flash/SSD storage delivers the high IOPS and low latency that virtualized environments need, but it costs more per GB than HDDs. For performance-bound workloads, it is therefore more meaningful to compare storage on a cost-per-IOPS basis than on a cost-per-GB basis.

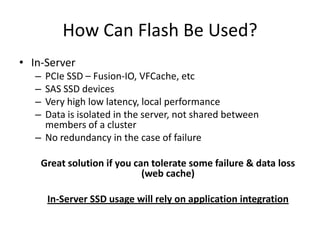

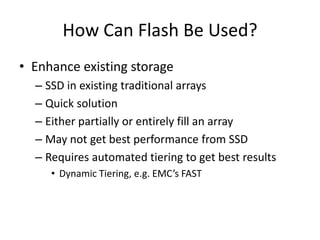

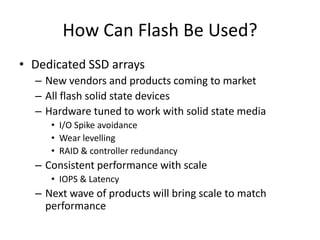

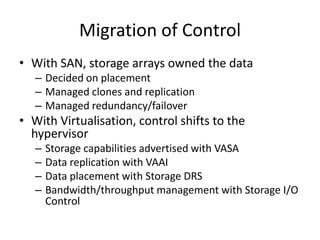
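The difference between cost per GB and cost per IOPS can be illustrated with a small calculation. The device prices and specs below are hypothetical placeholders, not vendor data, and `cost_to_meet` is an illustrative helper that buys whole units until both a capacity target and an IOPS target are satisfied.

```python
import math
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    price_usd: float
    capacity_gb: float
    iops: float

    @property
    def cost_per_gb(self) -> float:
        return self.price_usd / self.capacity_gb

    @property
    def cost_per_iops(self) -> float:
        return self.price_usd / self.iops


def cost_to_meet(d: Device, capacity_gb: float, iops: float) -> float:
    """Cost of the smallest whole number of units meeting BOTH targets."""
    units = max(math.ceil(capacity_gb / d.capacity_gb), math.ceil(iops / d.iops))
    return units * d.price_usd


# Hypothetical devices; prices and specs are placeholders only.
hdd = Device("HDD", price_usd=300.0, capacity_gb=1200.0, iops=200.0)
ssd = Device("SSD", price_usd=1200.0, capacity_gb=800.0, iops=40000.0)

for d in (hdd, ssd):
    print(f"{d.name}: ${d.cost_per_gb:.2f}/GB, ${d.cost_per_iops:.3f}/IOPS, "
          f"${cost_to_meet(d, 10_000, 20_000):,.0f} for 10,000 GB + 20,000 IOPS")
```

With these placeholder numbers the SSD costs six times more per GB but 50 times less per IOPS. Meeting a 10,000 GB / 20,000 IOPS target costs $15,600 with SSDs versus $30,000 with HDDs, because the HDD count (100 drives) is driven by IOPS rather than capacity (which alone would need only 9).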

- There are several approaches to deploying flash: all-flash arrays, hybrid arrays that combine HDDs with a flash acceleration tier, and server-side flash devices. At the same time, control over data placement and management is shifting from the storage array to the hypervisor.
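One way a flash acceleration tier can work is as a read cache in front of slower HDD storage, whether it sits inside a hybrid array or on the server. The toy model below assumes a simple least-recently-used (LRU) promotion policy and a hypothetical `HybridTier` class; it is a sketch of the general idea, not how any particular array or hypervisor actually decides data placement.

```python
from collections import OrderedDict


class HybridTier:
    """Toy model: a small flash tier caching blocks in front of an HDD tier.

    Assumes LRU promotion on read; real products use their own policies.
    """

    def __init__(self, flash_blocks: int):
        self.flash_blocks = flash_blocks
        # block_id -> True, ordered from least- to most-recently used
        self.flash: OrderedDict[int, bool] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block_id: int) -> str:
        """Return which tier served the read: 'flash' or 'hdd'."""
        if block_id in self.flash:
            self.flash.move_to_end(block_id)  # mark as most recently used
            self.hits += 1
            return "flash"
        self.misses += 1
        # Promote the block on a miss; evict the LRU block if flash is full.
        self.flash[block_id] = True
        if len(self.flash) > self.flash_blocks:
            self.flash.popitem(last=False)
        return "hdd"


tier = HybridTier(flash_blocks=2)
served = [tier.read(b) for b in [1, 2, 1, 2, 1]]
print(served, tier.hits, tier.misses)
```

A small, frequently reread working set (blocks 1 and 2 here) ends up served from flash after the first access, which is the effect a flash acceleration tier relies on.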