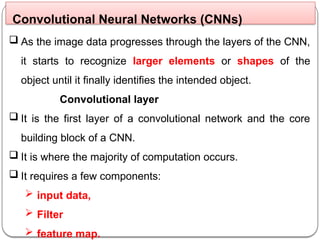

Chapter four provides an overview of deep learning, focusing on artificial neural networks (ANNs), including their structure, such as input, hidden, and output layers, and the mechanism of neurons and weights. It discusses various neural network types like feedforward neural networks, convolutional neural networks (CNNs), and recurrent neural networks (RNNs), alongside advanced topics like generative adversarial networks (GANs), transfer learning, and attention mechanisms. The chapter highlights the importance of activation functions, loss functions, and the roles of different layers in processing data for prediction and classification tasks.