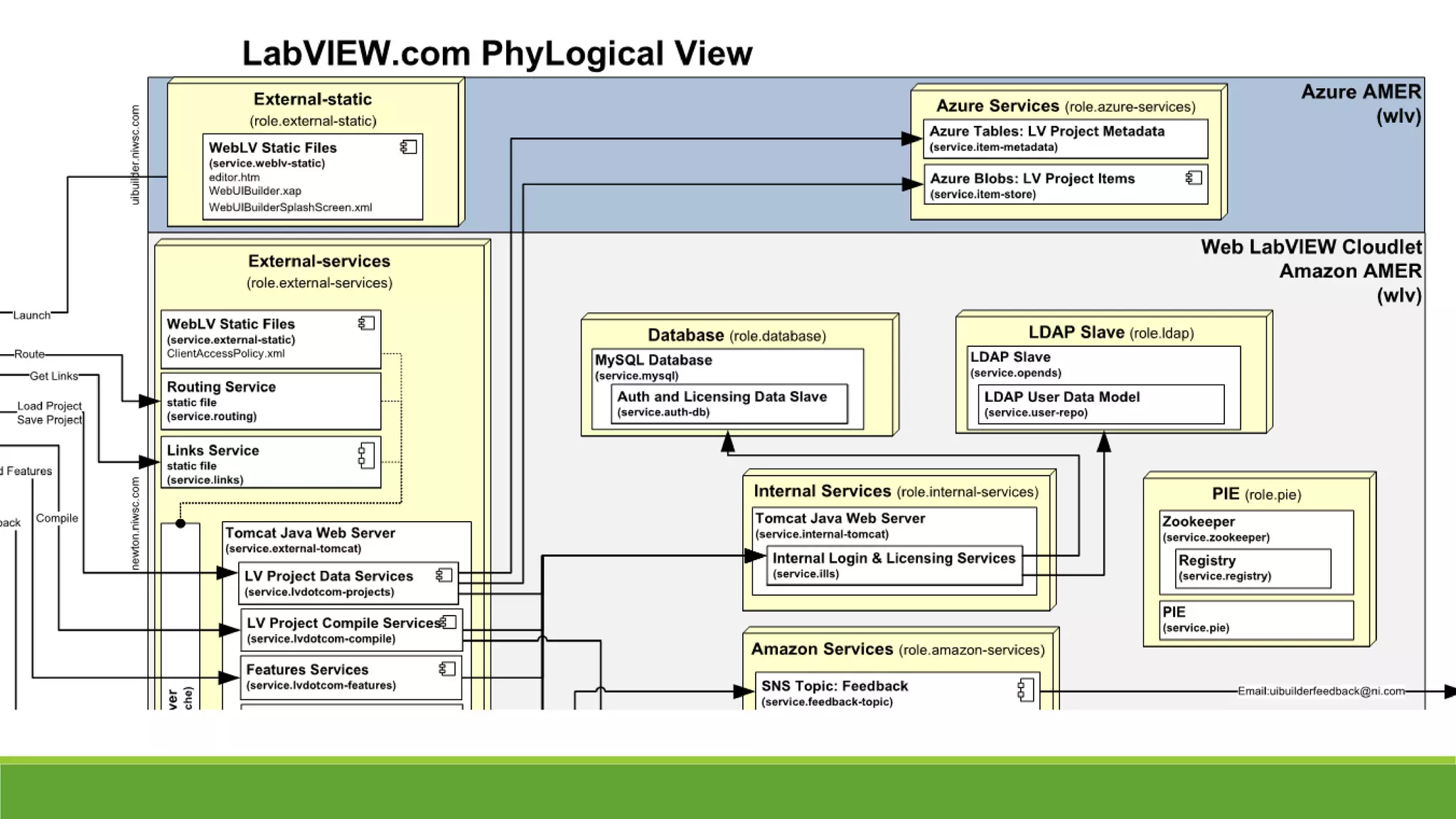

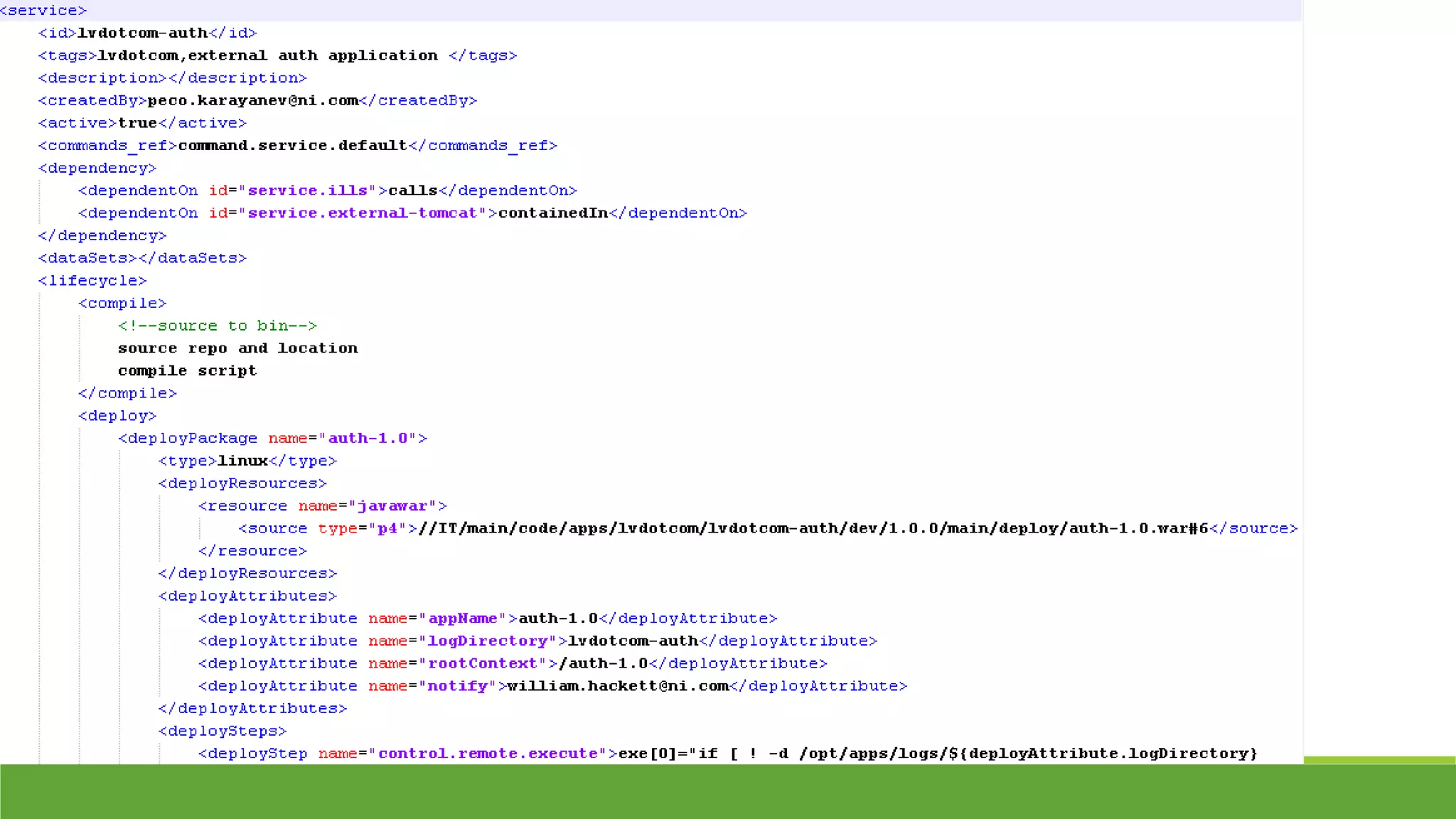

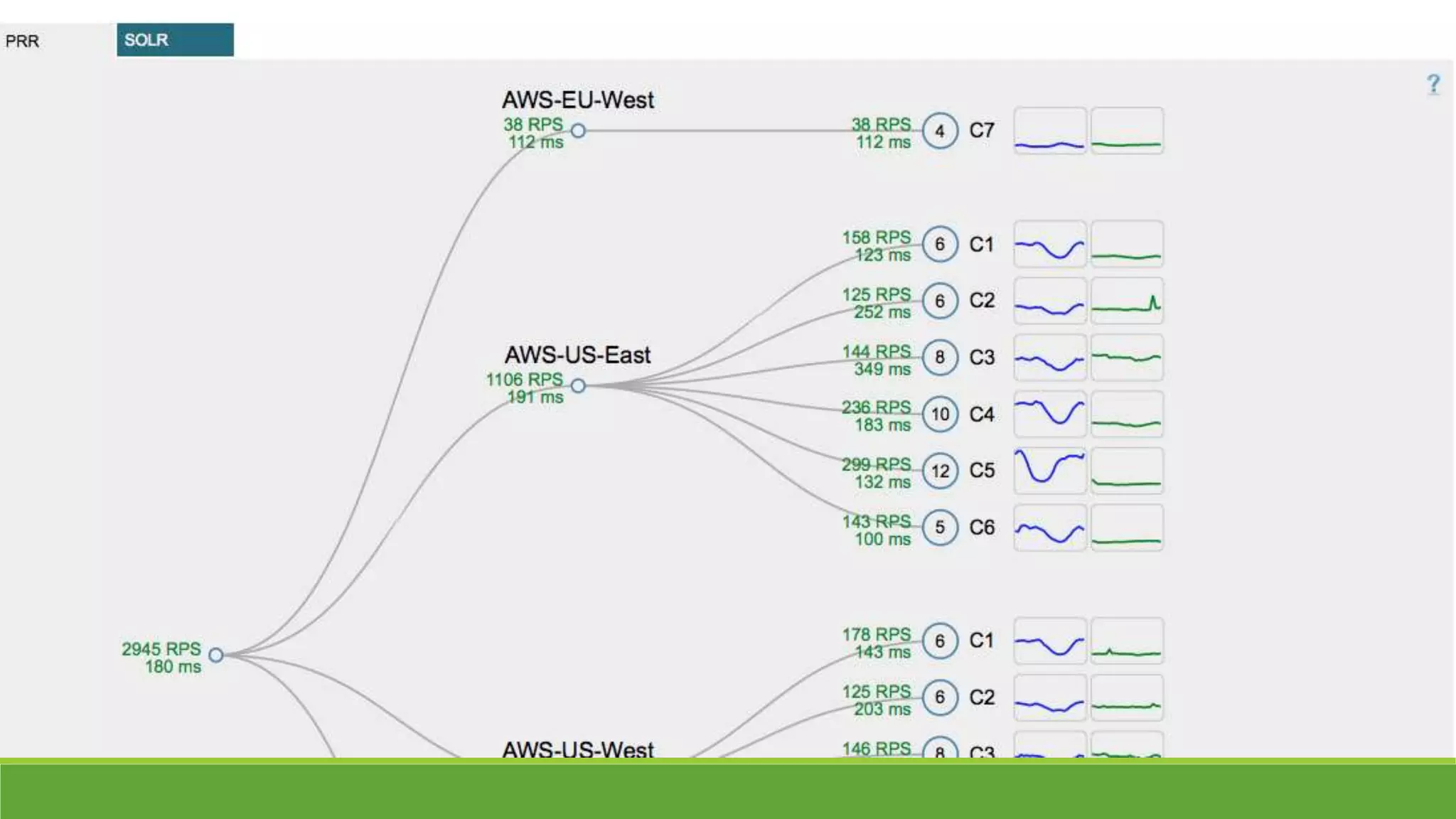

The document details the DevOps transformation initiatives at National Instruments and Bazaarvoice, covering the challenges each company faced, the strategies it implemented, and the outcomes it achieved during the transition. Key elements included automation, team collaboration, agile processes, and improved release management, which significantly reduced time to market for new products. The results included greater operational efficiency, higher customer satisfaction, and better alignment between development and operations teams.