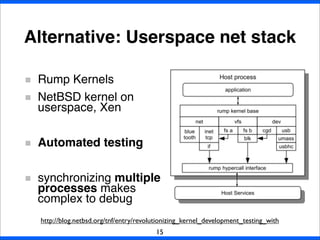

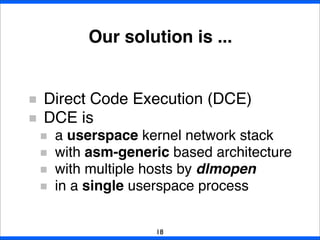

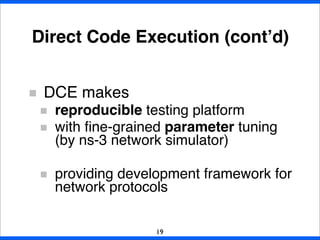

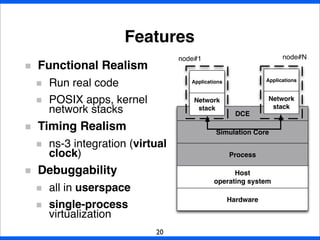

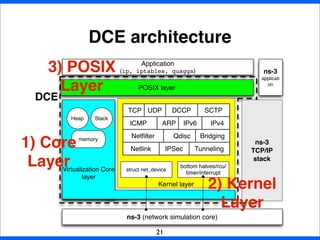

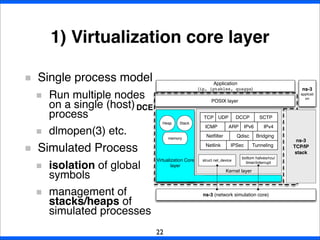

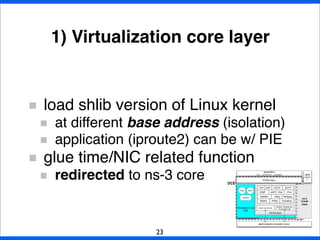

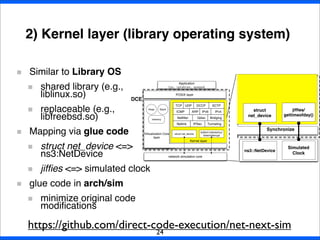

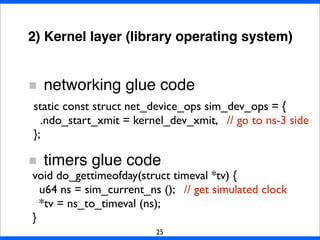

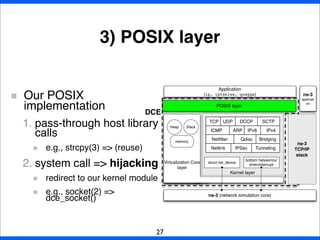

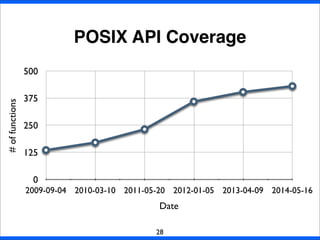

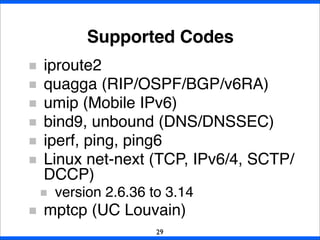

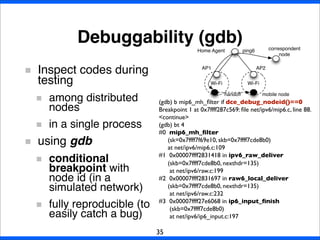

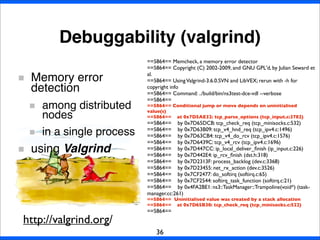

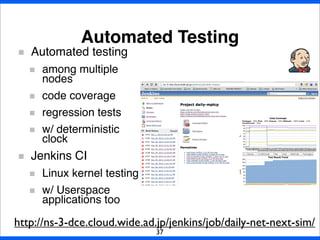

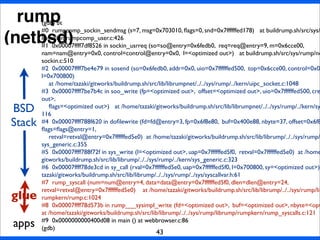

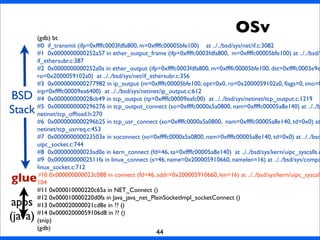

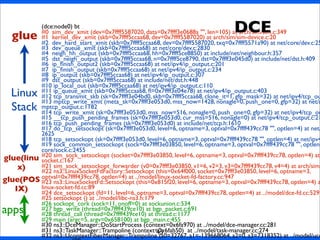

Direct Code Execution (DCE) is a userspace kernel network stack: it runs real network stack code inside a single process. As a testing platform it offers reproducible tests and fine-grained parameter tuning, and it also serves as a development framework for network protocols. It is built from three layers: a virtualization core layer that runs multiple network nodes within a single process, a kernel layer that replaces the kernel with a shared library, and a POSIX layer that redirects system calls to that kernel library. Because everything runs in one userspace process, the network stack can be fully controlled and observed during testing and debugging.
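The layering described above can be illustrated with a toy model. This is only a sketch of the idea, not DCE's actual API: `ToyStack`, `PosixLayer`, `run_simulation`, and the addresses are hypothetical names invented for the example. `ToyStack` stands in for the kernel library, `PosixLayer` for the layer that redirects socket-style calls, and the shared `network` dictionary for the core layer that hosts several nodes in one process.

```python
from collections import deque


class ToyStack:
    """Hypothetical stand-in for the kernel network stack loaded as a library."""

    def __init__(self, addr, network):
        self.addr = addr
        self.network = network  # all nodes hosted in this one process
        self.inbox = deque()
        network[addr] = self

    def sendto(self, data, dest):
        # Deliver straight into the destination node's queue; no real kernel involved.
        self.network[dest].inbox.append((self.addr, data))
        return len(data)

    def recvfrom(self):
        # Return (source address, payload), or None if nothing is queued.
        return self.inbox.popleft() if self.inbox else None


class PosixLayer:
    """Hypothetical POSIX-style front end: forwards calls to the node's own stack."""

    def __init__(self, stack):
        self._stack = stack

    def sendto(self, data, dest):
        return self._stack.sendto(data, dest)

    def recvfrom(self):
        return self._stack.recvfrom()


def run_simulation():
    network = {}  # plays the role of the virtualization core layer
    node_a = PosixLayer(ToyStack("10.0.0.1", network))
    node_b = PosixLayer(ToyStack("10.0.0.2", network))

    sent = node_a.sendto(b"ping", "10.0.0.2")
    src, payload = node_b.recvfrom()
    node_b.sendto(payload.upper(), src)  # echo the payload back in upper case
    return sent, node_a.recvfrom()


if __name__ == "__main__":
    print(run_simulation())
```

Because both nodes and their stacks live in one process with no external timing or I/O, every run takes the same path, which is the property that makes this style of testing reproducible and easy to inspect with an ordinary debugger.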