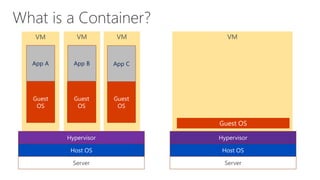

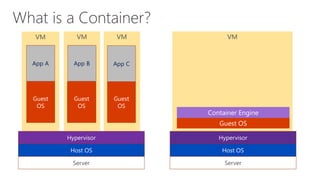

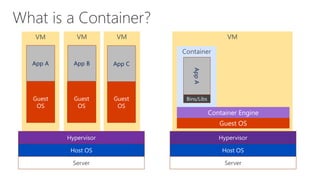

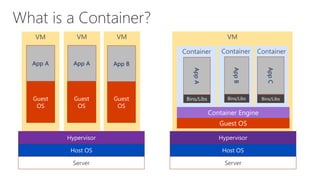

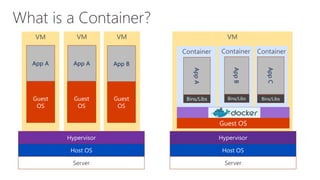

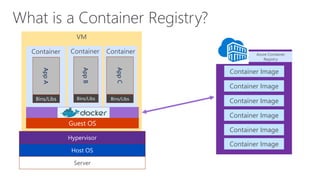

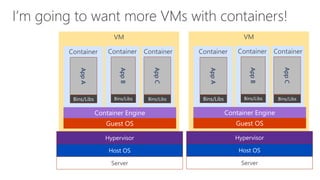

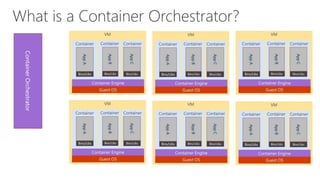

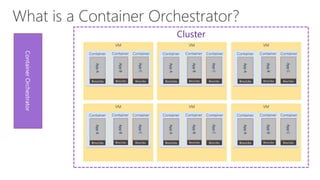

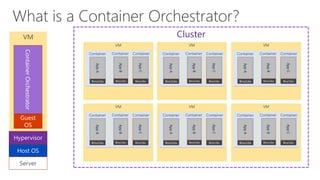

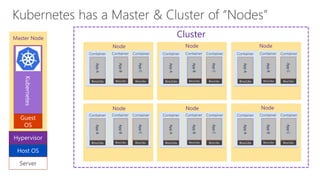

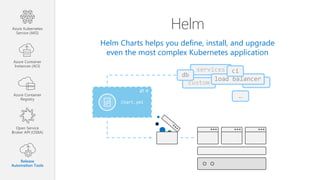

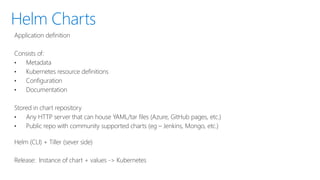

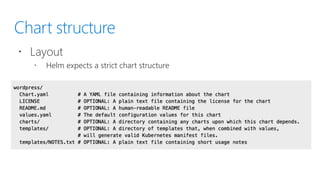

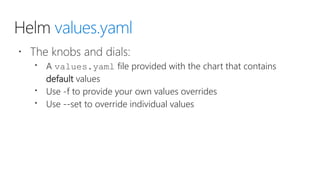

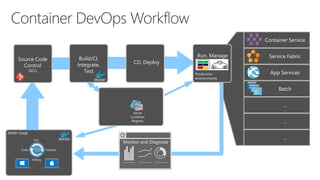

Containers are an application-centric way to deliver scalable applications on the infrastructure of your choice. A container packages code together with its dependencies. It serves a similar purpose to a virtual machine but shares the host operating system's kernel instead of booting a full guest OS, which makes it more portable and resource-efficient. Kubernetes is an open-source system for automating the deployment, scaling, and management of containerized applications across clusters of hosts. Helm helps define, install, and upgrade complex Kubernetes applications using charts, which package the Kubernetes manifest templates, default configuration values, and chart dependencies needed to deploy an application.
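
To make the chart idea more concrete, here is a minimal sketch of how a templated manifest and a set of default values might combine into a Kubernetes Deployment. It is an illustration only: Helm itself renders charts with Go templates, while this sketch uses Python's `string.Template` as a stand-in. The names `MANIFEST_TEMPLATE`, `DEFAULT_VALUES`, and `render`, along with the `web` application and `nginx:1.25` image, are hypothetical and do not come from any real chart.

```python
from string import Template

# Hypothetical manifest template. Helm uses Go templates; string.Template
# is a simplified stand-in used here only to show the idea.
MANIFEST_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
""")

# Hypothetical defaults, playing the role of a chart's default values.
DEFAULT_VALUES = {"name": "web", "replicas": 1, "image": "nginx:1.25"}


def render(overrides=None):
    """Merge user overrides over the defaults and fill in the template."""
    values = {**DEFAULT_VALUES, **(overrides or {})}
    return MANIFEST_TEMPLATE.substitute(values)


if __name__ == "__main__":
    # Override a single value and keep the remaining defaults.
    print(render({"replicas": 3}))
```

The point of the sketch is the separation of concerns: the template describes the shape of the deployment once, and each installation supplies only the values that differ from the defaults.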