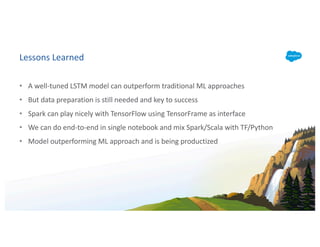

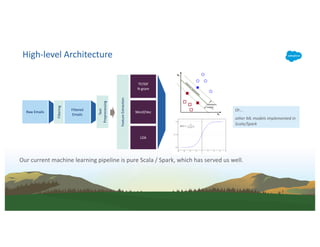

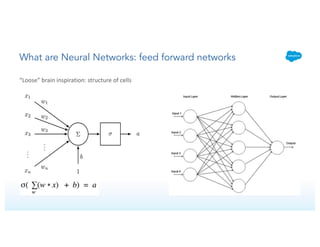

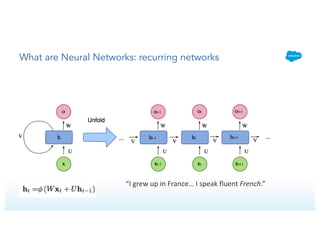

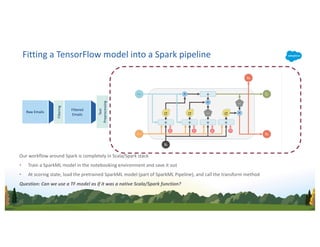

This presentation covers deep learning for natural language processing with Apache Spark and TensorFlow, using email classification as the application. It walks through the model architecture, including text filtering, tokenization, and a bidirectional LSTM network. Key takeaways include careful data preparation and the integration of TensorFlow with Spark for end-to-end machine learning workflows.
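The summary mentions a bidirectional LSTM. The sketch below shows only the bidirectional part: one cell reads the sequence left to right, another reads it right to left, and the encoder concatenates the forward state at the last position with the backward state at the first position, so both halves have seen the whole email. It is a framework-free illustration under stated assumptions: `make_tanh_cell` is a hypothetical toy elementwise cell standing in for an LSTM cell (so inputs and hidden states must have the same size), and all function names are illustrative, not the presenters' code.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
# A recurrent cell maps (input x_t, previous hidden state h_{t-1}) to h_t.
Cell = Callable[[Vector, Vector], Vector]


def make_tanh_cell(w_in: float, w_rec: float) -> Cell:
    """Toy elementwise cell h_t = tanh(w_in * x_t + w_rec * h_{t-1}).

    Hypothetical stand-in for an LSTM cell, used only to keep the sketch short.
    """
    def cell(x: Vector, h: Vector) -> Vector:
        return [math.tanh(w_in * xi + w_rec * hi) for xi, hi in zip(x, h)]
    return cell


def run(cell: Cell, inputs: Sequence[Vector], size: int) -> List[Vector]:
    """Unroll `cell` over `inputs` from a zero state; return every hidden state."""
    h = [0.0] * size
    states = []
    for x in inputs:
        h = cell(x, h)
        states.append(h)
    return states


def bidirectional_encode(fwd: Cell, bwd: Cell, inputs: Sequence[Vector], size: int) -> Vector:
    """Concatenate the forward state at the last position with the
    backward state at the first position (both have seen the whole sequence)."""
    forward_states = run(fwd, inputs, size)
    # Read right to left, then flip so index t again means input position t.
    backward_states = list(reversed(run(bwd, list(reversed(inputs)), size)))
    return forward_states[-1] + backward_states[0]


inputs = [[0.1, -0.2], [0.3, 0.0], [-0.5, 0.4], [0.2, 0.2]]
encoding = bidirectional_encode(make_tanh_cell(1.0, 0.5), make_tanh_cell(0.8, -0.3), inputs, size=2)
print(len(encoding))  # 4: two forward units followed by two backward units
```

Concatenating the two end states, rather than averaging them, keeps the left-to-right and right-to-left summaries separate for the classifier layer that follows.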

![Detailed Considerations for the Model

• We applied dropout to the recurrent connections* and the inputs.

• We also applied L2 regularization to the model parameters.

About dropout and regularization

Figure: bidirectional LSTM unrolled over inputs x0 to x3, with forward cells Cf0 to Cf3, backward cells Cb0 to Cb3, and outputs Ob0 and Of3.

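import tensorflow as tf  # TensorFlow 1.x API
# L2 penalty over every trainable parameter; tf.nn.l2_loss(v) = sum(v ** 2) / 2
trainable_vars = tf.trainable_variables()
regularization_loss = tf.reduce_sum(
    [tf.nn.l2_loss(v) for v in trainable_vars])
loss = original_loss + reg_weight * regularization_loss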

*Gal & Ghahramani NIPS 2016](https://image.slidesharecdn.com/3alexisrooswenhaoliu-180614024257/85/Deep-Learning-for-Natural-Language-Processing-Using-Apache-Spark-and-TensorFlowwith-Alexis-Roos-and-Wenhao-liu-20-320.jpg)
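The slide combines two regularizers: an L2 penalty built from `tf.nn.l2_loss`, and dropout on inputs and recurrent connections following Gal & Ghahramani. The plain-Python sketch below illustrates both under stated assumptions: parameters are flat lists of floats, dropout uses inverted scaling by `1/keep_prob`, and the helper names (`regularized_loss`, `sample_mask`, `dropout_over_time`) are hypothetical. The point it tries to show is that one mask is sampled per sequence and reused at every time step, rather than resampled at each step.

```python
import random
from typing import List, Sequence

Vector = List[float]


def l2_loss(values: Sequence[float]) -> float:
    """Half the sum of squares, the same convention as tf.nn.l2_loss."""
    return sum(v * v for v in values) / 2.0


def regularized_loss(original_loss: float, params: Sequence[Sequence[float]],
                     reg_weight: float) -> float:
    """original_loss + reg_weight * (sum of l2_loss over all parameter tensors)."""
    return original_loss + reg_weight * sum(l2_loss(p) for p in params)


def sample_mask(size: int, keep_prob: float, rng: random.Random) -> Vector:
    """Inverted-dropout mask: kept units are scaled by 1/keep_prob, dropped units are 0."""
    return [1.0 / keep_prob if rng.random() < keep_prob else 0.0 for _ in range(size)]


def apply_mask(x: Vector, mask: Vector) -> Vector:
    return [xi * mi for xi, mi in zip(x, mask)]


def dropout_over_time(steps: Sequence[Vector], mask: Vector) -> List[Vector]:
    """Apply ONE mask to every time step of a sequence.

    Reusing the mask across steps (for the inputs, and likewise for the
    recurrent state h_{t-1}) is what distinguishes Gal & Ghahramani's
    recurrent dropout from resampling a fresh mask at each step.
    """
    return [apply_mask(x, mask) for x in steps]


rng = random.Random(0)
input_mask = sample_mask(3, keep_prob=0.8, rng=rng)  # sampled once per sequence
masked = dropout_over_time([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]], input_mask)
print(regularized_loss(0.7, [[3.0, 4.0], [1.0]], reg_weight=0.01))
```

Because `tf.nn.l2_loss` already halves the sum of squares, `reg_weight` absorbs that factor of two; tune it as a whole rather than comparing it directly with weight-decay values from libraries that use the unhalved sum.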

![Scala/Spark Pipeline + TensorFlow Model

TensorFrames / SparkDL as Interface

Figure: Raw Emails pass through Filtering to become Filtered Emails; Text Preprocessing then produces the Encoded Input, a BatchSize × Sequence Length matrix of token IDs. tf.nn.embedding_lookup maps these IDs through the Embedding Matrix (VocabularySize × Embedding Length) into the Input Tensor (BatchSize × Sequence Length × Embedding Length).

* Shi Yan, Understanding LSTM and its diagrams](https://image.slidesharecdn.com/3alexisrooswenhaoliu-180614024257/85/Deep-Learning-for-Natural-Language-Processing-Using-Apache-Spark-and-TensorFlowwith-Alexis-Roos-and-Wenhao-liu-25-320.jpg)
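The slide traces the shapes from encoded token IDs to the model's input tensor. The sketch below reproduces that path in plain Python under stated assumptions: lowercasing plus whitespace tokenization stands in for the Spark-side filtering and preprocessing (which the slide does not detail), and `PAD_ID = 0`, `UNK_ID = 1`, and the tiny vocabulary and embedding values are hypothetical. `embedding_lookup` mimics what `tf.nn.embedding_lookup` does for a 2-D ID tensor: it gathers one embedding row per ID.

```python
from typing import Dict, List, Sequence

PAD_ID = 0  # hypothetical id reserved for padding
UNK_ID = 1  # hypothetical id for out-of-vocabulary tokens


def encode(texts: Sequence[str], vocab: Dict[str, int], seq_len: int) -> List[List[int]]:
    """Lowercase, split on whitespace, map tokens to ids, then truncate or
    pad every row to seq_len. Result shape: BatchSize x Sequence Length."""
    batch = []
    for text in texts:
        ids = [vocab.get(tok, UNK_ID) for tok in text.lower().split()][:seq_len]
        batch.append(ids + [PAD_ID] * (seq_len - len(ids)))
    return batch


def embedding_lookup(embeddings: Sequence[Sequence[float]],
                     ids: List[List[int]]) -> List[List[List[float]]]:
    """Gather one row of the VocabularySize x Embedding Length matrix per id.
    Result shape: BatchSize x Sequence Length x Embedding Length."""
    return [[list(embeddings[i]) for i in row] for row in ids]


vocab = {"meeting": 2, "tomorrow": 3, "invoice": 4}
embeddings = [[0.0, 0.0], [0.5, 0.5], [0.1, 0.2], [0.3, 0.4], [0.9, 0.8]]
encoded = encode(["Meeting tomorrow", "Invoice overdue, please pay"], vocab, seq_len=3)
print(encoded)  # [[2, 3, 0], [4, 1, 1]]
input_tensor = embedding_lookup(embeddings, encoded)
```

Fixing every row to the same sequence length is what lets a batch of emails become one rectangular tensor; the trade-off is that long emails are truncated and short ones carry padding.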

![TensorFrames turns a TensorFlow model into a UDF.

Save → Load → Score

Save the model:

%python

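import tensorflow as tf
import sparkdl.graph.utils as tfx
# Freeze the graph down to input_data -> predicted and save it as a binary model.pb
graph_def = tfx.strip_and_freeze_until(["input_data", "predicted"], sess.graph, sess=sess)
tf.train.write_graph(graph_def, "/model", "model.pb", as_text=False)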

Load the model:

%scala
val graph = new com.databricks.sparkdl.python.GraphModelFactory()
  .sqlContext(sqlContext)
  .fetches(asJava(Seq("prediction")))
  .inputs(asJava(Seq("input_data")), asJava(Seq("input_data")))
  .graphFromFile("/model/model.pb")
graph.registerUDF("model")

Score with the model:

%scala

val predictions = inputDataSet.selectExpr("InputData", "model(InputData)")](https://image.slidesharecdn.com/3alexisrooswenhaoliu-180614024257/85/Deep-Learning-for-Natural-Language-Processing-Using-Apache-Spark-and-TensorFlowwith-Alexis-Roos-and-Wenhao-liu-26-320.jpg)
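The slide's Save → Load → Score flow relies on TensorFrames and sparkdl on the JVM. The sketch below is only a plain-Python analogy of the same pattern, not their API: a JSON file stands in for `model.pb`, a linear scorer stands in for the frozen TensorFlow graph, and `UDF_REGISTRY`, `save_model`, `load_and_register`, and `select_with_udf` are hypothetical names. It shows the three steps in order and why a fetched output must still exist in the saved artifact.

```python
import json
import os
import tempfile
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical stand-in for the SQL function registry that graph.registerUDF("model") fills.
UDF_REGISTRY: Dict[str, Callable[[Sequence[float]], float]] = {}


def save_model(path: str, weights: List[float], bias: float, outputs: List[str]) -> None:
    """Save: persist only what scoring needs, plus the names of its outputs."""
    with open(path, "w") as f:
        json.dump({"weights": weights, "bias": bias, "outputs": outputs}, f)


def load_and_register(path: str, fetch: str, udf_name: str) -> None:
    """Load: rebuild a scoring function from the file and register it by name."""
    with open(path) as f:
        model = json.load(f)
    if fetch not in model["outputs"]:
        # A fetch that was not kept at save time cannot be served.
        raise KeyError(f"{fetch!r} is not an output of the saved model")
    weights, bias = model["weights"], model["bias"]

    def score(features: Sequence[float]) -> float:
        return sum(w * x for w, x in zip(weights, features)) + bias

    UDF_REGISTRY[udf_name] = score


def select_with_udf(rows: List[dict], column: str, udf_name: str) -> List[Tuple[object, float]]:
    """Score: like selectExpr(column, f"{udf_name}({column})") over a list of rows."""
    udf = UDF_REGISTRY[udf_name]
    return [(row[column], udf(row[column])) for row in rows]


with tempfile.TemporaryDirectory() as model_dir:
    model_path = os.path.join(model_dir, "model.json")
    save_model(model_path, weights=[0.5, -1.0], bias=0.25, outputs=["predicted"])
    load_and_register(model_path, fetch="predicted", udf_name="model")

predictions = select_with_udf([{"InputData": [2.0, 1.0]}, {"InputData": [4.0, 0.0]}],
                              "InputData", "model")
print(predictions)  # [([2.0, 1.0], 0.25), ([4.0, 0.0], 2.25)]
```

Registering the loaded model as a named function is what lets scoring be written as an ordinary column expression, so the same model can be applied from SQL or the DataFrame API without extra glue code.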