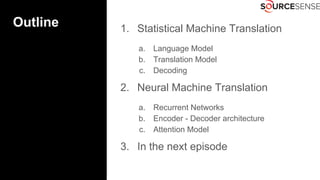

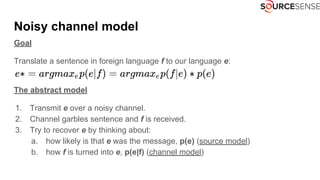

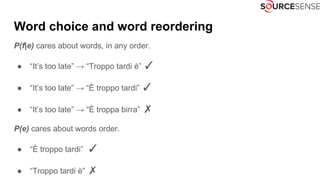

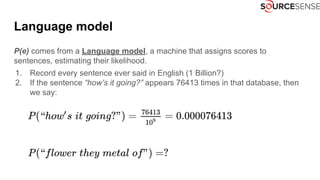

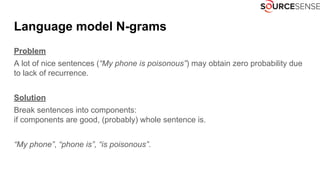

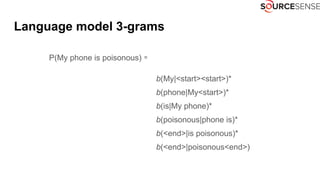

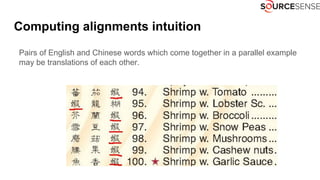

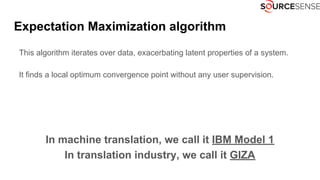

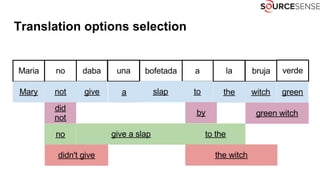

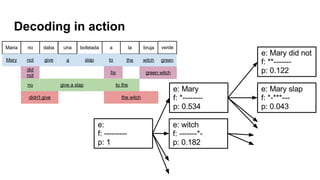

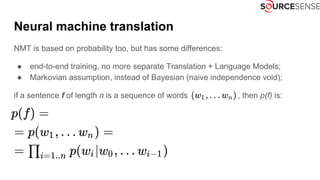

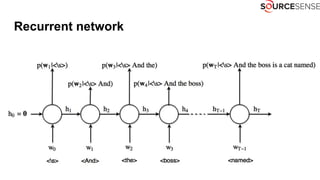

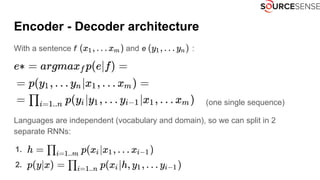

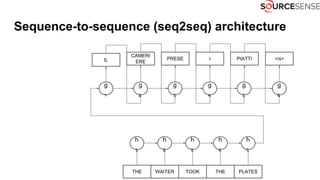

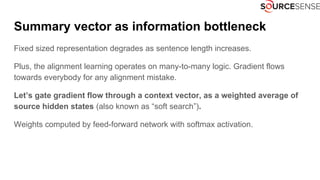

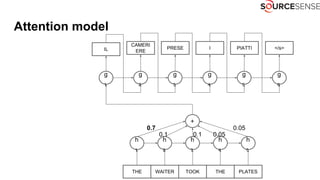

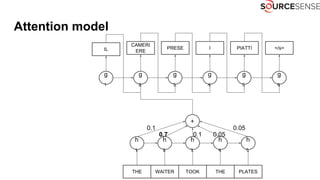

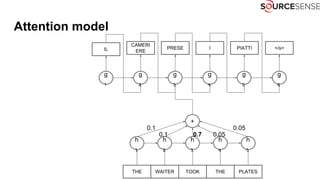

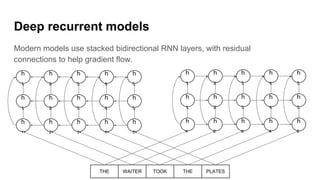

The document discusses the impact of deep learning on machine translation. It traces the shift from statistical to neural machine translation and introduces language models, translation models, and the attention mechanism. It explains how probabilistic formulations and neural architectures, such as the encoder-decoder model and its attention-based extension, are trained to improve translation quality. It also outlines future directions for machine translation, including online adaptation and zero-shot translation.
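To make the attention mechanism concrete, here is a minimal sketch of a single attention step in plain Python. It assumes dot-product scoring between the decoder state and each encoder state, which is only one of several scoring functions in use. The slides' exact formulation is not recoverable from the images, and the function names `attend`, `dot`, and `softmax` and the toy vectors are hypothetical. The decoder scores every source position, normalizes the scores with a softmax into attention weights, and takes the weighted sum of encoder states as the context vector for the next target word.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(decoder_state: Vector, encoder_states: List[Vector]) -> Tuple[List[float], Vector]:
    """Return (attention weights, context vector) for one decoding step.

    Assumption: scores are plain dot products between the decoder state
    and each encoder state (hypothetical choice for illustration).
    """
    # One score per source position.
    scores = [dot(decoder_state, h) for h in encoder_states]
    # Normalize scores into a probability distribution over source positions.
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the encoder states.
    dim = len(encoder_states[0])
    context = [sum(w * h[d] for w, h in zip(weights, encoder_states)) for d in range(dim)]
    return weights, context


if __name__ == "__main__":
    # Hypothetical 2-d encoder states for a 3-word source sentence.
    encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    decoder_state = [2.0, 0.0]
    weights, context = attend(decoder_state, encoder_states)
    print("weights:", [round(w, 3) for w in weights])
    print("context:", [round(c, 3) for c in context])
```

Because the weights are recomputed for every target word, the decoder can focus on different source words at each step instead of relying on one fixed-length summary of the whole sentence.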