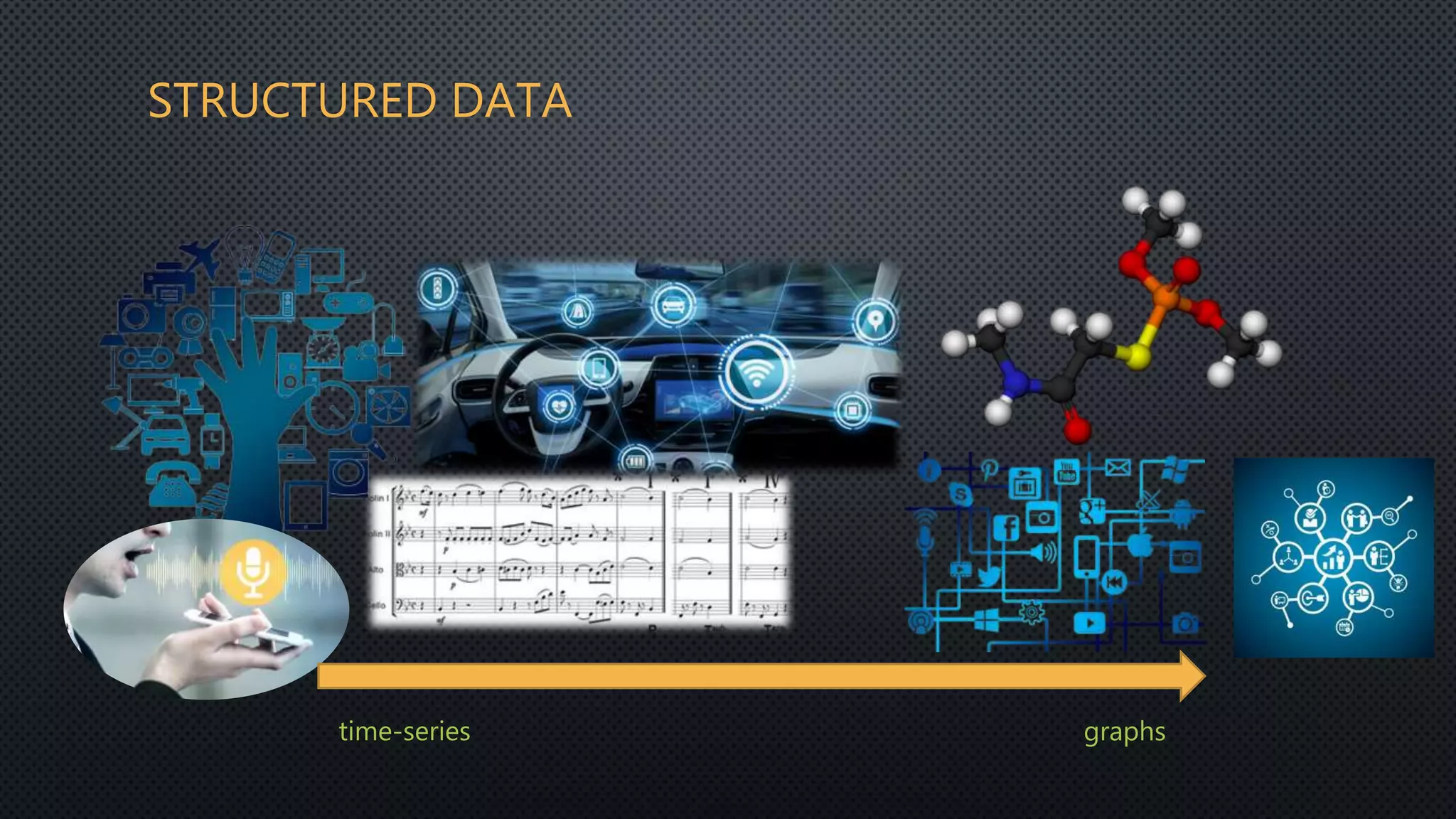

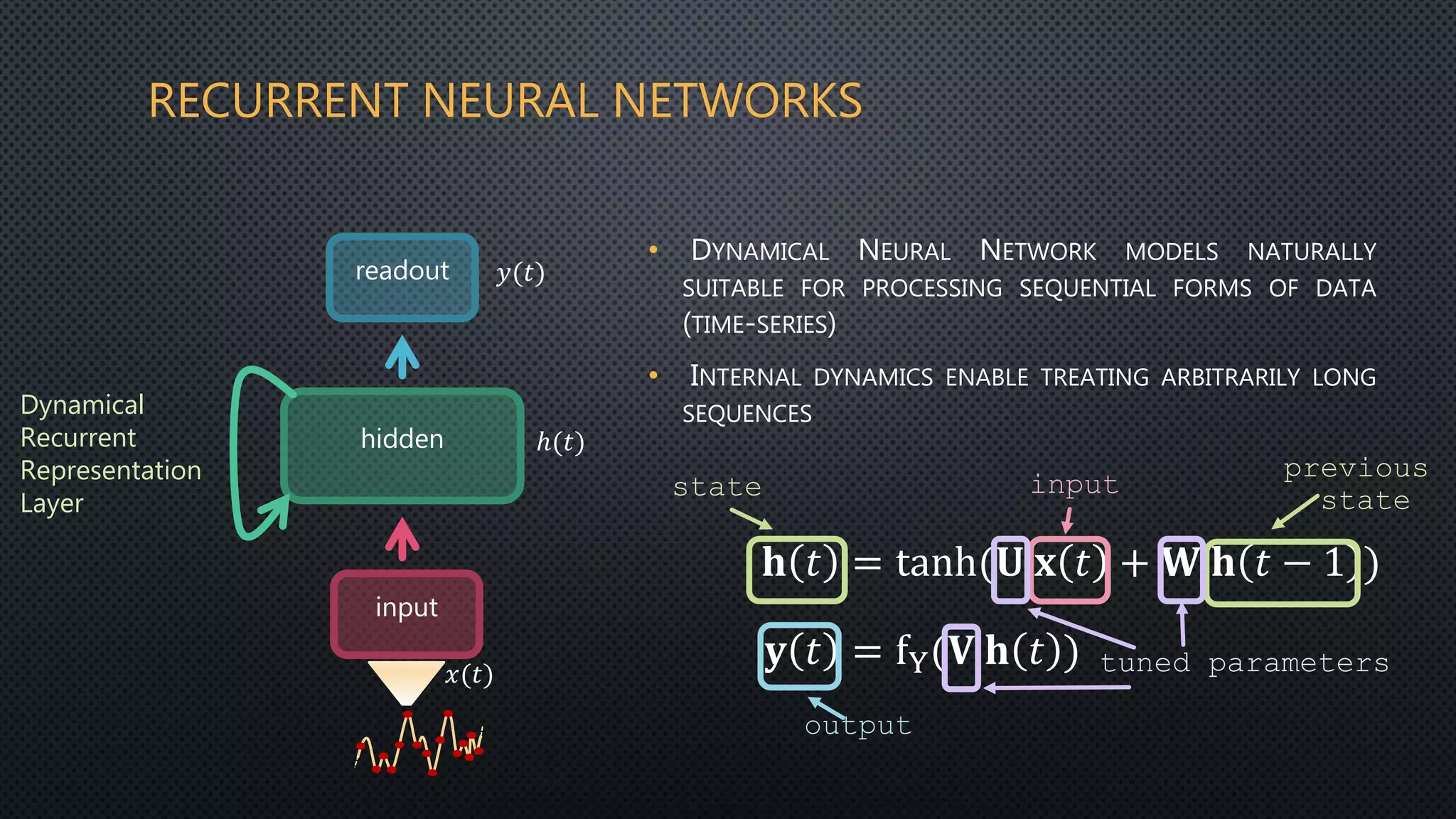

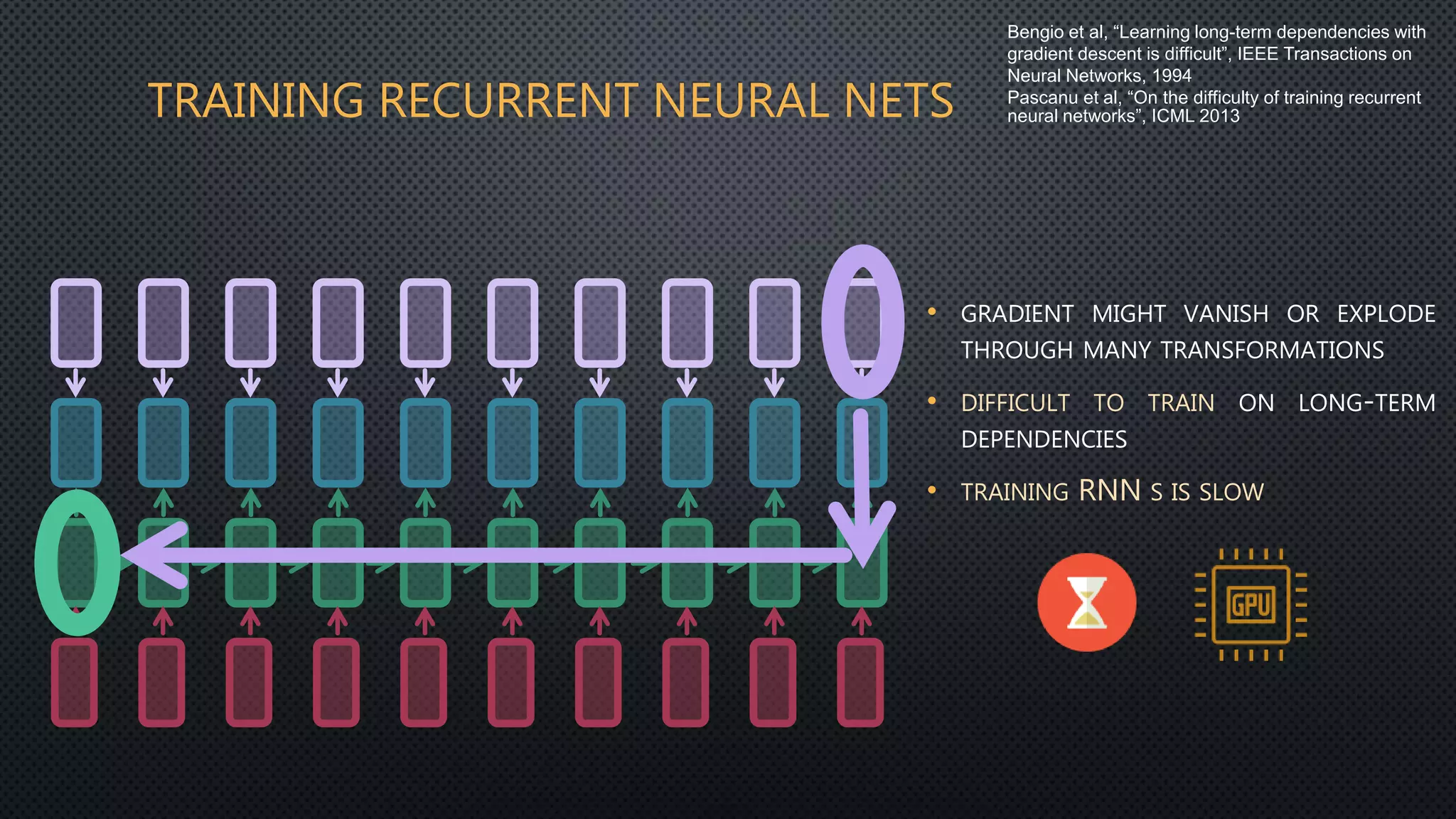

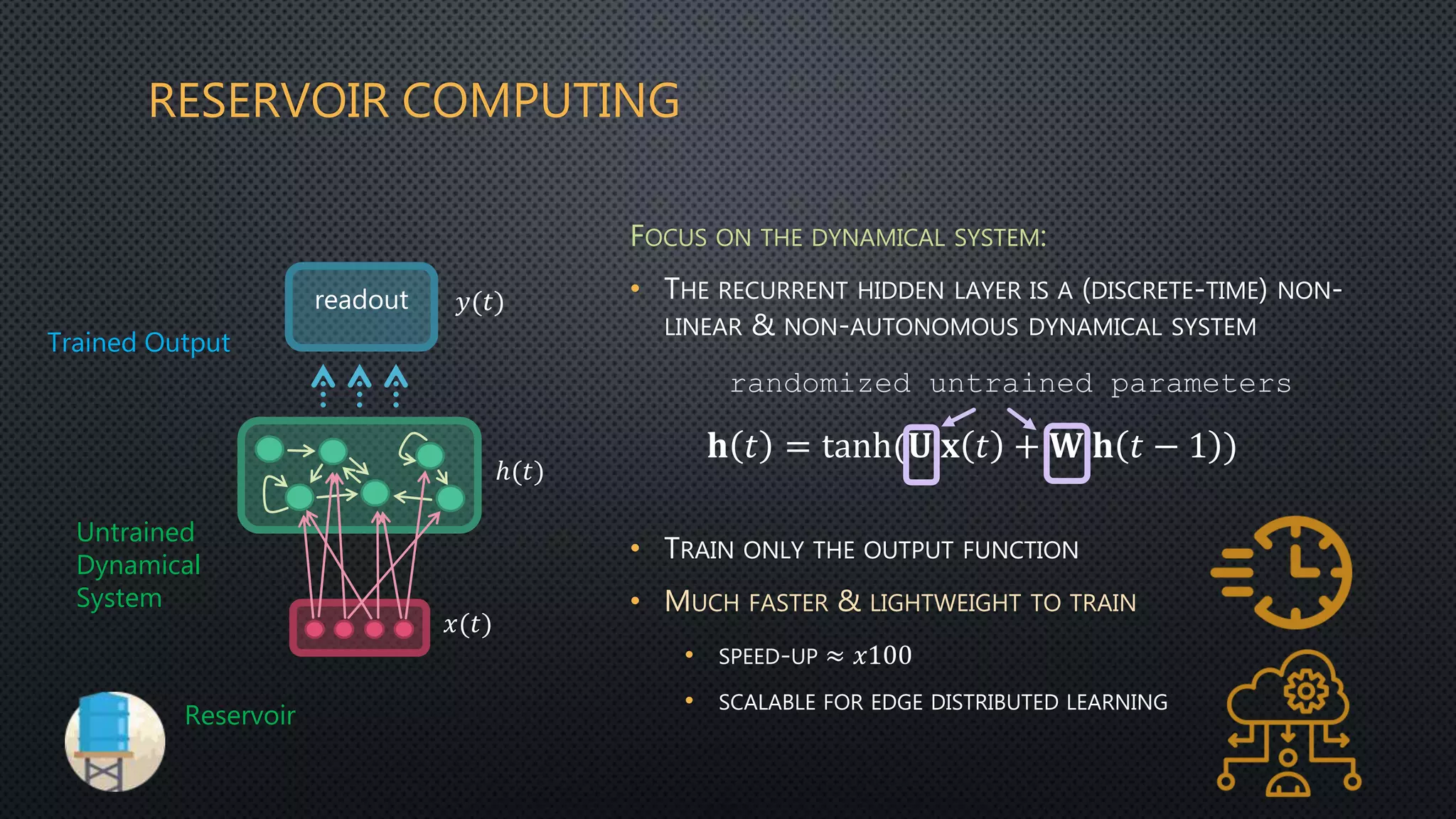

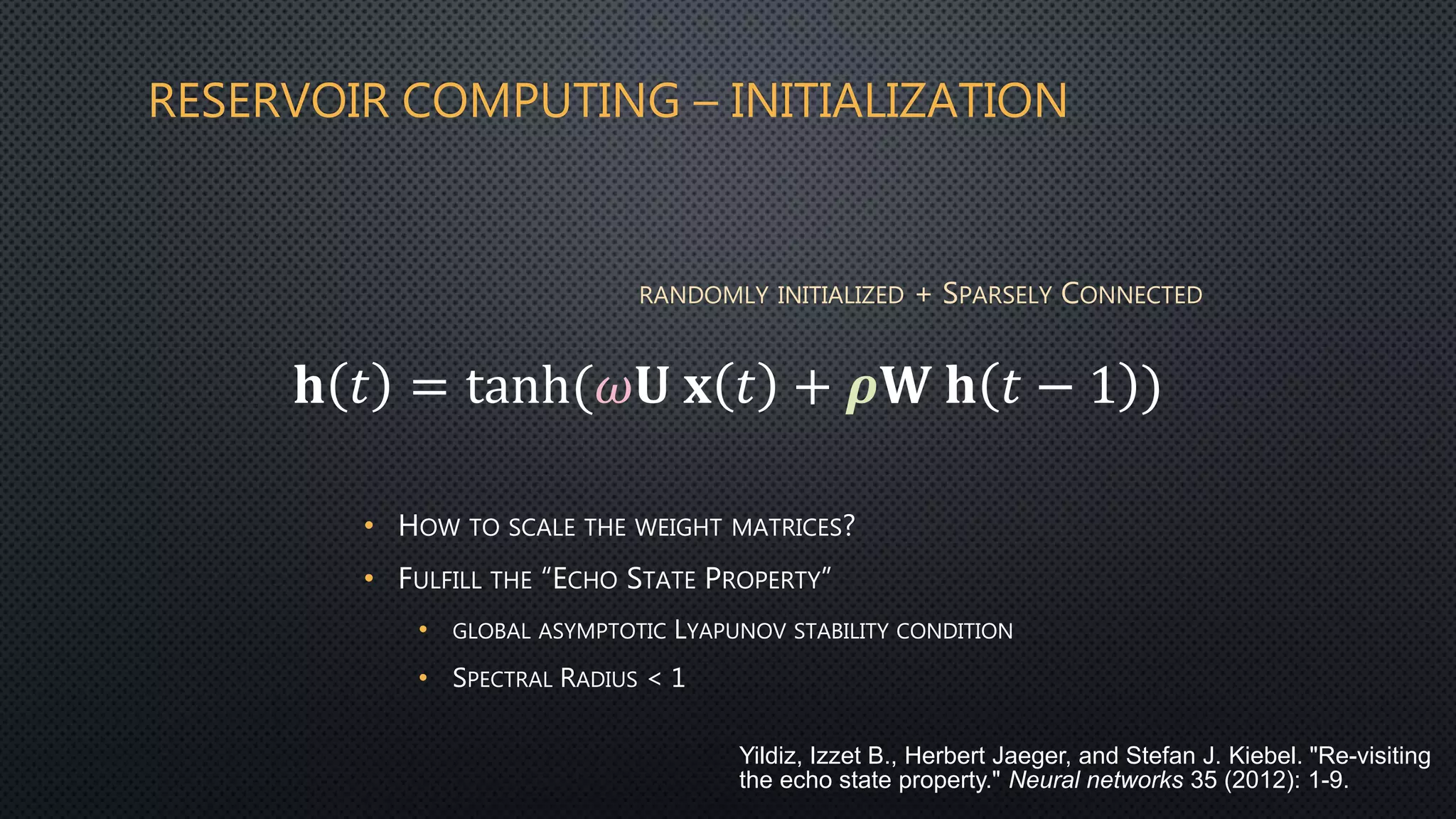

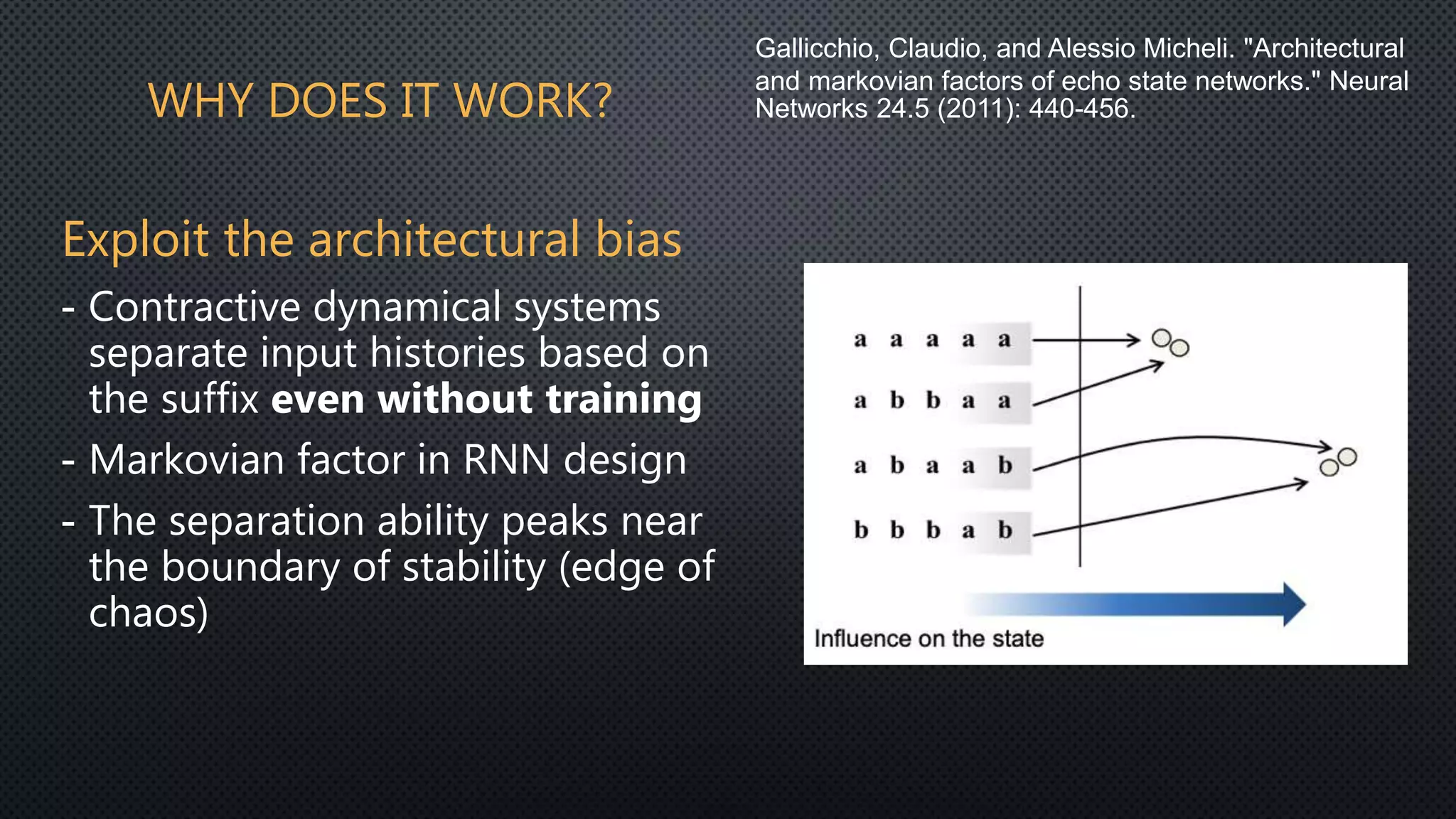

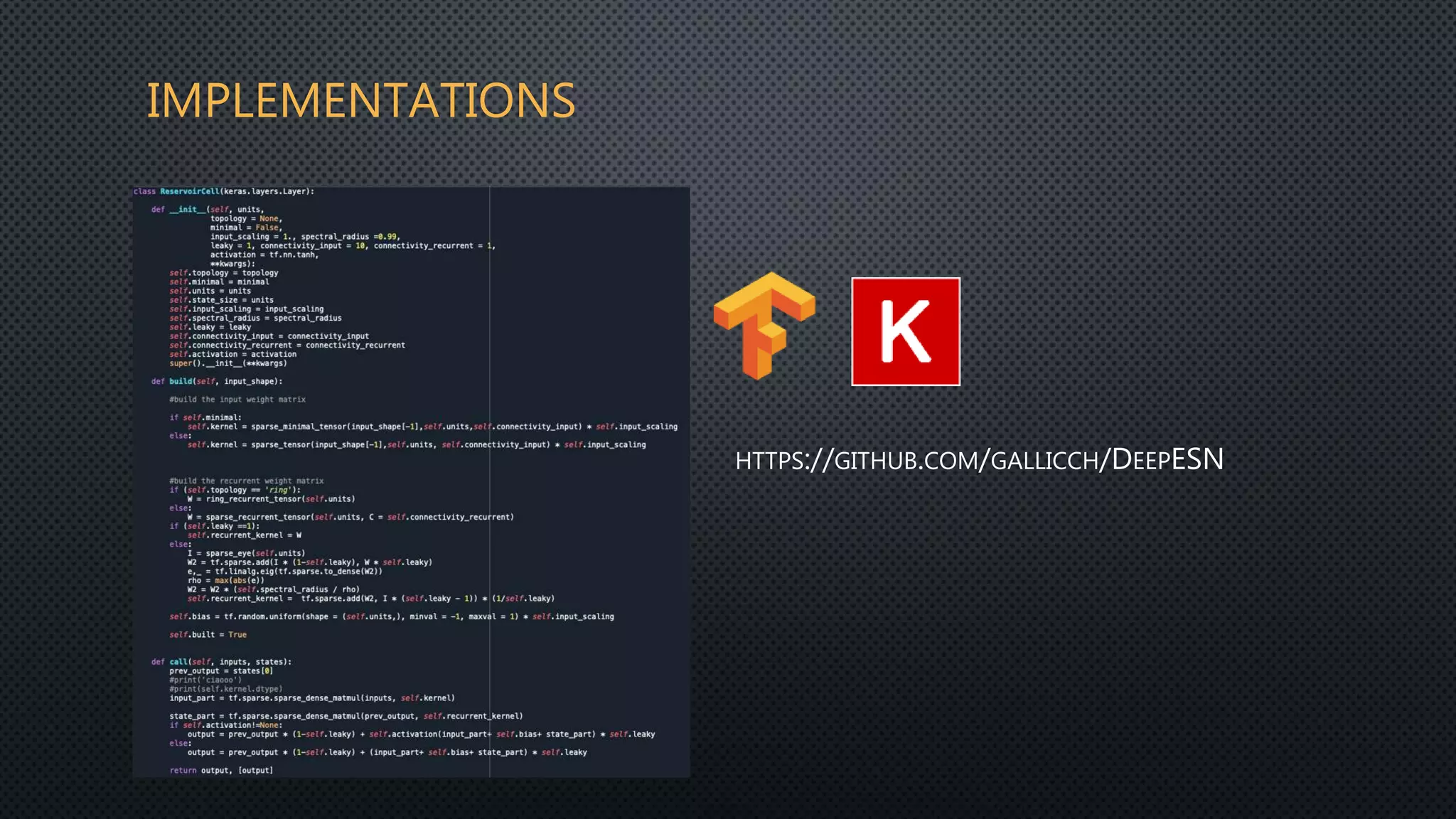

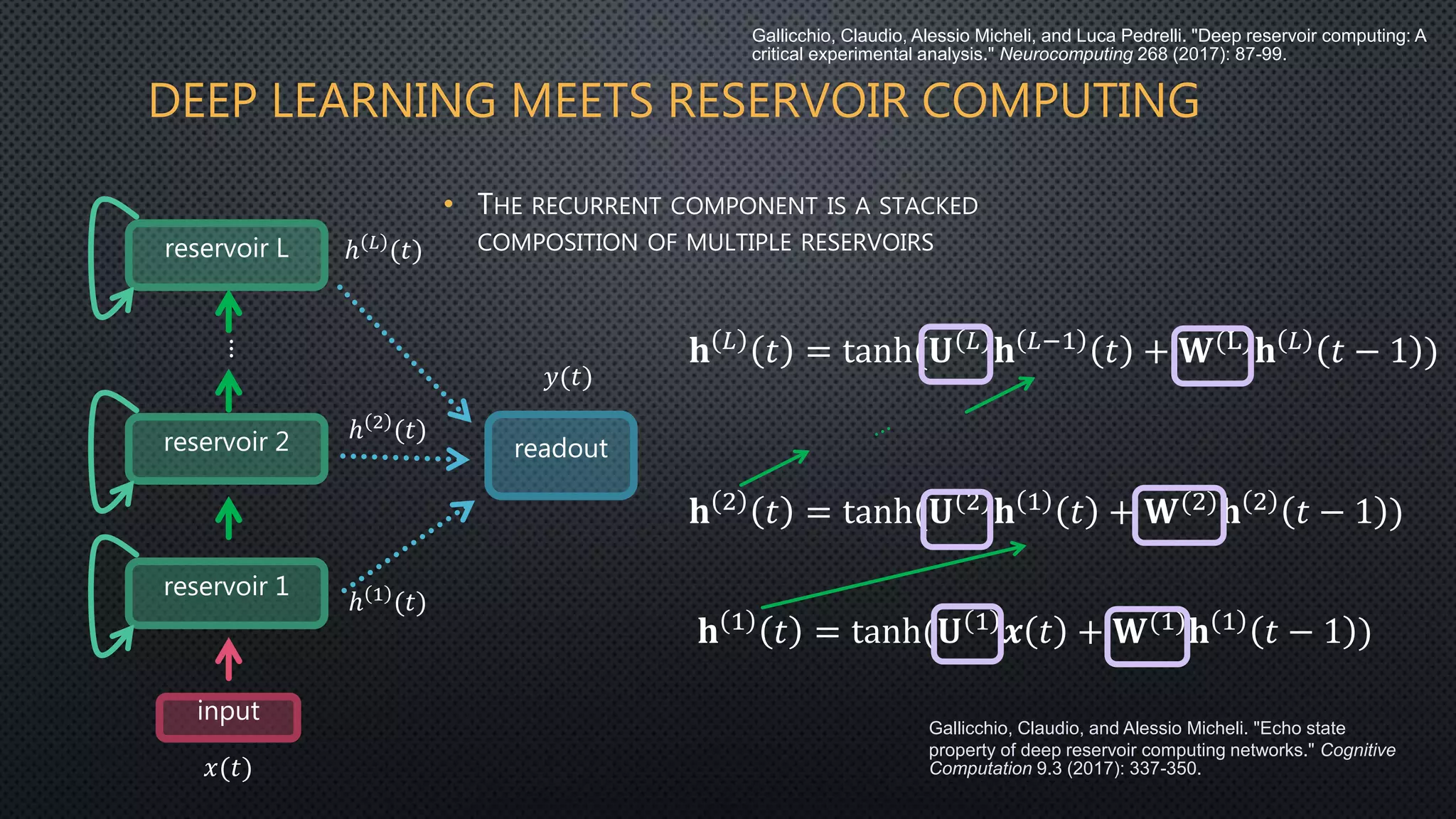

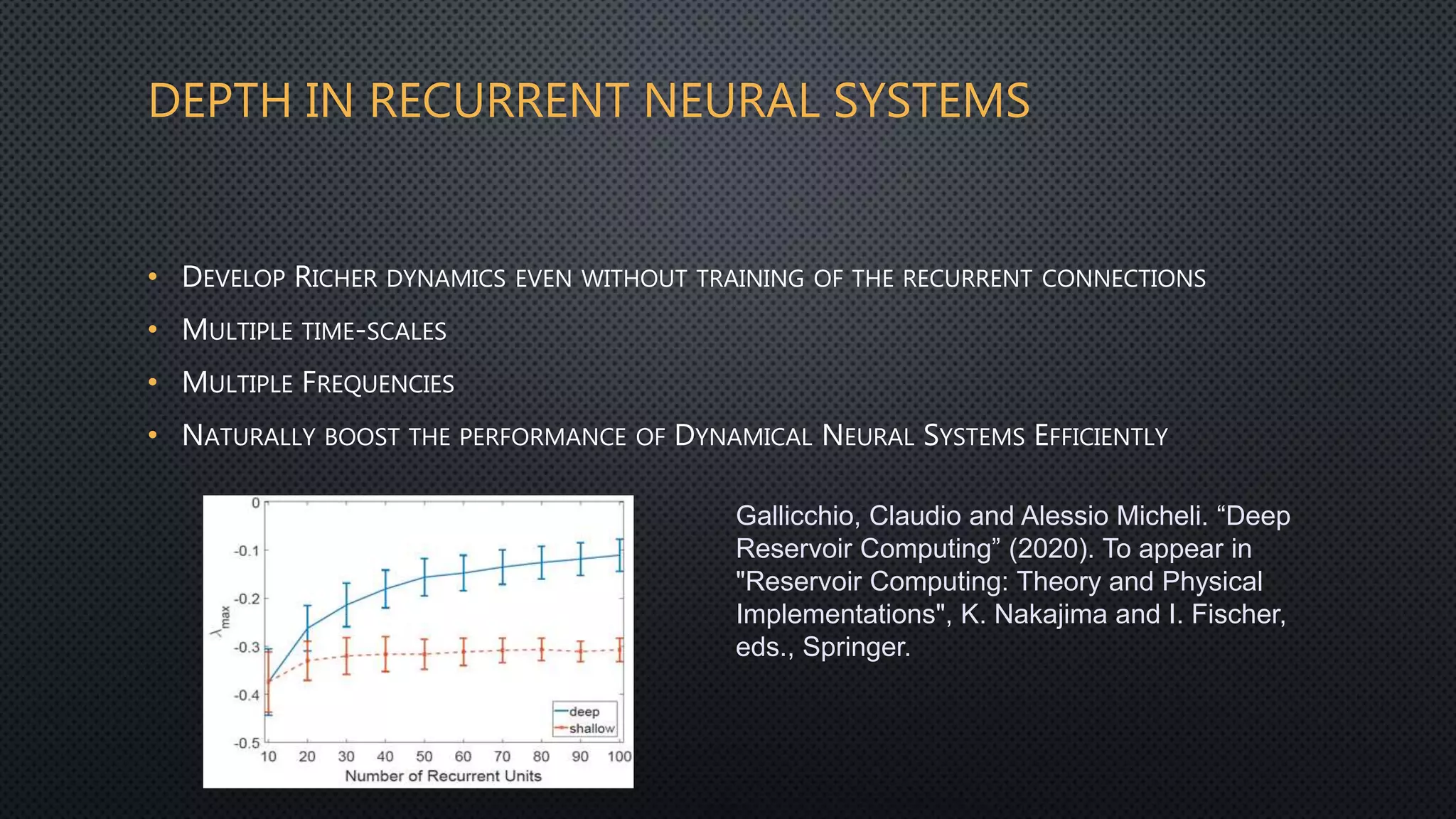

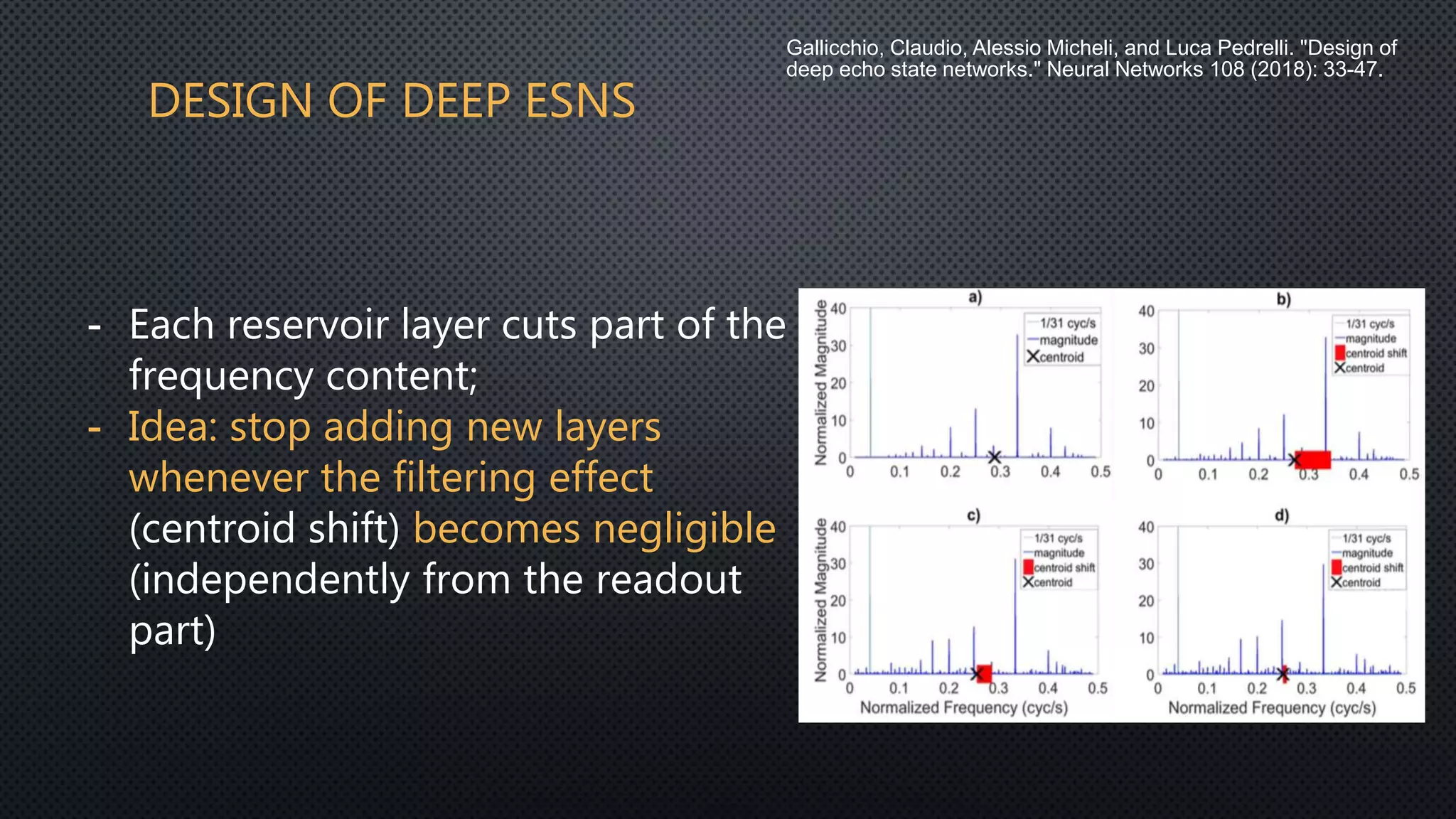

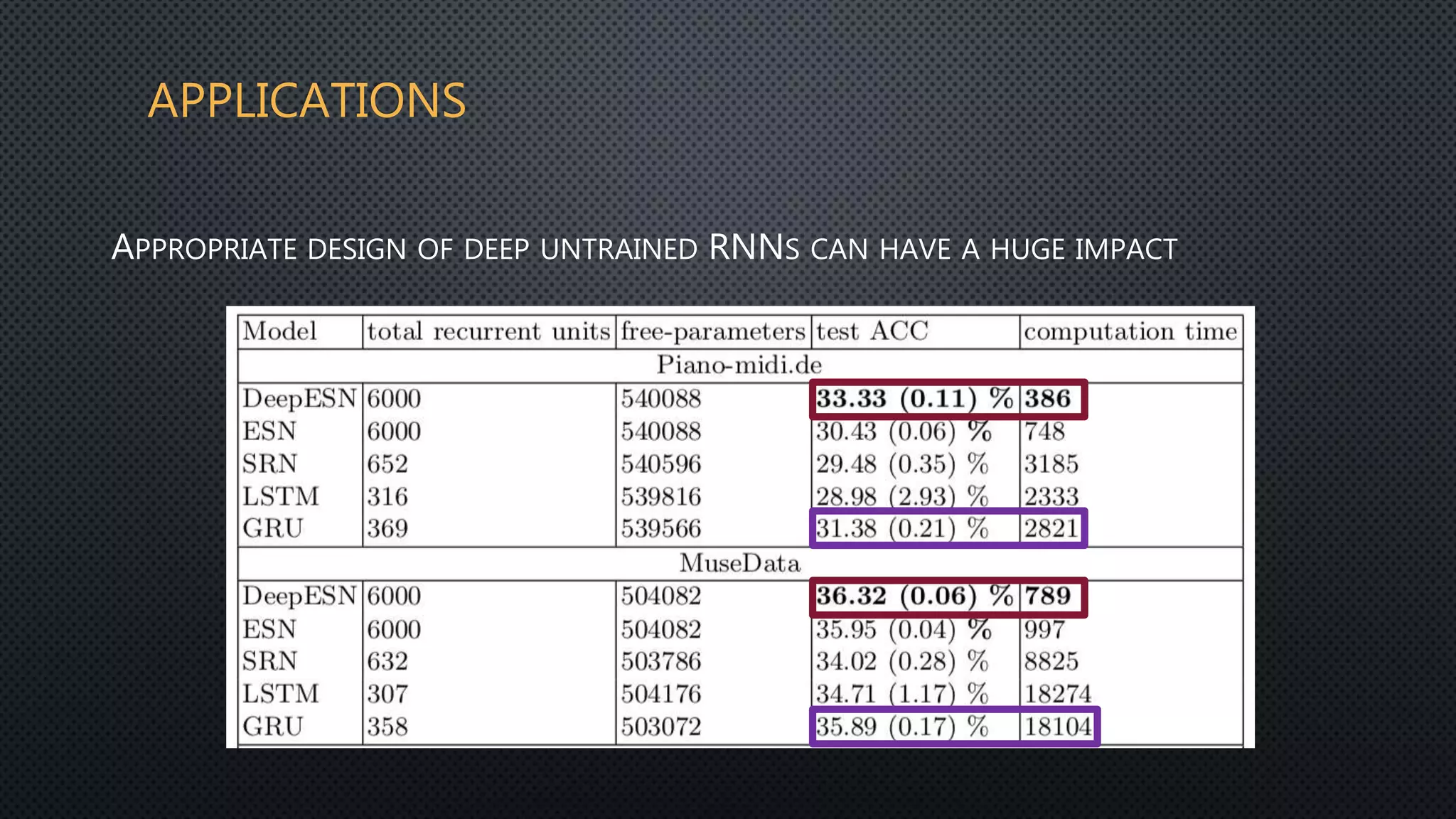

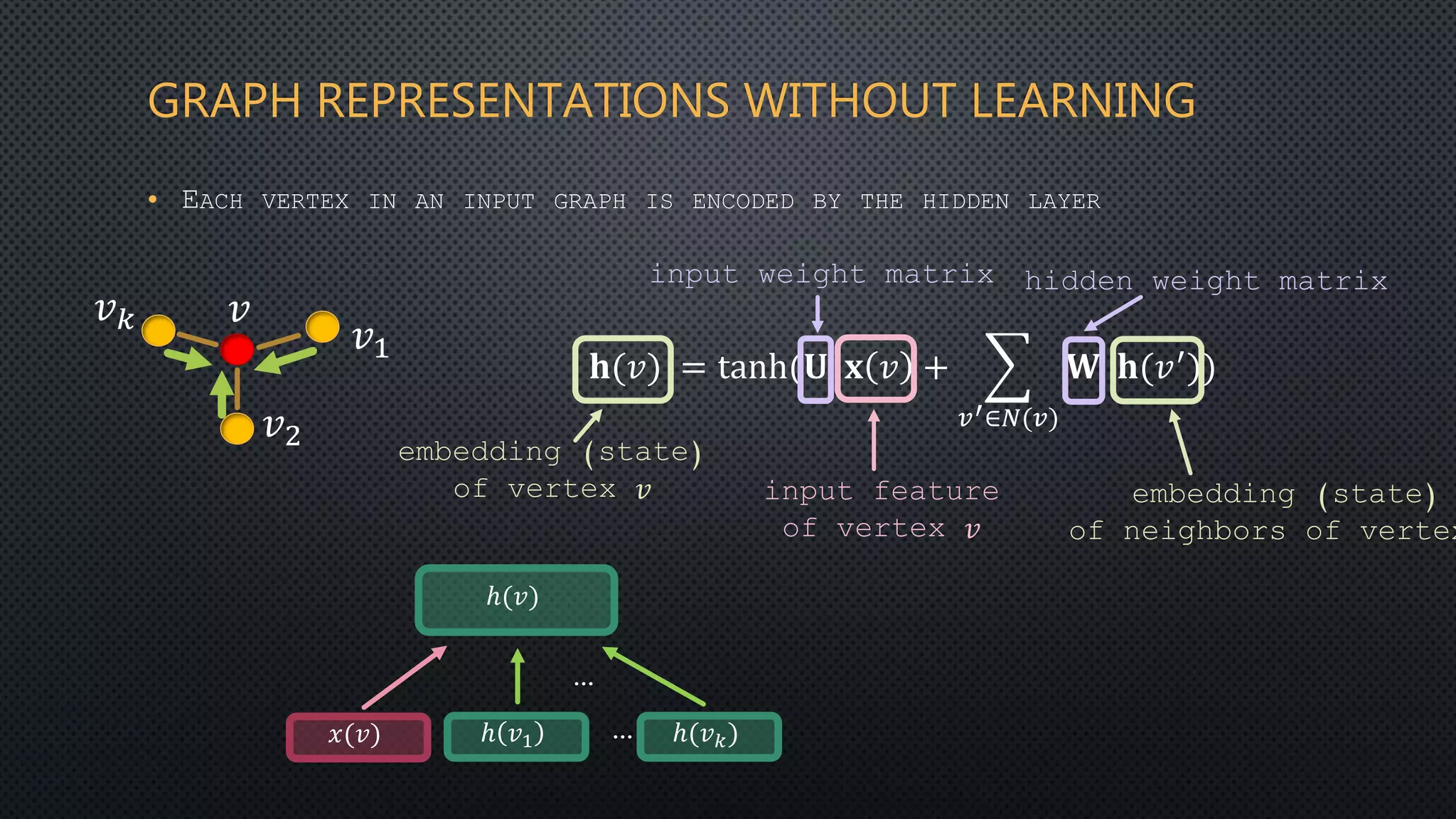

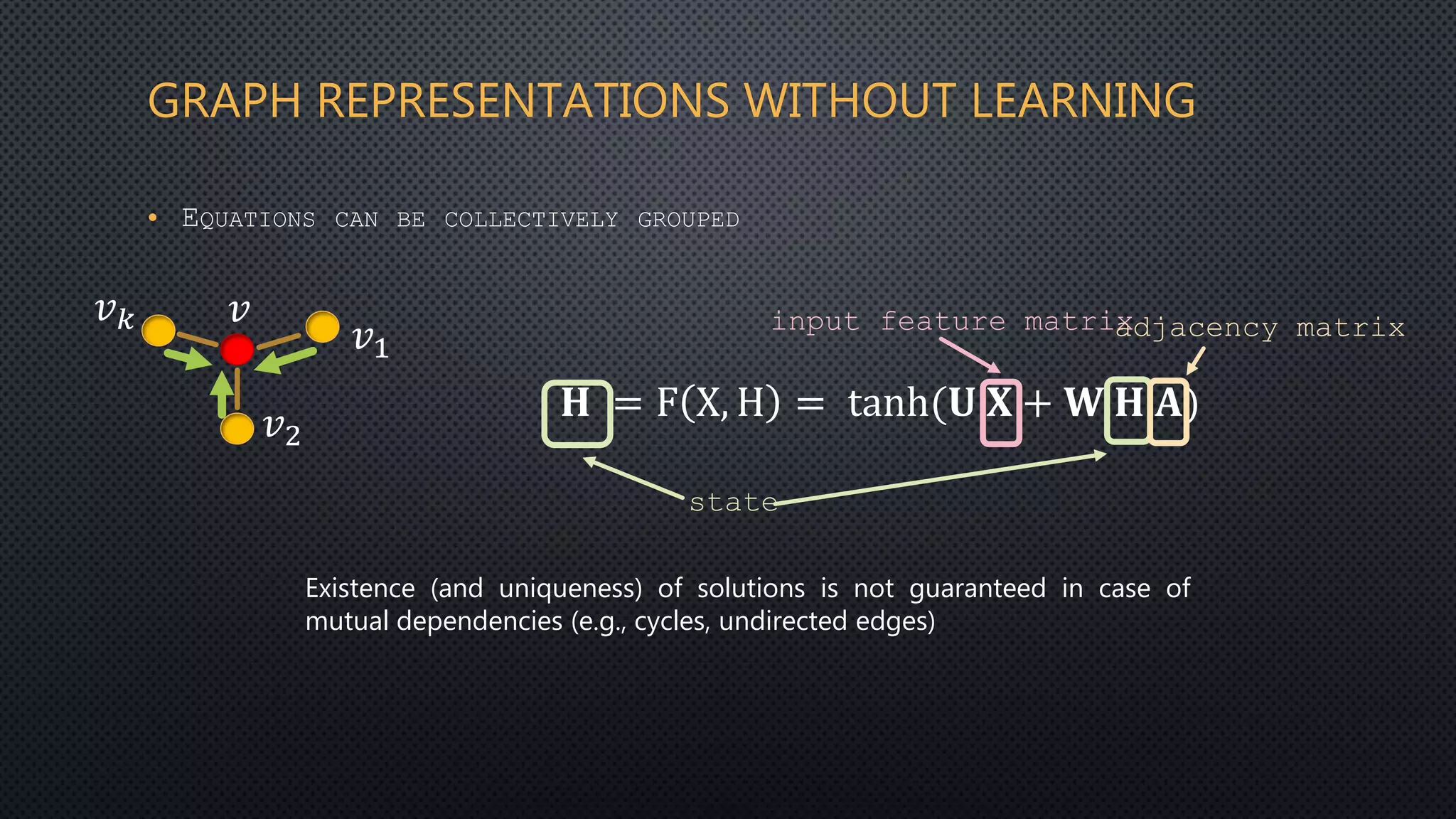

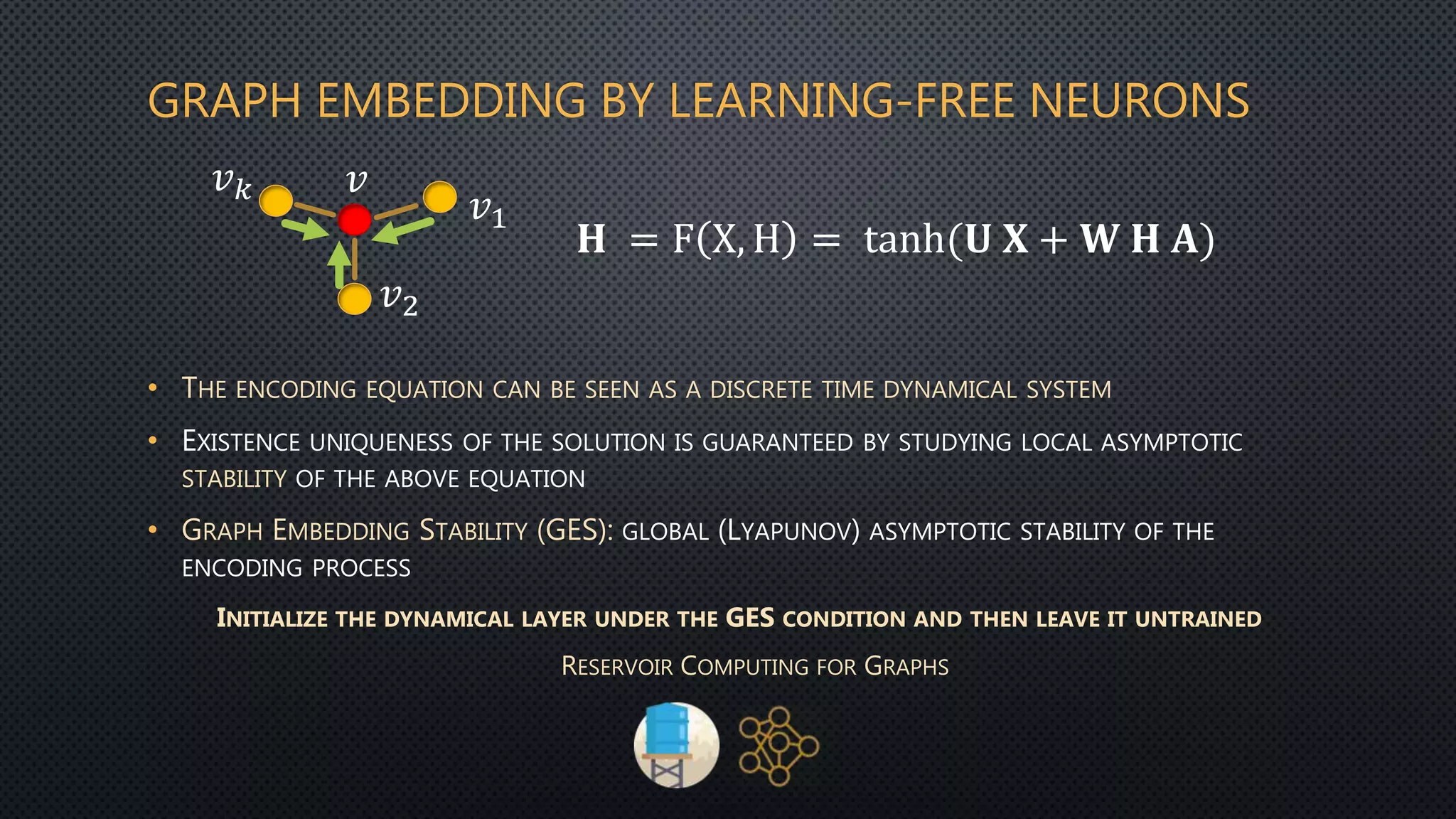

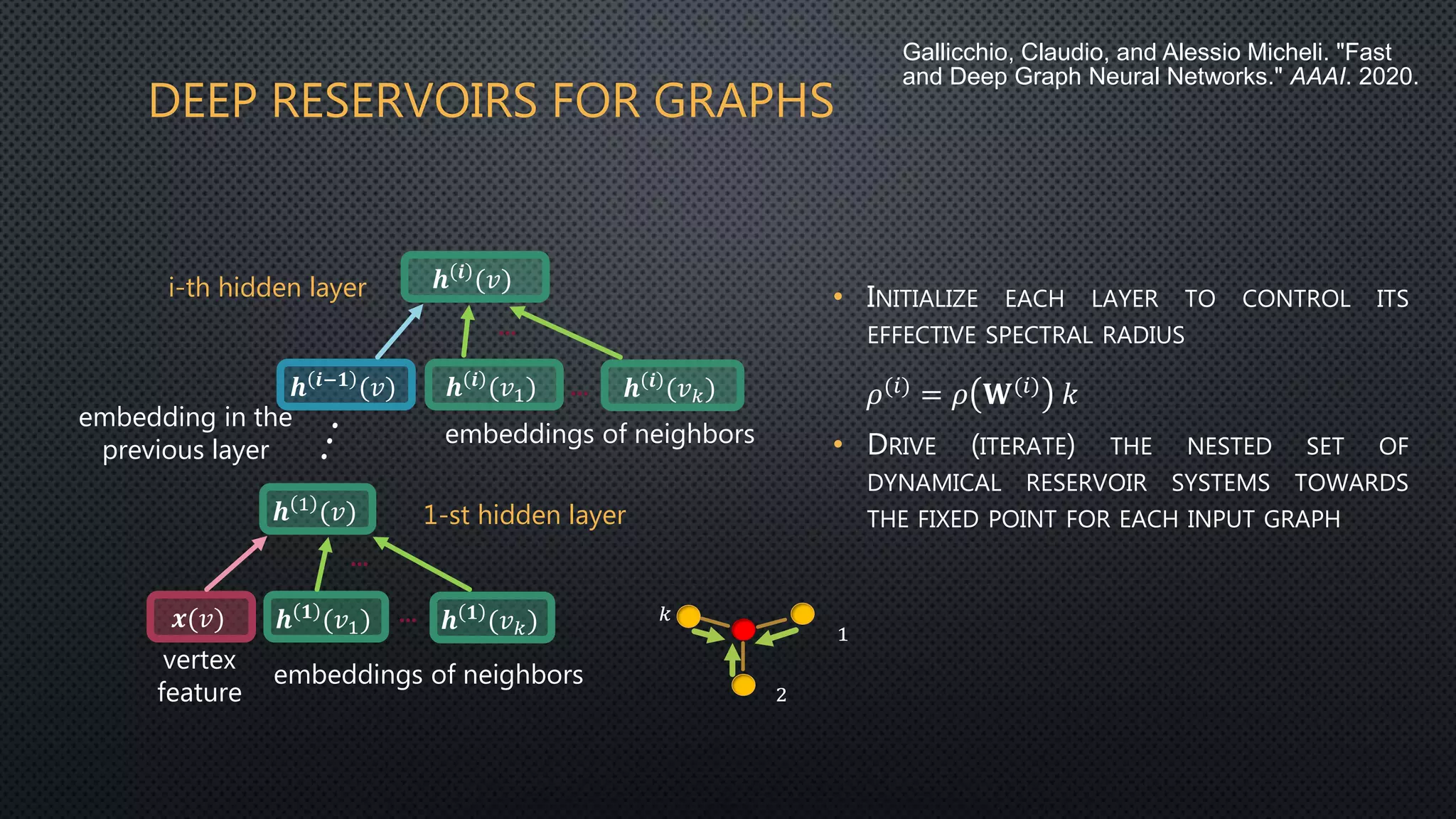

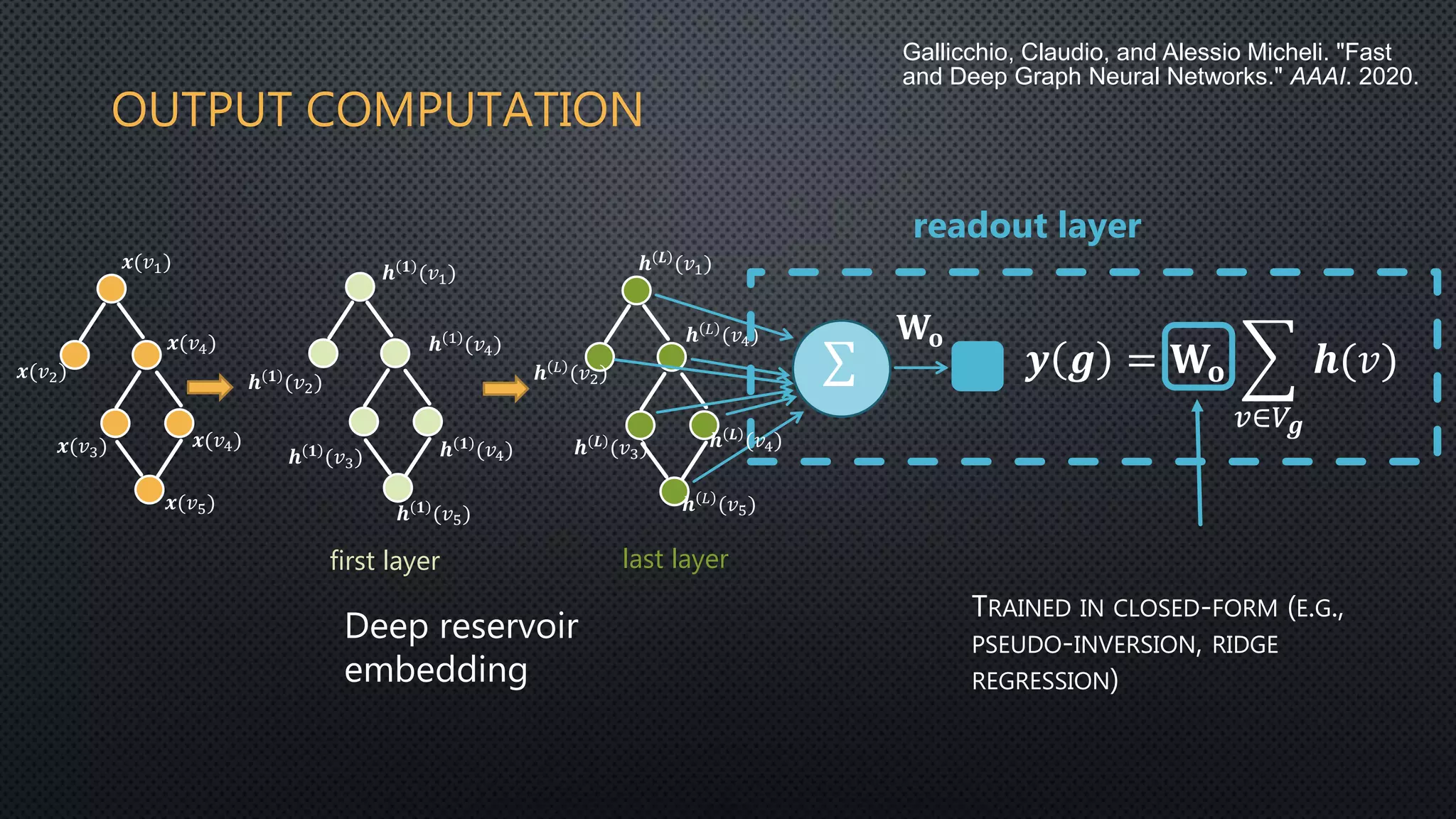

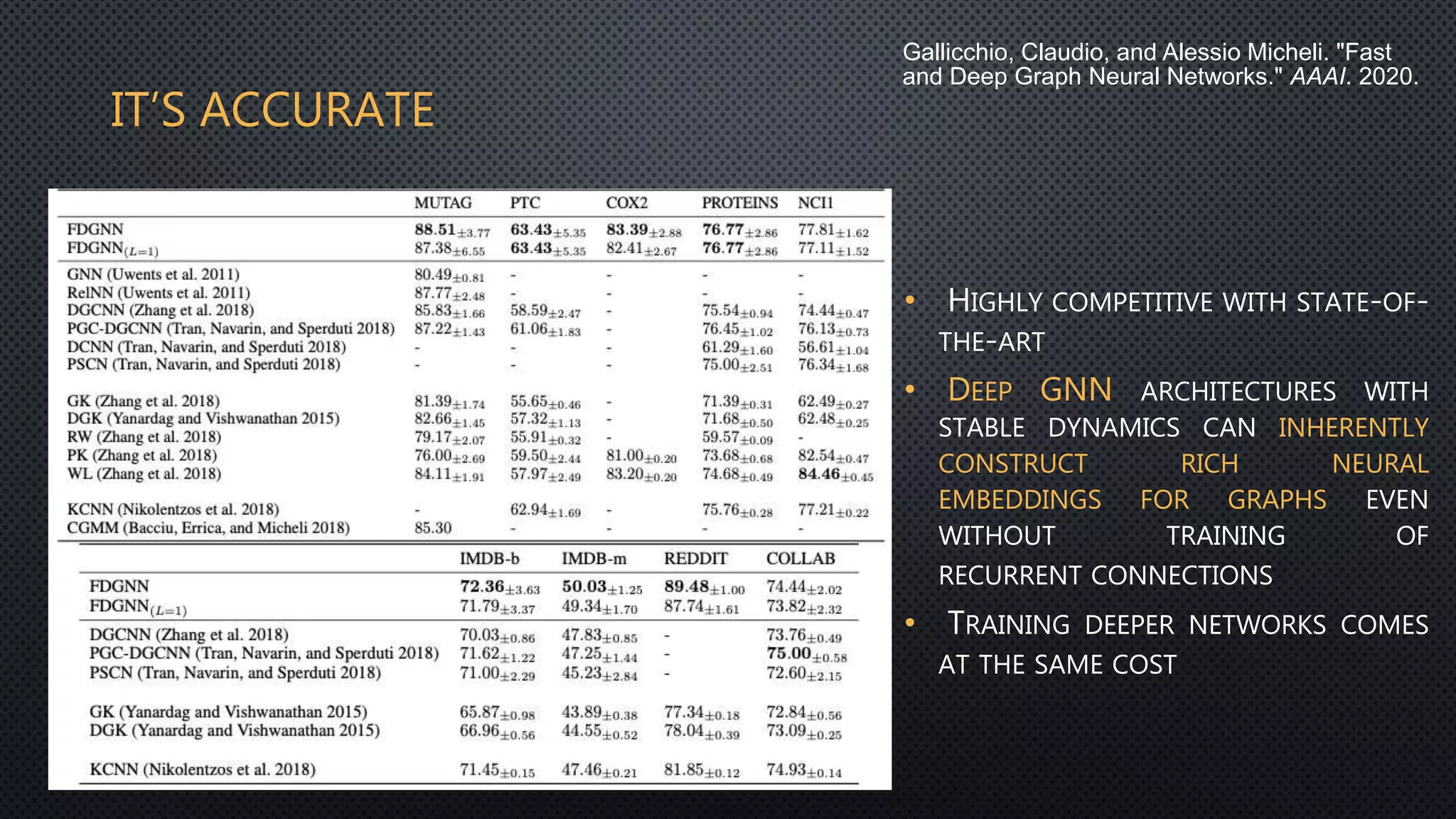

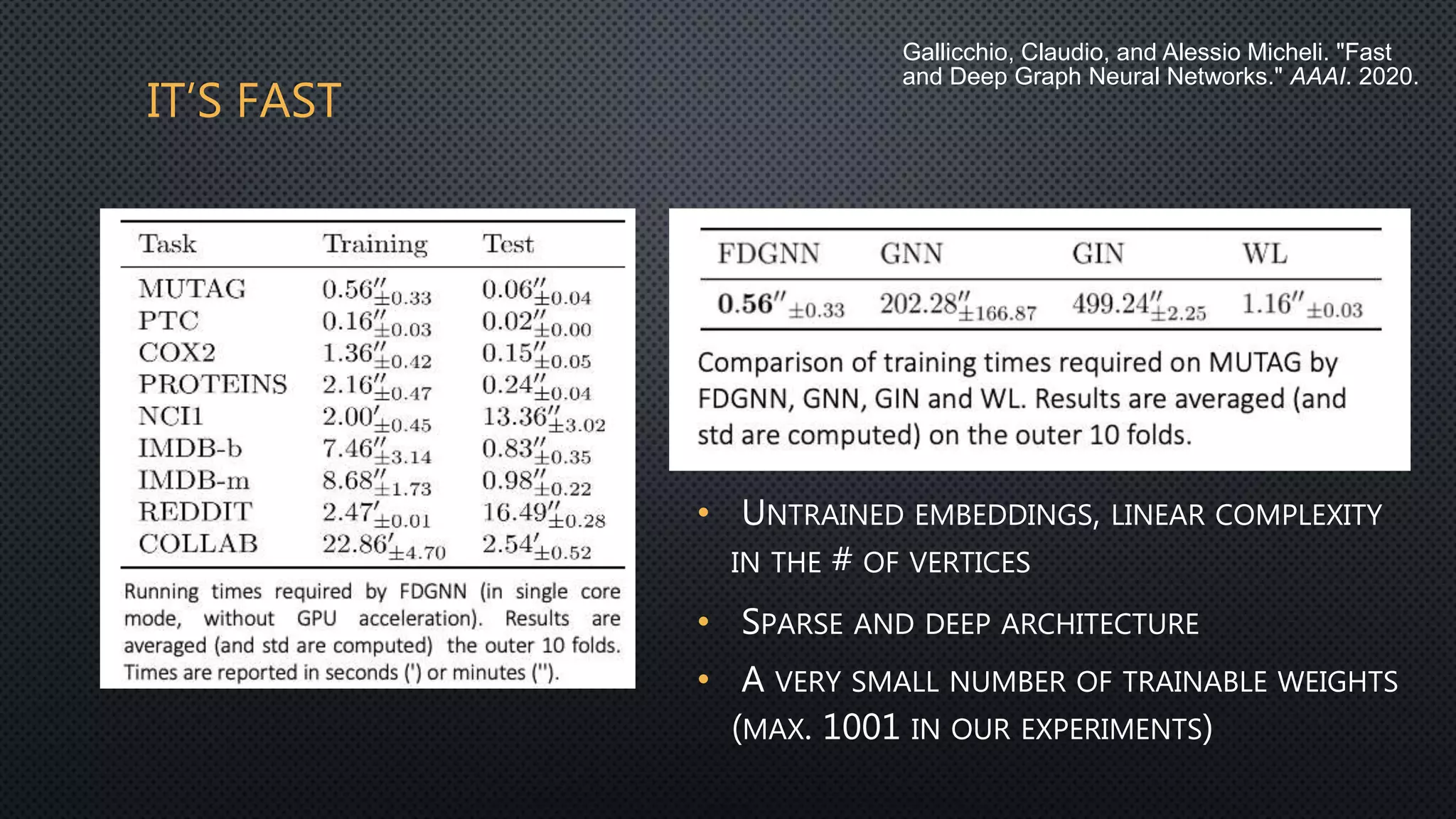

This document discusses deep reservoir computing for structured data such as time series and graphs. Recurrent neural networks are naturally suited to sequential data but are difficult to train. Reservoir computing sidesteps this difficulty by training only the output layer and leaving the recurrent hidden layer (the reservoir) untrained. Stacking multiple reservoir layers yields deep reservoir computing, which develops richer dynamics than a single layer. For graphs, each node is encoded by the fixed point of a dynamical system implemented as a reservoir network. Deep reservoirs can therefore construct rich graph embeddings without training any recurrent connections. The resulting graph neural networks are accurate yet fast compared with state-of-the-art methods that require training deep architectures.
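
The two ideas summarized above can be illustrated with a minimal NumPy sketch. It is not the method from the slides. All function names, layer sizes, and scaling constants are hypothetical choices made for illustration. The node update `x_v = tanh(W_in u_v + W * sum of neighbor states)` is one common way to write a graph reservoir and is assumed here, and the recurrent weights in the graph demo are rescaled to make the update a contraction so that the fixed-point iteration is guaranteed to converge.

```python
import numpy as np

def make_reservoir(n_in, n_units, rng, spectral_radius=0.9, input_scale=0.5):
    """Random, untrained input and recurrent weights for one reservoir layer."""
    W_in = rng.uniform(-input_scale, input_scale, size=(n_units, n_in))
    W = rng.uniform(-1.0, 1.0, size=(n_units, n_units))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius.
    W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
    return W_in, W

def run_deep_reservoir(u, layers):
    """Drive stacked reservoirs with a sequence u of shape (T, n_in).

    Returns the concatenated states of all layers, shape (T, total units).
    """
    inputs, all_states = u, []
    for W_in, W in layers:
        x = np.zeros(W.shape[0])
        states = np.empty((len(inputs), W.shape[0]))
        for t, u_t in enumerate(inputs):
            x = np.tanh(W_in @ u_t + W @ x)
            states[t] = x
        all_states.append(states)
        inputs = states  # the next layer is driven by this layer's states
    return np.hstack(all_states)

def fit_readout(states, targets, ridge=1e-6):
    """Ridge regression for the output layer, the only trained component."""
    A = states.T @ states + ridge * np.eye(states.shape[1])
    return np.linalg.solve(A, states.T @ targets)

def graph_fixed_point(adj, features, W_in, W, tol=1e-8, max_iter=500):
    """Iterate the assumed node update until the node states stop changing."""
    X = np.zeros((adj.shape[0], W.shape[0]))
    for _ in range(max_iter):
        X_new = np.tanh(features @ W_in.T + adj @ X @ W.T)
        if np.max(np.abs(X_new - X)) < tol:
            return X_new
        X = X_new
    return X

rng = np.random.default_rng(0)

# Time series: two stacked reservoirs, next-step prediction of a sine wave.
u = np.sin(0.2 * np.arange(300)).reshape(-1, 1)
layers = [make_reservoir(1, 30, rng), make_reservoir(30, 30, rng)]
states = run_deep_reservoir(u, layers)
washout = 50  # discard the initial transient before fitting
W_out = fit_readout(states[washout:-1], u[washout + 1:])
train_mse = float(np.mean((states[washout:-1] @ W_out - u[washout + 1:]) ** 2))

# Graph: a 4-node path graph with 3 random features per node.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
feats = rng.uniform(-1.0, 1.0, size=(4, 3))
W_in_g, W_g = make_reservoir(3, 20, rng)
# Assumption: force a contraction (Lipschitz constant 0.5) to ensure convergence.
W_g *= 0.5 / (np.linalg.norm(W_g, 2) * np.linalg.norm(adj, 2))
node_embeddings = graph_fixed_point(adj, feats, W_in_g, W_g)
```

In both cases the recurrent weights are drawn once and never updated. The only fitted parameters are those of the linear readout `W_out`, which is why training reduces to solving a single linear system. In the graph case, `node_embeddings` would be fed to a readout in the same way.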