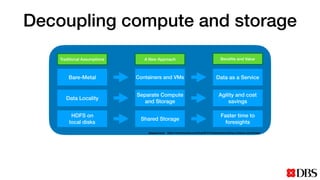

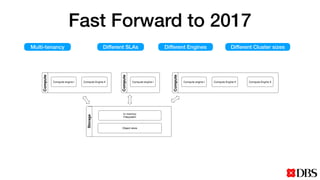

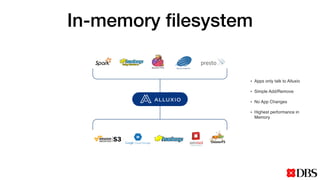

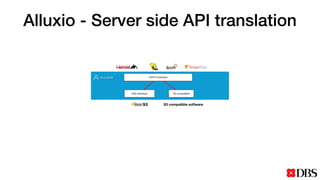

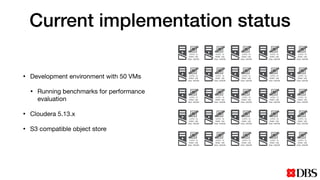

The document discusses the challenges of scaling compute and storage for data processing workloads, particularly for Hadoop at DBS. It proposes decoupling compute from storage, using technologies such as Alluxio and object stores, to improve flexibility, performance, and cost-effectiveness. The current implementation is being developed on virtual machines, with performance benchmarks run on Cloudera and S3-compatible environments.