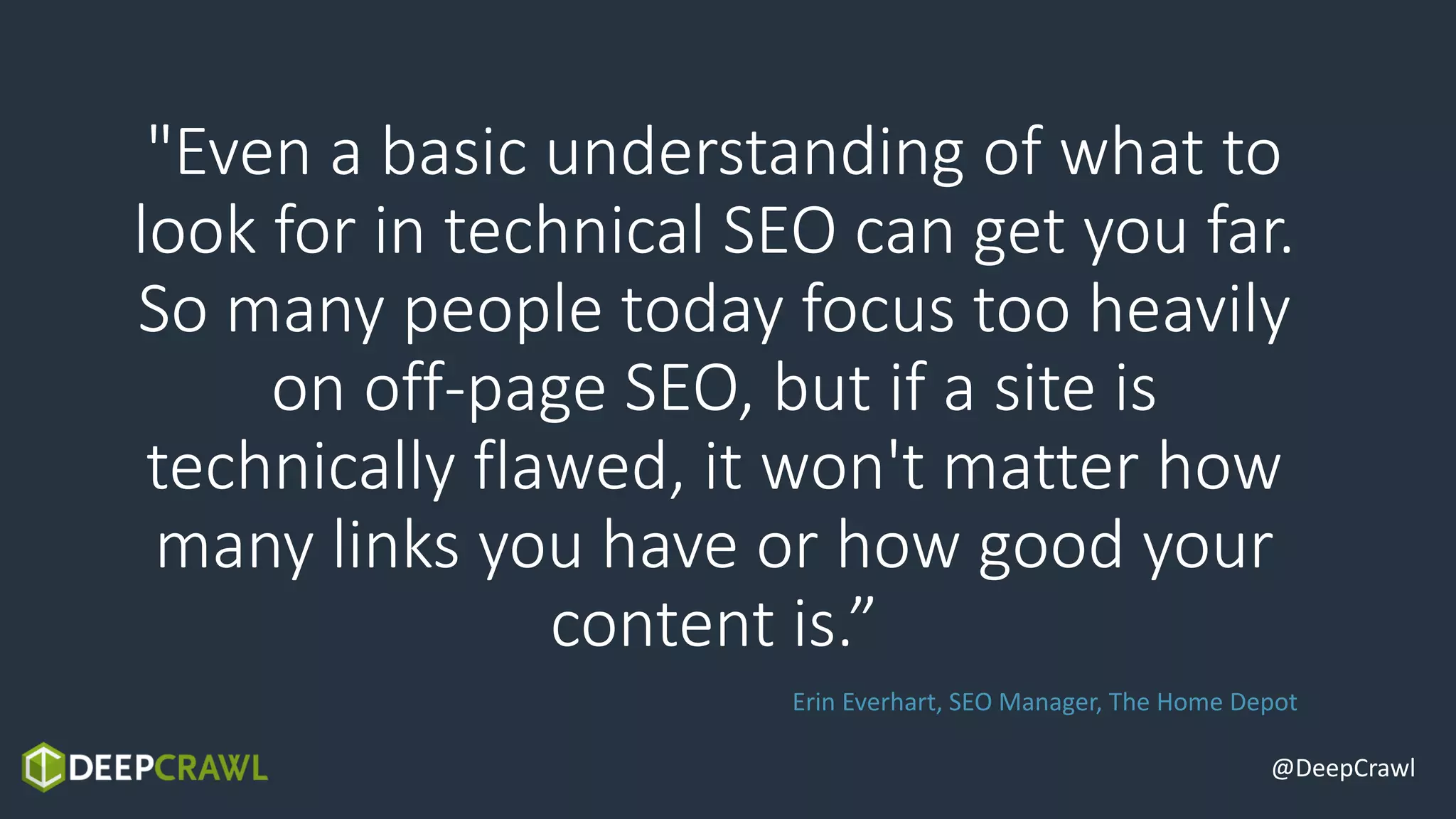

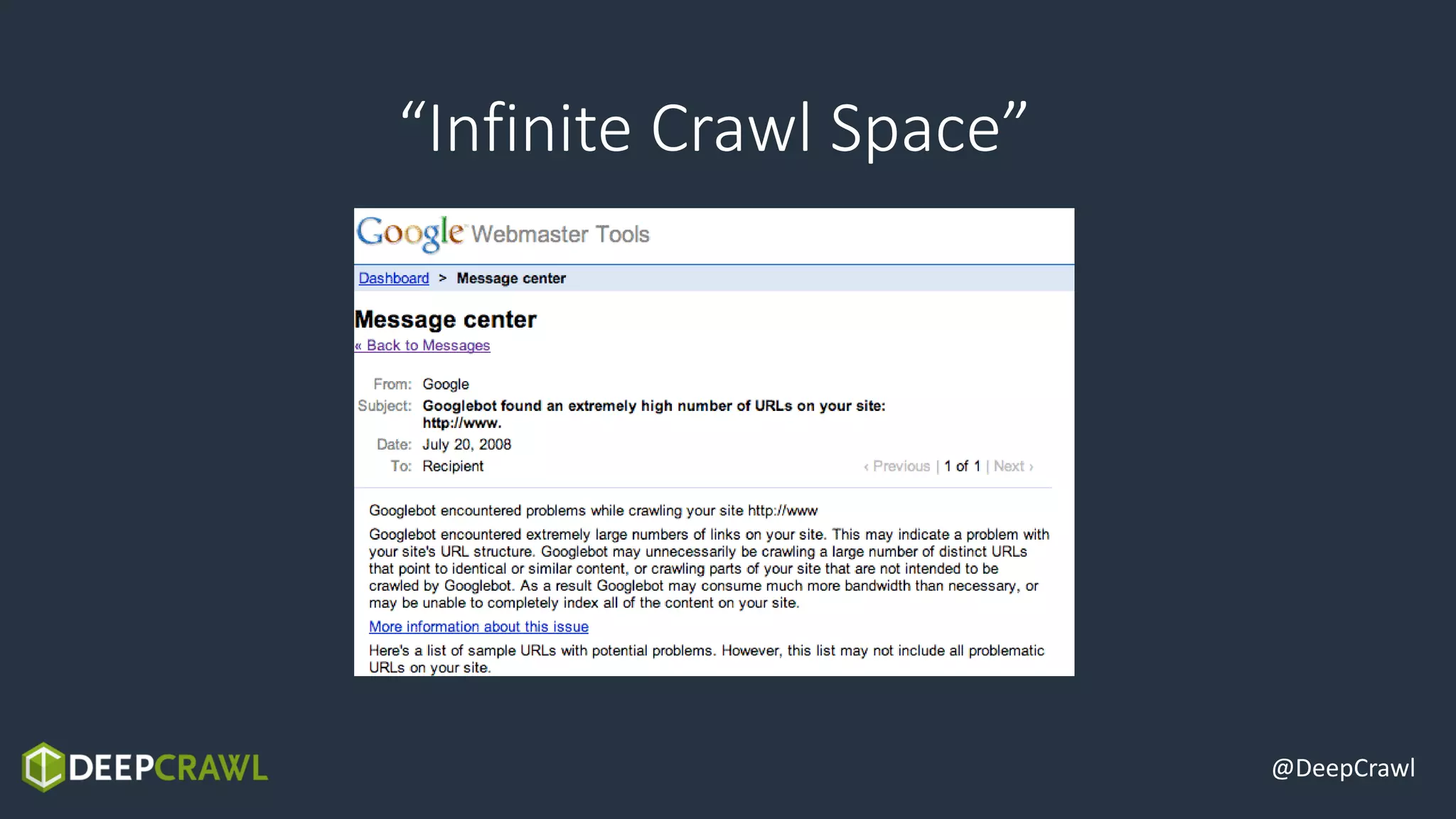

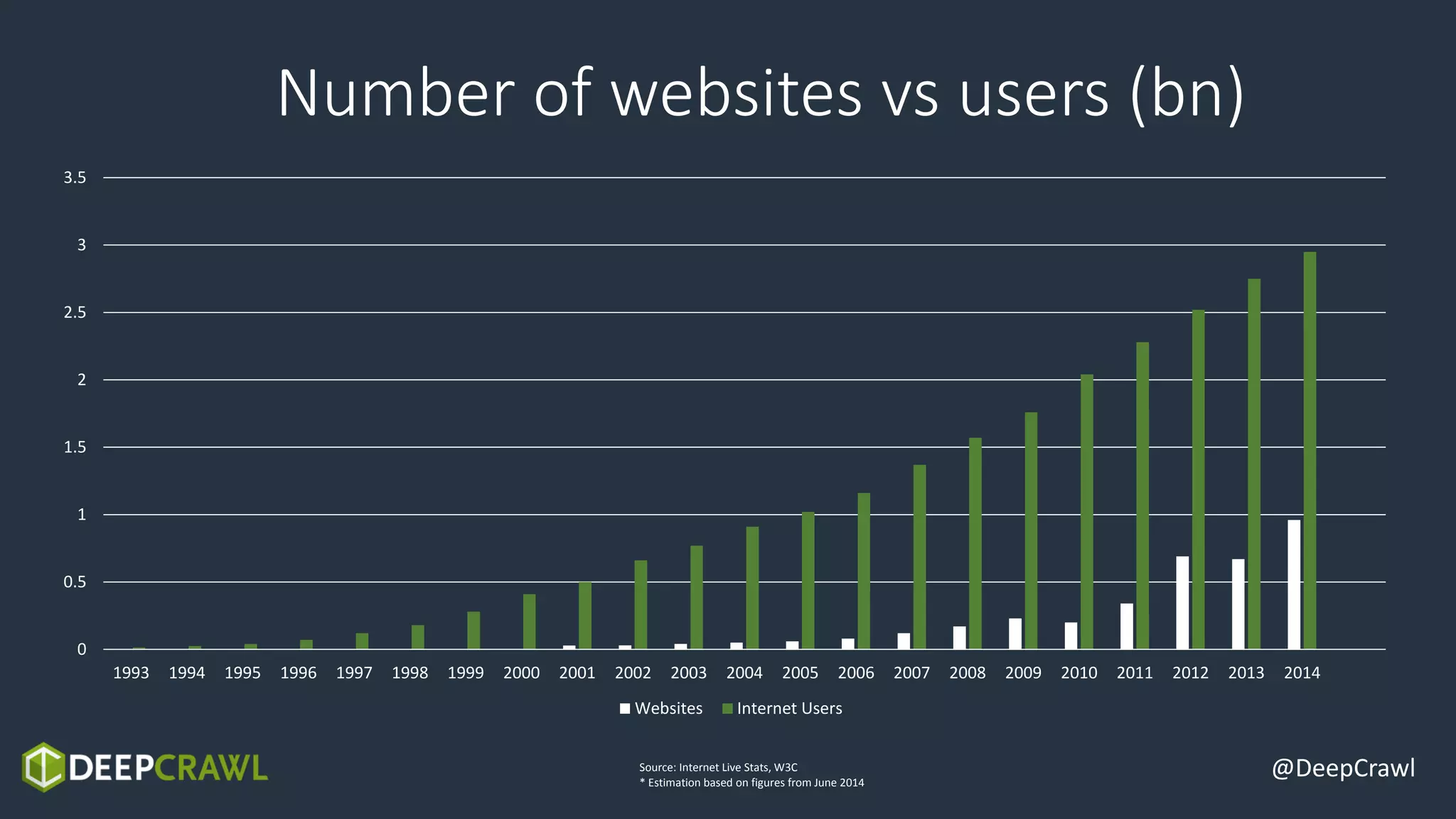

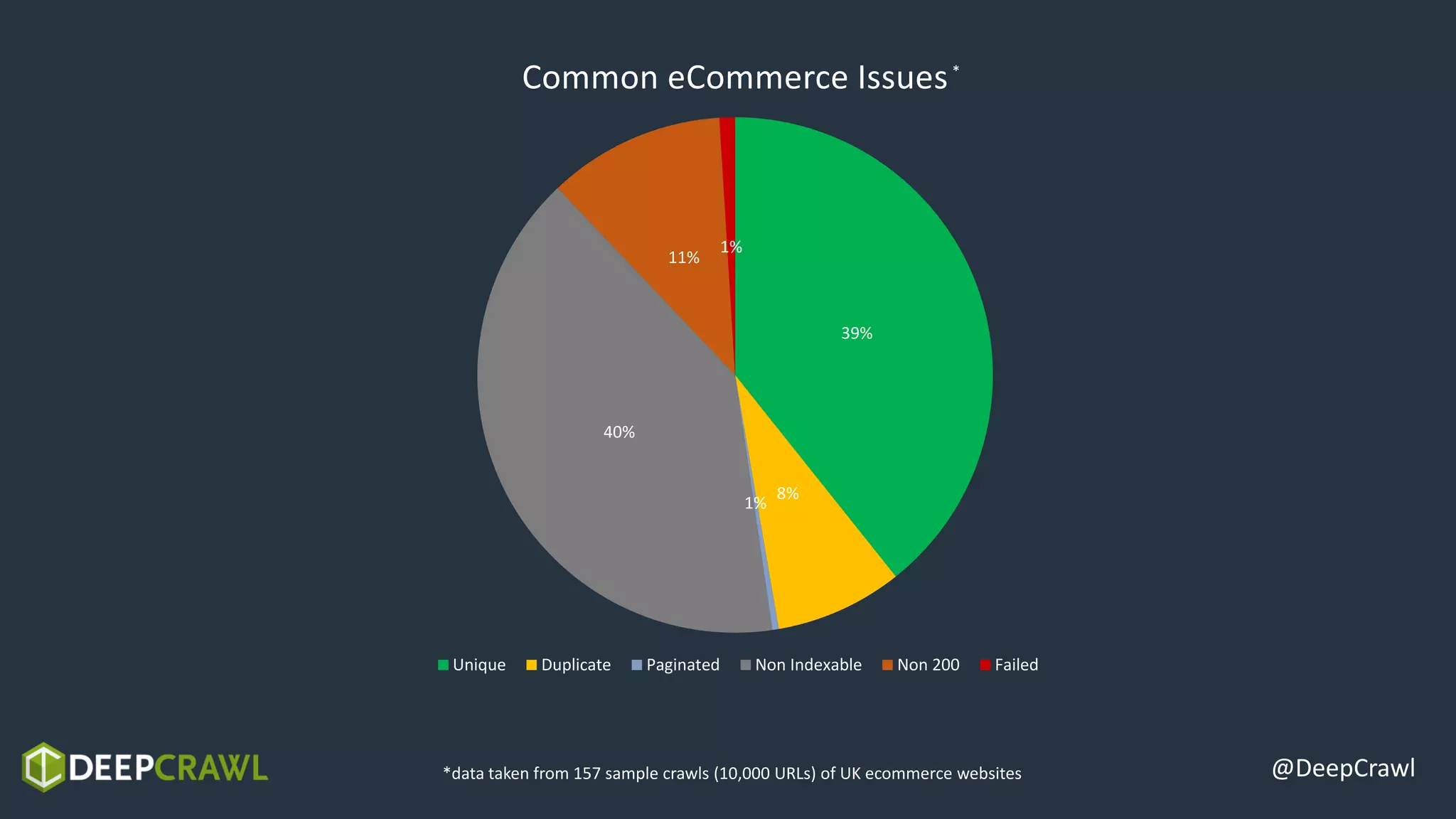

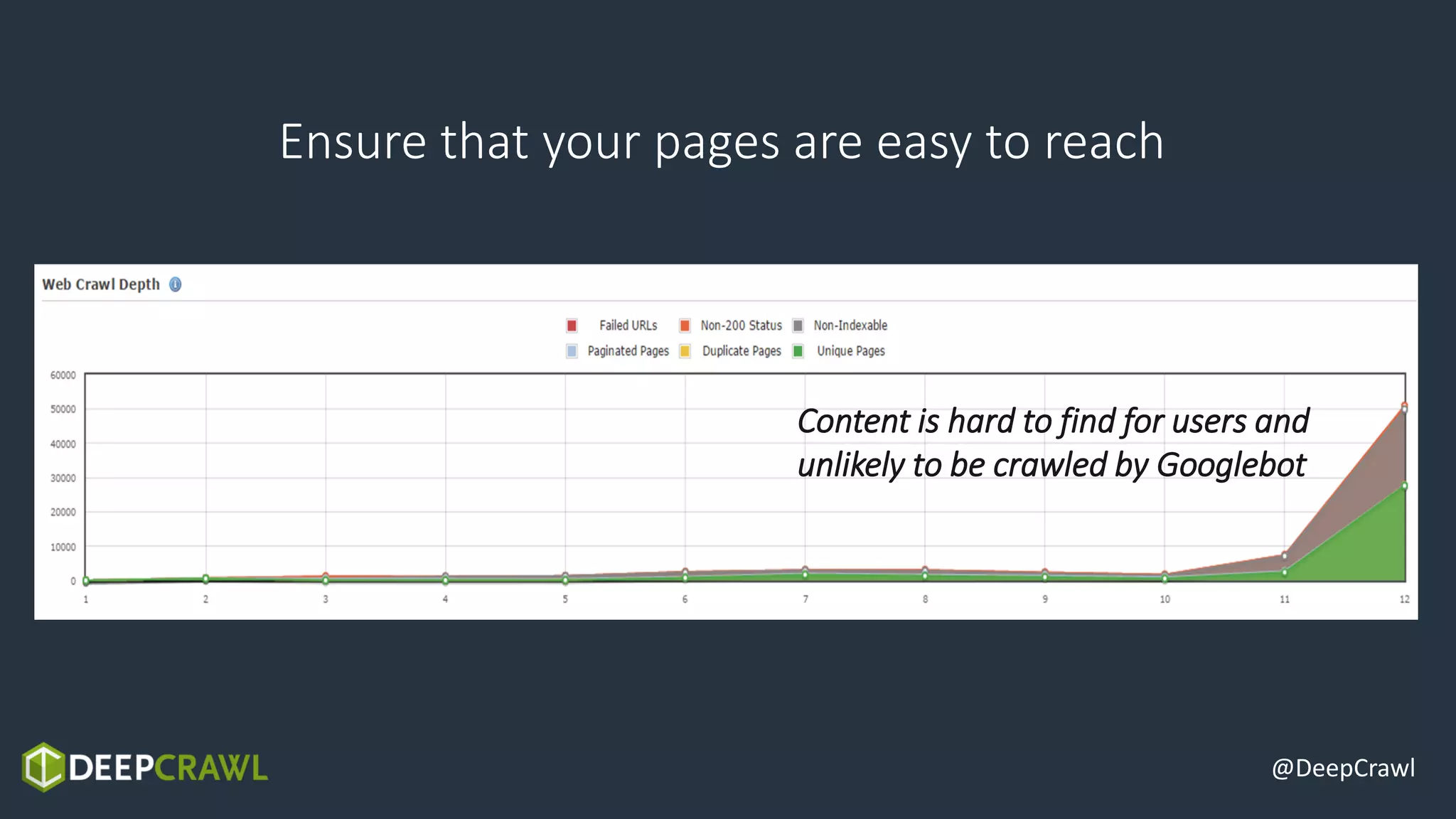

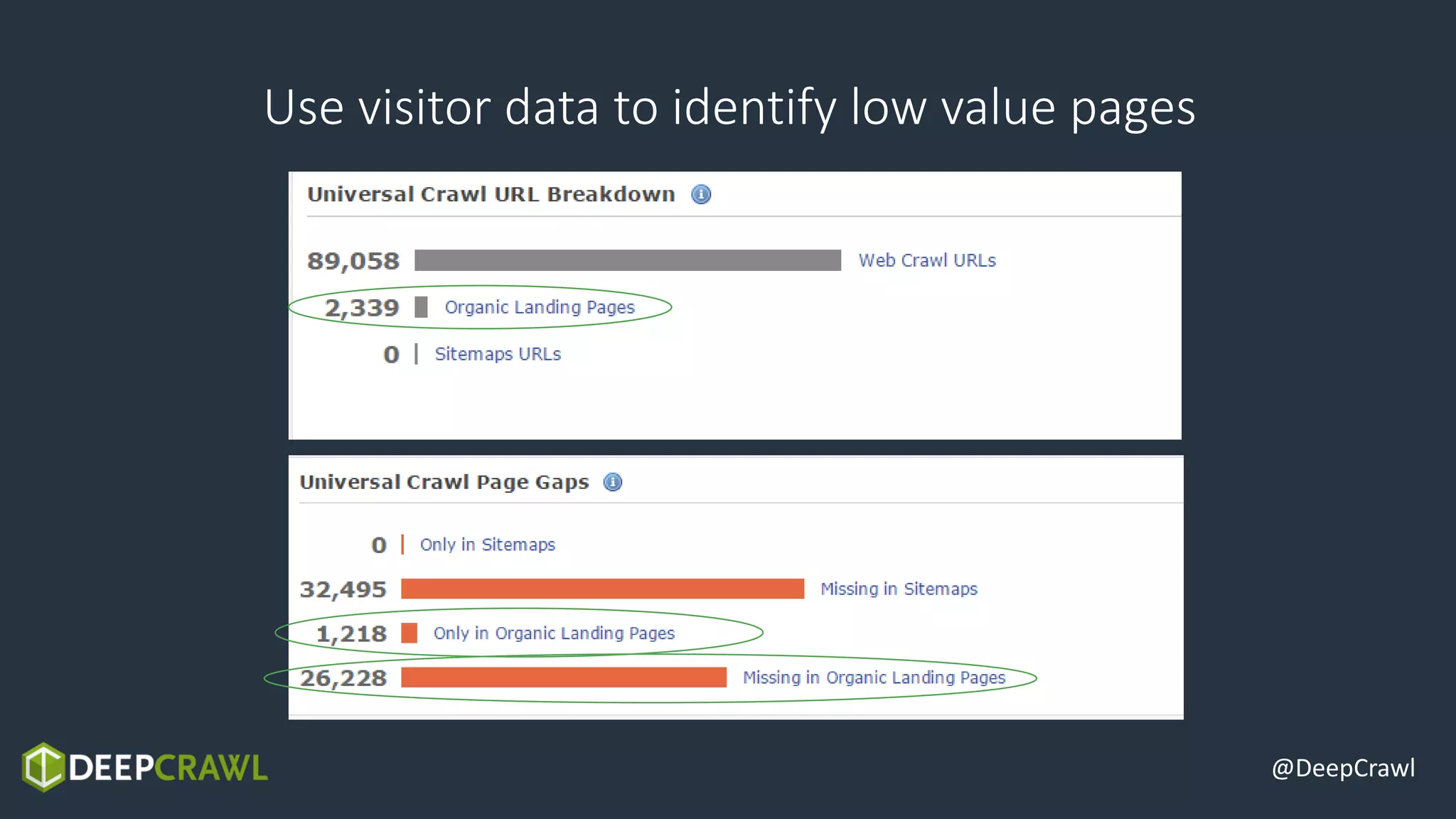

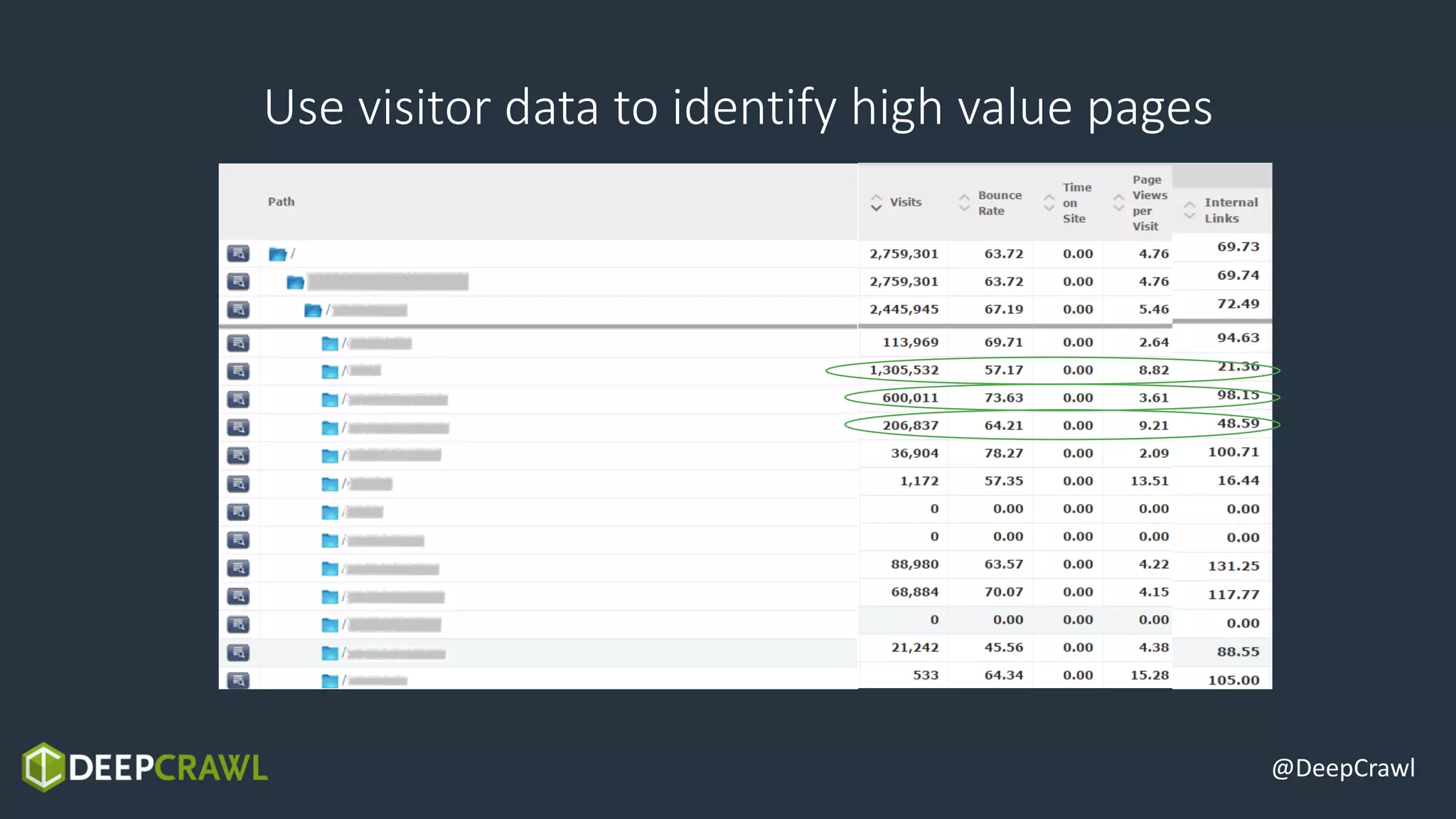

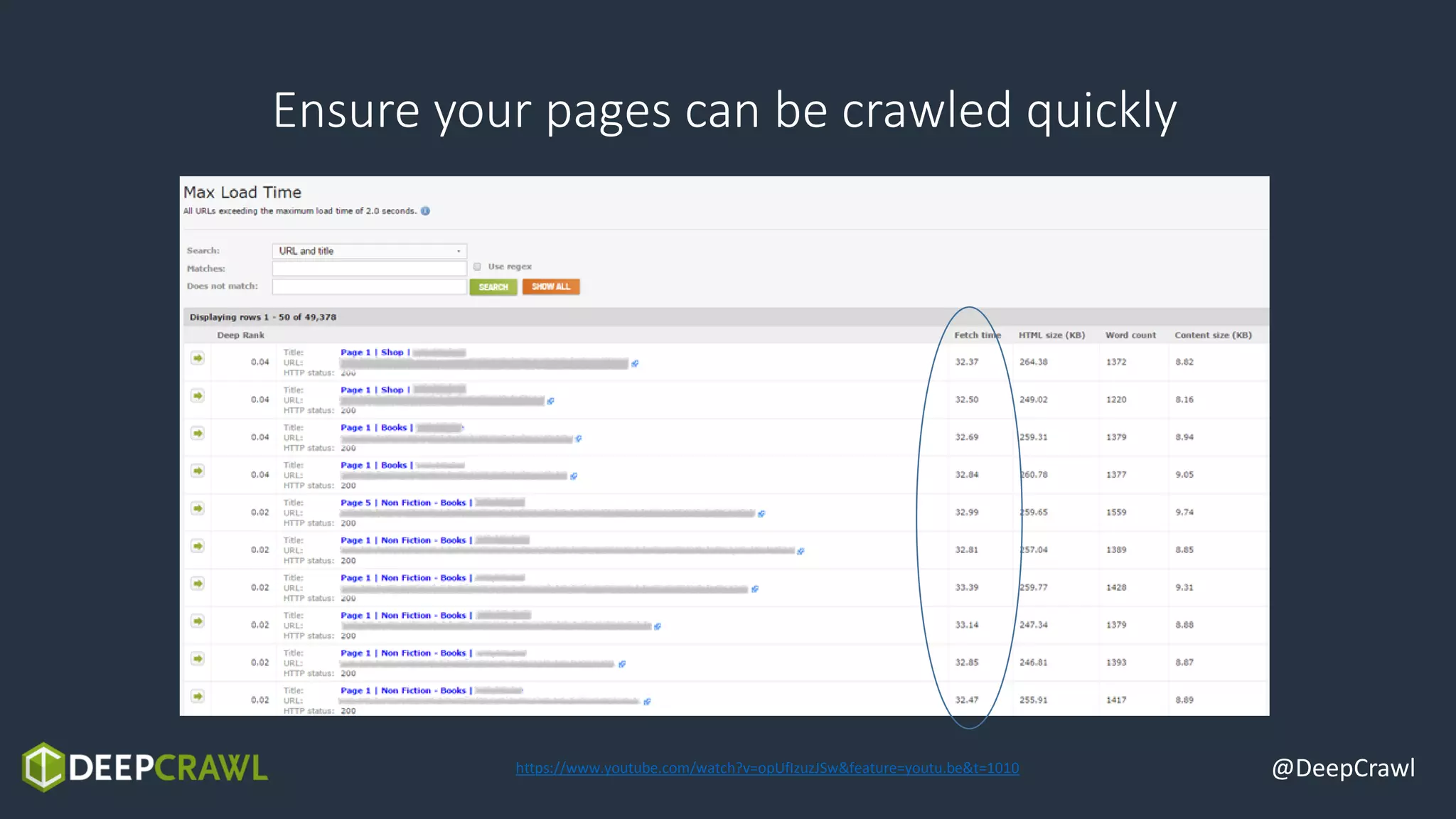

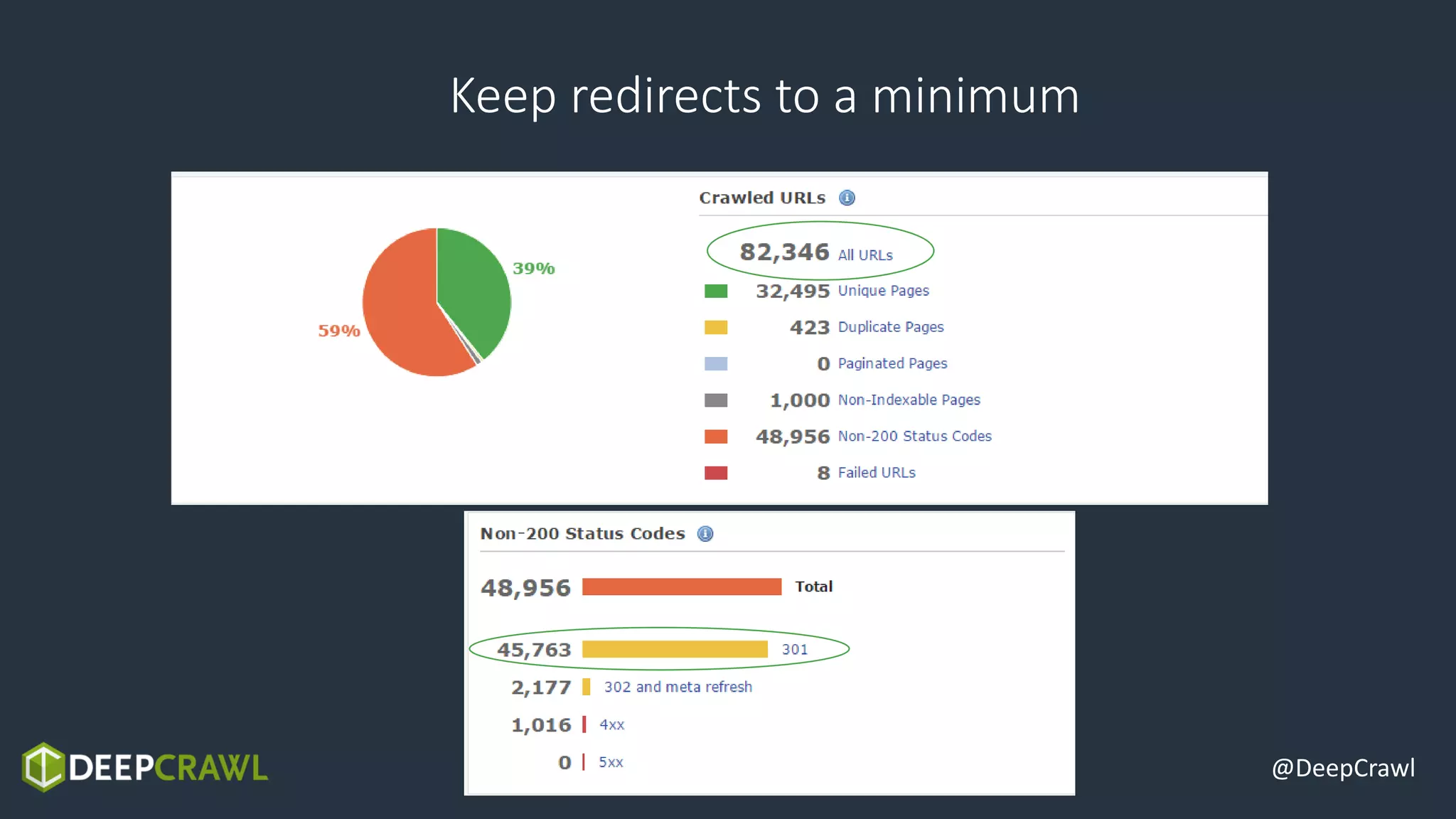

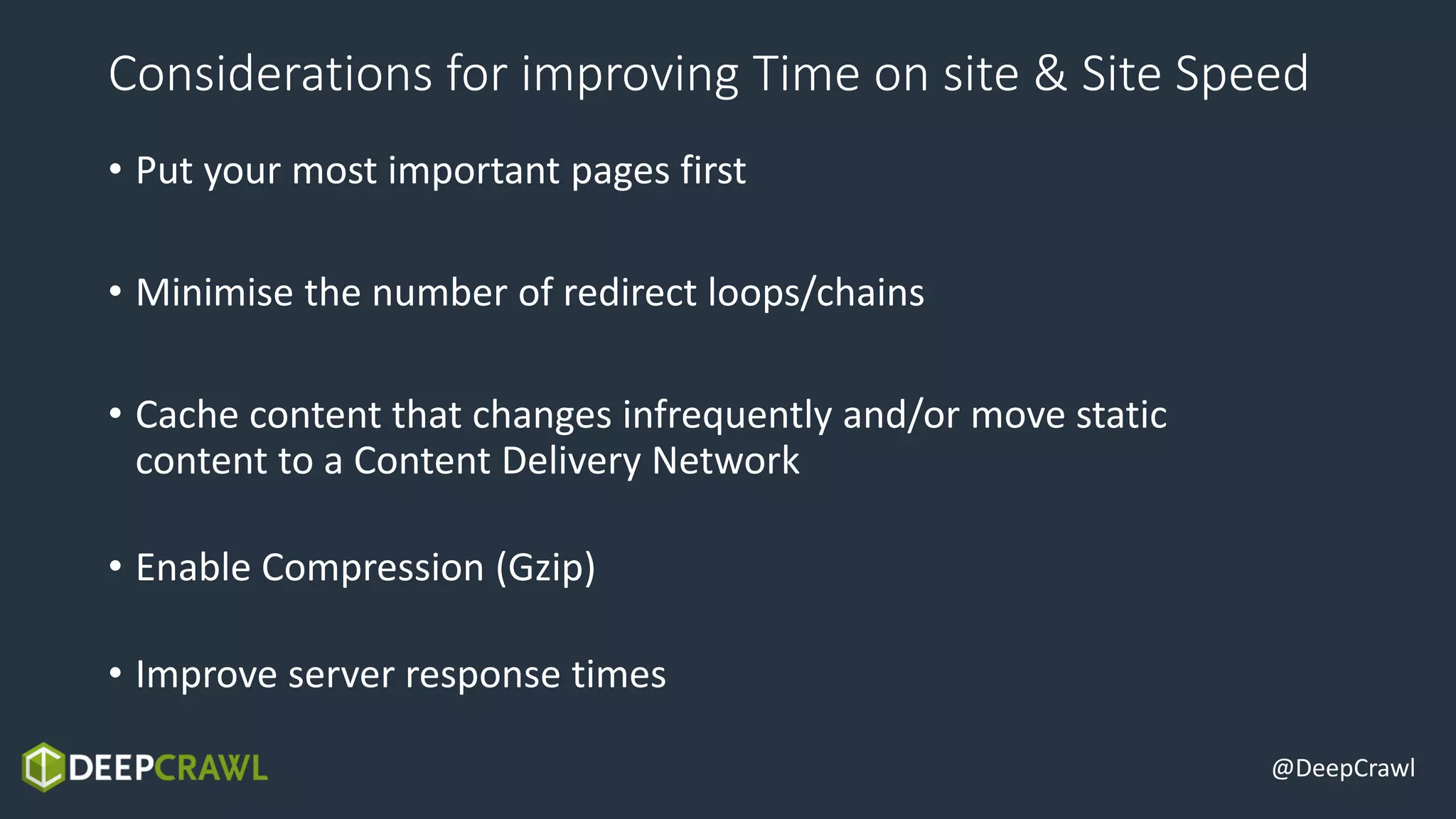

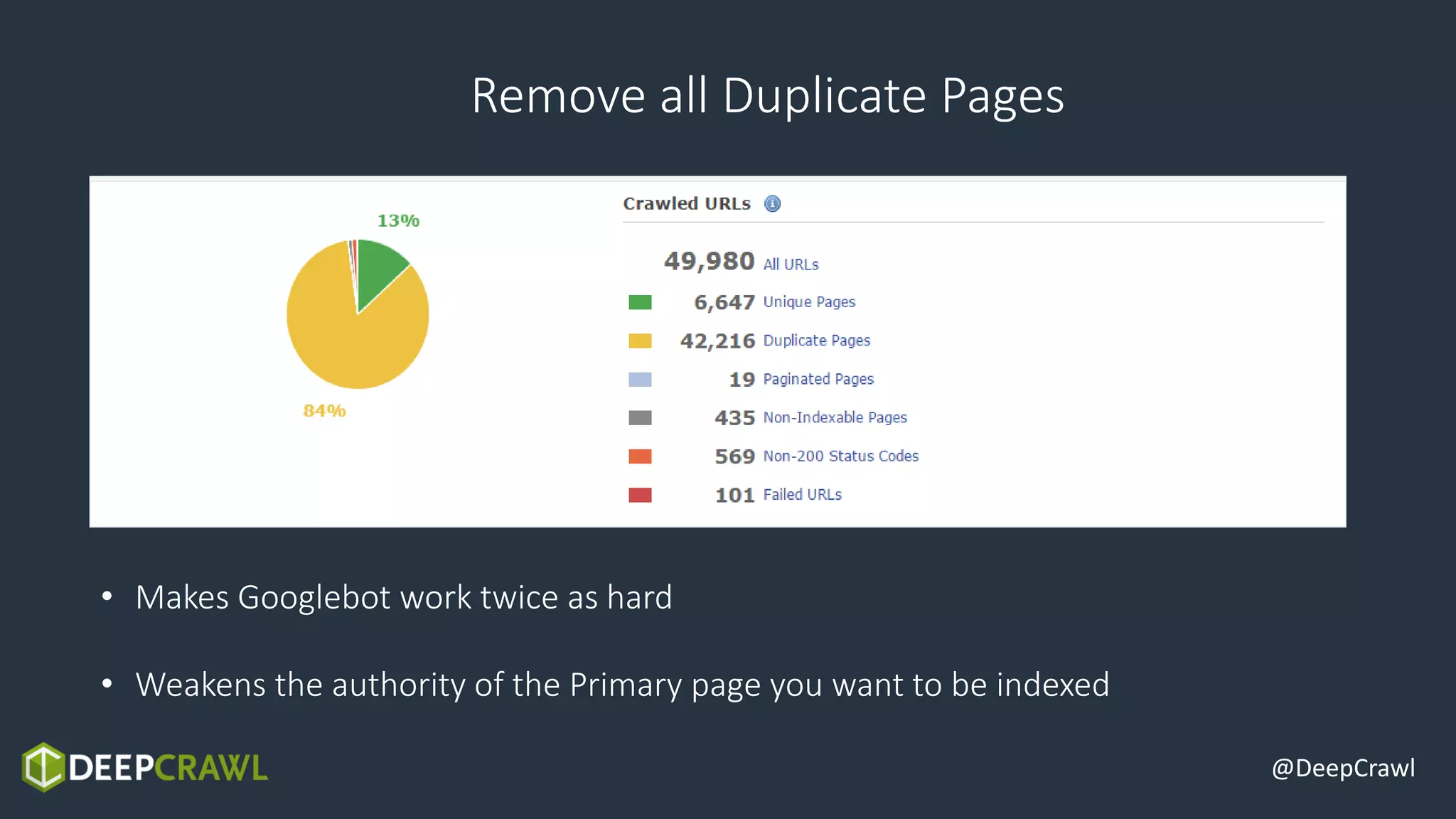

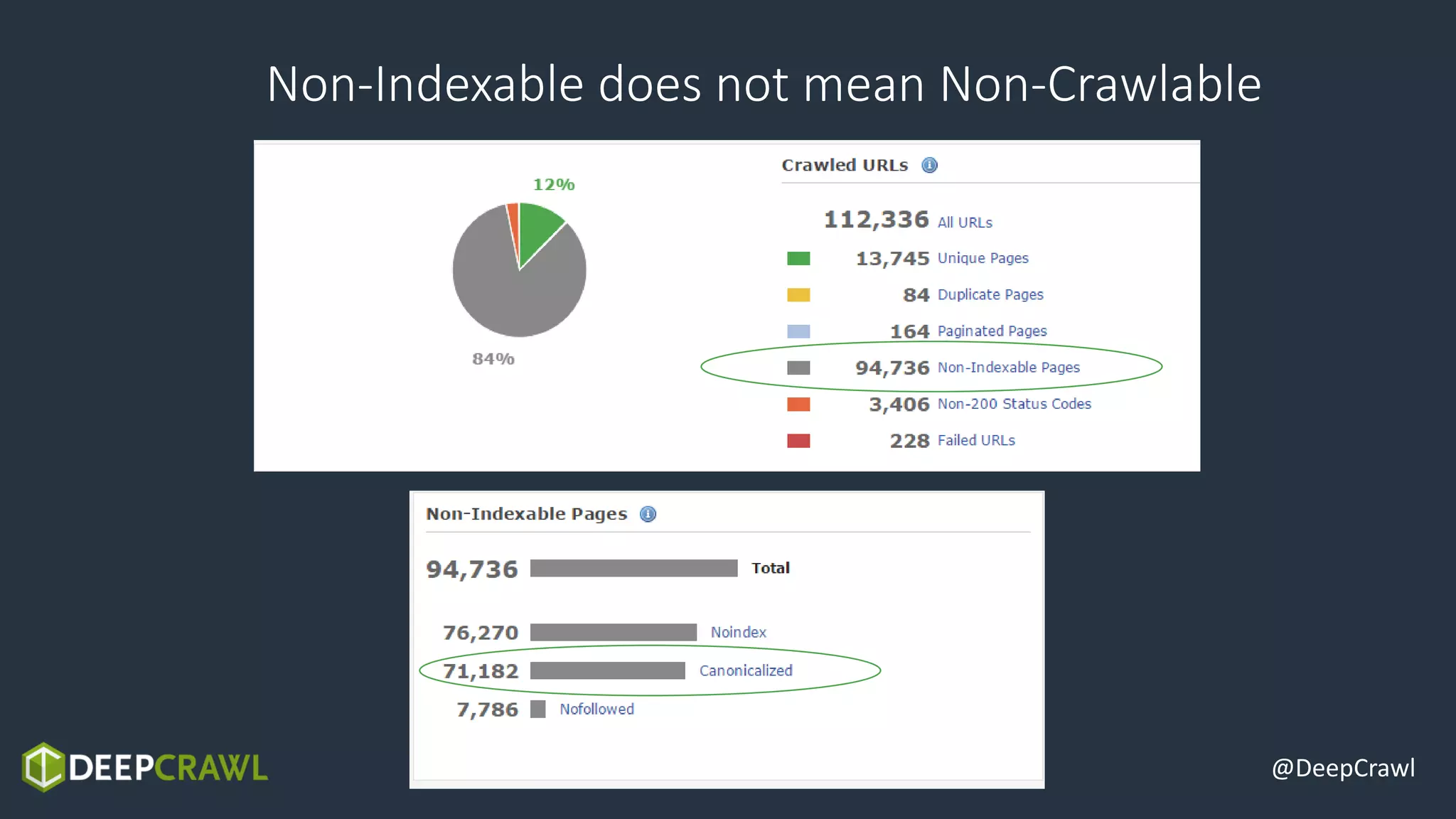

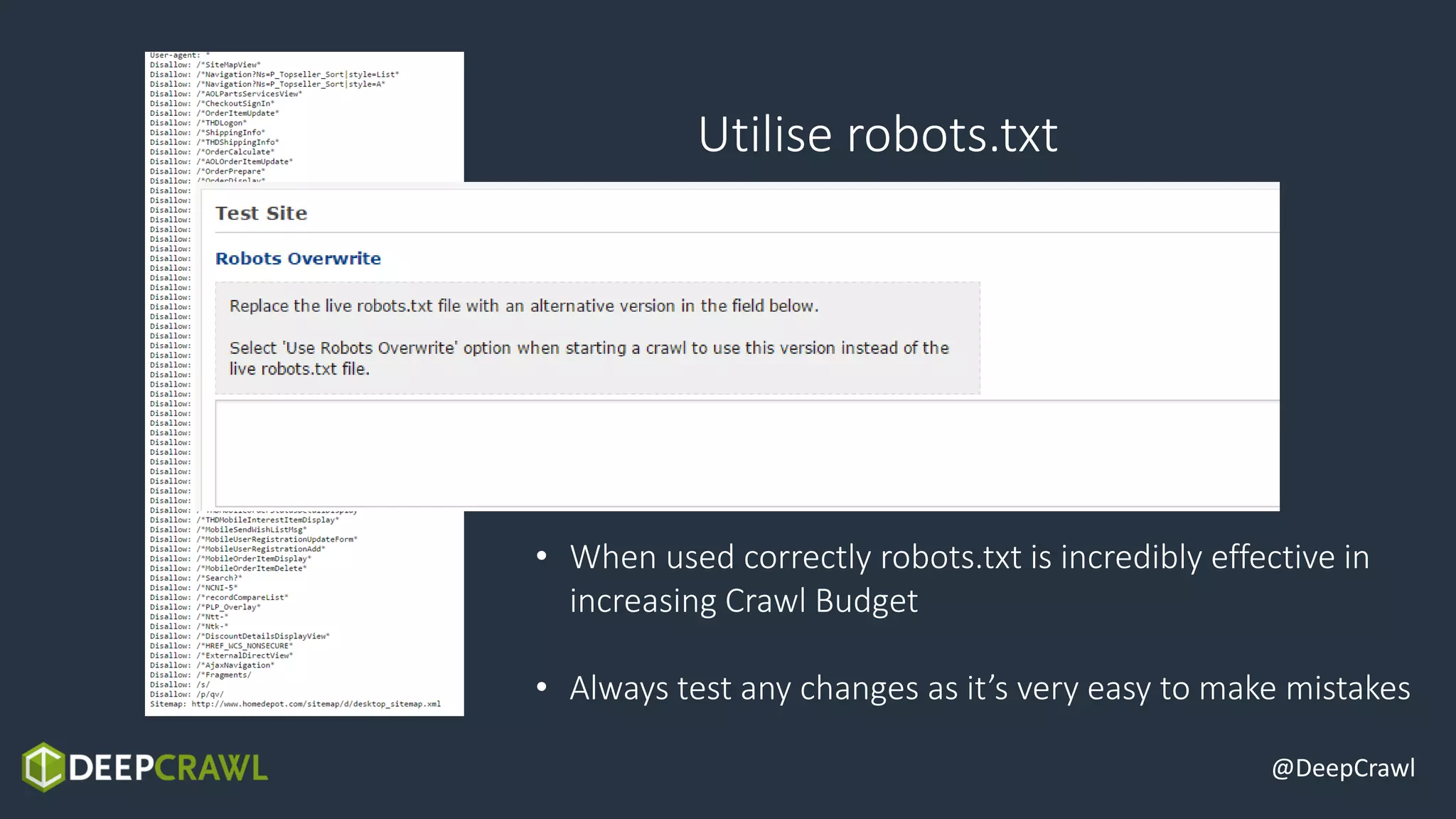

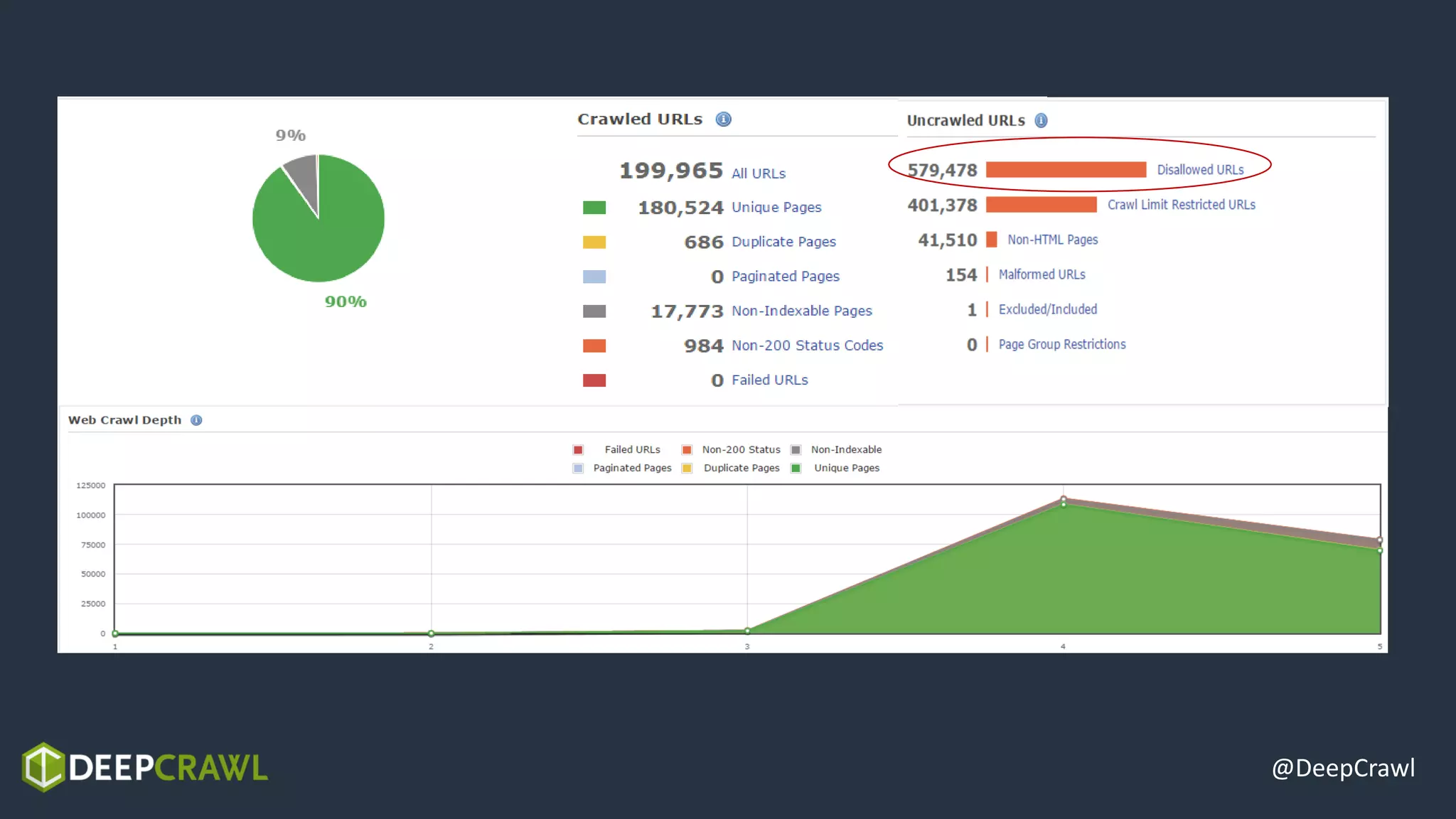

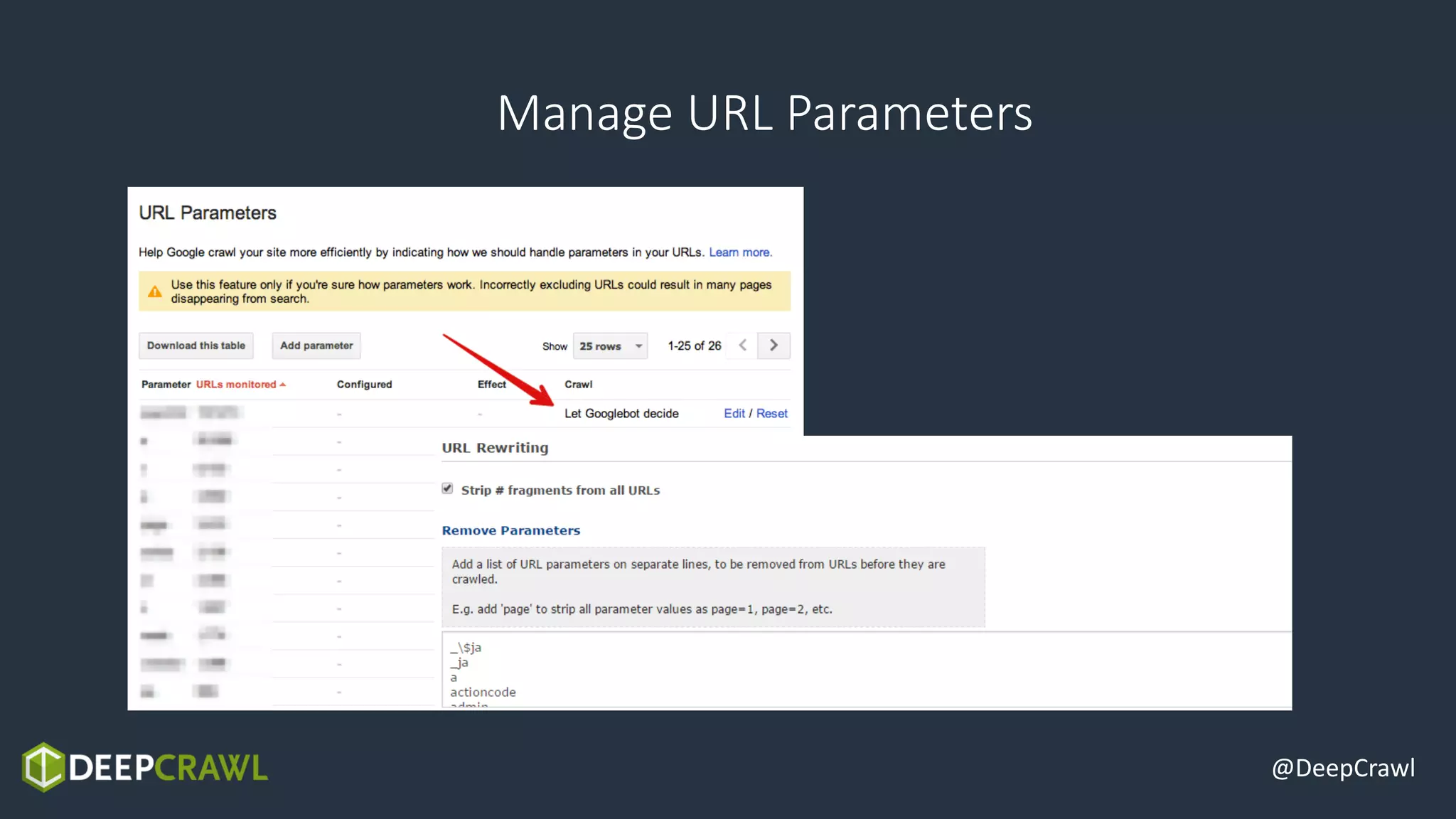

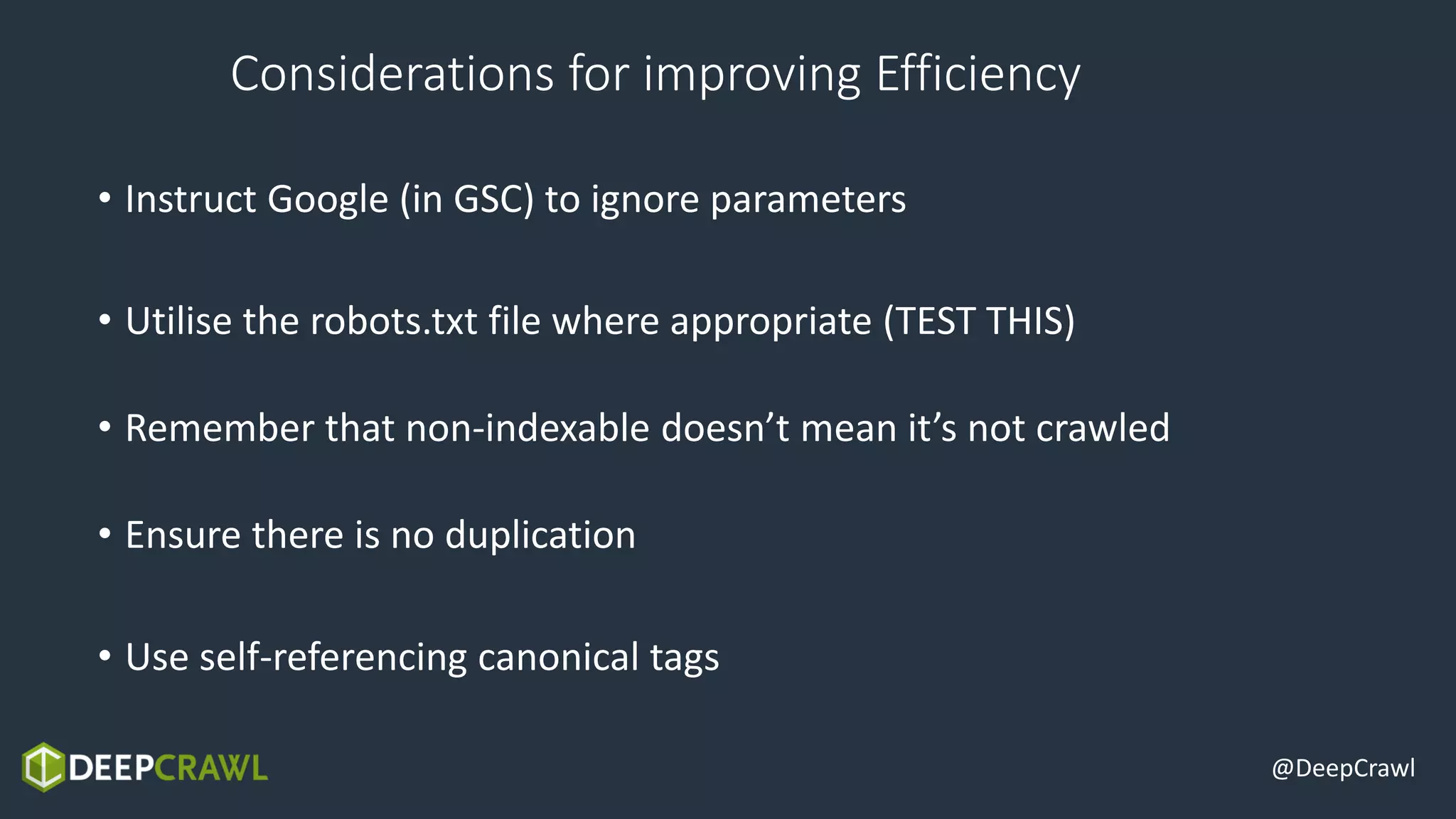

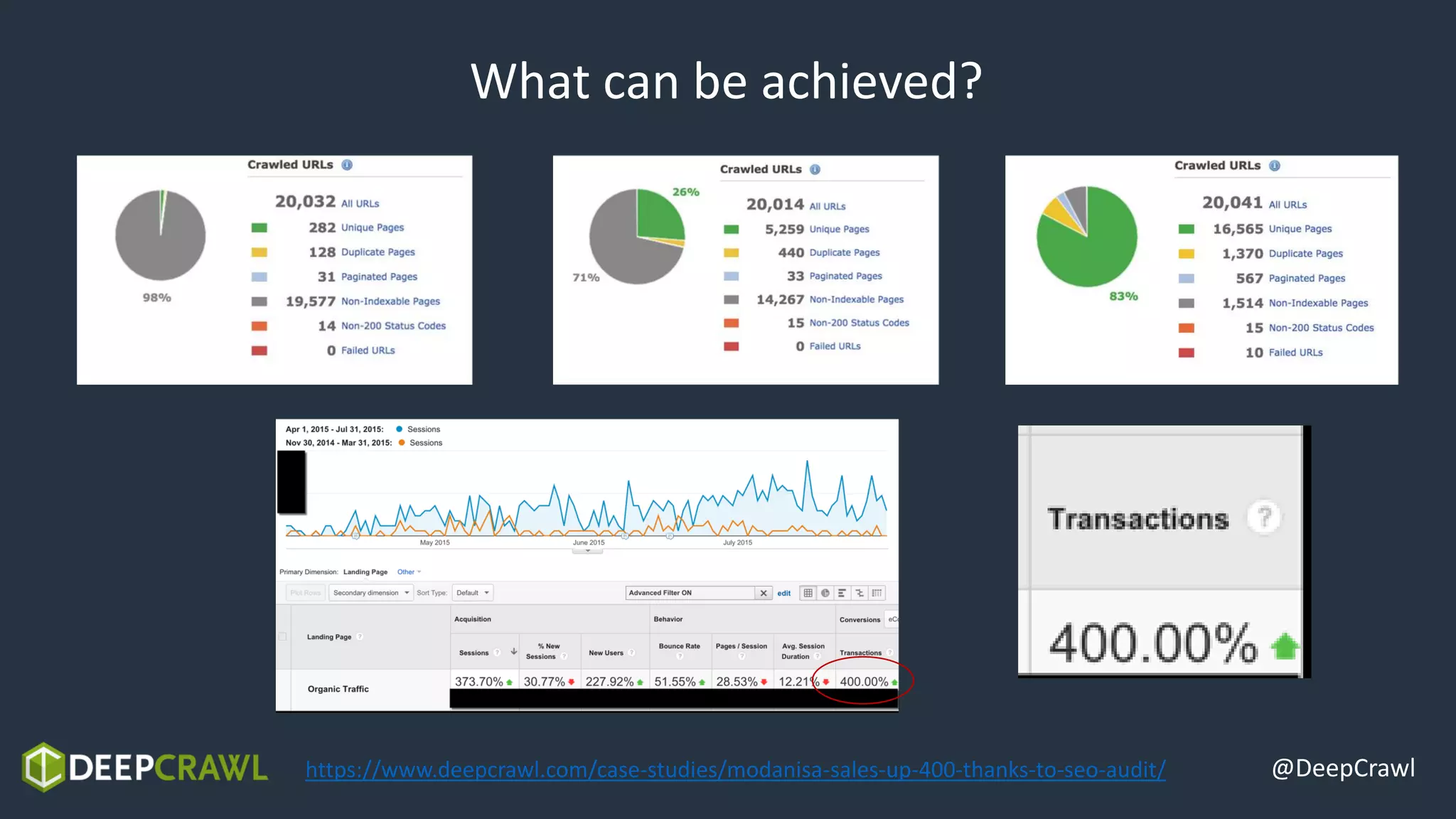

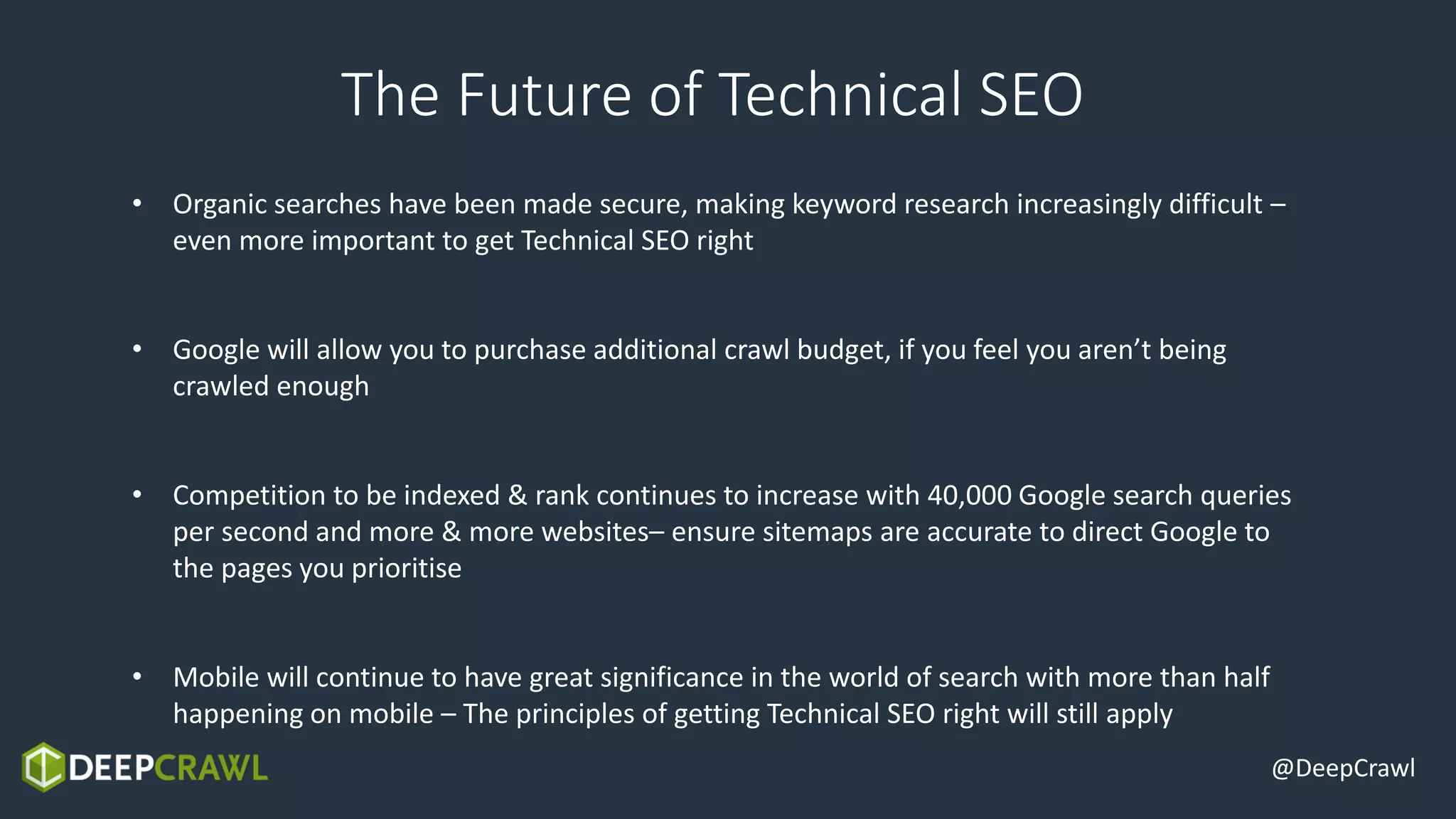

The document discusses why technical SEO matters and how it affects crawl budget, site speed, and site authority. It emphasizes making the site easy for crawlers to access, reducing duplicate content, and managing URL parameters so that crawlers spend their limited budget efficiently. It argues that the future of technical SEO depends on adapting to secure organic search and to mobile optimization as competition for indexing grows.
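To illustrate why unmanaged URL parameters waste crawl budget, here is a minimal sketch, assuming a site where tracking and session parameters do not change page content. It collapses parameter variants of the same page into one canonical URL, so each group represents one page a crawler would otherwise fetch several times. The parameter names (`sessionid`, `ref`, the `utm_` prefix) and the helpers `canonicalize` and `dedupe` are hypothetical choices for this example, not rules taken from the slides.

```python
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical: parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {"sessionid", "ref"}
IGNORED_PREFIXES = ("utm_",)


def canonicalize(url: str) -> str:
    """Collapse parameter variants of a URL into one canonical form."""
    parts = urlsplit(url)
    # Keep only parameters assumed to affect what the page shows.
    kept = [
        (k, v)
        for k, v in parse_qsl(parts.query, keep_blank_values=True)
        if k.lower() not in IGNORED_PARAMS
        and not k.lower().startswith(IGNORED_PREFIXES)
    ]
    # Sort so that ?a=1&b=2 and ?b=2&a=1 map to the same URL.
    kept.sort()
    return urlunsplit((
        parts.scheme.lower(),
        parts.netloc.lower(),  # host names are case-insensitive
        parts.path or "/",
        urlencode(kept),
        "",  # drop the fragment; browsers never send it to the server
    ))


def dedupe(urls):
    """Group raw URLs by canonical form; each key is one distinct page."""
    groups = {}
    for u in urls:
        groups.setdefault(canonicalize(u), []).append(u)
    return groups


if __name__ == "__main__":
    crawled = [
        "https://Example.com/shoes?color=red&size=9",
        "https://example.com/shoes?size=9&color=red&utm_source=mail",
        "https://example.com/shoes?color=red&size=9&sessionid=abc#reviews",
    ]
    for canonical, variants in dedupe(crawled).items():
        print(canonical, "<-", len(variants), "variants")
```

Here three crawled URLs reduce to the single page `https://example.com/shoes?color=red&size=9`. Which parameters are safe to ignore is site-specific; on a real site the chosen canonical form would typically be signalled to search engines (for example with `rel="canonical"`) rather than only computed offline.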