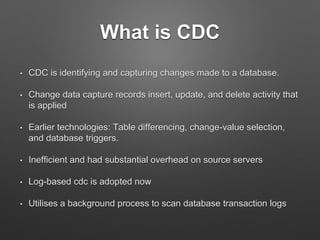

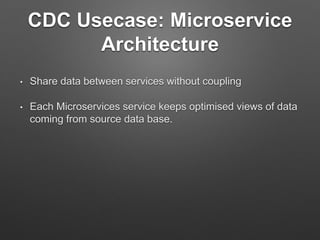

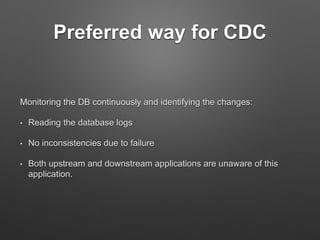

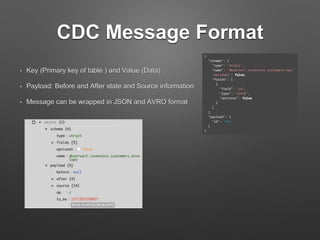

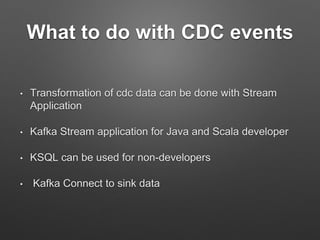

This document gives an overview of data stream processing with Apache Kafka and change data capture (CDC). It defines CDC as identifying and capturing the changes made to a database, and notes that CDC is useful for data replication, microservice architectures, and caching. The document discusses how Kafka and Kafka Connect can capture change data from database logs and publish it as a stream of events. It also introduces Debezium, an open-source CDC platform with connectors for databases such as MySQL, PostgreSQL, and MongoDB. Finally, it mentions a live demo that captures change events from a MySQL database using Debezium and views them in a Kafka topic.
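
To make the caching use case concrete, here is a minimal Python sketch of how a consumer might apply change events to an in-memory cache. It assumes events shaped like Debezium's change event envelope, with `op`, `before`, and `after` fields. The `customers`-style rows, the `id` key, and the `apply_change_event` helper are hypothetical and not taken from the original talk, and the events are hard-coded strings rather than being read from a real Kafka topic.

```python
import json


def apply_change_event(cache, raw_event, key_field="id"):
    """Apply one Debezium-style change event (a JSON string) to a dict cache.

    Hypothetical helper for illustration; `key_field` is an assumed primary key.
    """
    event = json.loads(raw_event)
    # Depending on converter settings, the event may be wrapped in a
    # {"schema": ..., "payload": ...} envelope or delivered as the bare payload.
    payload = event.get("payload", event)
    op = payload["op"]

    if op in ("c", "r", "u"):
        # create, snapshot read, or update: "after" holds the new row state
        row = payload["after"]
        cache[row[key_field]] = row
    elif op == "d":
        # delete: "after" is null, so the key comes from "before"
        row = payload["before"]
        cache.pop(row[key_field], None)
    else:
        raise ValueError(f"unsupported operation type: {op!r}")
    return cache


# Hand-written sample events standing in for messages from a Kafka topic.
sample_events = [
    json.dumps({"payload": {"op": "c", "before": None,
                            "after": {"id": 1, "name": "Alice", "email": "alice@old.example"}}}),
    json.dumps({"payload": {"op": "c", "before": None,
                            "after": {"id": 2, "name": "Bob", "email": "bob@example.com"}}}),
    json.dumps({"payload": {"op": "u",
                            "before": {"id": 1, "name": "Alice", "email": "alice@old.example"},
                            "after": {"id": 1, "name": "Alice", "email": "alice@new.example"}}}),
    json.dumps({"payload": {"op": "d",
                            "before": {"id": 2, "name": "Bob", "email": "bob@example.com"},
                            "after": None}}),
]

cache = {}
for raw in sample_events:
    apply_change_event(cache, raw)

print(cache)
```

In a real pipeline the loop would read from the topic that the connector writes to, and the cache would typically live in an external store. The sketch only shows why an ordered stream of change events is enough to keep a derived copy in sync with the source table.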