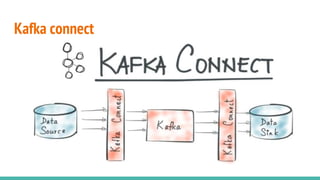

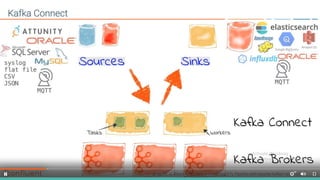

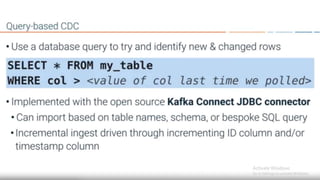

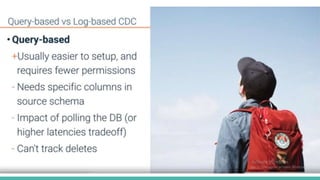

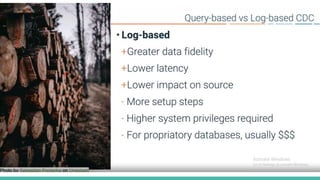

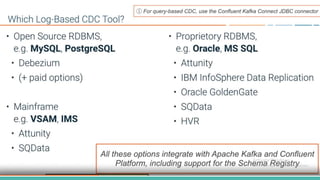

Kafka Connect is a scalable and resilient framework for integrating Kafka with other systems. There are two main options for integrating a database with Kafka: the JDBC connector for Kafka Connect, or a log-based Change Data Capture (CDC) tool, many of which also integrate with Kafka Connect. The JDBC connector streams data between Kafka and any relational database management system (RDBMS) that provides a JDBC driver. Log-based CDC tools instead read the database's transaction log to capture every change made to the database, and that stream of changes can then be written to Kafka.
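To make the JDBC option more concrete, here is a minimal sketch of the JSON body you might send to Kafka Connect's REST API (`POST /connectors`) to register a JDBC source connector. The option names follow the Confluent JDBC source connector documentation but may vary between connector versions. The helper `build_jdbc_source_request`, the connector name, the database URL, the table, and the topic prefix are all hypothetical placeholders, not values from this article.

```python
import json


# Hypothetical helper: builds the request body for Kafka Connect's
# REST endpoint POST /connectors, registering a JDBC source connector.
def build_jdbc_source_request(name: str, jdbc_url: str, table: str,
                              id_column: str, topic_prefix: str) -> dict:
    return {
        "name": name,
        "config": {
            # Connector class of the Confluent JDBC source connector
            "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
            "tasks.max": "1",
            "connection.url": jdbc_url,
            # Only this table is polled (option name may differ by version)
            "table.whitelist": table,
            # "incrementing" mode queries for rows whose id column is larger
            # than the largest value seen so far, i.e. it picks up new rows
            "mode": "incrementing",
            "incrementing.column.name": id_column,
            # Rows are written to the topic named topic_prefix + table
            "topic.prefix": topic_prefix,
            # How often (in ms) the connector polls the table
            "poll.interval.ms": "5000",
        },
    }


if __name__ == "__main__":
    # Placeholder values for illustration only
    body = build_jdbc_source_request(
        name="orders-jdbc-source",
        jdbc_url="jdbc:postgresql://localhost:5432/shop",
        table="orders",
        id_column="id",
        topic_prefix="pg-",
    )
    print(json.dumps(body, indent=2))
```

The printed body could then be sent to a running Connect worker, for example at `http://localhost:8083/connectors` (8083 is the default REST port). Because this connector works by querying the table, `incrementing` mode only detects newly inserted rows, not updates or deletes of existing rows. A log-based CDC tool reading the transaction log does not have that limitation.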