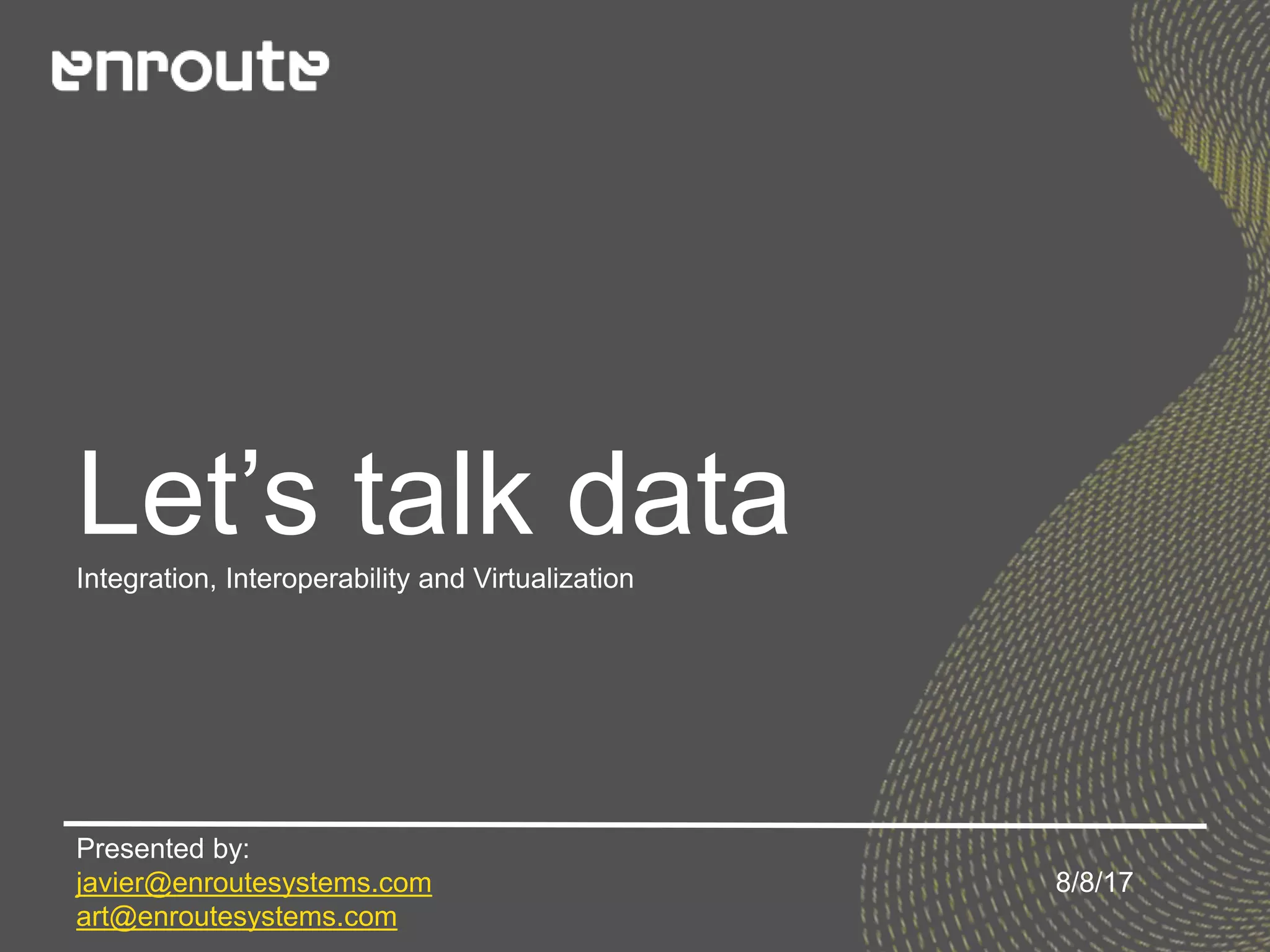

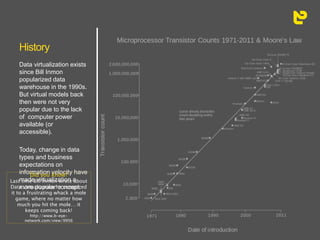

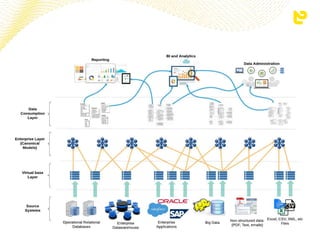

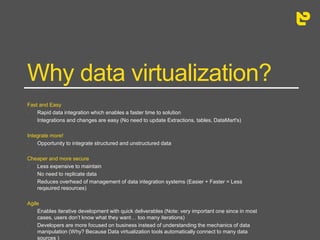

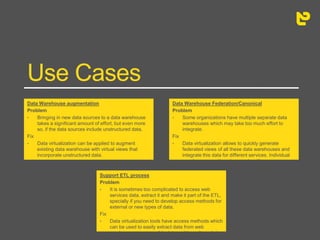

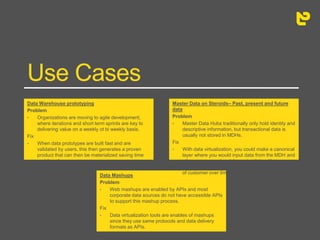

The document discusses data integration and interoperability (DII) and its importance for service-oriented architecture (SOA) and microservices, as well as for consolidating structured and unstructured data. It introduces data virtualization as a modern way to streamline data access and integration, contrasts it with traditional extract, transform, load (ETL) processes, and presents several use cases that illustrate its benefits. It emphasizes that data virtualization enhances data warehouses rather than replacing them, allowing quicker, more flexible data management.