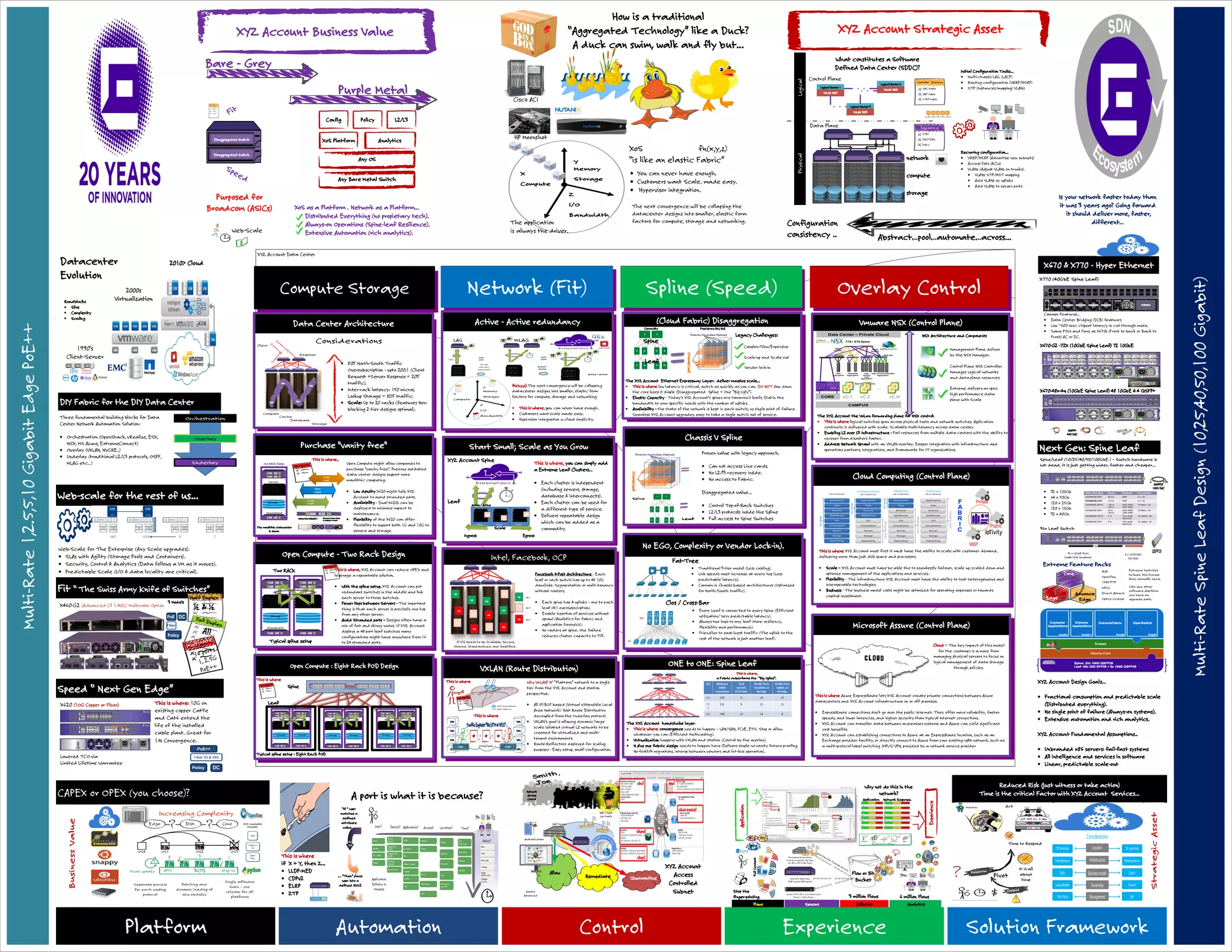

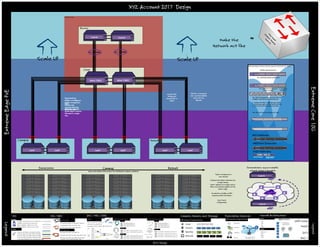

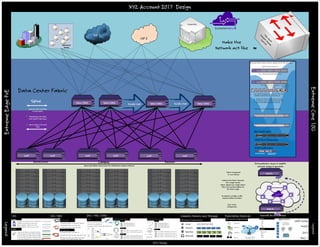

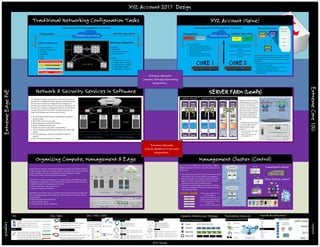

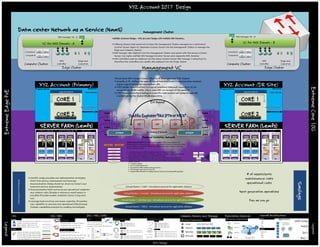

The document discusses a multi-rate spine-leaf network design for data centers, emphasizing flexibility, scalability, and automation. It highlights the importance of low latency, a resilient architecture, and the convergence of LAN and SAN technologies, while addressing legacy challenges such as vendor lock-in. It also details the use of VXLAN for network virtualization and dynamic provisioning in a multi-tenant environment, reflecting a modular approach to data center infrastructure.