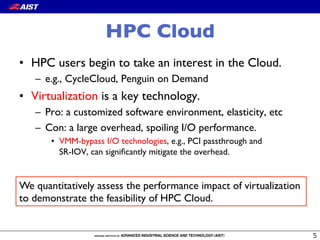

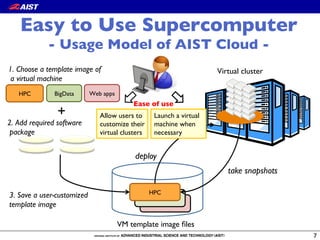

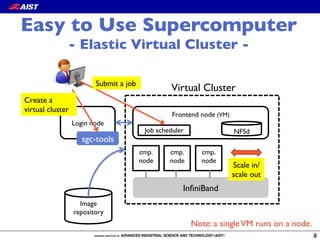

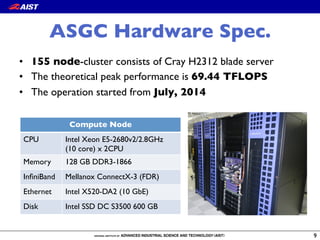

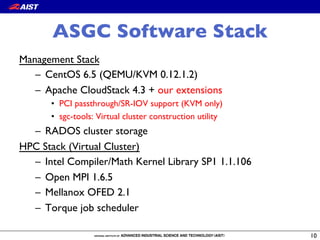

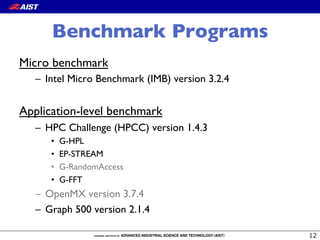

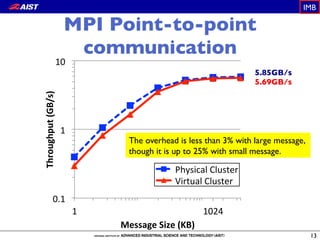

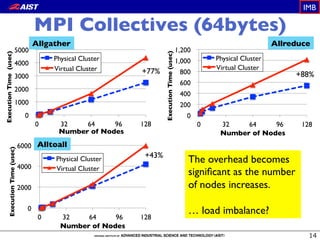

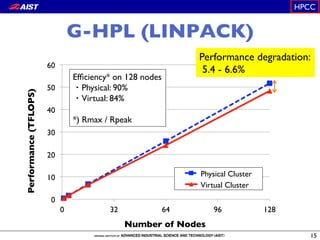

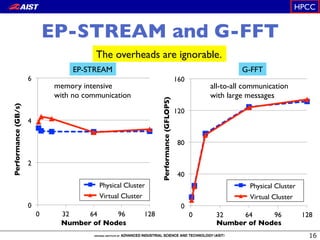

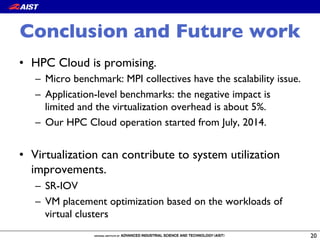

The document evaluates the performance impact of virtualization on high-performance computing (HPC) clouds. Experiments were conducted on the AIST Super Green Cloud, a 155-node HPC cluster. Benchmark results show that although PCI passthrough mitigates I/O overhead, virtualization still incurs performance penalties for MPI collectives as the node count increases. Application benchmarks show that the overhead is limited to around 5%. The study concludes that HPC clouds are promising because virtualization improves utilization, but that further optimization of virtual machine placement and passthrough technologies could reduce the overhead.
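The slides quote overhead as a percentage but do not spell out the formula. A common definition, assumed here, is the relative increase in execution time of a virtualized run ($T_{\text{VM}}$) over the same benchmark with the same process count on a bare metal machine ($T_{\text{BMM}}$):

$$
\text{overhead} = \frac{T_{\text{VM}} - T_{\text{BMM}}}{T_{\text{BMM}}} \times 100\%
$$

Under this definition, an overhead of 5% means a job that takes $T_{\text{BMM}}$ seconds on bare metal takes about $1.05\,T_{\text{BMM}}$ seconds inside a VM.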

![Motivating Observation

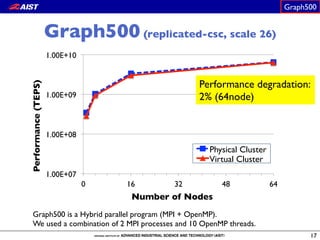

• Performance evaluation of HPC cloud

– (Para-)virtualized I/O incurs a large overhead.

– PCI passthrough significantly mitigate the overhead.

0

50

100

150

200

250

300

BT CG EP FT LU

Executiontime[seconds]

BMM (IB) BMM (10GbE)

KVM (IB) KVM (virtio)

The overhead of I/O virtualization on the NAS Parallel

Benchmarks 3.3.1 class C, 64 processes.

BMM: Bare Metal Machine

KVM (virtio)

VM1

10GbE NIC

VMM

Guest

driver

Physical

driver

Guest OS

KVM (IB)

VM1

IB QDR HCA

VMM

Physical

driver

Guest OS

Bypass

Improvement by !

PCI passthrough](https://image.slidesharecdn.com/cloudcom2014-141217222054-conversion-gate01/85/Exploring-the-Performance-Impact-of-Virtualization-on-an-HPC-Cloud-4-320.jpg)
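The chart compares the same five NPB kernels across bare-metal and KVM configurations. The sketch below shows one way to turn such measurements into per-benchmark overhead figures. It assumes the relative-overhead definition (VM time versus bare-metal time), and the nested-dictionary layout and function names are hypothetical, not taken from the study. The execution times must come from your own runs; none are reproduced here.

```python
from typing import Dict

# Hypothetical layout: times[config][benchmark] = execution time in seconds.
# Config and benchmark names mirror the figure labels (e.g. "KVM (IB)", "CG").
Times = Dict[str, Dict[str, float]]


def relative_overhead(t_virtual: float, t_baseline: float) -> float:
    """Return the slowdown of t_virtual over t_baseline as a percentage."""
    if t_baseline <= 0:
        raise ValueError("baseline time must be positive")
    return (t_virtual - t_baseline) / t_baseline * 100.0


def overhead_table(times: Times, baseline: str = "BMM (IB)") -> Dict[str, Dict[str, float]]:
    """Compute the per-benchmark overhead (%) of every non-baseline configuration."""
    base = times[baseline]
    table: Dict[str, Dict[str, float]] = {}
    for config, per_bench in times.items():
        if config == baseline:
            continue
        # Only compare benchmarks that were measured in both configurations.
        table[config] = {
            bench: relative_overhead(t, base[bench])
            for bench, t in per_bench.items()
            if bench in base
        }
    return table


def worst_case(table: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Return the largest per-benchmark overhead for each configuration."""
    return {config: max(vals.values()) for config, vals in table.items() if vals}
```

The baseline is a parameter because the choice matters. Comparing KVM (virtio) against BMM (10GbE) isolates the cost of paravirtualized I/O on the same network, whereas comparing against BMM (IB) also folds in the difference between 10GbE and InfiniBand.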