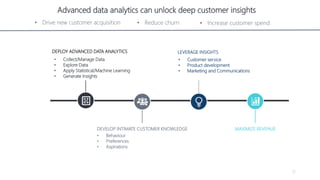

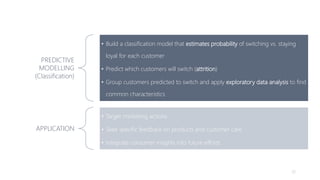

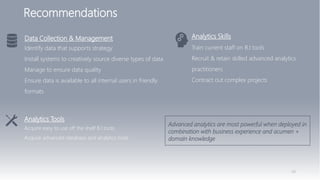

1) The document proposes using advanced data analytics to understand customer behavior, preferences, and aspirations, with the goal of maximizing revenue.

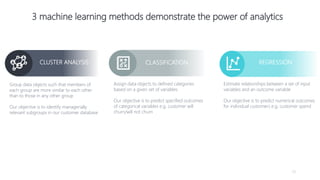

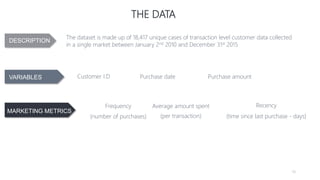

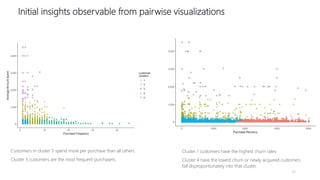

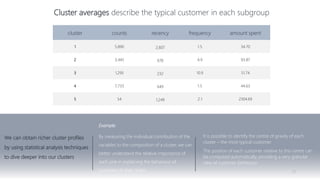

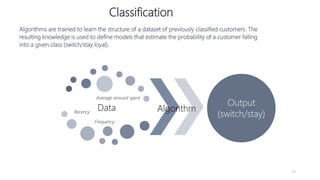

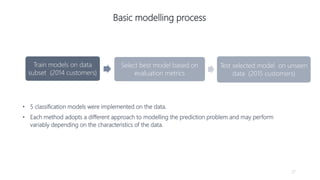

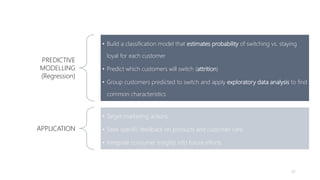

2) A case study uses data from an online beauty and personal care subsidiary to demonstrate the insights that clustering, classification, and regression analyses can provide.

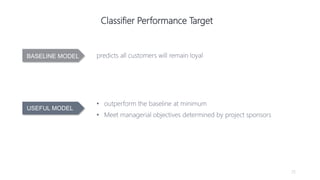

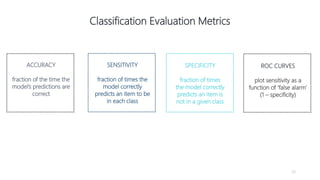

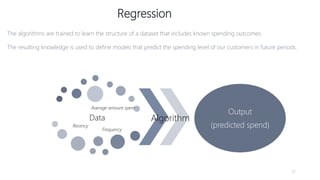

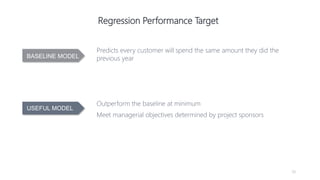

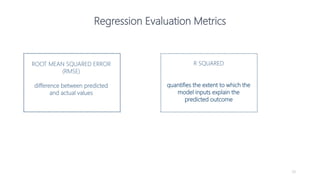

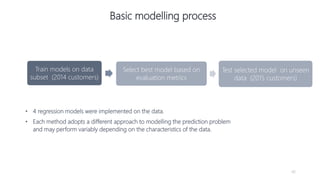

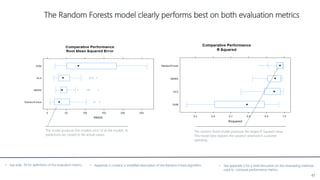

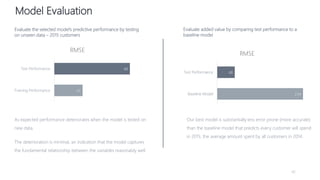

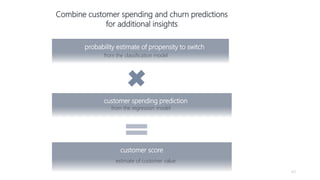

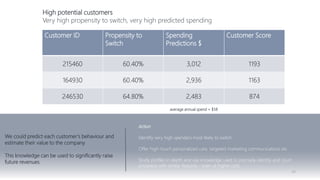

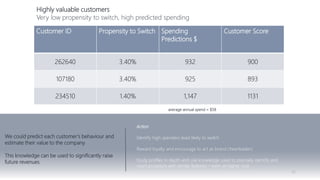

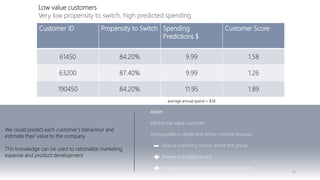

3) The analyses identify customer subgroups, predict which customers will churn, and forecast spending amounts. This knowledge can then be used to target marketing and improve customer retention and spending.
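
To make the first of these analyses concrete, here is a minimal sketch of how clustering might separate customers into subgroups. It is not the case study's actual method or data: the `kmeans` helper, the two features (orders per year, average order value), and the six customer records are all hypothetical, and the features are left unscaled for brevity.

```python
from math import dist
from statistics import mean


def kmeans(points, k, iterations=20):
    """Plain Lloyd's algorithm (illustrative helper, not from the source).

    Centroids start at the first k points so the result is deterministic.
    """
    centroids = [tuple(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(k), key=lambda c: dist(p, centroids[c])) for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centroids.append(tuple(mean(dim) for dim in zip(*members)))
            else:
                # An empty cluster keeps its previous centroid.
                new_centroids.append(centroids[c])
        if new_centroids == centroids:
            break  # converged
        centroids = new_centroids
    return labels, centroids


# Hypothetical customers: (orders per year, average order value).
customers = [
    (2, 15.0), (12, 60.0), (3, 18.0), (11, 55.0), (1, 12.0), (13, 65.0),
]

labels, centroids = kmeans(customers, k=2)
for customer, label in zip(customers, labels):
    print(customer, "-> segment", label)
```

On this toy data the two segments correspond to occasional low-spend buyers and frequent high-spend buyers. With real data the features would normally be standardized first, and the number of clusters would be chosen by inspection or a validation metric rather than fixed in advance.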